A 10,000-Word Walkthrough: MindArmour Tutorial for Beginners

2022-06-26 23:56:00 【MindSpore (Shengsi)】

Using MindArmour

Environment: CPU

First install MindSpore, following the official website

(https://www.mindspore.cn/install)

Installing MindArmour

Confirm the system environment

• The hardware platform is Ascend, GPU, or CPU.

• Follow the MindSpore installation guide

(https://www.mindspore.cn/install)

to install MindSpore. The MindArmour and MindSpore versions must match.

• For other dependencies, see setup.py

(https://gitee.com/mindspore/mindarmour/blob/master/setup.py).

● Installation methods ●

You can install with pip or build and install from source.

● Installing with pip ●

pip install https://ms-release.obs.cn-north-4.myhuaweicloud.com/{version}/MindArmour/any/mindarmour-{version}-py3-none-any.whl --trusted-host ms-release.obs.cn-north-4.myhuaweicloud.com -i https://pypi.tuna.tsinghua.edu.cn/simple

• With a network connection, installing the whl package automatically downloads MindArmour's dependencies (listed in setup.py). Otherwise, you need to install the dependencies yourself.

• {version} is the MindArmour version number. For example, to download MindArmour 1.3.0, write {version} as 1.3.0.
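As a small illustration of how the {version} placeholder is filled in, the sketch below builds the wheel URL from a version string. The helper name mindarmour_wheel_url is made up for this example; only the URL pattern comes from the pip command above.

```python
# Hypothetical helper: fills the {version} placeholder of the wheel URL
# used in the pip command above. Only the URL pattern comes from the tutorial.
WHEEL_URL_TEMPLATE = (
    "https://ms-release.obs.cn-north-4.myhuaweicloud.com/{version}"
    "/MindArmour/any/mindarmour-{version}-py3-none-any.whl"
)


def mindarmour_wheel_url(version: str) -> str:
    """Return the wheel URL for the given MindArmour version string."""
    return WHEEL_URL_TEMPLATE.format(version=version)


print(mindarmour_wheel_url("1.3.0"))
```

The printed URL is what you would pass to pip install, together with the --trusted-host and -i options shown above.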

● Installing from source ●

1. Download the source code from Gitee.

git clone https://gitee.com/mindspore/mindarmour.git

2. In the source root directory, run the following commands to build and install MindArmour.

cd mindarmour

python setup.py install

● Verifying the installation ●

Run the following command. If the error No module named 'mindarmour' does not appear, the installation succeeded.

python -c 'import mindarmour'
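The same check can be scripted without triggering an ImportError. The sketch below relies on the standard library's importlib.util.find_spec; the helper name is_installed is an assumption for illustration and is not part of MindArmour.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module can be located, without importing it."""
    return importlib.util.find_spec(module_name) is not None


# Report on the two packages this tutorial depends on.
for name in ("mindspore", "mindarmour"):
    print(name, "found" if is_installed(name) else "missing")
```

This only confirms that the package can be found; it does not check that the MindArmour and MindSpore versions match.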

Here is how it went in practice:

As the screenshot shows, MindArmour was not installed at first, so Python reported that there was no mindarmour library.

Install it directly with the pip command.

After pressing Enter, no error is reported, so the installation is correct.

Entering the Python environment and importing the library also works, so the installation is correct.

Let's run a test for fun.

Using the NAD algorithm to improve model security

(Click "Read the original" below to download.)

Getting started

I hit an error right at the start. Don't worry, let's read the message.

At first glance, it looked as if this could not run on CPU yet.

The message said "got device target GPU". But on closer reading, the sentence before it said "support type cpu".

Going by the error message, we only need to change the target in the code.

MindSpore's compatibility is quite good;

it just takes a little debugging.

Sure enough, setting target="CPU" fixed it.

That's really nice.

After three epochs of training, the accuracy reached 97%.

Running on GPU

I hadn't had enough, so let's run it again on the GPU.

I almost forgot the name of the environment I had created; it was called mindspore1.5-gpu.

Some problems I ran into

Running MindArmour on GPU

While it was running, something went wrong for no obvious reason. Was there a problem with the python command?

It turned out that my C: drive was full and I had uninstalled CUDA. It looks like I will have to add more storage during the winter break... I'll write up the GPU run for you then.

● Full demo ●

Adding Jupyter to PyCharm

1. Install Jupyter

pip install jupyter

2. Install PyCharm Pro, then start it

Building the model to attack

MNIST is the demo dataset, and a simple user-defined model serves as the model under attack.

Importing the required packages

import os

import numpy as np

from scipy.special import softmax

from mindspore import dataset as ds

from mindspore import dtype as mstype

import mindspore.dataset.vision.c_transforms as CV

import mindspore.dataset.transforms.c_transforms as C

from mindspore.dataset.vision import Inter

import mindspore.nn as nn

from mindspore.nn import SoftmaxCrossEntropyWithLogits

from mindspore.common.initializer import TruncatedNormal

from mindspore import Model, Tensor, context

from mindspore.train.callback import LossMonitor

from mindarmour.adv_robustness.attacks import FastGradientSignMethod

from mindarmour.utils import LogUtil

from mindarmour.adv_robustness.evaluations import AttackEvaluate

context.set_context(mode=context.GRAPH_MODE, device_target="Ascend")

LOGGER = LogUtil.get_instance()

LOGGER.set_level("INFO")

TAG = 'demo'

When the files are downloaded, a warning about an untrusted HTTP connection appears. It is harmless and can be ignored.

Note: to run on CPU, set device_target="CPU" in the context.set_context call above.
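To avoid editing the script every time you switch machines, the target can be picked at run time. The sketch below is one possible approach: the DEVICE_TARGET environment variable and both helper names are assumptions made for this example, while context.set_context is the same call used in the import block above.

```python
import os

# The three hardware platforms named in the installation section.
SUPPORTED_TARGETS = ("Ascend", "GPU", "CPU")


def resolve_device_target(default: str = "CPU") -> str:
    """Read the (hypothetical) DEVICE_TARGET variable, falling back to default."""
    requested = os.environ.get("DEVICE_TARGET", default)
    for target in SUPPORTED_TARGETS:
        if requested.lower() == target.lower():
            return target  # normalised spelling, e.g. "cpu" -> "CPU"
    raise ValueError(f"unsupported device target: {requested!r}")


def configure_mindspore(target: str) -> None:
    """Apply the target with the call the tutorial uses (needs MindSpore)."""
    from mindspore import context  # imported lazily so the helper above works alone
    context.set_context(mode=context.GRAPH_MODE, device_target=target)


# Typical use, once MindSpore is installed:
#     configure_mindspore(resolve_device_target())
```

With this in place, running the script with DEVICE_TARGET=GPU would select the GPU, and leaving the variable unset keeps the CPU default.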

Loading the dataset

Load the MNIST dataset with the MnistDataset interface provided by MindSpore's dataset module.

# generate dataset for training or testing
def generate_mnist_dataset(data_path, batch_size=32, repeat_size=1,
                           num_parallel_workers=1, sparse=True):
    """
    create dataset for training or testing
    """
    # define dataset
    ds1 = ds.MnistDataset(data_path)

    # define operation parameters
    resize_height, resize_width = 32, 32
    rescale = 1.0 / 255.0
    shift = 0.0

    # define map operations
    resize_op = CV.Resize((resize_height, resize_width),
                          interpolation=Inter.LINEAR)
    rescale_op = CV.Rescale(rescale, shift)
    hwc2chw_op = CV.HWC2CHW()
    type_cast_op = C.TypeCast(mstype.int32)

    # apply map operations on images
    if not sparse:
        one_hot_enco = C.OneHot(10)
        ds1 = ds1.map(operations=one_hot_enco, input_columns="label",
                      num_parallel_workers=num_parallel_workers)
        type_cast_op = C.TypeCast(mstype.float32)
    ds1 = ds1.map(operations=type_cast_op, input_columns="label",
                  num_parallel_workers=num_parallel_workers)
    ds1 = ds1.map(operations=resize_op, input_columns="image",
                  num_parallel_workers=num_parallel_workers)
    ds1 = ds1.map(operations=rescale_op, input_columns="image",
                  num_parallel_workers=num_parallel_workers)
    ds1 = ds1.map(operations=hwc2chw_op, input_columns="image",
                  num_parallel_workers=num_parallel_workers)

    # apply DatasetOps
    buffer_size = 10000
    ds1 = ds1.shuffle(buffer_size=buffer_size)
    ds1 = ds1.batch(batch_size, drop_remainder=True)
    ds1 = ds1.repeat(repeat_size)

    return ds1
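To make the per-sample transforms above more concrete, here is a NumPy sketch of what rescaling, HWC-to-CHW conversion, and one-hot encoding do to a single image and label. It is an illustration only, not MindSpore code: the function names are made up, and the resize step is left out by starting from a 32x32 image.

```python
import numpy as np


def rescale(image: np.ndarray, scale: float = 1.0 / 255.0, shift: float = 0.0) -> np.ndarray:
    """Map pixel values with image * scale + shift, as a Rescale op does."""
    return image.astype(np.float32) * scale + shift


def hwc_to_chw(image: np.ndarray) -> np.ndarray:
    """Move the channel axis first: (height, width, channel) -> (channel, height, width)."""
    return np.transpose(image, (2, 0, 1))


def one_hot(label: int, num_classes: int = 10) -> np.ndarray:
    """Encode an integer label as a float32 one-hot vector (the sparse=False case)."""
    encoded = np.zeros(num_classes, dtype=np.float32)
    encoded[label] = 1.0
    return encoded


# A dummy all-white 32x32 grayscale image in HWC layout.
image = np.full((32, 32, 1), 255, dtype=np.uint8)
processed = hwc_to_chw(rescale(image))

print(processed.shape, processed.dtype)
print(one_hot(3))
```

After these steps each image is a float32 array with values in [0, 1] and the channel axis first, which is the layout the LeNet5 network in this tutorial expects.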

● Building the model ●

LeNet is used as the example here; you can also build and train your own model.
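If you do build your own model, the input size of the first fully connected layer has to match the flattened output of the convolution stack. The sketch below works through that arithmetic for the LeNet5 network defined in this section (32x32 input, two 5x5 "valid" convolutions, two 2x2 max-pooling layers with stride 2); the helper names are made up for illustration.

```python
def conv_valid(size: int, kernel: int, stride: int = 1) -> int:
    """Output width of a convolution with pad_mode="valid" (no padding)."""
    return (size - kernel) // stride + 1


def max_pool(size: int, kernel: int = 2, stride: int = 2) -> int:
    """Output width of a max-pooling layer without padding."""
    return (size - kernel) // stride + 1


size = 32                     # input images are resized to 32x32
size = conv_valid(size, 5)    # conv1: 32 -> 28
size = max_pool(size)         # pool:  28 -> 14
size = conv_valid(size, 5)    # conv2: 14 -> 10
size = max_pool(size)         # pool:  10 -> 5

out_channels = 16             # conv2 produces 16 feature maps
flattened = out_channels * size * size
print(size, flattened)        # 5 400
```

This is where the 16*5*5 argument of fc1 in the network definition comes from.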

1. Define the LeNet network

def conv(in_channels, out_channels, kernel_size, stride=1, padding=0):
    weight = weight_variable()
    return nn.Conv2d(in_channels, out_channels,
                     kernel_size=kernel_size, stride=stride, padding=padding,
                     weight_init=weight, has_bias=False, pad_mode="valid")


def fc_with_initialize(input_channels, out_channels):
    weight = weight_variable()
    bias = weight_variable()
    return nn.Dense(input_channels, out_channels, weight, bias)


def weight_variable():
    return TruncatedNormal(0.02)


class LeNet5(nn.Cell):
    """
    Lenet network
    """
    def __init__(self):
        super(LeNet5, self).__init__()
        self.conv1 = conv(1, 6, 5)
        self.conv2 = conv(6, 16, 5)
        self.fc1 = fc_with_initialize(16*5*5, 120)
        self.fc2 = fc_with_initialize(120, 84)
        self.fc3 = fc_with_initialize(84, 10)
        self.relu = nn.ReLU()
        self.max_pool2d = nn.MaxPool2d(kernel_size=2, stride=2)
        self.flatten = nn.Flatten()

    def construct(self, x):
        x = self.conv1(x)
        x = self.relu(x)
        x = self.max_pool2d(x)
        x = self.conv2(x)
        x = self.relu(x)
        x = self.max_pool2d(x)
        x = self.flatten(x)
        x = self.fc1(x)
        x = self.relu(x)
        x = self.fc2(x)
        x = self.relu(x)
        x = self.fc3(x)
        return x

2. Train the LeNet model, loading the data with the generate_mnist_dataset function defined above

mnist_path = "../common/dataset/MNIST/"
batch_size = 32

# train original model
ds_train = generate_mnist_dataset(os.path.join(mnist_path, "train"),
                                  batch_size=batch_size, repeat_size=1,
                                  sparse=False)
net = LeNet5()
loss = SoftmaxCrossEntropyWithLogits(sparse=False)
opt = nn.Momentum(net.trainable_params(), 0.01, 0.09)
model = Model(net, loss, opt, metrics=None)
model.train(10, ds_train, callbacks=[LossMonitor()],
            dataset_sink_mode=False)

The results of training the model are shown below.

# 2. get test data
ds_test = generate_mnist_dataset(os.path.join(mnist_path, "test"),
                                 batch_size=batch_size, repeat_size=1,
                                 sparse=False)
inputs = []
labels = []
for data in ds_test.create_tuple_iterator():
    inputs.append(data[0].asnumpy().astype(np.float32))
    labels.append(data[1].asnumpy())
test_inputs = np.concatenate(inputs)
test_labels = np.concatenate(labels)

3. Test the model

# prediction accuracy before attack

net.set_train(False)

test_logits = net(Tensor(test_inputs)).asnumpy()

tmp = np.argmax(test_logits, axis=1) == np.argmax(test_labels, axis=1)

accuracy = np.mean(tmp)

LOGGER.info(TAG, 'prediction accuracy before attacking is : %s', accuracy)

On the test data, the classification accuracy reaches 97%.

Adversarial attack

Call the FGSM interface (FastGradientSignMethod) provided by MindArmour.

# attacking
# get adv data
attack = FastGradientSignMethod(net, eps=0.3, loss_fn=loss)
adv_data = attack.batch_generate(test_inputs, test_labels)

# get accuracy of adv data on original model
adv_logits = net(Tensor(adv_data)).asnumpy()
adv_proba = softmax(adv_logits, axis=1)
tmp = np.argmax(adv_proba, axis=1) == np.argmax(test_labels, axis=1)
accuracy_adv = np.mean(tmp)
LOGGER.info(TAG, 'prediction accuracy after attacking is : %s', accuracy_adv)

attack_evaluate = AttackEvaluate(test_inputs.transpose(0, 2, 3, 1),
                                 test_labels,
                                 adv_data.transpose(0, 2, 3, 1),
                                 adv_proba)
LOGGER.info(TAG, 'mis-classification rate of adversaries is : %s',
            attack_evaluate.mis_classification_rate())
LOGGER.info(TAG, 'The average confidence of adversarial class is : %s',
            attack_evaluate.avg_conf_adv_class())
LOGGER.info(TAG, 'The average confidence of true class is : %s',
            attack_evaluate.avg_conf_true_class())
LOGGER.info(TAG, 'The average distance (l0, l2, linf) between original '
            'samples and adversarial samples are: %s',
            attack_evaluate.avg_lp_distance())
LOGGER.info(TAG, 'The average structural similarity between original '
            'samples and adversarial samples are: %s',
            attack_evaluate.avg_ssim())

The attack results are as follows:

prediction accuracy after attacking is : 0.052083

mis-classification rate of adversaries is : 0.947917

The average confidence of adversarial class is : 0.803375

The average confidence of true class is : 0.042139

The average distance (l0, l2, linf) between original samples and adversarial samples are: (1.698870, 0.465888, 0.300000)

The average structural similarity between original samples and adversarial samples are: 0.332538

The results are as follows.

After the untargeted FGSM attack, the model's accuracy drops to 11% and the misclassification rate reaches 89%. For the adversarial samples that attack successfully, the average confidence of the predicted (adversarial) class (ACAC) is 0.721933 and the average confidence of the true class (ACTC) is 0.05756182. The output also gives the L0, L2, and L-infinity distances between the generated adversarial samples and the original samples, and the average structural similarity between each adversarial sample and its original is 0.5708779.
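To make the three headline metrics concrete, the NumPy sketch below computes the misclassification rate, ACAC, and ACTC from softmax outputs on adversarial inputs, following the definitions in the paragraph above. It is not MindArmour's AttackEvaluate implementation: the function name and the toy data are made up, and integer labels are assumed (with one-hot labels as in this tutorial, apply np.argmax(labels, axis=1) first).

```python
import numpy as np


def attack_metrics(adv_proba: np.ndarray, true_labels: np.ndarray):
    """Return (mis-classification rate, ACAC, ACTC) for an untargeted attack.

    adv_proba:   (N, classes) softmax outputs on the adversarial samples
    true_labels: (N,) integer class labels
    """
    predicted = np.argmax(adv_proba, axis=1)
    success = predicted != true_labels           # attack succeeded on these rows
    rows = np.arange(len(true_labels))
    mis_rate = float(np.mean(success))
    if not success.any():
        return mis_rate, 0.0, 0.0
    # Confidence assigned to the (wrong) predicted class, successful attacks only.
    acac = float(np.mean(adv_proba[rows, predicted][success]))
    # Confidence still assigned to the true class, successful attacks only.
    actc = float(np.mean(adv_proba[rows, true_labels][success]))
    return mis_rate, acac, actc


# Toy data: four samples, three classes.
adv_proba = np.array([
    [0.1, 0.8, 0.1],   # true class 0, predicted 1: attack succeeded
    [0.7, 0.2, 0.1],   # true class 0, predicted 0: attack failed
    [0.2, 0.2, 0.6],   # true class 1, predicted 2: attack succeeded
    [0.3, 0.6, 0.1],   # true class 2, predicted 1: attack succeeded
])
true_labels = np.array([0, 0, 1, 2])

print(attack_metrics(adv_proba, true_labels))
```

A strong attack pushes the misclassification rate and ACAC up while ACTC falls; an effective defense moves all three in the opposite direction.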

Adversarial defense

NaturalAdversarialDefense (NAD) is a simple and effective defense against adversarial samples. It uses adversarial training: adversarial samples are generated during model training, mixed with the original samples, and the model is trained on both. As training proceeds, the model becomes more robust to adversarial samples. The NAD algorithm uses FGSM as the attack algorithm for generating the adversarial samples.
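The idea can be sketched in plain NumPy on a toy linear softmax classifier. This is only an illustration under stated assumptions, not MindArmour's NAD implementation: the linear model stands in for LeNet5, the gradients are derived by hand, and the batch is mixed by simple concatenation, whereas the library's actual mixing ratio is not shown in this tutorial.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    z = z - z.max(axis=1, keepdims=True)      # subtract the row max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def fgsm(x, y_onehot, w, b, eps=0.3, bounds=(0.0, 1.0)):
    """Untargeted FGSM: step by eps along the sign of the loss gradient w.r.t. x."""
    p = softmax(x @ w + b)
    grad_x = (p - y_onehot) @ w.T             # d(cross-entropy)/dx for a linear model
    return np.clip(x + eps * np.sign(grad_x), bounds[0], bounds[1])


def adversarial_training_step(x, y_onehot, w, b, lr=0.1, eps=0.3):
    """One gradient step on a batch that mixes clean and FGSM samples."""
    x_adv = fgsm(x, y_onehot, w, b, eps)
    x_mix = np.concatenate([x, x_adv])        # assumption: 1:1 mix by concatenation
    y_mix = np.concatenate([y_onehot, y_onehot])
    p = softmax(x_mix @ w + b)
    grad_logits = (p - y_mix) / len(x_mix)
    w = w - lr * x_mix.T @ grad_logits
    b = b - lr * grad_logits.sum(axis=0)
    return w, b


rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=(8, 4))        # 8 toy samples with 4 features in [0, 1]
y = np.eye(3)[rng.integers(0, 3, size=8)]     # one-hot labels for 3 classes
w = rng.normal(0.0, 0.1, size=(4, 3))
b = np.zeros(3)

x_adv = fgsm(x, y, w, b)
w, b = adversarial_training_step(x, y, w, b)
print(np.abs(x_adv - x).max())                # never exceeds eps
```

Because every pixel moves by at most eps, the L-infinity distance between an adversarial sample and its original is bounded by eps, which is consistent with the 0.300000 reported in the attack log for eps=0.3.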

Implementing the defense

Call the NAD defense interface (NaturalAdversarialDefense) provided by MindArmour.

from mindarmour.adv_robustness.defenses import NaturalAdversarialDefense

# defense
net.set_train()
nad = NaturalAdversarialDefense(net, loss_fn=loss, optimizer=opt,
                                bounds=(0.0, 1.0), eps=0.3)
nad.batch_defense(test_inputs, test_labels, batch_size=32, epochs=10)

# get accuracy of test data on defensed model
net.set_train(False)
test_logits = net(Tensor(test_inputs)).asnumpy()
tmp = np.argmax(test_logits, axis=1) == np.argmax(test_labels, axis=1)
accuracy = np.mean(tmp)
LOGGER.info(TAG, 'accuracy of TEST data on defensed model is : %s', accuracy)

# get accuracy of adv data on defensed model
adv_logits = net(Tensor(adv_data)).asnumpy()
adv_proba = softmax(adv_logits, axis=1)
tmp = np.argmax(adv_proba, axis=1) == np.argmax(test_labels, axis=1)
accuracy_adv = np.mean(tmp)

attack_evaluate = AttackEvaluate(test_inputs.transpose(0, 2, 3, 1),
                                 test_labels,
                                 adv_data.transpose(0, 2, 3, 1),
                                 adv_proba)
LOGGER.info(TAG, 'accuracy of adv data on defensed model is : %s',
            np.mean(accuracy_adv))
LOGGER.info(TAG, 'defense mis-classification rate of adversaries is : %s',
            attack_evaluate.mis_classification_rate())
LOGGER.info(TAG, 'The average confidence of adversarial class is : %s',
            attack_evaluate.avg_conf_adv_class())
LOGGER.info(TAG, 'The average confidence of true class is : %s',
            attack_evaluate.avg_conf_true_class())

Running this on the CPU, I could hear the fan spin up!

A few seconds later the fan quieted down, and the results were ready to view.

Defense results

accuracy of TEST data on defensed model is : 0.981270

accuracy of adv data on defensed model is : 0.813602

defense mis-classification rate of adversaries is : 0.186398

The average confidence of adversarial class is : 0.653031

The average confidence of true class is : 0.184980

After applying NAD to defend against adversarial samples, the model's misclassification rate on them drops to 18%, so the model defends against adversarial samples effectively. At the same time, its classification accuracy on the original test dataset is 98%.

For comparison, here are the figures from the official website:

accuracy of TEST data on defensed model is : 0.974259

accuracy of adv data on defensed model is : 0.856370

defense mis-classification rate of adversaries is : 0.143629

The average confidence of adversarial class is : 0.616670

The average confidence of true class is : 0.177374

After applying NAD to defend against adversarial samples, the model's misclassification rate on them drops from 95% to 14%, so the model defends against adversarial samples effectively. At the same time, its classification accuracy on the original test dataset is 97%.

Open-source code

Dear friends, I have open-sourced the MindArmour practice code from this article on Gitee. It has already been debugged on CPU, and you are welcome to download and use it. Debugging it yourself will give you a deeper understanding.

Link: https://gitee.com/qmckw/mindspore-armour

Official MindSpore resources

GitHub: https://github.com/mindspore-ai/mindspore

Gitee: https://gitee.com/mindspore/mindspore

Official QQ group: 486831414

边栏推荐

- 不会写免杀也能轻松过defender上线CS

- Introduction to software engineering -- Chapter 4 -- formal description technology

- Why does EDR need defense in depth to combat ransomware?

- MindSpore新型轻量级神经网络GhostNet,在ImageNet分类、图像识别和目标检测等多个应用场景效果优异!

- [interface] pyqt5 and swing transformer for face recognition

- Which securities dealers recommend? Is it safe to open an account online now?

- 手机能开户炒股吗 网上开户炒股安全吗

- Is it reliable to speculate in stocks by mobile phone? Is it safe to open an account and speculate in stocks online

- Leetcode 718. 最长重复子数组(暴力枚举,待解决)

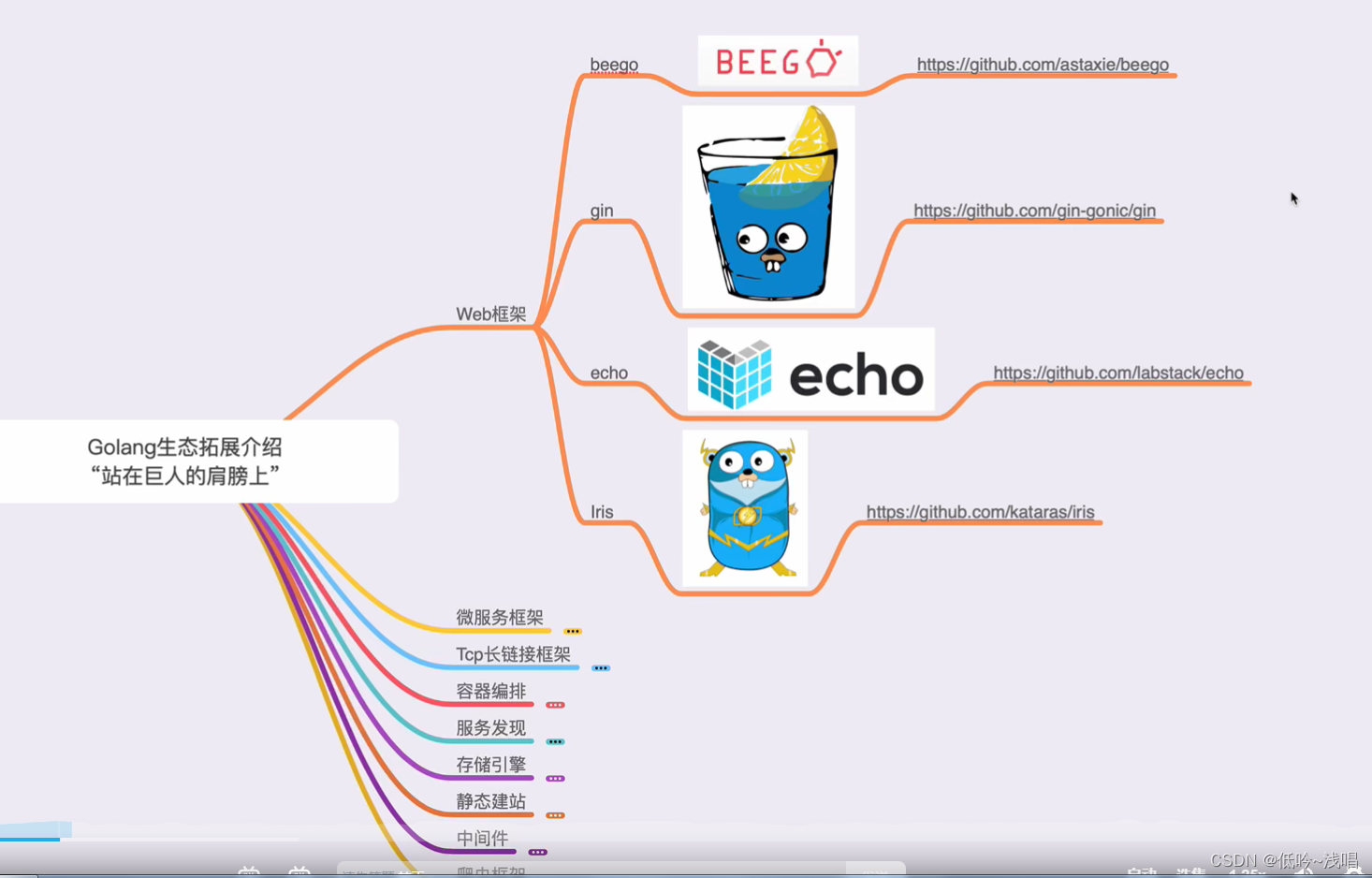

- go语言的服务发现、存储引擎、静态网站

猜你喜欢

From bitmap to bloom filter, C # implementation

代码之外:写作是倒逼成长的最佳方式

微信小程序自动生成打卡海报

12 color ring three primary colors

The processing of private chat function in go language

敲重点!最全大模型训练合集!

My advanced learning notes of C language ----- keywords

ASP.Net Core创建MVC项目上传文件(缓冲方式)

固有色和环境色

Development and learning route of golang language

随机推荐

Where is it safer to open an account to buy funds

在手机开户买股票安全吗 网上开户炒股安全吗

股票开户有哪些优惠活动?手机开户安全么?

Safe and cost-effective payment in Thailand

Unity初学者肯定能用得上的50个小技巧

Would you like to buy stocks? Where do you open an account in a securities company? The Commission is lower and safer

互联网行业,常见含金量高的证书,看看你有几个?

技术干货|什么是大模型?超大模型?Foundation Model?

Can I open an account for stock trading on my mobile phone? Is it safe to open an account for stock trading on the Internet

Amway! How to provide high-quality issue? That's what Xueba wrote!

技术干货|极速、极智、极简的昇思MindSpore Lite:助力华为Watch更加智能

通过两个stack来实现Queue

Can I open an account for stock trading on my mobile phone? Is it safe to open an account for stock trading on the Internet

【Try to Hack】正向shell和反向shell

国产框架MindSpore联合山水自然保护中心,寻找、保护「中华水塔」中的宝藏生命

go中的微服务和容器编排

Understanding of "the eigenvectors corresponding to different eigenvalues cannot be orthogonalized"

Pinpoint attackers with burp

Openpyxl module

typora设置标题自动编号