Ceph principles

2022-07-02 17:24:00 【30 hurdles in life】

Ceph is a unified distributed storage system that provides good performance, reliability, and scalability.

Overview:

High performance

Abandons the traditional centralized addressing scheme for stored data in favor of the CRUSH algorithm, so data is distributed evenly and accessed with a high degree of parallelism.

Takes the isolation of disaster-tolerance domains into account: replica placement rules can be defined for different workloads, for example across machine rooms or with rack awareness.

Supports thousands of storage nodes and data volumes from terabytes to petabytes.

High availability

The number of replicas can be controlled flexibly.

Supports failure-domain separation and strong data consistency.

Repairs itself automatically in a variety of failure scenarios.

No single point of failure; management is automatic.

High scalability

Decentralized.

Flexible to expand.

Grows linearly as the number of nodes increases.

Rich features

Supports three storage interfaces: block storage, file storage, and object storage.

Supports custom interfaces and drivers for multiple languages.

Architecture

Three interfaces are supported:

Object: a native API, plus APIs compatible with Swift and S3.

Block: supports thin provisioning, snapshots, and clones.

File: a POSIX interface with snapshot support.
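
Thin provisioning and snapshots on the block interface are easier to picture with a toy model. The class below is an in-memory sketch, not how RBD is implemented: blocks are allocated only on first write, unwritten blocks read back as zeros, and a snapshot is simply a frozen copy of the block map. ThinImage and its methods are hypothetical names chosen for the example.

```python
from typing import Dict, Optional


class ThinImage:
    """Toy thin-provisioned block image with named snapshots."""

    def __init__(self, size_blocks: int, block_size: int = 4096) -> None:
        self.size_blocks = size_blocks
        self.block_size = block_size
        self._blocks: Dict[int, bytes] = {}                # allocated blocks only
        self._snapshots: Dict[str, Dict[int, bytes]] = {}  # name -> frozen block map

    def _check(self, index: int) -> None:
        if not 0 <= index < self.size_blocks:
            raise IndexError("block index out of range")

    def write(self, index: int, data: bytes) -> None:
        """Allocate the block on first write and pad it to the block size."""
        self._check(index)
        if len(data) > self.block_size:
            raise ValueError("data larger than one block")
        self._blocks[index] = data.ljust(self.block_size, b"\0")

    def read(self, index: int, snapshot: Optional[str] = None) -> bytes:
        """Read from the live image or from a snapshot; holes read as zeros."""
        self._check(index)
        blocks = self._blocks if snapshot is None else self._snapshots[snapshot]
        return blocks.get(index, bytes(self.block_size))

    def snapshot(self, name: str) -> None:
        """Freeze the current block map; bytes are immutable, so a shallow copy is enough."""
        self._snapshots[name] = dict(self._blocks)

    def allocated_bytes(self) -> int:
        """Space actually consumed by the live image."""
        return len(self._blocks) * self.block_size


image = ThinImage(size_blocks=1024)
image.write(0, b"v1")
image.snapshot("before-upgrade")
image.write(0, b"v2")
print(image.allocated_bytes(), "bytes allocated of", image.size_blocks * image.block_size)
```

The image advertises 1024 blocks but consumes space for only the one block that was written, and the snapshot still returns the old contents after the live image is overwritten.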

Components

Monitor: a Ceph cluster needs several monitors, which form a small cluster of their own. They synchronize data through Paxos and store the OSD metadata.
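
Paxos makes progress only while a majority of the monitors can reach each other, which is why monitor counts are usually odd. A minimal sketch of that arithmetic follows; the function names are illustrative and not part of Ceph.

```python
def quorum_size(monitors: int) -> int:
    """Smallest majority of a monitor cluster."""
    if monitors < 1:
        raise ValueError("a cluster needs at least one monitor")
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """How many monitors can fail while a majority still remains."""
    return monitors - quorum_size(monitors)


for n in (1, 3, 4, 5):
    print(f"{n} monitors: quorum {quorum_size(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

Going from 3 to 4 monitors does not raise the number of tolerated failures, so the extra monitor buys nothing in availability.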

OSD: short for object storage device, the process that responds to client requests and returns the actual data. A Ceph cluster generally has many OSDs. Its main function is storing user data. When a raw hard disk is used directly as the storage target, that disk is called an OSD; when a directory is used as the storage target, that directory is also called an OSD.

MDS: short for Ceph metadata server, the metadata service that CephFS depends on. Object storage and block device storage do not need this service.

Object: the lowest-level storage unit in Ceph is the object. A piece of data or a piece of configuration is an object. Every object contains an ID, metadata, and the raw data.

Pool: a logical partition for storing objects. It usually specifies the type of data redundancy and the corresponding number of replicas; the default is 3 replicas. Different storage types need separate pools, for example RBD.
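
With replication, every object is stored once per replica, so usable capacity is roughly raw capacity divided by the replica count. The sketch below shows only that arithmetic; it ignores metadata overhead and the free-space headroom a real cluster needs, and the function name is made up for illustration.

```python
def usable_capacity_tb(raw_tb: float, replicas: int = 3) -> float:
    """Approximate usable capacity of a replicated pool, in TB."""
    if replicas < 1:
        raise ValueError("replica count must be at least 1")
    return raw_tb / replicas


print(usable_capacity_tb(300))      # default of 3 replicas
print(usable_capacity_tb(300, 2))   # 2 replicas
```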

PG: short for placement group, a logical concept. One OSD holds multiple PGs. The PG layer is introduced to distribute and locate data better. Every pool contains many PGs. A PG is a collection of objects, and it is the smallest unit of data balancing and recovery on the server side.

A pool is the logical partition in which Ceph stores data; it acts as a namespace.

Every pool contains a certain number of PGs.

Each PG, together with the objects in it, is mapped onto a set of OSDs.

A pool is distributed across the whole cluster.
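
The object-to-PG step can be pictured as hashing the object name and reducing the hash modulo the pool's PG count. The sketch below is a simplification under stated assumptions: real Ceph uses a different hash function and a more careful modulo than zlib.crc32 and plain %, and pg_for_object is a hypothetical helper.

```python
import zlib


def pg_for_object(pool_id: int, object_name: str, pg_num: int) -> str:
    """Return a PG id of the form '<pool>.<pg index in hex>' for an object name."""
    if pg_num < 1:
        raise ValueError("pg_num must be positive")
    pg_index = zlib.crc32(object_name.encode("utf-8")) % pg_num
    return f"{pool_id}.{pg_index:x}"


# Spread 1000 made-up object names over a pool with 8 PGs and count the hits.
counts = {}
for i in range(1000):
    pg = pg_for_object(1, f"rbd_data.{i}", pg_num=8)
    counts[pg] = counts.get(pg, 0) + 1
print(sorted(counts.items()))
```

Because the mapping is a pure function of the name and the PG count, any client can compute it without asking a central server.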

FileStore and BlueStore: FileStore is the back-end storage engine used by default in older versions; if you use FileStore, the XFS file system is recommended. BlueStore is the newer back-end storage engine. It manages raw hard disks directly and does away with local file systems such as ext4 and XFS. Because it operates on the physical disk directly, it is also much more efficient.

RADOS: short for Reliable Autonomic Distributed Object Store. It is the core of a Ceph cluster and implements data distribution, failover, and other cluster operations.

Librados: the library provided for RADOS. Because the RADOS protocol is hard to access directly, the upper layers RBD, RGW, and CephFS all access it through librados. PHP, Ruby, Java, Python, C, and C++ are currently supported.
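
As a rough illustration of the Python binding, the function below writes one object to a pool and reads it back. It is a sketch that assumes a reachable cluster, an existing pool, a readable config file at the path shown, and the rados module (typically packaged as python3-rados) being installed; write_and_read and its parameters are names made up for this example.

```python
def write_and_read(pool: str, key: str, data: bytes,
                   conffile: str = "/etc/ceph/ceph.conf") -> bytes:
    """Store `data` under `key` in `pool`, then read the same bytes back."""
    # Imported here so the module still loads on machines without the binding.
    import rados

    cluster = rados.Rados(conffile=conffile)
    cluster.connect()
    try:
        ioctx = cluster.open_ioctx(pool)
        try:
            ioctx.write_full(key, data)          # replace the whole object
            return ioctx.read(key, len(data))    # read back as many bytes as were written
        finally:
            ioctx.close()
    finally:
        cluster.shutdown()
```

On a host with cluster access, calling write_and_read("mypool", "greeting", b"hello") would be expected to return the bytes that were written; the pool name here is a placeholder.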

CRUSH: the data distribution algorithm Ceph uses. Similar to consistent hashing, it sends data to where it is supposed to go.
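
To illustrate the idea of computing placement instead of looking it up, here is a small sketch that picks replica OSDs for a PG with highest-random-weight (rendezvous) hashing and never puts two replicas in the same rack. This is not the CRUSH algorithm itself, only a stand-in that shares two of its properties: placement is a deterministic function of the input, and failure domains are respected. The cluster layout and all names are invented for the example.

```python
import hashlib
from typing import List

# Hypothetical cluster map: rack name -> OSD ids in that rack.
CLUSTER = {
    "rack-a": [0, 1, 2],
    "rack-b": [3, 4, 5],
    "rack-c": [6, 7, 8],
}


def _score(pg_id: str, item: str) -> int:
    """Deterministic pseudo-random score for a (PG, item) pair."""
    digest = hashlib.sha256(f"{pg_id}/{item}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def place_pg(pg_id: str, replicas: int = 3) -> List[int]:
    """Choose one OSD from each of `replicas` distinct racks."""
    if replicas > len(CLUSTER):
        raise ValueError("not enough racks for the requested replica count")
    # Rank the racks by score and keep the top `replicas` of them.
    racks = sorted(CLUSTER, key=lambda r: _score(pg_id, r), reverse=True)[:replicas]
    # Inside each chosen rack, take the highest-scoring OSD.
    return [max(CLUSTER[r], key=lambda o: _score(pg_id, f"osd.{o}")) for r in racks]


print(place_pg("1.2f"))
```

Every client that holds the same cluster map computes the same answer, so no central lookup table is needed.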

RBD: short for RADOS block device, the block device service Ceph exposes externally, for example as virtual machine disks. It supports snapshots.

RGW: short for RADOS gateway, the object storage service Ceph exposes externally. Its interface is compatible with S3 and Swift.

CephFS: short for Ceph file system, the file system service Ceph exposes externally.

Block storage:

Typical devices: disk arrays and hard disks. It is mainly used to map raw disk space to a host.

Advantages: data protection through RAID, LVM, and similar means.

Multiple cheap hard disks are combined to increase capacity.

A logical disk assembled from multiple disks improves read and write efficiency.

Disadvantages:

When a SAN is used for networking, Fibre Channel switches are expensive.

Data cannot be shared between hosts.

Use cases:

Disk allocation for Docker containers and virtual machines.

Log storage.

File storage:

Typical devices: FTP and NFS servers. File storage exists to overcome the problem that files on block storage cannot be shared. Setting up an FTP or NFS service on a server gives you file storage.

Advantages: low cost, since any machine will do, and files can be shared conveniently.

Disadvantages: low read and write efficiency and a slow transfer rate.

Use cases:

Log storage.

File storage that needs a directory structure.

Object storage:

Typical devices: distributed servers with large built-in hard disks (Swift, S3). Multiple servers have high-capacity disks built in and run object storage management software to provide read and write access externally.

Advantages: the high read and write speed of block storage, combined with the sharing and other features of file storage.

Use cases: image storage and video storage.

Deployment

Because we use Ceph inside a Kubernetes cluster, and for ease of management, we deploy the Ceph cluster with Rook. Rook is an open-source cloud-native storage orchestration tool. It provides a platform, a framework, and support for various storage solutions so that they integrate natively with cloud-native environments.

Rook turns storage software into self-managing, self-scaling, and self-healing storage services by automating deployment, bootstrapping, configuration, provisioning, scaling, upgrading, migration, disaster recovery, monitoring, and resource management. Under the hood, Rook relies on the capabilities of the cloud-native container management, scheduling, and orchestration platform to provide these functions. It is what we usually call an operator. Rook uses extension points to integrate deeply into the cloud-native environment and provides a seamless experience for scheduling, lifecycle management, resource management, security, and monitoring.

Rook contains multiple components:

Rook operator: the core Rook component. The Rook operator is a simple container that automatically bootstraps the storage cluster and monitors the storage daemons to keep the cluster healthy.

Rook agent: runs on each storage node and configures a FlexVolume or CSI plugin that integrates with the Kubernetes volume control framework. The agent handles all storage operations, such as attaching network storage devices, mounting volumes on the host, and formatting file systems.

Rook discover: detects the storage devices attached to storage nodes.

Rook also deploys Ceph's MON, OSD, and MGR daemons as Kubernetes pods. The Rook operator lets users create and manage storage clusters through CRDs; each resource type defines its own CRD:

Rook cluster: provides the ability to configure a storage cluster that serves block storage, object storage, and shared file systems. Each cluster has multiple pools.

Pool: supports block storage. Pools are also used internally by file and object storage.

Object store: exposes the storage service through an S3-compatible interface.

File system: provides shared storage for multiple Kubernetes pods.
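
As an illustration of the CRD-driven workflow, the snippet below builds a block pool resource as a plain Python dictionary and prints it as JSON, which kubectl apply -f accepts just as it accepts YAML. It assumes a current Rook release, where the pool resource is kind CephBlockPool in API group ceph.rook.io/v1 and the operator runs in the rook-ceph namespace; older releases used different resource names. The pool name replicapool and the helper function are examples only.

```python
import json


def block_pool_manifest(name: str, replicas: int = 3,
                        failure_domain: str = "host") -> dict:
    """Build a replicated block pool resource for the Rook operator."""
    return {
        "apiVersion": "ceph.rook.io/v1",
        "kind": "CephBlockPool",
        "metadata": {"name": name, "namespace": "rook-ceph"},
        "spec": {
            # Spread replicas across this failure domain (for example host or rack).
            "failureDomain": failure_domain,
            "replicated": {"size": replicas},
        },
    }


print(json.dumps(block_pool_manifest("replicapool"), indent=2))
```

Saving the printed JSON to a file and applying it asks the operator to create the pool; the operator, not the user, then runs the underlying Ceph commands.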

边栏推荐

- 2322. Remove the minimum fraction of edges from the tree (XOR and & Simulation)

- Microservice architecture practice: using Jenkins to realize automatic construction

- Blog theme "text" summer fresh Special Edition

- GeoServer:发布PostGIS数据源

- Amazon cloud technology community builder application window opens

- PCL知识点——体素化网格方法对点云进行下采样

- 一年顶十年

- 13、Darknet YOLO3

- < IV & gt; H264 decode output YUV file

- 【Leetcode】14. 最长公共前缀

猜你喜欢

Soul, a social meta universe platform, rushed to Hong Kong stocks: Tencent is a shareholder with an annual revenue of 1.28 billion

Qwebengineview crash and alternatives

剑指 Offer 21. 调整数组顺序使奇数位于偶数前面

![[shutter] dart data type (dynamic data type)](/img/6d/60277377852294c133b94205066e9e.jpg)

[shutter] dart data type (dynamic data type)

Exploration of mobile application performance tools

Interpretation of key parameters in MOSFET device manual

si446使用记录(一):基本资料获取

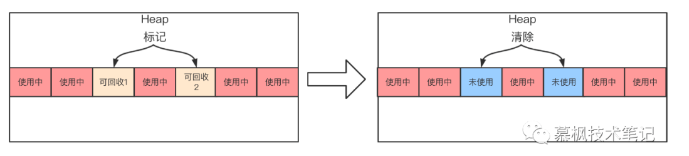

Believe in yourself and finish the JVM interview this time

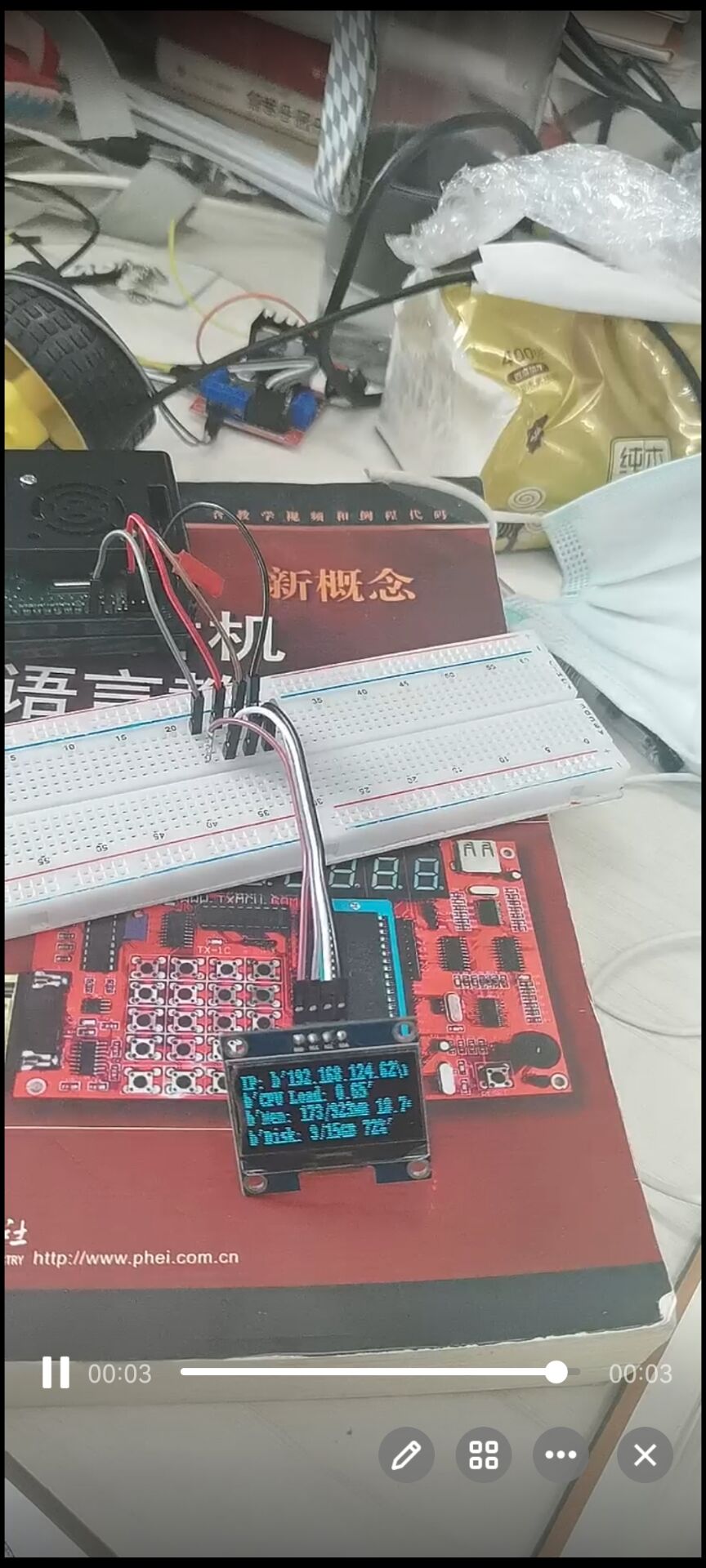

智能垃圾桶(五)——点亮OLED

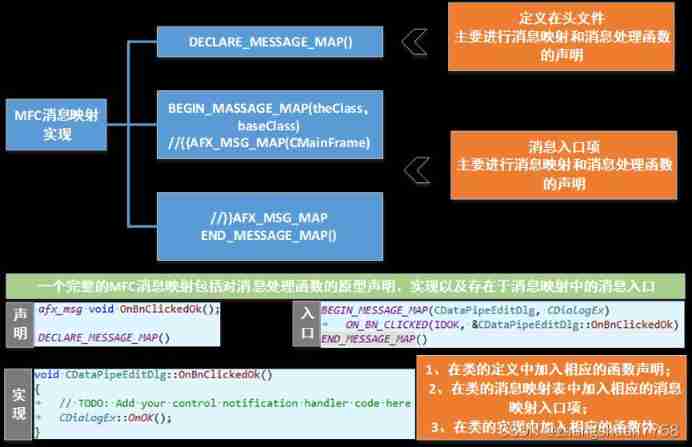

The difference of message mechanism between MFC and QT

随机推荐

详细介绍scrollIntoView()方法属性

Sword finger offer 21 Adjust the array order so that odd numbers precede even numbers

2、 Expansion of mock platform

简单介绍BASE64Encoder的使用

Win10 system uses pip to install juypter notebook process record (installed on a disk other than the system disk)

The difference of message mechanism between MFC and QT

The poor family once again gave birth to a noble son: Jiangxi poor county got the provincial number one, what did you do right?

Introduction to Hisilicon hi3798mv100 set top box chip [easy to understand]

Listing of chaozhuo Aviation Technology Co., Ltd.: raising 900million yuan, with a market value of more than 6billion yuan, becoming the first science and technology innovation board enterprise in Xia

A case study of college entrance examination prediction based on multivariate time series

Sword finger offer 26 Substructure of tree

例题 非线性整数规划

Ocio V2 reverse LUT

871. Minimum refueling times

[essay solicitation activity] Dear developer, RT thread community calls you to contribute

Baobab's gem IPO was terminated: Tang Guangyu once planned to raise 1.8 billion to control 47% of the equity

Linux Installation PostgreSQL + Patroni cluster problem

Fuyuan medicine is listed on the Shanghai Stock Exchange: the market value is 10.5 billion, and Hu Baifan is worth more than 4billion

剑指 Offer 21. 调整数组顺序使奇数位于偶数前面

The beginning of life