当前位置:网站首页>Gbdt source code analysis of boosting

Gbdt source code analysis of boosting

2022-07-25 15:08:00 【Weigtang 406 team】

Logarithmic loss function

LR in , Maximum likelihood is used to calculate parameters , That is, some parameters are obtained to maximize the probability of known samples . For the classification problem , The training set we know label, If all samples are predicted accurately , that y ^ = y \hat{y}=y y^=y. namely :

- y = 0 y=0 y=0, P ( Y ∣ X ) P(Y|X) P(Y∣X) As close as possible 0

- y = 1 y=1 y=1, P ( Y ∣ X ) P(Y|X) P(Y∣X) As close as possible 1

Then the target is : ∏ i = 1 N [ P ( Y = 1 ∣ X = x i ) ] y i [ 1 − P ( Y = 0 ∣ X = x i ) ] 1 − y i \prod_{i=1}^{N}\left[P\left(Y=1|X=x_{i}\right)\right]^{y_{i}}\left[1-P\left(Y=0|X=x_{i}\right)\right]^{1-y_{i}} ∏i=1N[P(Y=1∣X=xi)]yi[1−P(Y=0∣X=xi)]1−yi As big as possible

Further use log Transform quadrature into summation , Then the loss function is defined as follows :

L o s s = − ∑ i = 1 N y i [ P ( Y = 1 ∣ X = x i ) ] + 1 − y i [ 1 − P ( Y = 0 ∣ X = x i ) ] Loss = -\sum_{i=1}^{N}{y_{i}}\left[P\left(Y=1|X=x_{i}\right)\right]+{1-y_{i}}\left[1-P\left(Y=0|X=x_{i}\right)\right] Loss=−i=1∑Nyi[P(Y=1∣X=xi)]+1−yi[1−P(Y=0∣X=xi)]

hypothesis y ∈ { 0 , 1 } y\in\{0,1\} y∈{ 0,1}, For a sample ( x i x_i xi, y i y_i yi), Logarithmic loss function logloss Can be defined as :

L ( y i , p ( Y = 1 ∣ x i ) ) = − { y i log p i + ( 1 − y i ) log ( 1 − p i ) } L\left(y_{i}, p\left(Y=1|x_{i}\right)\right)=-\left\{y_{i} \log p_{i}+\left(1-y_{i}\right) \log \left(1-p_{i}\right)\right\} L(yi,p(Y=1∣xi))=−{ yilogpi+(1−yi)log(1−pi)}

stay GBDT in , Every round is fitting the negative gradient , The first step in forecasting is the accumulation of continuous values , Finally through sigmoid The function maps to 0-1 Between .

f m ( x ) = f m − 1 ( x ) + α m G m ( x ) = ∑ t = 1 m G t ( x ) P ( Y = 1 ∣ X = x i ) = s i g m o i d ( f m ( x i ) ) = p ( x i ) = 1 1 + e ( − f m ( x i ) ) \begin{aligned} &{f_m}\left( x \right) = {f_{m - 1}}\left( x \right) + {\alpha _m}{G_m}\left( x \right) = \sum\limits_{t = 1}^m { {G_t}\left( x \right)}\\ &P(Y=1|X=x_i)=sigmoid(f_m(x_i))=p{(x_i)}=\frac{1}{1+e^{\left(-f_{m}\left(x_{i}\right)\right)}} \end{aligned} fm(x)=fm−1(x)+αmGm(x)=t=1∑mGt(x)P(Y=1∣X=xi)=sigmoid(fm(xi))=p(xi)=1+e(−fm(xi))1

hold f m ( x ) f_m(x) fm(x) Plug in loss:

− L o s s ( y i , f m ( x i ) ) = y i log ( 1 1 + e ( − f m ( x i ) ) ) + ( 1 − y i ) log ( e ( − f m ( x i ) ) 1 + e ( − f m ( x i ) ) ) = − y i log ( 1 + e ( − f m ( x i ) ) ) + ( 1 − y i ) { log ( e ( − f m ( x i ) ) ) − log ( 1 + e ( − f m ( x i ) ) ) } = − y i log ( 1 + e ( − f m ( x i ) ) ) + log ( e ( − f m ( x i ) ) ) − log ( 1 + e ( − f m ( x i ) ) ) − y i log ( e ( − f m ( x i ) ) ) + y i log ( 1 + e ( − f m ( x i ) ) ) = y i f m ( x i ) − log ( 1 + e f m ( x i ) ) s o : L o s s ( y i , f m ( x i ) ) = − { y i f m ( x i ) − log ( 1 + e f m ( x i ) ) } = − y i f m ( x i ) + log ( 1 + e f m ( x i ) ) \begin{aligned} -Loss(y_i,f_m(x_i))&=y_{i} \log \left(\frac{1}{1+e^{\left(-f_{m}\left(x_{i}\right)\right)}}\right)+\left(1-y_{i}\right) \log \left(\frac{e^{\left(-f_{m}\left(x_{i}\right)\right)}}{1+e^{\left(-f_{m}\left(x_{i}\right)\right)}}\right)\\ &=-y_{i} \log \left(1+e^{\left(-f_{m}\left(x_{i}\right)\right)}\right)+\left(1-y_{i}\right)\left\{\log \left(e^{\left(-f_{m}\left(x_{i}\right)\right)}\right)-\log \left(1+e^{\left(-f_{m}\left(x_{i}\right)\right)}\right)\right\}\\ &=-y_{i}\log \left(1+e^{\left(-f_{m}\left(x_{i}\right)\right)}\right)+\log \left(e^{\left(-f_{m}\left(x_{i}\right)\right)}\right)-\log \left(1+e^{\left(-f_{m}\left(x_{i}\right)\right)}\right)-y_{i} \log \left(e^{\left(-f_{m}\left(x_{i}\right)\right)}\right)+y_{i} \log \left(1+e^{\left(-f_{m}\left(x_{i}\right)\right)}\right) \\ &= y_{i} f_{m}\left(x_{i}\right)-\log \left(1+e^{f_{m}\left(x_{i}\right)}\right)\\ so:Loss(y_i,f_m(x_i))&=-\{ y_{i} f_{m}\left(x_{i}\right)-\log \left(1+e^{f_{m}\left(x_{i}\right)}\right)\}\\ &=- y_{i} f_{m}\left(x_{i}\right)+\log \left(1+e^{f_{m}\left(x_{i}\right)}\right) \end{aligned} −Loss(yi,fm(xi))so:Loss(yi,fm(xi))=yilog(1+e(−fm(xi))1)+(1−yi)log(1+e(−fm(xi))e(−fm(xi)))=−yilog(1+e(−fm(xi)))+(1−yi){ log(e(−fm(xi)))−log(1+e(−fm(xi)))}=−yilog(1+e(−fm(xi)))+log(e(−fm(xi)))−log(1+e(−fm(xi)))−yilog(e(−fm(xi)))+yilog(1+e(−fm(xi)))=yifm(xi)−log(1+efm(xi))=−{ yifm(xi)−log(1+efm(xi))}=−yifm(xi)+log(1+efm(xi))

Negative gradient

Then the negative gradient is :

δ L δ f = − y + e f 1 + e f = − y + 1 1 + e − f \begin{aligned} \frac{ { \delta L}}{ { \delta f}} &=-y+\frac{ {\mathop{ {e}}\nolimits^{ {f}}}}{ {1+\mathop{ {e}}\nolimits^{ {f}}}} \\ &=-y+\frac{ {1}}{ {1+\mathop{ {e}}\nolimits^{ {-f}}}} \end{aligned} δfδL=−y+1+efef=−y+1+e−f1

For the first m Sub iteration , f = f m − 1 f=f_{m-1} f=fm−1, You can find the negative gradient of each point

Sklearn Source code

Intro

- sklearn edition :0.22

- The original code is _gb.py, Convenient test and result data , Change it to _gb_Source.py

- use iris Data for code testing and verification

Focus on the following parts :

- Parameter initialization 、check part

- The initial value given in the iteration process

- Specific iteration process of each round

- Model storage and prediction

import numpy as np

import pandas as pd

from sklearn import datasets

from sklearn.ensemble._gb_Source import GradientBoostingClassifier

from sklearn.utils.validation import check_array, column_or_1d

from sklearn.tree._tree import DTYPE, DOUBLE

from sklearn.tree import DecisionTreeRegressor

from sklearn.tree._tree import TREE_LEAF

from scipy.sparse import csc_matrix

from scipy.sparse import csr_matrix

from scipy.sparse import issparse

from scipy.special import expit

from sklearn.utils.multiclass import check_classification_targets

from sklearn.utils import check_random_state

from sklearn.utils import check_array

from sklearn.utils import column_or_1d

from sklearn.utils import check_consistent_length

from sklearn.utils import deprecated

from sklearn.utils.fixes import logsumexp

from sklearn.utils.stats import _weighted_percentile

from sklearn.utils.validation import check_is_fitted

from sklearn.utils.multiclass import check_classification_targets

from sklearn.exceptions import NotFittedError

from sklearn.dummy import DummyClassifier

import math

from sklearn import tree

iris_data = datasets.load_iris()

iris_df = pd.DataFrame(iris_data.data, columns=iris_data.feature_names)

iris_df["target"] = iris_data.target # 0 1 2

iris_df["target_name"] = iris_df.target.replace({

0: "setosa", 1: "versicolor", 2: "virginica"})

iris_df = iris_df.query("target_name in ('virginica', 'versicolor')")

iris_df = iris_df.iloc[0:80]

print(iris_df.target_name.value_counts())

iris_df.head()

versicolor 50

virginica 30

Name: target_name, dtype: int64

| sepal length (cm) | sepal width (cm) | petal length (cm) | petal width (cm) | target | target_name | |

|---|---|---|---|---|---|---|

| 50 | 7.0 | 3.2 | 4.7 | 1.4 | 1 | versicolor |

| 51 | 6.4 | 3.2 | 4.5 | 1.5 | 1 | versicolor |

| 52 | 6.9 | 3.1 | 4.9 | 1.5 | 1 | versicolor |

| 53 | 5.5 | 2.3 | 4.0 | 1.3 | 1 | versicolor |

| 54 | 6.5 | 2.8 | 4.6 | 1.5 | 1 | versicolor |

Parameter initialization 、check

call BaseGradientBoosting Class fit When the method is used , Will complete the initialization of some parameters .

Initialization mainly depends on two points :

- check_array hold X and y Turn it into an array ,column_or_1d hold y Turn into 1 Dimension group

- check_consistent_length test X and y Whether the data volume is consistent

self._validate_yIt's done y Of encode, Convert data into 0 1 2 Such an ordered valueself.classes_、self.n_classes_Respectively represent the enumeration of category values and the number of enumeration values

Focus on _validate_y function :

def _validate_y(self, y, sample_weight):

check_classification_targets(y) # check Is it category data , Looking at the code is more like passing check Variable y Of unique Quantity judgment type

# unique Enumerated values 、 Convert to value 0,1,2 wait , The original value sorting , Mapping to 0,1,2

self.classes_, y = np.unique(y, return_inverse=True)

# np.bincount Count the number of each value , because y The order has been arranged in front , So it is the number of each value

# In statistics, non 0 The number of , In theory, there will be no abnormality ...

n_trim_classes = np.count_nonzero(np.bincount(y, sample_weight))

if n_trim_classes < 2:

raise ValueError("y contains %d class after sample_weight "

"trimmed classes with zero weights, while a "

"minimum of 2 classes are required."

% n_trim_classes)

self.n_classes_ = len(self.classes_)

return y

It involves some np Of api, You can check the documents for details . All in all ,_validate_y hold ['a','b','c'] mapping [0,1,2].self.classes_= Sorted original string ,y Is replaced by the mapped value . another , Two classification fit when , It's called GradientBoostingClassifier in override Of _validate_y, instead of BaseGradientBoosting Medium ~

gbm = GradientBoostingClassifier(random_state=10,n_estimators=5)

gbm.fit(X=iris_df.iloc[:, 0:4], y=iris_df.target_name)

GradientBoostingClassifier(ccp_alpha=0.0, criterion='friedman_mse', init=None,

learning_rate=0.1, loss='deviance', max_depth=3,

max_features=None, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=5,

n_iter_no_change=None, presort='deprecated',

random_state=10, subsample=1.0, tol=0.0001,

validation_fraction=0.1, verbose=0,

warm_start=False)

gbm.n_classes_

2

gbm.classes_

array(['versicolor', 'virginica'], dtype=object)

fit When you finish y Of encode, Prediction is completed decode.

gbm._validate_y(iris_df.target_name,sample_weight=None)

array([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1], dtype=int64)

The initial value of iteration is determined

gbm.loss

'deviance'

if (self.loss not in self._SUPPORTED_LOSS

or self.loss not in _gb_losses.LOSS_FUNCTIONS):

raise ValueError("Loss '{0:s}' not supported. ".format(self.loss))

# loss_class It is equivalent to directly assigning classes

if self.loss == 'deviance':

loss_class = (_gb_losses.MultinomialDeviance

if len(self.classes_) > 2

else _gb_losses.BinomialDeviance)

else:

loss_class = _gb_losses.LOSS_FUNCTIONS[self.loss]

if self.loss in ('huber', 'quantile'):

self.loss_ = loss_class(self.n_classes_, self.alpha)

else:

self.loss_ = loss_class(self.n_classes_) # Number of incoming sample categories ?? Get an instance of a class

First look at loss What happened? :

_check_paramsComplete the initialization and... Of some parameters check function .self.lossParameters are passed in , Default deviance. First verify whether the passed value is within the supportable range- according to

self.classes_Judge the number of multiple classifications or Two classification loss_classEquivalent to BinomialDeviance classself.loss_It's an example of this class , For dichotomy , Parameter passed in 2, But when generating instances , Force the parameter to 1- In superclass LossFunction in , attribute K The value of is changed to 1, namely

self.loss_.K=1, This K The latter is crucial to the latitude of the generated data

class BinomialDeviance(ClassificationLossFunction):

# Be careful n_class Forced to 1 了 , That is to say K yes 1 了

def __init__(self, n_classes):

if n_classes != 2:

raise ValueError("{0:s} requires 2 classes; got {1:d} class(es)"

.format(self.__class__.__name__, n_classes))

# we only need to fit one tree for binary clf.

super().__init__(n_classes=1)

Next, let's look at the initial value

def _init_state(self):

"""Initialize model state and allocate model state data structures. """

self.init_ = self.init

# The predicted value of the first round is returned , That is, when the first iteration is decided y^hat, The default classification is based on a priori , Choose those who account for more

# The default is None, Call... Directly at this time DummyEstimator function , Computational a priori

if self.init_ is None:

self.init_ = self.loss_.init_estimator()

self.estimators_ = np.empty((self.n_estimators, self.loss_.K),

dtype=np.object)

self.train_score_ = np.zeros((self.n_estimators,), dtype=np.float64)

# do oob?

if self.subsample < 1.0:

self.oob_improvement_ = np.zeros((self.n_estimators),

dtype=np.float64)

_init_state complete loss and estimators_ The initialization . Note that for two categories , The generated dimension is (self.n_estimators,1) Empty array , The regression tree used to store iterations

The initial value is determined by init Appoint :

estimator or ‘zero’, default=None

An estimator object that is used to compute the initial predictions. init has to provide fit and predict_proba. If ‘zero’, the initial raw predictions are set to zero. By default, a DummyEstimator predicting the classes priors is used.

_init_stateInitialize parameters ,self.init_ = self.init, holdinitValue is assigned to_init- Followed by judgment , If

self.init_ is None,self.init_ = self.loss_.init_estimator() - call

self.loss_.init_estimator(), namely BinomialDeviance Of init_estimator Method , return DummyClassifier(strategy=‘prior’) - Completed above

self._init_state()The process self.init_ == 'zero'when ,raw_predictions by (n,1) Array of , All the elements are 0- otherwise ,

self.init_.fit(X, y, sample_weight=sample_weight), callDummyClassifier.fit raw_predictions = self.loss_.get_init_raw_predictions(X, self.init_), It's a little complicated here , Look directly at the code

def get_init_raw_predictions(self, X, estimator):

probas = estimator.predict_proba(X)

# postive A priori probability value of class

proba_pos_class = probas[:, 1]

# eps Is to take a nonnegative minimum

eps = np.finfo(np.float32).eps

# Limit proba_pos_class The maximum and minimum values cannot exceed the given eps and 1-eps, More than is equal to

proba_pos_class = np.clip(proba_pos_class, eps, 1 - eps)

# Inverse function , Finally, calculate the probability again , So first use the inverse function

# log(x / (1 - x)) is the inverse of the sigmoid (expit) function

raw_predictions = np.log(proba_pos_class / (1 - proba_pos_class))

# After transformation, the dimension becomes (n,1), This is the same as _update_terminal_regions Medium raw_predictions[:, k]

# correspond , For the classification problem ,k Fixed is 1

return raw_predictions.reshape(-1, 1).astype(np.float64)

prior_model = DummyClassifier(strategy='prior')

prior_model.fit(X=iris_df.iloc[:, 0:4], y=iris_df.target_name)

DummyClassifier(constant=None, random_state=None, strategy='prior')

prior_model.predict_proba(X=iris_df.iloc[:, 0:4])[0:5]

array([[0.625, 0.375],

[0.625, 0.375],

[0.625, 0.375],

[0.625, 0.375],

[0.625, 0.375]])

In short , If the positive and negative samples are 3:5,p(y=1) The probability is predicted as 0.375, And then through sigmoid The inverse function of raw_predictions. We need to make clear the so-called raw_predictions It's actually a continuous value , That is, the cumulative value superimposed step by step in the iteration process . It's all through sigmoid The switch to 0-1 Between . In the above code np Function can view the document by itself , Learn more about .

Now verify the logic :

from sklearn.ensemble._gb_losses import BinomialDeviance

gbm_loss = BinomialDeviance(2)

gbm_loss.K

1

so K Forced assignment 1, It has nothing to do with input

raw_predictions = gbm_loss.get_init_raw_predictions(X=iris_df.iloc[:, 0:4],estimator=prior_model)

raw_predictions.shape

(80, 1)

raw_predictions[0:5]

array([[-0.51082562],

[-0.51082562],

[-0.51082562],

[-0.51082562],

[-0.51082562]])

Check it out again sigmoid transformation

from scipy.special import expit

print(expit(-0.51082562),1/(1+np.exp(0.51082562)))

0.37500000088265406 0.37500000088265406

and DummyClassifier The prior probability of prediction is consistent

iterative process

n_stages = self._fit_stages(

X, y, raw_predictions, sample_weight, self._rng, X_val, y_val,

sample_weight_val, begin_at_stage, monitor, X_idx_sorted)

self._fit_stagesThe main logic is nothing , Namely for loopself.n_estimatorsTime , callself._fit_stage, We need to pay attention to how the value and model of each training are updated- namely :

raw_predictions = self._fit_stage(i, X, y, raw_predictions, sample_weight, sample_mask,random_state, X_idx_sorted, X_csc, X_csr)

For each iteration , The main steps are as follows :

- According to the last raw_predictions Calculate the negative gradient

- Regression tree predicts negative gradient , Update the predicted value of leaf nodes , Update tree structure

- to update raw_predictions, Store the tree model

self.estimators_in

Let's go through the logic of updating the model once

Train the regression tree , Enter the reference X= Characteristic data and y= Negative gradient

# First put the string category label Turn into 0-1 type

y = gbm._validate_y(iris_df.target_name,sample_weight=None)

y

array([0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,

1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1], dtype=int64)

raw_predictions[0:5]

array([[-0.51082562],

[-0.51082562],

[-0.51082562],

[-0.51082562],

[-0.51082562]])

residual = gbm.loss_.negative_gradient(y,raw_predictions)

residual

array([-0.375, -0.375, -0.375, -0.375, -0.375, -0.375, -0.375, -0.375,

-0.375, -0.375, -0.375, -0.375, -0.375, -0.375, -0.375, -0.375,

-0.375, -0.375, -0.375, -0.375, -0.375, -0.375, -0.375, -0.375,

-0.375, -0.375, -0.375, -0.375, -0.375, -0.375, -0.375, -0.375,

-0.375, -0.375, -0.375, -0.375, -0.375, -0.375, -0.375, -0.375,

-0.375, -0.375, -0.375, -0.375, -0.375, -0.375, -0.375, -0.375,

-0.375, -0.375, 0.625, 0.625, 0.625, 0.625, 0.625, 0.625,

0.625, 0.625, 0.625, 0.625, 0.625, 0.625, 0.625, 0.625,

0.625, 0.625, 0.625, 0.625, 0.625, 0.625, 0.625, 0.625,

0.625, 0.625, 0.625, 0.625, 0.625, 0.625, 0.625, 0.625])

def negative_gradient(self, y, raw_predictions, **kargs):

"""Compute the residual (= negative gradient).

Parameters

----------

y : 1d array, shape (n_samples,)

True labels.

raw_predictions : 2d array, shape (n_samples, K)

The raw_predictions (i.e. values from the tree leaves) of the

tree ensemble at iteration ``i - 1``.

"""

# expit(x) = 1/(1+exp(-x))

return y - expit(raw_predictions.ravel())

The formula of negative gradient has been derived previously , Code as above , Very clear .y For the real label value [0,1],raw_predictions Is the value predicted in the previous round , To validate the ~

δ L δ f = − y + e f 1 + e f = − y + 1 1 + e − f = − 0 + 1 / ( 1 + e − ( − 0.51082562 ) ) = 1 / ( 1 + e 0.51082562 ) = 0.3750 \begin{aligned} \frac{ { \delta L}}{ { \delta f}} &=-y+\frac{ {\mathop{ {e}}\nolimits^{ {f}}}}{ {1+\mathop{ {e}}\nolimits^{ {f}}}} \\ &=-y+\frac{ {1}}{ {1+\mathop{ {e}}\nolimits^{ {-f}}}} \\ &=-0+1/(1+e^{-(-0.51082562)}) \\ &=1/(1+e^{0.51082562})\\ &=0.3750 \end{aligned} δfδL=−y+1+efef=−y+1+e−f1=−0+1/(1+e−(−0.51082562))=1/(1+e0.51082562)=0.3750

1/(1+math.exp(0.51082562))

0.37500000088265406

Fitting regression tree & Update leaf nodes

Parameters and source code may be different , It doesn't matter , Look at the main contradiction

dtree = DecisionTreeRegressor(criterion="friedman_mse")

dtree.fit(iris_df.iloc[:, 0:4], residual)

DecisionTreeRegressor(ccp_alpha=0.0, criterion='friedman_mse', max_depth=None,

max_features=None, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, presort='deprecated',

random_state=None, splitter='best')

from IPython.display import Image

import pydotplus

dot_data = tree.export_graphviz(dtree, out_file=None,

feature_names=iris_data.feature_names,

class_names=iris_data.target_names,

filled=True, rounded=True,

special_characters=True)

graph = pydotplus.graph_from_dot_data(dot_data)

Image(graph.create_png())

The formula for updating the predicted value of leaf nodes is very intuitive , But the code involves a lot tree Methods , Not very familiar with , Make do with it

def _update_terminal_region(self, tree, terminal_regions, leaf, X, y,

residual, raw_predictions, sample_weight):

"""Make a single Newton-Raphson step.

our node estimate is given by:

sum(w * (y - prob)) / sum(w * prob * (1 - prob))

we take advantage that: y - prob = residual

"""

terminal_region = np.where(terminal_regions == leaf)[0]

residual = residual.take(terminal_region, axis=0)

y = y.take(terminal_region, axis=0)

sample_weight = sample_weight.take(terminal_region, axis=0)

numerator = np.sum(sample_weight * residual)

denominator = np.sum(sample_weight *

(y - residual) * (1 - y + residual))

# prevents overflow and division by zero

# Determine the value of leaf nodes

if abs(denominator) < 1e-150:

tree.value[leaf, 0, 0] = 0.0

else:

tree.value[leaf, 0, 0] = numerator / denominator

dtree.apply(iris_df.iloc[:,0:4])

array([ 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2,

2, 2, 2, 14, 2, 2, 2, 2, 2, 2, 11, 2, 2, 2, 2, 2, 6,

2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 16,

16, 16, 16, 16, 16, 10, 16, 16, 16, 16, 16, 16, 16, 16, 16, 16, 16,

16, 4, 16, 16, 16, 16, 16, 16, 15, 16, 16, 7], dtype=int64)

dtree.tree_.children_left

array([ 1, 2, -1, 4, -1, 6, -1, -1, 9, 10, -1, -1, 13, 14, -1, -1, -1],

dtype=int64)

The first 3、5、7 Nodes such as are leaf nodes , Computation first 3 Whether the predicted value of nodes is y The average of

Be careful : The first 3 Nodes are indexed from 1 Start counting ,apply Method returns from 0 Start counting

np.where(dtree.apply(iris_df.iloc[:,0:4]) == 2)[0]

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16,

17, 18, 19, 21, 22, 23, 24, 25, 26, 28, 29, 30, 31, 32, 34, 35, 36,

37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49], dtype=int64)

for i in [2, 4, 6, 7]:

print(

"leaf num - %s:" % i, "(check value %s)" %

residual[np.where(dtree.apply(iris_df.iloc[:, 0:4]) == i)[0]].mean(),

"(real value%s)" % dtree.tree_.value[i])

leaf num - 2: (check value -0.37499999999999994) (real value[[-0.375]])

leaf num - 4: (check value 0.625) (real value[[0.625]])

leaf num - 6: (check value -0.37499999999999994) (real value[[-0.375]])

leaf num - 7: (check value 0.625) (real value[[0.625]])

resudial_sample = residual.take(np.where(dtree.apply(iris_df.iloc[:,0:4]) == 2)[0])

y_sample = gbm._validate_y(iris_df.target_name,sample_weight=None).take(np.where(dtree.apply(iris_df.iloc[:,0:4]) == 2)[0], axis=0)

numerator = np.sum(resudial_sample)

denominator = np.sum((y_sample - resudial_sample) * (1 - y_sample + resudial_sample))

numerator / denominator

-1.5999999999999999

The conventional CART Back to the tree , The predicted value at the leaf node or Output value , Is the average value of the label value of the training sample falling in the leaf node area ( When the mean square error , The optimal ). And binary GBDT in , It is not considered to be as close to the negative gradient as possible , Instead, aim directly at the original target , The logarithmic loss function is the smallest . namely :

c t j = arg min ⏟ c ∑ x i ∈ R t j L ( y i , f t − 1 ( x i ) + c ) c_{t j}=\underbrace{\arg \min }_{c} \sum_{x_{i} \in R_{t j}} L\left(y_{i}, f_{t-1}\left(x_{i}\right)+c\right) ctj=cargminxi∈Rtj∑L(yi,ft−1(xi)+c)

The above formula is difficult to optimize , Generally, the following approximate values are used instead :

c t j = ∑ x i ∈ R t j r t i ∑ x i ∈ R t j ( y i − r t i ) ( 1 − y i + r t i ) c_{t j}=\frac{\sum_{x_{i} \in R_{t j}} r_{t i}} { \sum_{x_{i} \in R_{t j}}(y_i-r_{t i})\left(1-y_i+r_{t i}\right)} ctj=∑xi∈Rtj(yi−rti)(1−yi+rti)∑xi∈Rtjrti

Look at the code :

# Update the predicted value of a leaf node separately

def _update_terminal_region(self, tree, terminal_regions, leaf, X, y,

residual, raw_predictions, sample_weight):

"""Make a single Newton-Raphson step.

our node estimate is given by:

sum(w * (y - prob)) / sum(w * prob * (1 - prob))

we take advantage that: y - prob = residual

"""

# Find the sample index belonging to the leaf node

terminal_region = np.where(terminal_regions == leaf)[0]

# Take the negative gradient value of the corresponding sample and label value

residual = residual.take(terminal_region, axis=0)

y = y.take(terminal_region, axis=0)

# Sample weight , Let go of

sample_weight = sample_weight.take(terminal_region, axis=0)

# sum Negative gradient

numerator = np.sum(sample_weight * residual)

denominator = np.sum(sample_weight *

(y - residual) * (1 - y + residual))

# prevents overflow and division by zero

# Determine the value of leaf nodes

if abs(denominator) < 1e-150:

tree.value[leaf, 0, 0] = 0.0

else:

tree.value[leaf, 0, 0] = numerator / denominator

tree As a variable , Modify the value directly , And pass ,list、array Has a similar effect , Subsequent modification of residuals also directly updates the value in this way .

Now verify the above calculation logic :

First look at the tree diagram of the first classifier , You can easily see the value of the leaf node

from IPython.display import Image

import pydotplus

dot_data = tree.export_graphviz(gbm.estimators_[0][0], out_file=None,

feature_names=iris_data.feature_names,

class_names=iris_data.target_names,

filled=True, rounded=True,

special_characters=True)

graph = pydotplus.graph_from_dot_data(dot_data)

Image(graph.create_png())

gbm.estimators_[0][0].apply(iris_df.iloc[:, 0:4])

array([ 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2,

2, 2, 2, 11, 2, 2, 2, 2, 2, 2, 9, 2, 2, 2, 2, 2, 4,

2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2, 12,

12, 12, 12, 12, 12, 8, 12, 12, 12, 12, 12, 12, 12, 12, 12, 12, 12,

12, 4, 12, 12, 12, 12, 12, 12, 11, 12, 12, 5], dtype=int64)

gbm.estimators_[0][0].tree_.children_left

array([ 1, 2, -1, 4, -1, -1, 7, 8, -1, -1, 11, -1, -1], dtype=int64)

By modifying the source code , Save each residual in self.raw_predictions_list in

Calculate leaf nodes [3] The corresponding output value

leaf2_sample_index = np.where(gbm.estimators_[0][0].apply(iris_df.iloc[:, 0:4])==2)[0]

leaf2_sample_index

array([ 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16,

17, 18, 19, 21, 22, 23, 24, 25, 26, 28, 29, 30, 31, 32, 34, 35, 36,

37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49], dtype=int64)

y_sample = gbm._validate_y(iris_df.target_name,sample_weight=None).take(leaf2_sample_index, axis=0)

resudial_sample = gbm.residual_list[0][0].take(leaf2_sample_index, axis=0)

numerator = np.sum(resudial_sample)

denominator = np.sum((y_sample - resudial_sample) * (1 - y_sample + resudial_sample))

numerator / denominator

-1.5999999999999999

gbm.estimators_[0][0].tree_.value[2, 0, 0]

-1.5999999999999999

raw_predictions to update

Regression tree fitting above , Get a new regression tree

raw_predictions[:, k] += \

learning_rate * tree.value[:, 0, 0].take(terminal_regions, axis=0)

- In the second category ,k=1, No matter how many categories

- The value predicted by the new regression tree +raw_predictions Update the original value raw_predictions

- This is the logic of addition

The second training

- Updated raw_predictions and y Calculate and update the negative gradient

- (X,residual) Train the new regression tree

- Continue iteration raw_predictions

Iteration termination condition

Achieve the preset n_estimators Stop iterating

The model in the iteration process is stored in self.estimators_ in

Model to predict

raw_prediction

The model prediction is divided into two steps :

- N Models get N results ,raw_prediction

- N results

*learning_rateAdd up + Initialized value ,sigmoid convert to 0-1 Probability between

F M ( x ) = F 0 ( x ) + ∑ m = 1 M ∑ j = 1 J m c m , j I ( x ∈ R m , j ) F_{M}(x)=F_{0}(x)+\sum_{m=1}^{M} \sum_{j=1}^{J_{m}} c_{m, j} I\left(x \in R_{m, j}\right) FM(x)=F0(x)+m=1∑Mj=1∑Jmcm,jI(x∈Rm,j)

# Unless otherwise specified , Return a priori , That is, those with more categories

def _raw_predict_init(self, X):

"""Check input and compute raw predictions of the init estimtor."""

self._check_initialized()

X = self.estimators_[0, 0]._validate_X_predict(X, check_input=True)

if X.shape[1] != self.n_features_:

raise ValueError("X.shape[1] should be {0:d}, not {1:d}.".format(

self.n_features_, X.shape[1]))

if self.init_ == 'zero':

raw_predictions = np.zeros(shape=(X.shape[0], self.loss_.K),

dtype=np.float64)

else:

raw_predictions = self.loss_.get_init_raw_predictions(

X, self.init_).astype(np.float64)

return raw_predictions

# The following explanation seems to return the sum of the predicted values , The specific code seems to be c Written , Hide the

def _raw_predict(self, X):

"""Return the sum of the trees raw predictions (+ init estimator)."""

# Initial predicted value

raw_predictions = self._raw_predict_init(X)

# Go through the iterative process , Find the final raw_predictions

predict_stages(self.estimators_, X, self.learning_rate,

raw_predictions)

return raw_predictions

The core code is pwd file , I can't see the source code . Directly follow the above logic , Do validation ~

The initial value is consistent with the logic in the previous initialization ~gbm.init_ namely DummyClassifier First get the prediction probability , Again sigmod Inverse function obtained raw_prediction

gbm.loss_.get_init_raw_predictions(iris_df.iloc[0:2,0:4],gbm.init_)

array([[-0.51082562],

[-0.51082562]])

Get each tree Multiply the predicted value of by learning_rate

pre_list = []

for i in gbm.estimators_:

pre_list.append(i[0].predict(iris_df.iloc[0:1,0:4])[0]*gbm.learning_rate)

pre_list

[-0.16,

-0.1511286273379727,

-0.14395717809370576,

-0.13806361187211877,

-0.13315505338282463]

gbm.loss_.get_init_raw_predictions(iris_df.iloc[0:1,0:4],gbm.init_)[0][0]+sum(pre_list)

-1.2371300944526125

predict_proba

sigmoid Function into probability

def predict_proba(self, X):

raw_predictions = self.decision_function(X)

try:

# Did Logit Transformation

return self.loss_._raw_prediction_to_proba(raw_predictions)

except NotFittedError:

raise

except AttributeError:

raise AttributeError('loss=%r does not support predict_proba' %

self.loss)

gbm.predict_proba(iris_df.iloc[0:1,0:4])

array([[0.77506407, 0.22493593]])

expit(gbm.loss_.get_init_raw_predictions(iris_df.iloc[0:1,0:4],gbm.init_)[0][0]+sum(pre_list))

0.2249359293642033

Consistent with the direct prediction , Logic verification is completed

predict

predict There is one label decode The logic of , During the previous initialization , adopt gbm._validate_y hold label Worth doing encode, Like Ben case in , hold 'versicolor', 'virginica' It maps to 0、1

def predict(self, X):

# Some code is not pure py Of , All in all , Finally, the prediction value of each model is accumulated to a result

raw_predictions = self.decision_function(X)

# Find the index where the maximum probability value is located

encoded_labels = \

self.loss_._raw_prediction_to_decision(raw_predictions)

# encoded_labels yes [0,1] It's worth ,return Return its corresponding original y The data of , Such as (good,bad)、(+1,-1) such

return self.classes_.take(encoded_labels, axis=0)

-------------------------------------------------------------------------------------

def _raw_prediction_to_proba(self, raw_predictions):

proba = np.ones((raw_predictions.shape[0], 2), dtype=np.float64) # Generate N individual [1,1], Used to store probability

proba[:, 1] = expit(raw_predictions.ravel())# Did logit Transformation , Map values to 0-1 Between

proba[:, 0] -= proba[:, 1]

return proba

def _raw_prediction_to_decision(self, raw_predictions):

# Probability transformation

proba = self._raw_prediction_to_proba(raw_predictions)

return np.argmax(proba, axis=1) # It seems that the returned index ( Which probability is greater ,0or1), The code will further map the index to the corresponding category

decision_functionFunction to getraw_prediction_raw_prediction_to_decisionGet the index with high probability , In fact, it is 0、1predictIndex based , Map to the corresponding original category

summary

model training

- Initializing page 0 A weak learner F 0 F_0 F0, According to the participation , May be DummyClassifier, obtain raw_prediction

- Training

n_estimatorsA classifier- 1) according to raw_prediction and y Calculate the negative gradient residual

- 2)( features X, Negative gradient residual) Training cart Back to the tree

- 3) Update the output value of each leaf node

- 4) to update

raw_prediction,raw_prediction+learning_rate*leaf_outputThat is, add the learning rate times tree The predicted value of - Continue with the above 4 Step , To iterate

- The number of classifiers reaches the specified number , Stop iterating

Model to predict

- Wait until the initialization value , That is, the second above 0 The predicted value obtained by a weak learner

- secondly

n_estimatorsThe prediction results of classifiers are multiplied by the learning rate - Add the above two results , Re pass sigmoid transformation , Get the probability

Ref

[1] https://www.6aiq.com/article/1583859185789

[2] https://liangyaorong.github.io/blog/2017/scikit-learn%E4%B8%AD%E7%9A%84GBDT%E5%AE%9E%E7%8E%B0/#2.6

[3] https://blog.csdn.net/qq_22238533/article/details/79192579

[4] https://www.cnblogs.com/pinard/p/6140514.html#!comments

Tossed for nearly a month , I'm still too lazy , Hopelessly lazy . Neither cleverness nor diligence accounts for , Still thinking about laziness , absurd !

2021-05-18 Jiulong lake, Jiangning District, Nanjing

边栏推荐

- 【微信小程序】小程序宿主环境详解

- 剑指Offer | 二进制中1的个数

- Pl/sql creates and executes ORALCE stored procedures and returns the result set

- [C题目]力扣206. 反转链表

- 32 use of chrome debugging tools

- 解决asp.net上传文件时文件太大导致的错误

- sql server强行断开连接

- C语言函数复习(传值传址【二分查找】,递归【阶乘,汉诺塔等】)

- Detailed explanation of lio-sam operation process and code

- System.AccessViolationException: 尝试读取或写入受保护的内存。这通常指示其他内存已损坏

猜你喜欢

EDA chip design solution based on AMD epyc server

27 选择器的分类

outline和box-shadow实现外轮廓圆角高光效果

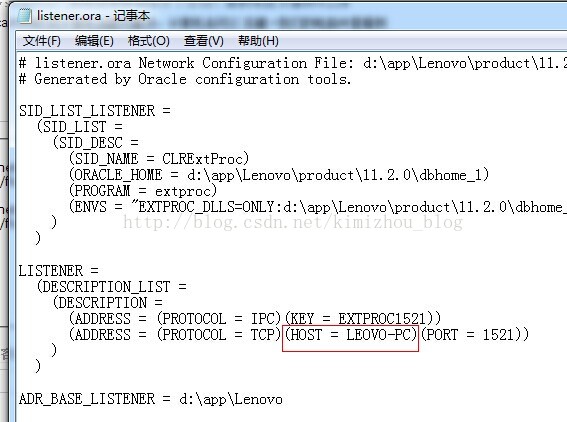

oracle_ 12505 error resolution

Yarn: the file yarn.ps1 cannot be loaded because running scripts is prohibited on this system.

6月产品升级观察站

密码强度验证示例

推荐10个堪称神器的学习网站

37 element mode (inline element, block element, inline block element)

006 operator introduction

随机推荐

oracle_ 12505 error resolution

LeetCode_ Factorization_ Simple_ 263. Ugly number

Cmake specify opencv version

《三子棋》C语言数组应用 --n皇后问题雏形

Realsense ROS installation configuration introduction and problem solving

Scala110-combineByKey

[C topic] force buckle 876. Intermediate node of linked list

Scala110-combineByKey

"Ask every day" briefly talk about JMM / talk about your understanding of JMM

Qt connect 中, SIGNAL,SLOT 与 lambda 对比

sql server强行断开连接

Raft of distributed consistency protocol

I2C device driver hierarchy

Unable to start web server when Nacos starts

ice 100G 网卡分片报文 hash 问题

[comprehensive pen test] difficulty 4/5, classic application of line segment tree for character processing

js URLEncode函数

sql to linq 之存储过程偏

如何解决Visual Studio中scanf编译报错的问题

BigDecimal rounds the data