PCA dimension reduction application of OpenCV (I)

2022-06-21 14:31:00 【Modest learning and progress】

About PCA dimensionality reduction in OpenCV

Today I finally got OpenCV's built-in dimensionality reduction function working. It took me a whole day.

First of all, thanks to the author of this blog post:

http://blog.codinglabs.org/articles/pca-tutorial.html

I read his “The Mathematical Principles of PCA” and it really cleared things up for me. It makes the deep mathematics simple, and the explanation is vivid and clear.

All right, let's get to the point:

Before dimensionality reduction, the VLAD feature I extract for each image is 60*64, that is, 3840 dimensions. My image library has 5063 images, so before dimensionality reduction the library's VLAD features form a 5063*3840 matrix. Let's give it a name, VLAD_all: it has 5063 rows and 3840 columns (don't mix up rows and columns). I want to reduce the 3840-dimensional features to 128 dimensions, a 30-fold reduction,

so that my image library features finally become 5063*128. All right, look at the code:

PCA pca(before_PCA, Mat(), CV_PCA_DATA_AS_ROW, 128);

before_PCA holds the library features before dimensionality reduction, i.e. the 5063*3840 matrix. You can pass it in directly without transposing, because CV_PCA_DATA_AS_ROW tells PCA that each row is one sample. After this statement, the eigenvector matrix is 128*3840 (128 rows, 3840 columns; from here on I always write sizes in rows*columns order and won't repeat this), namely:

Mat eigenvectors = pca.eigenvectors.clone();

eigenvectors holds the 128*3840 eigenvector matrix. Note that this matrix is not the result of dimensionality reduction; it is only an intermediate eigenvector matrix. It is very important, though: see “The Mathematical Principles of PCA” linked above for details.
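To make the distinction concrete, here is a minimal sketch in plain C++ (no OpenCV) of what one row of such an eigenvector matrix is: the direction of largest variance of the mean-centred data. The helper name `leadingEigenvector` and the use of power iteration are my own choices for illustration, and the sketch assumes non-empty data; it is not a description of how cv::PCA is implemented internally.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;  // row-major, one sample per row

// Returns the unit-length direction of largest variance of `data`
// (N samples as rows, D features as columns), found by power iteration
// on the D x D covariance matrix. Hypothetical helper, illustration only.
// Assumes `data` is non-empty and that the start vector (1, 0, ..., 0)
// is not orthogonal to the leading eigenvector.
std::vector<double> leadingEigenvector(const Matrix& data, int iterations = 100) {
    const std::size_t n = data.size();
    const std::size_t d = data[0].size();

    // 1. Mean of every column (feature).
    std::vector<double> mean(d, 0.0);
    for (const auto& row : data)
        for (std::size_t j = 0; j < d; ++j) mean[j] += row[j] / n;

    // 2. Covariance matrix of the mean-centred data.
    Matrix cov(d, std::vector<double>(d, 0.0));
    for (const auto& row : data)
        for (std::size_t i = 0; i < d; ++i)
            for (std::size_t j = 0; j < d; ++j)
                cov[i][j] += (row[i] - mean[i]) * (row[j] - mean[j]) / n;

    // 3. Power iteration: repeatedly apply cov and renormalise.
    std::vector<double> v(d, 0.0);
    v[0] = 1.0;
    for (int it = 0; it < iterations; ++it) {
        std::vector<double> next(d, 0.0);
        for (std::size_t i = 0; i < d; ++i)
            for (std::size_t j = 0; j < d; ++j) next[i] += cov[i][j] * v[j];
        double norm = 0.0;
        for (double x : next) norm += x * x;
        norm = std::sqrt(norm);
        if (norm == 0.0) break;  // no variance at all: keep the current v
        for (std::size_t i = 0; i < d; ++i) v[i] = next[i] / norm;
    }
    return v;
}
```

For the four points (0,0), (1,1), (2,2), (3,3) this returns a vector along the diagonal, roughly (0.707, 0.707). That vector describes the data's main axis; it is not itself a reduced feature. In pca.eigenvectors, 128 such directions are stacked as rows, and the reduced data only appears once the features are multiplied by them.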

All right, now for the most important part:

Mat VLAD_all_pca = eigenvectors*VLAD_all.t();

Note that I use the transpose of VLAD_all here. Otherwise the inner dimensions of the matrix product do not match and the program aborts. Let's analyze this line, which is the most important one:

eigenvectors is the 128*3840 eigenvector matrix, and VLAD_all.t() is the 3840*5063 transpose of the library's VLAD features, so they match: eigenvectors has 3840 columns and VLAD_all.t() has 3840 rows, so the two can be multiplied. Now guess how many rows and columns the product VLAD_all_pca has.

That's right, VLAD_all_pca is 128*5063 (transpose it where needed; transposition has tripped me up before). We now use it as the new VLAD features. This is what I mean by the real image library features after PCA dimensionality reduction. It is much smaller than the previous 5063*3840: the xml file for the original 5063*3840 matrix is 327M,

and the xml file for the reduced VLAD_all_pca is 10.9M. Yes, 30 times smaller, which means the speed goes up 30 times (of course this is a rough statement; in practice there are many other time costs).
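The shape bookkeeping of that multiplication can be checked without OpenCV. The following is a small sketch in plain C++ with hypothetical helpers `transpose` and `multiply`; it mirrors eigenvectors*VLAD_all.t() on nested vectors and rejects mismatched inner dimensions, which is the failure the text warns about when the transpose is forgotten.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

using Matrix = std::vector<std::vector<float>>;  // row-major

// Transpose of an R x C matrix, giving a C x R matrix.
Matrix transpose(const Matrix& m) {
    const std::size_t rows = m.size();
    const std::size_t cols = rows ? m[0].size() : 0;
    Matrix t(cols, std::vector<float>(rows));
    for (std::size_t i = 0; i < rows; ++i)
        for (std::size_t j = 0; j < cols; ++j) t[j][i] = m[i][j];
    return t;
}

// Plain matrix product a (K x D) * b (D x N) -> K x N.
// Throws std::invalid_argument if the inner dimensions differ.
Matrix multiply(const Matrix& a, const Matrix& b) {
    const std::size_t k = a.size();
    const std::size_t d = k ? a[0].size() : 0;
    if (b.size() != d) throw std::invalid_argument("inner dimensions differ");
    const std::size_t n = d ? b[0].size() : 0;
    Matrix out(k, std::vector<float>(n, 0.0f));
    for (std::size_t i = 0; i < k; ++i)
        for (std::size_t p = 0; p < d; ++p)
            for (std::size_t j = 0; j < n; ++j) out[i][j] += a[i][p] * b[p][j];
    return out;
}
```

With the sizes from this post, K = 128, D = 3840 and N = 5063, so `multiply(eigenvectors, transpose(features))` is 128*5063, and transposing that result gives 5063*128, one reduced feature per row, the same layout as VLAD_all.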

//**************************** Three places need to be changed: the original image, the image read path, and the xml file read path; also pictures_num ****************************

#include <boost/lexical_cast.hpp>

#include <iostream>

#include <string>

#include <vector>

#include <boost/filesystem.hpp>

#include <opencv/cv.h>

#include <opencv/highgui.h>

#include <opencv/ml.h>

#include <opencv2/nonfree/features2d.hpp>

using namespace boost::filesystem;

using namespace std;

using namespace cv;

//#pragma comment( lib, "opencv_highgui249d.lib")

//#pragma comment( lib, "opencv_core249d.lib")

// Global variables

#define numClusters 60 // Number of cluster centers. If Extract_VLAD_Features is used, numClusters must not exceed 64 with SURF and should not exceed 128 with SIFT

Mat Descriptor_all(0, 64, CV_32FC1);

Mat VLAD_all(0, 64 * numClusters, CV_32FC1);// Stores the VLAD features of the whole image library

Mat centers(numClusters, 64, CV_32FC1);// centers does not actually need a predefined size; after clustering it holds the clustering result automatically, e.g. centers(numClusters, 128, CV_32FC1)

Mat labels(0, numClusters, CV_32FC1);

Mat Extract_VLAD_Features(Mat &descriptors, Mat &centers, float alpha = 1);

float ComputeSimilarity(Mat vlad_feat_query, Mat vlad_feat_train);

void Extractor_allDescriptors(path&basepath);

void Extractor_allVLAD(char filePath[150], int n_picture);// Reads n_picture images

int main(){

double time = static_cast<double>(getTickCount());

FileStorage fsd("D:/kmeans_data/centers60_64_74picture.xml", FileStorage::READ);

fsd["centers"] >> centers;

fsd.release();

// Read the VLAD feature vectors of the library images

double timedata = static_cast<double>(getTickCount());

FileStorage fvlad("D:/kmeans_data/all_VLAD5063.xml", FileStorage::READ);

fvlad["VLAD_all"] >> VLAD_all;

fvlad.release();

timedata = ((double)getTickCount() - timedata) / getTickFrequency();

cout << "Time to read VLAD_all data: " << timedata << " s" << endl << endl;

// Dimensionality reduction: generate the eigenvectors required for the reduction and save them to xml:

//double PCA_time = static_cast<double>(getTickCount());

//int pca_num = 128;// Reduce to 128 dimensions

//Mat before_PCA = VLAD_all.clone();//before_PCA holds the features before dimensionality reduction. It is a copy of VLAD_all, not its transpose: 5063 rows (one per library feature read from fvlad) and 60*64 = 3840 columns

//Mat after_PCA_eigenvectors;// Holds the PCA eigenvectors

//cout << "Running PCA dimensionality reduction..." << endl;

//PCA pca(before_PCA, Mat(), CV_PCA_DATA_AS_ROW, pca_num);

//Mat eigenvectors = pca.eigenvectors.clone();//eigenvectors holds the PCA eigenvector matrix (128*3840), not the reduced features

//PCA_time = ((double)getTickCount() - PCA_time) / getTickFrequency();

//cout << "PCA time: " << PCA_time << " s" << endl << endl;

//FileStorage f_pca("D:/kmeans_data/after_PCA_eigenvectors.xml", FileStorage::WRITE);

//f_pca << "after_PCA_eigenvectors" << eigenvectors;

//f_pca.release();

// Read the eigenvectors saved above and apply the actual dimensionality reduction to the input data:

double time_T = static_cast<double>(getTickCount());

Mat after_PCA_eigenvectors;// Holds the PCA eigenvectors read from the xml file

FileStorage f_pca("D:/kmeans_data/after_PCA_eigenvectors.xml", FileStorage::READ);

f_pca["after_PCA_eigenvectors"] >> after_PCA_eigenvectors;

f_pca.release();

Mat VLAD_all_pca = after_PCA_eigenvectors*VLAD_all.t();//VLAD_all_pca holds the actual dimension-reduced all_VLAD5063 data (128*5063)

FileStorage fs_pca("D:/kmeans_data/VLAD_all_pca.xml", FileStorage::WRITE);

fs_pca << "VLAD_all_pca" << VLAD_all_pca;

fs_pca.release();

cout << "Dimension-reduced data extracted successfully!" << endl;

time_T = ((double)getTickCount() - time_T) / getTickFrequency();

cout << "Time to read the eigenvectors and do the PCA matrix multiplication: " << time_T << " s" << endl << endl;

time = ((double)getTickCount() - time) / getTickFrequency();

cout << "Total time: " << time << " s" << endl << endl;

system("pause");

return 0;

}
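One point worth knowing about this program: it multiplies VLAD_all by the eigenvector matrix directly, whereas cv::PCA also keeps the per-column mean in pca.mean, and PCA::project subtracts that mean before multiplying. The two results differ only by an offset that is the same for every image, so Euclidean distances between reduced features are unchanged (inner-product or cosine similarities generally are not). The sketch below checks this on toy data in plain C++; `project` and `euclideanDistance` are hypothetical helpers, not OpenCV calls.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;
using Matrix = std::vector<Vec>;  // row-major, one basis vector per row

// Projects x onto the rows of `basis`. If `mean` is non-empty it is
// subtracted first (the PCA::project convention); if it is empty the raw
// vector is projected (what the program above does).
Vec project(const Matrix& basis, const Vec& x, const Vec& mean = {}) {
    Vec out(basis.size(), 0.0);
    for (std::size_t k = 0; k < basis.size(); ++k)
        for (std::size_t j = 0; j < x.size(); ++j)
            out[k] += basis[k][j] * (x[j] - (mean.empty() ? 0.0 : mean[j]));
    return out;
}

// Euclidean distance between two vectors of equal length.
double euclideanDistance(const Vec& a, const Vec& b) {
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i)
        sum += (a[i] - b[i]) * (a[i] - b[i]);
    return std::sqrt(sum);
}
```

If retrieval ranks images by Euclidean distance, skipping the mean subtraction should therefore not change the ranking. If it uses a dot product or cosine similarity, projecting with pca.project (or subtracting pca.mean by hand) is the safer choice.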

Let's look at the results. The three pictures at the bottom right are the three images most similar to the query image found among the 5063 library images.

The first picture is the retrieval result before dimensionality reduction, and the last two are the results after dimensionality reduction. As you can see, retrieval still seems to work very well after the features are reduced from 3840 to 128 dimensions. Careful readers can check my numbers: retrieval takes about 9.8 s before dimensionality reduction and 1.3 s after. Of course this also includes reading the files, and many steps could still be optimized.
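The retrieval step itself is not shown in this post. As a rough sketch, assuming similarity is measured by Euclidean distance (ComputeSimilarity is declared in the code above but its body is not shown, so this is a guess), finding the three closest library images among the reduced features could look like the following. `topMatches` is a hypothetical helper, and the library is stored with one image per row, i.e. the 5063*128 layout.

```cpp
#include <algorithm>
#include <cstddef>
#include <utility>
#include <vector>

using Feature = std::vector<float>;

// Returns the indices of the `count` library features closest to `query`,
// nearest first, using squared Euclidean distance. Hypothetical helper.
std::vector<std::size_t> topMatches(const std::vector<Feature>& library,
                                    const Feature& query, std::size_t count) {
    std::vector<std::pair<float, std::size_t>> scored;  // (distance, index)
    scored.reserve(library.size());
    for (std::size_t i = 0; i < library.size(); ++i) {
        float dist = 0.0f;
        for (std::size_t j = 0; j < query.size(); ++j) {
            const float diff = library[i][j] - query[j];
            dist += diff * diff;
        }
        scored.emplace_back(dist, i);
    }
    count = std::min(count, scored.size());
    // Only the first `count` entries need to be in sorted order.
    std::partial_sort(scored.begin(), scored.begin() + count, scored.end());
    std::vector<std::size_t> result;
    for (std::size_t i = 0; i < count; ++i) result.push_back(scored[i].second);
    return result;
}
```

The inner loop runs once per feature dimension, so comparing 128-dimensional features does 30 times less arithmetic per library image than comparing 3840-dimensional ones. File reading and other overheads are not reduced by the same factor, which fits the measured times above being less than 30 times apart.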

Actually, I referred to this post earlier: http://blog.csdn.net/u010555682/article/details/52563970

I just feel that the rows and columns of the feature vectors he extracts are different from mine. Maybe I didn't fully understand it. Readers who are afraid of getting confused can skip it.

By the way, for image retrieval with VLAD features you can refer to the article by this author. Although I don't like the author, the article is still a useful reference:

http://www.cnblogs.com/mafuqiang/p/6909556.html

If you want to study image retrieval in more depth, this master's thesis is well written: “Image Retrieval Based on GMM_VLAD”, by Chenmanyu

Source: https://blog.csdn.net/guanyonglai/article/details/78418078#commentBox

边栏推荐

- Design and implementation of object system in redis

- Cmake upgrade

- kernel GDB

- UBI error: ubi_ read_ volume_ table: the layout volume was not found

- Subshell

- Define structure dynamically when macro is defined

- C#&. Net to implement a distributed event bus from 0 (1)

- Mingw-w64 installation tutorial

- 2021 the latest selenium truly bypasses webdriver detection

- 网上开户安全吗?新手可以开账户吗

猜你喜欢

How can an e-commerce system automatically cancel an order when it times out?

A blazor webassembly application that can automatically generate page components based on objects or types

Gensim error attributeerror: type object 'word2vec' has no attribute 'load_ word2vec_ format‘

In the autumn of 2022, from being rejected to sp+, talk about the experience and harvest of YK bacteria in 2021

Qt-3-basic components

Numpy: basic package for high performance scientific computing & data analysis

MySQL failover and master-slave switchover based on MHA

Explain the design idea and capacity expansion mechanism of ThreadLocal in detail

7hutool actual fileutil file tool class (common operation methods for more than 100 files)

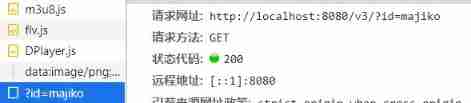

Dplayer development barrage background

随机推荐

Review notes of web development technology

ARP interaction process

Comprehensively analyze the key points of knowledge required for interface testing and interface testing

理财产品的赎回时间是怎么规定的?

Chapter 2 - physical layer (II) circuit switching, message switching and packet switching (datagram and virtual circuit)

Explain the design idea and capacity expansion mechanism of ThreadLocal in detail

Oracle client11 and pl/sql12 installation

English accumulation__ annoyance

Record the troubleshooting process of excessive CPU usage

Configuring MySQL master-slave and implementing semi synchronous replication in docker environment

技术分享 | MySQL中一个聚类增量统计 SQL 的需求

Postman reports error write eproto 93988952error10000f7ssl routinesopenssl_ internalWRONG_ VERSION_ NUM

LINQ extension methods - any() vs. where() vs. exists() - LINQ extension methods - any() vs. where() vs. exists()

Declare war on uncivilized code II

Qt-6-file IO

网上开户安全吗?新手可以开账户吗

Skills of assigning IP address by DHCP in secondary route after wireless bridge

Alibaba cloud log service is available in Net project

Analysis of ROC and AUC

STM32F0-DAY1