
Bayesian inference problem, MCMC and variational inference

2022-06-28 19:20:00 【Lian Li o】


The Bayesian inference problem

What is Bayesian inference?

- Simply put, Bayesian inference is statistical inference carried out within the Bayesian paradigm. The basic idea of the Bayesian paradigm is to use Bayes' theorem to express the relationship between the posterior $p(\theta \mid x)$ (the "posterior"), the prior $p(\theta)$ (the "prior") and the likelihood $p(x \mid \theta)$ (the "likelihood"):

$$p(\theta \mid x) = \frac{p(x \mid \theta)\,p(\theta)}{p(x)}$$

Computational difficulties

- In many scenarios the prior and the likelihood are both known, but the normalization factor, the evidence $p(x)$, has to be obtained by integration:

$$p(x) = \int p(x \mid \theta)\,p(\theta)\,d\theta$$

This integral becomes intractable in high dimensions, so approximate methods are needed to estimate the posterior.

- Common approximation methods are Markov Chain Monte Carlo and Variational Inference (keep in mind that these methods can also be valuable when facing other computational difficulties related to Bayesian inference).
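For a one-dimensional parameter the evidence integral can still be computed by brute force. The sketch below assumes a hypothetical coin-flip model that is not part of the original text (a uniform prior on the head probability $\theta$ and a Bernoulli likelihood) and approximates $p(x)$ with the trapezoidal rule on a grid. The same grid in $d$ dimensions would need $n^d$ points, which is why this approach stops working in high dimensions.

```python
import numpy as np


def trapezoid(y, x):
    """Trapezoidal rule on a uniform grid (written out to avoid NumPy version differences)."""
    dx = x[1] - x[0]
    return dx * (y.sum() - 0.5 * (y[0] + y[-1]))


def grid_posterior(heads, tails, n_points=10_001):
    """Posterior of a coin's head probability theta on a grid.

    Hypothetical example: uniform prior p(theta) = 1 on [0, 1] and a
    Bernoulli likelihood p(x | theta) = theta**heads * (1 - theta)**tails.
    """
    theta = np.linspace(0.0, 1.0, n_points)
    prior = np.ones_like(theta)
    likelihood = theta**heads * (1.0 - theta) ** tails
    # Evidence p(x) = integral of p(x | theta) p(theta) d theta, done numerically.
    evidence = trapezoid(likelihood * prior, theta)
    posterior = likelihood * prior / evidence
    return theta, posterior, evidence


theta, posterior, evidence = grid_posterior(heads=7, tails=3)
posterior_mean = trapezoid(theta * posterior, theta)
print(f"evidence = {evidence:.6f}, posterior mean = {posterior_mean:.4f}")
```

With 7 heads and 3 tails the exact posterior is Beta(8, 4), so the grid result can be checked against the exact evidence $B(8, 4) = 1/1320$ and posterior mean $8/12$.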

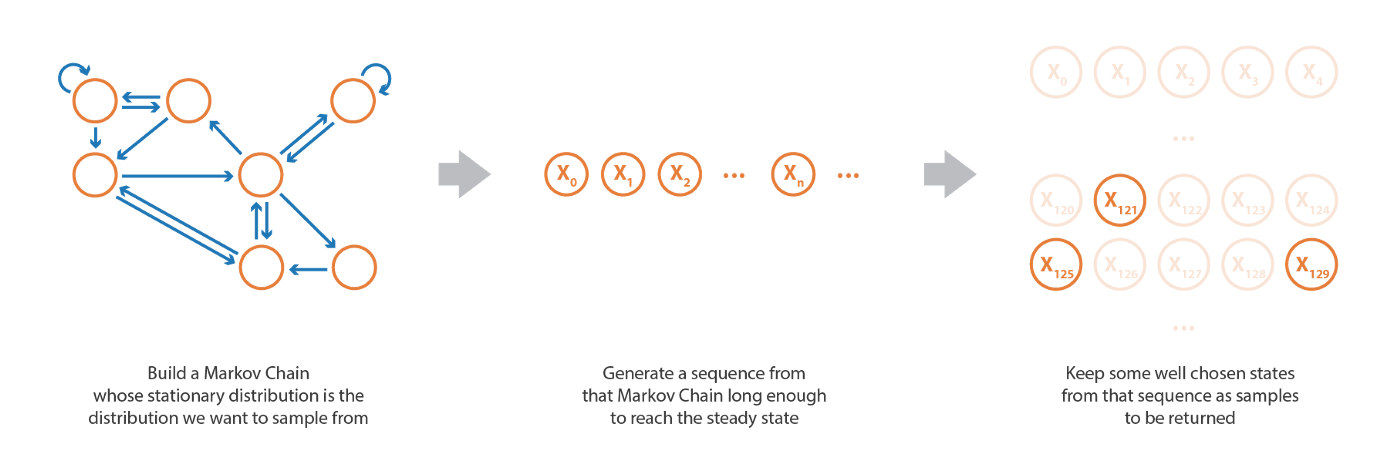

Markov Chain Monte Carlo (MCMC) – A sampling-based approach

- Markov chain Monte Carlo (MCMC) methods construct a Markov chain whose stationary distribution is the distribution to be sampled, and use the states of the chain as samples. MCMC is insensitive to whether the target distribution is normalized: it can sample even from an unnormalized density.
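To make the normalization point concrete, here is a minimal random-walk Metropolis sketch (one particular MCMC algorithm; the text does not name a specific one). It only ever evaluates the log of an unnormalized density, because the acceptance ratio $g(x')/g(x)$ cancels any constant factor. The target (a Gaussian with mean 1 multiplied by an arbitrary constant 7), the step size and the burn-in length are illustrative assumptions.

```python
import numpy as np


def log_unnormalized_target(x):
    """log g(x) for g(x) = 7 * exp(-(x - 1)^2 / 2): a N(1, 1) density times an arbitrary constant."""
    return np.log(7.0) - 0.5 * (x - 1.0) ** 2


def random_walk_metropolis(log_g, x0, n_samples, step=1.0, burn_in=1_000, seed=0):
    """Random-walk Metropolis sampler that needs only an unnormalized log density."""
    rng = np.random.default_rng(seed)
    x = x0
    log_gx = log_g(x)
    samples = np.empty(n_samples)
    for i in range(burn_in + n_samples):
        proposal = x + step * rng.standard_normal()
        log_gp = log_g(proposal)
        # Accept with probability min(1, g(proposal) / g(x)); the constant 7 cancels here.
        if np.log(rng.random()) < log_gp - log_gx:
            x, log_gx = proposal, log_gp
        if i >= burn_in:
            samples[i - burn_in] = x
    return samples


samples = random_walk_metropolis(log_unnormalized_target, x0=0.0, n_samples=50_000)
print(samples.mean(), samples.std())  # should be close to 1 and 1
```

Multiplying `log_unnormalized_target` by a different constant (adding a different `np.log(...)` term) leaves the chain unchanged, which is the property described above.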

Variational Inference (VI) – An approximation-based approach

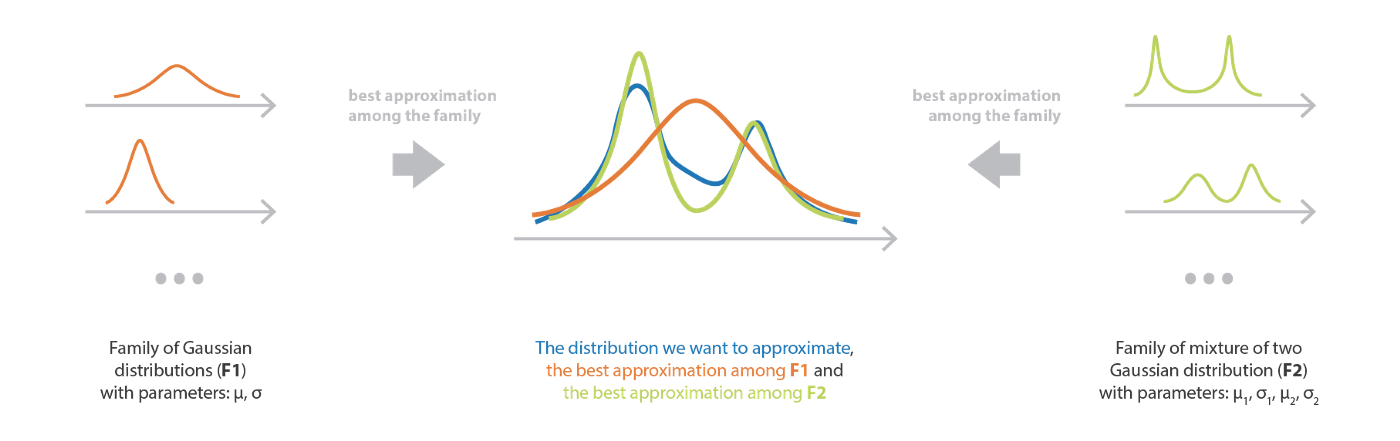

The approximation approach

- Unlike MCMC, which samples by running a Markov chain, variational inference searches a specified family of probability distributions for the member that best approximates the complex distribution to be sampled. In effect, it solves an optimization problem.

- Specifically, one first defines a parameterized family of probability distributions, in which each distribution is determined by its parameters (e.g. a normal distribution is controlled by $\mu$ and $\sigma$).

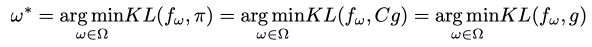

Then one searches $F_\Omega$ for the distribution closest to the distribution to be sampled, i.e. the optimal parameter $\omega^*$, by solving the following optimization problem:

$$\omega^* = \arg\min_{\omega \in \Omega} E(f_\omega, \pi)$$

where $f_\omega \in F_\Omega$ is the member of the family with parameter $\omega$, $\pi$ is the distribution to be sampled, and $E(p, q)$ measures the distance between two probability distributions. In variational inference, $E(p, q)$ is the KL divergence and the optimization is carried out by gradient descent (because minimizing the KL divergence is insensitive to the normalization of $\pi$, variational inference does not require the distribution to be sampled to be normalized).

Family of distributions

- The choice of distribution family is in fact a very strong piece of prior information: it determines both the bias of the approximation to the target distribution and the complexity of the optimization. If the family is too simple, the bias of the approximation will be large but the optimization is easy; conversely, a richer family gives a smaller bias but a more complicated optimization. We therefore need to strike a balance between bias and complexity.

Mean-field variational family

- In the mean-field variational family, all components of the random vector are independent, so the probability density function can be written as:

$$f_\omega(z) = \prod_{j=1}^{m} f_j(z_j; \omega_j)$$

where $z$ is an $m$-dimensional random vector, $f_j$ is the probability density function of its $j$-th component $z_j$, and $\omega = (\omega_1, \dots, \omega_m)$ collects the parameters of the factors.
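As an illustration of both the mean-field family and the bias discussed above, the sketch below fits a two-factor mean-field Gaussian $f_1(z_1) f_2(z_2)$ to a correlated bivariate Gaussian target. It uses coordinate-ascent updates (CAVI), a standard way to optimize mean-field families that this article does not itself describe, together with the known closed-form result for a Gaussian target with precision matrix $\Lambda$: each optimal factor is Gaussian with variance $1/\Lambda_{jj}$. The target mean and covariance are arbitrary assumptions.

```python
import numpy as np


def mean_field_gaussian_cavi(mu, sigma, n_sweeps=100):
    """Coordinate-ascent mean-field fit q(z) = prod_j q_j(z_j) to the Gaussian N(mu, sigma).

    For a Gaussian target with precision matrix lam, the optimal factor q_j is
    Gaussian with variance 1 / lam[j, j] and mean
    mu[j] - (1 / lam[j, j]) * sum_{k != j} lam[j, k] * (m[k] - mu[k]).
    """
    lam = np.linalg.inv(sigma)
    m = np.zeros_like(mu)  # initial means of the factors
    for _ in range(n_sweeps):
        for j in range(len(mu)):
            others = [k for k in range(len(mu)) if k != j]
            m[j] = mu[j] - lam[j, others] @ (m[others] - mu[others]) / lam[j, j]
    variances = 1.0 / np.diag(lam)
    return m, variances


mu = np.array([1.0, -1.0])
sigma = np.array([[1.0, 0.8], [0.8, 1.0]])
m, v = mean_field_gaussian_cavi(mu, sigma)
print(m, v)  # means approximately equal mu; variances approximately 0.36, below the true 1.0
```

The factor means recover the target mean, but each factor's variance (about 0.36) is much smaller than the true marginal variance (1.0): independent factors cannot represent the correlation, which is the kind of bias a too-simple family introduces.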

Kullback-Leibler divergence

- When searching for an approximation of the distribution to be sampled, we want the optimization to be insensitive to the normalization factor, and using the KL divergence as the measure satisfies this condition. Let $\pi$ be the distribution to be sampled, written as $\pi(z) = g(z)/C$, where $g$ is the unnormalized density and $C$ is the normalization factor. Then

$$\mathrm{KL}(f \,\|\, \pi) = \mathbb{E}_{z \sim f}\left[\log \frac{f(z)}{\pi(z)}\right] = \mathbb{E}_{z \sim f}[\log f(z)] - \mathbb{E}_{z \sim f}[\log g(z)] + \log C$$

The term $\log C$ does not depend on $f$. Therefore, when the KL divergence is used as the measure, the optimization is insensitive to the normalization factor and we do not need to normalize the distribution to be sampled:

$$\arg\min_{f \in F_\Omega} \mathrm{KL}(f \,\|\, \pi) = \arg\min_{f \in F_\Omega} \left(\mathbb{E}_{z \sim f}[\log f(z)] - \mathbb{E}_{z \sim f}[\log g(z)]\right)$$
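The claim that the KL objective ignores the normalization factor can be checked numerically. A minimal sketch, assuming a one-dimensional Gaussian family $f = \mathcal{N}(m, s^2)$ and an arbitrary unnormalized Gaussian target $g$ (both illustrative choices): it evaluates $\mathbb{E}_f[\log f] - \mathbb{E}_f[\log g]$ by quadrature on a grid, once with $g$ and once with the normalized $\pi = g/C$. For every candidate the two objectives differ by the same constant $\log C$, so they pick the same minimizer.

```python
import numpy as np

z = np.linspace(-15.0, 15.0, 20_001)  # quadrature grid
dz = z[1] - z[0]


def integrate(y):
    """Trapezoidal rule on the fixed uniform grid z."""
    return dz * (y.sum() - 0.5 * (y[0] + y[-1]))


def log_normal_pdf(x, m, s):
    return -0.5 * np.log(2.0 * np.pi * s**2) - 0.5 * ((x - m) / s) ** 2


def objective(m, s, log_target):
    """E_f[log f] - E_f[log target] for f = N(m, s^2), computed by quadrature."""
    log_f = log_normal_pdf(z, m, s)
    return integrate(np.exp(log_f) * (log_f - log_target))


# Illustrative unnormalized target g(z) = 5 * exp(-(z - 2)^2 / (2 * 1.5^2)).
log_g = np.log(5.0) - 0.5 * ((z - 2.0) / 1.5) ** 2
C = integrate(np.exp(log_g))  # normalization factor
log_pi = log_g - np.log(C)  # normalized target pi = g / C

candidates = [(m, s) for m in np.linspace(0.0, 4.0, 9).tolist() for s in (0.5, 1.0, 1.5, 2.0)]
unnormalized = np.array([objective(m, s, log_g) for m, s in candidates])
normalized = np.array([objective(m, s, log_pi) for m, s in candidates])

print(np.allclose(normalized - unnormalized, np.log(C)))  # constant shift log C
print(candidates[unnormalized.argmin()], candidates[normalized.argmin()])  # same minimizer
```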

Optimisation process and intuition

- The optimization problem above can be solved with gradient descent and other optimization methods.
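One common way to run gradient descent on this objective, not necessarily the one the author has in mind, is the reparameterization trick: write $z = m + s\,\varepsilon$ with $\varepsilon \sim \mathcal{N}(0, 1)$ and estimate the gradient of $\mathbb{E}_f[\log f] - \mathbb{E}_f[\log g]$ by Monte Carlo. The sketch below assumes a Gaussian family $f = \mathcal{N}(m, s^2)$ with $s = e^{\rho}$ and a hypothetical unnormalized Gaussian target; only $\frac{d}{dz}\log g(z)$ is needed, so the normalization factor never appears. The learning rate, step count and sample size are illustrative choices.

```python
import numpy as np

A, B = 3.0, 2.0  # hypothetical target: g(z) = 10 * exp(-(z - A)^2 / (2 B^2)), unnormalized


def grad_log_g(z):
    """d/dz log g(z); the constant factor 10 (and any normalizer) drops out."""
    return -(z - A) / B**2


def fit_gaussian_vi(n_steps=2_000, n_mc=200, lr=0.05, seed=0):
    """Stochastic gradient descent on E_f[log f] - E_f[log g] for f = N(m, exp(rho)^2)."""
    rng = np.random.default_rng(seed)
    m, rho = 0.0, 0.0
    for _ in range(n_steps):
        s = np.exp(rho)
        eps = rng.standard_normal(n_mc)
        z = m + s * eps  # reparameterized samples from f
        dlog_g = grad_log_g(z)
        # E_f[log f] = -rho - 0.5 * log(2 * pi * e): its gradient is 0 in m and -1 in rho.
        grad_m = -dlog_g.mean()
        grad_rho = -1.0 - (dlog_g * eps * s).mean()
        m -= lr * grad_m
        rho -= lr * grad_rho
    return m, np.exp(rho)


m, s = fit_gaussian_vi()
print(m, s)  # should approach the target's mean A = 3 and standard deviation B = 2
```

Because the target here is itself Gaussian, the best member of the family is the target, so the result can be checked directly; for non-Gaussian targets the same loop returns the closest Gaussian in the KL sense.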

Intuition

- To better understand the optimization above, consider it in the Bayesian inference setting, where the target is the posterior $p(z \mid x) = p(x \mid z)\,p(z)/p(x)$ over latent variables $z$ given observed data $x$ (so $g(z) = p(x \mid z)\,p(z)$ and $C = p(x)$):

$$\begin{aligned} f^* &= \arg\min_{f \in F_\Omega} \mathrm{KL}\big(f(z) \,\|\, p(z \mid x)\big) \\ &= \arg\min_{f \in F_\Omega} \left(\mathbb{E}_{z \sim f}[\log f(z)] - \mathbb{E}_{z \sim f}[\log p(x \mid z)\,p(z)]\right) \\ &= \arg\min_{f \in F_\Omega} \left(\mathrm{KL}\big(f(z) \,\|\, p(z)\big) - \mathbb{E}_{z \sim f}[\log p(x \mid z)]\right) \\ &= \arg\max_{f \in F_\Omega} \left(\mathbb{E}_{z \sim f}[\log p(x \mid z)] - \mathrm{KL}\big(f(z) \,\|\, p(z)\big)\right) \end{aligned}$$

As the last line shows, the best approximate posterior makes the expected log-likelihood of the observed data $x$ as large as possible, while keeping the KL divergence between the approximate posterior and the prior as small as possible (a prior/likelihood balance).
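The prior/likelihood decomposition can be checked on a conjugate toy model where every term has a closed form. A minimal sketch, assuming a hypothetical model with prior $z \sim \mathcal{N}(0, 1)$, a single observation $x \mid z \sim \mathcal{N}(z, 1)$ and a Gaussian approximation $f = \mathcal{N}(m, s^2)$: the maximized quantity $\mathbb{E}_{f}[\log p(x \mid z)] - \mathrm{KL}(f \,\|\, p(z))$ should equal $\log p(x) - \mathrm{KL}(f \,\|\, p(z \mid x))$ for every $(m, s)$, so maximizing it is the same as minimizing the KL divergence to the true posterior $\mathcal{N}(x/2, 1/2)$.

```python
import numpy as np


def kl_gauss(m1, v1, m2, v2):
    """KL(N(m1, v1) || N(m2, v2)) for 1-D Gaussians given means and variances."""
    return 0.5 * (np.log(v2 / v1) + (v1 + (m1 - m2) ** 2) / v2 - 1.0)


def objective(x, m, s):
    """E_f[log p(x | z)] - KL(f || p(z)) for f = N(m, s^2), prior N(0, 1), likelihood N(z, 1)."""
    expected_loglik = -0.5 * np.log(2.0 * np.pi) - 0.5 * ((x - m) ** 2 + s**2)
    return expected_loglik - kl_gauss(m, s**2, 0.0, 1.0)


def log_evidence(x):
    """log p(x) for p(x) = N(x; 0, 2)."""
    return -0.5 * np.log(2.0 * np.pi * 2.0) - x**2 / 4.0


def log_evidence_minus_kl_to_posterior(x, m, s):
    """log p(x) - KL(f || p(z | x)), with exact posterior N(x / 2, 1 / 2)."""
    return log_evidence(x) - kl_gauss(m, s**2, x / 2.0, 0.5)


x = 1.3
for m, s in [(0.0, 1.0), (0.65, 0.5**0.5), (2.0, 0.3)]:
    lhs = objective(x, m, s)
    rhs = log_evidence_minus_kl_to_posterior(x, m, s)
    print(f"m={m:.2f} s={s:.3f}  objective={lhs:.6f}  log p(x) - KL(f||posterior)={rhs:.6f}")
```

The middle candidate is the exact posterior for $x = 1.3$: there the KL term to the posterior vanishes and the objective reaches its maximum value, $\log p(x)$.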

MCMC vs. VI

- MCMC and VI suit different applications. On the one hand, MCMC sampling is computationally expensive but has a smaller bias, so it is suitable when accurate results are needed and the time cost is not a concern. On the other hand, the choice of distribution family and the optimization process in VI introduce bias, so VI has more bias than MCMC but a lower computational cost, which makes it suitable for large-scale inference problems that require fast computation.

References

- Bayesian inference problem, MCMC and variational inference

- More about VI: Variational Inference: A Review for Statisticians

- More about MCMC: Introduction to Markov Chain Monte Carlo; An Introduction to MCMC for Machine Learning

- More about Gibbs sampling applied to LDA: Tutorial on Topic Modelling and Gibbs Sampling; Lecture note on LDA Gibbs Sampler

边栏推荐

猜你喜欢

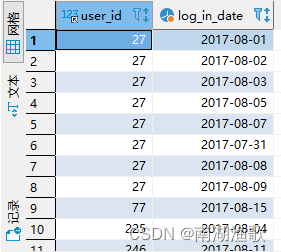

SQL interview question: find the maximum number of consecutive login days

如何通过W3school学习JS/如何使用W3school的JS参考手册

C#连接数据库完成增删改查操作

Summary of the use of qobjectcleanuphandler in QT

释放互联网价值的 Web3

视频压缩处理之ffmpeg用法

Web3 that unleashes the value of the Internet

Business layer modification - reverse modification based on the existing framework

毕业设计-基于Unity的餐厅经营游戏的设计与开发(附源码、开题报告、论文、答辩PPT、演示视频,带数据库)

怎样去除DataFrame字段列名

随机推荐

How many objects are created after new string ("hello")?

Shell script batch modify file directory permissions

Win11如何给系统盘瘦身?Win11系统盘瘦身方法

图神经网络入门 (GNN, GCN)

Business layer modification - reverse modification based on the existing framework

国内有正规安全的外汇交易商吗?

数据库学习笔记(SQL04)

leetcode 1423. Maximum points you can obtain from cards

Some error prone points of C language pointer

PCL calculation of center and radius of circumscribed circle of plane triangle

泰山OFFICE技术讲座:WORD奇怪的字体高度

How to change the status bar at the bottom of win11 to black? How to change the status bar at the bottom of win11 to black

列表加入计时器(正计时、倒计时)

Advanced - Introduction to business transaction design and development

F(x)构建方程 ,梯度下降求偏导,损失函数确定偏导调整,激活函数处理非线性问题

Grafana biaxial graph with your hands

Ffmpeg learning summary

G biaxial graph SQL script

Ffmpeg usage in video compression processing

MDM数据分析功能说明