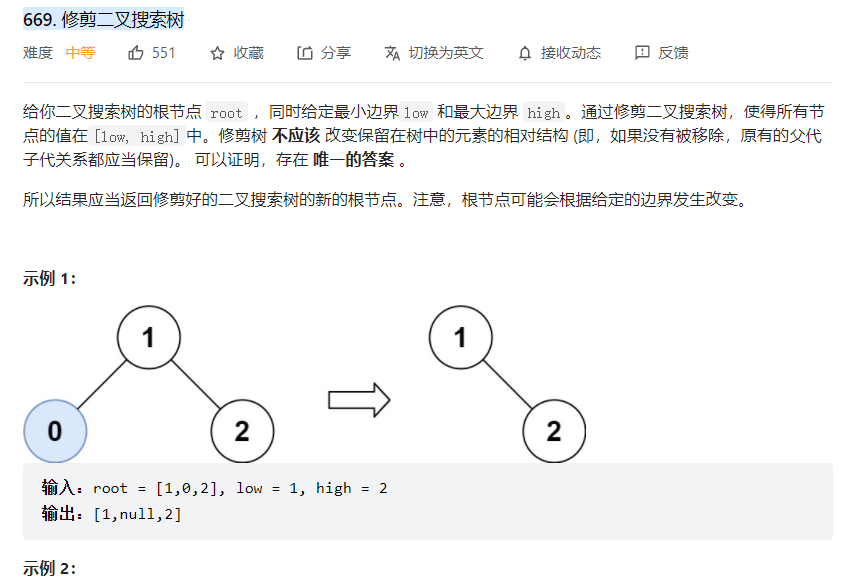

Intermediate Isolation Levels

Data stores that implement isolation level PL-2 (which does not guarantee sufficient consistency for reads and writes, for example consistency with respect to anti-dependencies) provide much weaker consistency guarantees than systems that implement PL-3 (which guarantees sufficient consistency for both reads and writes), but they perform much better. To bridge the gap between the two, a class of intermediate isolation levels (Intermediate Isolation Levels) has been proposed to strike a balance between them.

Isolation Level PL-2+

Such scenarios are very common in practice, for example inventory and order status in e-commerce, or bank account queries. Developers frequently rely on the ANSI-92 READ COMMITTED isolation level to keep the system consistent in these scenarios. However, consider the following transaction history:

Hb: r1(x0, -20) r2(x0, -20) r2(y0, 100) w2(x2, 100) w2(y2, -90) c2 r1(y2, -90) c1 [x0<<x2, y0<<y2]

Figure 14. This history violates the business constraint x + y > 0: T1 reads x0 = -20 and y2 = -90, so the state it observes has x + y = -110, even though both the initial state and the state after T2 commits satisfy the constraint.
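The arithmetic behind Hb can be replayed in a few lines of Python. The dictionary encoding (object name plus the number of the transaction that wrote the version) is an assumption made for this sketch. Both committed states satisfy x + y > 0; only the mixed view that T1 assembles violates it.

```python
# Versions keyed by (object, writer); writer 0 is the initial state, 2 is T2.
versions = {
    ("x", 0): -20, ("y", 0): 100,   # initial state
    ("x", 2): 100, ("y", 2): -90,   # state installed by T2
}

def constraint_holds(x, y):
    """The business constraint from the text: x + y > 0."""
    return x + y > 0

initial_ok = constraint_holds(versions[("x", 0)], versions[("y", 0)])
after_t2_ok = constraint_holds(versions[("x", 2)], versions[("y", 2)])

# T1 reads x0 before T2 commits and y2 after: r1(x0, -20) ... r1(y2, -90).
t1_view = (versions[("x", 0)], versions[("y", 2)])
print(initial_ok, after_t2_ok, sum(t1_view), constraint_holds(*t1_view))
# True True -110 False
```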

Hi: r1(x0, 60) r2(x0, 60) w2(x2, 100) C2 r3(x2, 100) w3(y3, 75) C3 r1(y3, 75) C1 [x0<<x2]

Figure 15. In the DSG(H) generated from this history, the cycle is not limited to two transactions; it spans three: T1 → T2 (anti-dependency on x), T2 → T3 (read dependency on x), and T3 → T1 (read dependency on y).
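The DSG for Hi can be derived mechanically. The Python sketch below assumes a simplified encoding: each operation is a (transaction, op, object, version-writer) tuple, version writer 0 stands for the initial state, all transactions commit, and the initial transaction is left out of the graph. The helper names build_dsg and find_path are illustrative, not from any library.

```python
from collections import defaultdict, deque

# History Hi as (txn, op, obj, version_writer); writer 0 is the initial state.
# All three transactions commit, so commit events are omitted.
Hi = [
    (1, "r", "x", 0), (2, "r", "x", 0), (2, "w", "x", 2),
    (3, "r", "x", 2), (3, "w", "y", 3), (1, "r", "y", 3),
]
# Version order per object, oldest first: x0 << x2 and y0 << y3.
VERSION_ORDER = {"x": [0, 2], "y": [0, 3]}

def build_dsg(history, order):
    """Return DSG edges (src, dst, kind) with kind in {'ww', 'wr', 'rw'}."""
    edges = set()
    for versions in order.values():
        for older, newer in zip(versions, versions[1:]):
            if older != 0:                         # T0 is not a node
                edges.add((older, newer, "ww"))
    for txn, op, obj, writer in history:
        if op != "r":
            continue
        if writer not in (0, txn):                 # read dependency: writer -> reader
            edges.add((writer, txn, "wr"))
        versions = order[obj]
        nxt = versions.index(writer) + 1
        if nxt < len(versions) and versions[nxt] != txn:
            edges.add((txn, versions[nxt], "rw"))  # anti-dependency: reader -> next writer
    return edges

def find_path(edges, start, goal):
    """Breadth-first search; returns the node list start..goal or None."""
    adj = defaultdict(list)
    for src, dst, _ in edges:
        adj[src].append(dst)
    prev = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in adj[node]:
            if nxt not in prev:
                prev[nxt] = node
                queue.append(nxt)
    return None

edges = build_dsg(Hi, VERSION_ORDER)
# Close a cycle through the anti-dependency edge T1 -> T2.
cycle = [1] + find_path(edges, 2, 1)
print(sorted(edges), cycle)
# [(1, 2, 'rw'), (2, 3, 'wr'), (3, 1, 'wr')] [1, 2, 3, 1]
```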

The examples above show that PL-2 is not enough to provide the consistency the system requires, so PL-2 has to be strengthened. PL-2+ is the weakest such strengthening of PL-2. Under PL-2+, the data store only needs to guarantee that a client observes a consistent state when it reads; it is intended for scenarios that do not require PL-3. Assume the integrity constraints defined by the application are correct, so the data store remains consistent after each related transaction commits. Further, if an update transaction Ti observes a state that satisfies the integrity constraints and would run to completion in isolation, then even though it modifies the committed state, the application's integrity constraints still hold after Ti commits. To rule out the scenarios above in such a system, the system must provide basic consistency, a notion introduced by Weihl. A transaction Tj is basically consistent if the values it reads are the result of a serial execution of some subset of committed update transactions, where each update transaction in that serial execution reads the same values it read in the actual concurrent history. Under this definition, if the serial execution of those committed update transactions always yields a consistent state, then every observation Tj makes of the data store is consistent.

Tj therefore cannot read from an arbitrary set of transactions: it must read the results of a set of transactions that forms a serial execution, and it must see the updates of every committed transaction at each step of that sequence. The key to basic consistency is preventing a transaction from missing the updates of the transactions it depends on (Missing Transaction Updates). A missing transaction update occurs when a transaction Tj reads a version xj of an object that has already been made obsolete (xj << xk) by another transaction Tk's write to the same object, and Tj cannot detect this.

No Dependency Misses (No-Depend-Misses)

Analyzing the relationships between transactions shows that missing transaction updates can be avoided as long as there are no dependency misses (No-Depend-Misses): if Tj depends on Tk through any chain of dependencies, Tj does not miss Tk's updates. PL-3 guarantees that there are no dependency misses (for both forward dependencies and anti-dependencies) [Chan, Gray]. PL-2+ achieves the same effect by relaxing PL-3's restrictions: in addition to G1, it proscribes G-single.

Proscribed phenomena:

G1, G-single

G-single

A history exhibits G-single if the DSG(H) generated from it contains a directed cycle with exactly one anti-dependency edge (Single Anti-dependency Cycles). For example, history H0, described earlier in the PL-3 section (Figure 11), exhibits G-single and therefore does not satisfy PL-2+. By proscribing G-single, the system guarantees no dependency misses (every cycle that contains exactly one anti-dependency edge is excluded).
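The G-single test reduces to a reachability question: for every anti-dependency edge Ti → Tj, check whether Tj can get back to Ti through read and write dependencies only. The following Python sketch applies that check to Hb and to H0 (Figure 11), using DSG edges worked out by hand. The edge-tuple encoding and the function names are our own, and the variant history is hypothetical.

```python
from collections import defaultdict

def reachable(edges, src, dst, kinds):
    """Depth-first search from src to dst using only edges whose kind is in `kinds`."""
    adj = defaultdict(list)
    for s, d, kind in edges:
        if kind in kinds:
            adj[s].append(d)
    seen, stack = set(), [src]
    while stack:
        node = stack.pop()
        if node == dst:
            return True
        if node not in seen:
            seen.add(node)
            stack.extend(adj[node])
    return False

def has_g_single(edges):
    """G-single: some cycle contains exactly one anti-dependency (rw) edge.
    For each rw edge u -> v, look for a path v -> u made of ww/wr edges only."""
    return any(kind == "rw" and reachable(edges, v, u, {"ww", "wr"})
               for u, v, kind in edges)

# DSG edges derived by hand (T0, the initial state, is not a node).
hb = {(1, 2, "rw"),   # T1 read x0; T2 installed the next version x2
      (2, 1, "wr")}   # T1 read y2, written by T2
h0 = {(1, 2, "rw"),   # T1 read x0; T2 installed the next version x2
      (2, 1, "ww")}   # x2 << x1
# Hypothetical variant of Hb in which T1 reads y0 instead of y2: no cycle.
hb_variant = {(1, 2, "rw")}

print(has_g_single(hb), has_g_single(h0), has_g_single(hb_variant))  # True True False
```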

PL-2+ rules out phantom reads (a phantom read involves an anti-dependency). However, it cannot fully prevent inconsistent data store states caused by updates; for example, the common write-skew problem (see the history below) is not ruled out.

Hs: r1(x0, 1) r1(y0, 5) r2(x0, 1) r2(y0, 5) w1(x1, 4) C1 w2(y2, 8) C2 [x0<<x1, y0<<y2]

Figure 16. Write skew arises from a cycle of anti-dependencies: the cycle contains more than one anti-dependency edge, and the writes on the two sides of each direct anti-dependency modify different data objects. This shows that PL-2+ does not require a transaction to read from a complete state that includes every update; it only requires reading from a state that includes the updates of all transactions the reader has observed (different transactions may observe different transactions, and hence different states).
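Running the same reachability idea on Hs shows why PL-2+ lets write skew through. The DSG has a cycle, but closing it requires two anti-dependency edges, so G-single is not exhibited even though the history is not serializable. A cycle of this kind, with more than one anti-dependency, is what Adya calls G2 and is proscribed only at PL-3. The edges below were derived by hand, and the helper name is illustrative.

```python
from collections import defaultdict

def reachable(edges, src, dst, kinds):
    """Depth-first search from src to dst using only edges whose kind is in `kinds`."""
    adj = defaultdict(list)
    for s, d, kind in edges:
        if kind in kinds:
            adj[s].append(d)
    seen, stack = set(), [src]
    while stack:
        node = stack.pop()
        if node == dst:
            return True
        if node not in seen:
            seen.add(node)
            stack.extend(adj[node])
    return False

# DSG(Hs), derived by hand from x0 << x1 and y0 << y2.
hs = {(1, 2, "rw"),   # T1 read y0; T2 installed the next version y2
      (2, 1, "rw")}   # T2 read x0; T1 installed the next version x1

rw_edges = [(u, v) for u, v, kind in hs if kind == "rw"]
# G-single: an rw edge closed into a cycle by ww/wr edges only.
g_single = any(reachable(hs, v, u, {"ww", "wr"}) for u, v in rw_edges)
# Any dependency cycle at all (the closing path may use further rw edges).
has_cycle = any(reachable(hs, v, u, {"ww", "wr", "rw"}) for u, v in rw_edges)

print(g_single, has_cycle)  # False True
```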

Client perspective:

For applications, PL-2+ is well suited to read-only transactions and to scenarios in which programmers can control the writes that might affect the consistency of the data store (for example, e-commerce product display or low-frequency, read-heavy trading). Because a PL-2+ transaction must observe the changes made by every transaction it depends on, PL-2+ preserves causal consistency in this sense.

Isolation Level PL-CS (Cursor Stability)

A cursor, like a pointer, is a way of referencing a specific data object: it can be positioned at any location in a query's result set, letting the user process the data at that location. Most commercial data stores (databases) offer a cursor-based mechanism for ensuring consistency: the well-known cursor stability (Cursor Stability) [Dat90]. Under cursor stability, a cursor references the particular data object (for example, a row) that a transaction is accessing, and a transaction can have multiple cursors. When a transaction Ti accesses a data object through a cursor, it does not release the read lock immediately after the read, similar to REPEATABLE READ; instead it holds the read lock until the cursor moves away or the transaction commits. If the transaction then updates the data, the lock is upgraded to a write lock. Because the read lock on the object is held the whole time, lost updates are ruled out (another transaction that wants to update the object must wait for the current transaction to release its read lock). Cursor stability also imposes no time-related constraints.

In history H0 from the PL-3 section (see Transactions (Multiple Objects, Multiple Operations), Part 1), two transactions T1 and T2 both update the single data object x, and T2's update is lost.

H0: r1(x0, 20) r2(x0, 20) w2(x2, 26) C2 w1(x1, 25) C1 [x0<<x2<<x1]

Figure 17. This cannot happen in a system that uses cursor stability: T1 holds a read lock on x through its cursor, so T2 cannot modify x (the step w2(x2, 26) cannot execute) until T1 commits or moves its cursor away. Because the effect arises through a cursor's reference to a specific data object, DSG(H) can be specialized slightly. Instead of edges that refer to transactions as a whole, each directed edge is labeled with the single data object that gives rise to it, forming the labeled DSG (Labeled DSG(H), LDSG).
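The locking behavior described above can be modeled with a toy lock table. This is a simplified Python sketch, not the lock manager of any real database. The class name, the one-object-per-cursor model, and the choice to abort T2 by hand when the two upgrade requests deadlock are all assumptions made for illustration. The sketch replays the reads and writes of H0 with both reads going through cursors.

```python
class CursorStabilityLocks:
    """Toy lock table for cursor stability (illustrative only).

    A cursor holds a shared read lock on the object it currently rests on; the
    lock is released when the cursor moves or the transaction ends. A write
    upgrades to an exclusive lock that is held until the transaction ends."""

    def __init__(self):
        self.cursor_locks = {}  # (txn, cursor) -> object the cursor rests on
        self.write_locks = {}   # object -> txn holding the exclusive lock

    def _readers(self, obj):
        return {txn for (txn, _), o in self.cursor_locks.items() if o == obj}

    def fetch(self, txn, cursor, obj):
        """Move `cursor` onto `obj`; returns False if the caller would have to wait."""
        if self.write_locks.get(obj, txn) != txn:
            return False
        self.cursor_locks[(txn, cursor)] = obj  # overwriting releases the old position
        return True

    def write(self, txn, obj):
        """Upgrade to a write lock; blocked by other transactions' cursors or writes."""
        if self.write_locks.get(obj, txn) != txn or self._readers(obj) - {txn}:
            return False
        self.write_locks[obj] = txn
        return True

    def end(self, txn):
        """Commit or abort: release every cursor lock and write lock held by txn."""
        self.cursor_locks = {k: o for k, o in self.cursor_locks.items() if k[0] != txn}
        self.write_locks = {o: t for o, t in self.write_locks.items() if t != txn}


# Replay H0 with both reads going through cursors.
locks = CursorStabilityLocks()
steps = [
    locks.fetch(1, "c1", "x"),  # r1(x0, 20): T1's cursor rests on x
    locks.fetch(2, "c2", "x"),  # r2(x0, 20): shared, so allowed
    locks.write(2, "x"),        # w2(x2, 26): must wait, T1's cursor is on x
    locks.write(1, "x"),        # w1(x1, 25): must wait on T2's cursor (deadlock)
]
locks.end(2)                       # a real system would abort a victim; here T2
steps.append(locks.write(1, "x"))  # T1's upgrade now succeeds
locks.end(1)                       # commit T1
print(steps)  # [True, True, False, False, True]
```

In this run T2 is aborted instead of having its update silently overwritten, which is the lost update that H0 exhibits.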

Proscribed phenomena:

G1, G-cursor(x) (Labeled Single Anti-dependency Cycles)

G-cursor(x) (Labeled Single Anti-dependency Cycles)

A history exhibits G-cursor(x) if the LDSG(H) generated from it contains a directed cycle consisting of exactly one anti-dependency edge and one or more write-dependency edges, where every edge in the cycle is labeled x. Because PL-CS proscribes G1 and G-cursor(x), history H0 above (an anti-dependency edge T1 → T2 and a write-dependency edge T2 → T1, both labeled x) cannot occur in a system that implements cursor stability.
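Checking G-cursor(x) differs from the G-single check in two ways: edges are filtered to those labeled x, and the path closing the cycle must use at least one write-dependency edge. A hedged Python sketch follows, with LDSG edges for H0 and for Hb (from the PL-2+ discussion) worked out by hand. The four-tuple edge encoding and the function name are our own assumptions. Hb's cycle mixes the labels x and y, so it does not exhibit G-cursor for either object.

```python
from collections import defaultdict

def has_g_cursor(labeled_edges, obj):
    """G-cursor(obj): an LDSG cycle whose edges are all labeled obj, containing
    exactly one anti-dependency (rw) edge and at least one write-dependency (ww) edge."""
    same = [(s, d, k) for s, d, k, label in labeled_edges if label == obj]
    adj = defaultdict(list)
    for s, d, k in same:
        if k in ("ww", "wr"):
            adj[s].append((d, k))
    for u, v, k in same:
        if k != "rw":
            continue
        # Walk from v back to u over non-rw edges, tracking whether a ww edge was used.
        stack, seen = [(v, False)], set()
        while stack:
            node, used_ww = stack.pop()
            if node == u:
                if used_ww:
                    return True
                continue
            if (node, used_ww) in seen:
                continue
            seen.add((node, used_ww))
            for nxt, kind in adj[node]:
                stack.append((nxt, used_ww or kind == "ww"))
    return False

# LDSG edges (src, dst, kind, label), derived by hand.
h0 = {(1, 2, "rw", "x"),   # T1 read x0; T2 installed x2
      (2, 1, "ww", "x")}   # x2 << x1
hb = {(1, 2, "rw", "x"),   # T1 read x0; T2 installed x2
      (2, 1, "wr", "y")}   # T1 read y2

print(has_g_cursor(h0, "x"), has_g_cursor(hb, "x"), has_g_cursor(hb, "y"))  # True False False
```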

Client perspective:

For applications, PL-CS guarantees that once a data item (row) in a data object has been changed by one process or thread, no other process or thread can read it until the changing transaction commits. It also guarantees that the current row of each updatable cursor is not changed by another application process. Data read during a unit of work that lies outside the cursor's current position can be changed by another application process (the cursor has already released it).

Isolation Level PL-2L (Lock-Based PL-2)

Data stores in the early ANSI-92 era generally implemented READ COMMITTED with locks (long write locks and short read locks). Because locking provides stronger consistency than READ COMMITTED actually requires, Adya defined a separate isolation level, PL-2L, that captures the guarantees specific to lock-based implementations. This matters when an application migrates between data store platforms, especially from an old platform to a new one: many newer systems implement READ COMMITTED not with pessimistic locking but with other concurrency control methods, such as optimistic concurrency control. PL-2L lets programmers notice the subtle differences between the old and new platforms' READ COMMITTED implementations and amend existing code so the system keeps working.

Lock Monotonicity (lock-monotonicity property)

A data store that implements the lock-based PL-2L isolation level must provide lock monotonicity (the lock-monotonicity property). If transaction Ti has an event ri(xj) in the history (Ti reads the version xj written by Tj), then after this event the data store guarantees that Ti observes the effects on the system (for example, state updates) of Tj, on which Ti has a read dependency, and of every transaction Tj depends on. If every record in the system's transaction history is numbered, the numbers of the records Ti observes during its execution increase monotonically. With locking, when Ti tries to read a data object xj it must first acquire a short read lock. Because locks are mutually exclusive, if Tj was the last transaction to modify x (producing xj), Tj must already have committed; otherwise Tj would still hold its write lock and Ti could not perform the read. Likewise, every transaction Tj depends on must also have committed.

Proscribed phenomena:

G1, G-monotonic

G-monotonic

A history exhibits G-monotonic if the USG(H, Ti) generated from it contains a cycle with an anti-dependency edge that starts at a read node ri(xj) (or a predicate read, for example ri(Dept=Sales: x1; y2)) and points to some other transaction Tk, together with any number of order or dependency edges. Such a history does not provide monotonic reads (Monotonic Reads). By proscribing G-monotonic, the system achieves lock monotonicity (the lock-monotonicity property).

Unfolded Serialization Graph (USG)

As with the other isolation levels, PL-2L reflects its particular guarantees by adapting DSG(H); the adaptation it uses is the unfolded serialization graph (Unfolded Serialization Graph, USG). Because a lock is associated with a read operation of a transaction Ti, USG(H, Ti) denotes the USG of history H with respect to Ti. Like the DSG, the USG keeps every committed transaction except Ti as a node. Because Ti's operations are associated with locks, Ti is split into multiple nodes, one for each of its read and write events. The directed edges in the USG represent the relationships between Ti's read and write events and the other transactions. Take the following history H2L as an example:

H2L: w1(x1,1) w1(y1,1) C1 w2(y2,2) w2(x2,2) w2(z2,2) r3(x2,2) w3(z3,3) r3(y1,1) C2 C3 [x1<<x2, y1<<y2, z2<<z3]

Figure 18. In a data store that provides lock monotonicity, when a transaction Ti in the history has a read event ri(xj) or a predicate read (for example ri(Dept=Sales: x1; y2)), the data store attaches the lock to that read event. In the USG, Ti's operations are decomposed into separate events, and directed edges are added between the other transactions and Ti's read events according to the type of dependency between them. Consecutive events of Ti are connected by order edges in the order in which they occur in Ti, each pointing from the earlier event to the later one. The USG of H2L therefore contains the cycle T2 → r3(x2,2) → w3(z3,3) → r3(y1,1) → T2, whose last edge is an anti-dependency (T2 installs y2, the version after y1), so H2L does not satisfy PL-2L.
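The USG construction and the G-monotonic check can be sketched as follows. Assumptions: operations are (transaction, op, object, version-writer) tuples, all transactions commit, the unfolded transaction's events are named like r3(x2), and the names build_usg and has_g_monotonic are illustrative, not taken from Adya's thesis or any library.

```python
from collections import defaultdict

# H2L as (txn, op, obj, version_writer); every transaction commits.
H2L = [
    (1, "w", "x", 1), (1, "w", "y", 1),
    (2, "w", "y", 2), (2, "w", "x", 2), (2, "w", "z", 2),
    (3, "r", "x", 2), (3, "w", "z", 3), (3, "r", "y", 1),
]
ORDER = {"x": [1, 2], "y": [1, 2], "z": [2, 3]}  # x1<<x2, y1<<y2, z2<<z3

def build_usg(history, order, ti):
    """Edges (src, dst, kind) of USG(H, ti); ti is unfolded into one node per event."""
    def node(txn, op, obj, ver):
        return f"{op}{txn}({obj}{ver})" if txn == ti else txn

    writer = {(obj, ver): node(t, op, obj, ver)      # node that installed each version
              for t, op, obj, ver in history if op == "w"}
    edges = set()
    for obj, vers in order.items():                   # ww edges along the version order
        for a, b in zip(vers, vers[1:]):
            if (obj, a) in writer and (obj, b) in writer:
                edges.add((writer[(obj, a)], writer[(obj, b)], "ww"))
    for t, op, obj, ver in history:
        if op != "r":
            continue
        reader = node(t, op, obj, ver)
        if ver != t and (obj, ver) in writer:         # read dependency
            edges.add((writer[(obj, ver)], reader, "wr"))
        vers = order[obj]
        i = vers.index(ver) + 1
        if i < len(vers) and vers[i] != t:            # anti-dependency
            edges.add((reader, writer[(obj, vers[i])], "rw"))
    events = [node(t, op, obj, ver) for t, op, obj, ver in history if t == ti]
    edges |= {(a, b, "order") for a, b in zip(events, events[1:])}
    return edges

def has_g_monotonic(edges, ti):
    """A cycle through an anti-dependency edge that leaves one of ti's read nodes."""
    adj = defaultdict(list)
    for s, d, _ in edges:
        adj[s].append(d)

    def reaches(src, dst):
        seen, stack = set(), [src]
        while stack:
            n = stack.pop()
            if n == dst:
                return True
            if n not in seen:
                seen.add(n)
                stack.extend(adj[n])
        return False

    return any(k == "rw" and isinstance(s, str) and s.startswith(f"r{ti}(")
               and reaches(d, s) for s, d, k in edges)

usg = build_usg(H2L, ORDER, ti=3)
print(has_g_monotonic(usg, 3))  # True: T2 -> r3(x2) -> w3(z3) -> r3(y1) -> T2
```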

The following history Hm satisfies PL-2L:

Hm: w1(x1,1) w1(y1,1) C1 w2(y2,2) w2(x2,2) r3(y1,1) r3(x2,2) C2 C3

Figure 19

Lock monotonicity ties a lock to a read event (a lock is associated with a data object through an operation), but it does not, and cannot, specify which read event; a lock can be associated with any read event, so the timing of that read matters. For example, suppose Ti modifies both x and y. Even with lock monotonicity, a transaction Tj can read Ti's version xi and also a version of y that Ti has not yet overwritten. This happens if Tj reads y before reading xi: the read lock is associated with the read of x, so it cannot guarantee that Ti had committed when Tj read y, and therefore cannot guarantee that the version of y Tj reads is the one Ti wrote. Lock monotonicity is thus weaker than PL-2+'s No-Depend-Misses and does not guarantee that Tj observes a consistent database state. No-Depend-Misses is independent of operation timing: no matter when Tj reads xi, Tj does not miss Ti's effects. So although PL-2L proscribes G-monotonic, it does not completely prevent a transaction's reads from missing other transactions' writes. In history Hm above, the lock attached to read event r3(x2,2) ensures that T3 does not ignore T2's write to x after r3(x2,2) occurs, yet T3 still misses T2's write event w2(y2,2).

This cannot happen under PL-2+, because G-single covers every cycle that contains a single anti-dependency, and G-monotonic is only a subset of those cases. PL-2+ is therefore independent of lock timing: as long as T3 depends on T2, PL-2+ does not allow T3 to miss the changes T2 made to the system state.
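The contrast between Hm's USG and its DSG can be checked directly. In the Python sketch below, the edges are derived by hand from Hm, assuming the version order x1 << x2 and y1 << y2 implied by the writes. Unfolding T3 leaves no cycle through the anti-dependency from r3(y1), so G-monotonic is absent. Collapsing T3 back into one node yields a single-anti-dependency cycle, that is, G-single, which PL-2+ proscribes. The helper name is illustrative.

```python
from collections import defaultdict

def reaches(edges, src, dst, kinds):
    """Depth-first search from src to dst over edges whose kind is in `kinds`."""
    adj = defaultdict(list)
    for s, d, k in edges:
        if k in kinds:
            adj[s].append(d)
    seen, stack = set(), [src]
    while stack:
        n = stack.pop()
        if n == dst:
            return True
        if n not in seen:
            seen.add(n)
            stack.extend(adj[n])
    return False

# USG(Hm, T3), derived by hand: T3 is unfolded into its two read events.
usg_hm = {
    (1, 2, "ww"),                   # x1 << x2 and y1 << y2
    (1, "r3(y1)", "wr"),            # r3(y1,1) reads T1's version
    ("r3(y1)", 2, "rw"),            # T2 installs y2, the version after y1
    (2, "r3(x2)", "wr"),            # r3(x2,2) reads T2's version
    ("r3(y1)", "r3(x2)", "order"),  # T3 reads y before x
}
# DSG(Hm): the same dependencies with T3 collapsed into a single node.
dsg_hm = {(1, 2, "ww"), (1, 3, "wr"), (3, 2, "rw"), (2, 3, "wr")}

# G-monotonic: an rw edge leaving a T3 read node that lies on some cycle.
g_monotonic = any(
    k == "rw" and isinstance(s, str)
    and reaches(usg_hm, d, s, {"ww", "wr", "rw", "order"})
    for s, d, k in usg_hm
)
# G-single: an rw edge closed into a cycle by ww/wr edges only.
g_single = any(k == "rw" and reaches(dsg_hm, d, s, {"ww", "wr"})
               for s, d, k in dsg_hm)

print(g_monotonic, g_single)  # False True
```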

Under PL-2L, when the read event is a predicate read (for example ri(Dept=Sales: x1; y2)), the lock associated with it covers not a single data object but all objects that match the predicate. The system therefore guarantees the following consistency properties for predicate-based reads:

1) If a predicate-based read in Ti observes the change Tj made to the system state, Ti observes the full effects of Tj and of every transaction Tj depends on. In the extreme case where Ti has only one read operation and that read covers every data object in the system, Ti observes a consistent database state and effectively obtains PL-2+. With a single read event, timing no longer matters, so No-Depend-Misses holds: every change made by Tj is observed by Ti.

2) If the system guarantees that predicate-based reads are atomic and implements the isolation level with locks, each predicate-based read executes only after acquiring a predicate read lock, so it can be treated as a transaction executed at PL-3.

The second guarantee is stronger than the first.

Client perspective:

For client applications, PL-2L is most commonly encountered in older systems that implement their isolation levels with locks. It is also useful in business scenarios where the code requires that the information obtained by a particular read does not change afterward.

Isolation Level PL-SI (Snapshot Isolation)

In Adya's definition, when a transaction Ti starts executing (for example, at its first operation event, start(Ti)), the system chooses a start point si for it. The system uses si as the reference for ordering Ti's start relative to the commit (end) points of other transactions (c(Ti) = commit(Ti) or abort(Ti)). Although an earlier point is often chosen for convenience (any earlier point is allowed), Ti's start point is in practice usually determined by business needs. It need not be the point immediately after the most recent commit (end) of another transaction before Ti starts. For example, if the business requires that no other transaction's updates be visible to Ti, then Ti's start point must precede every other transaction's commit.

Definition:

Adya defines snapshot isolation (Snapshot Isolation) in terms of snapshot reads and snapshot writes. His definition removes implementation-specific details and concerns only the relationships between the start and end points of the transactions in the history itself:

Snapshot reads:

All reads of Ti occur at its start point. If Ti's read event ri(xj) is recorded in history H, the following two conditions relate Ti to the other transactions (here <t denotes the time-precedes order):

1) Between Ti and Tj: Tj's commit event cj occurs before Ti's start event (cj <t si).

2) If, besides Ti and Tj, another transaction Tk also writes x (its event wk(xk) is recorded in history H), then either

Ti's start point precedes Tk's commit point (si <t ck), or

Tk's end point precedes Ti's start point (ck <t si) and version xk precedes xj (xk << xj).

Snapshot writes:

Concurrent transactions, that is, transactions Ti and Tj that run at the same time, must not modify the same data object. If events wi(xi) and wj(xj) are both recorded in history H, then Ti and Tj must execute serially (ci <t sj or cj <t si).

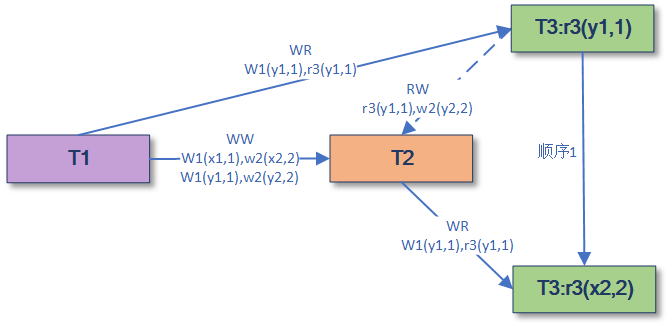

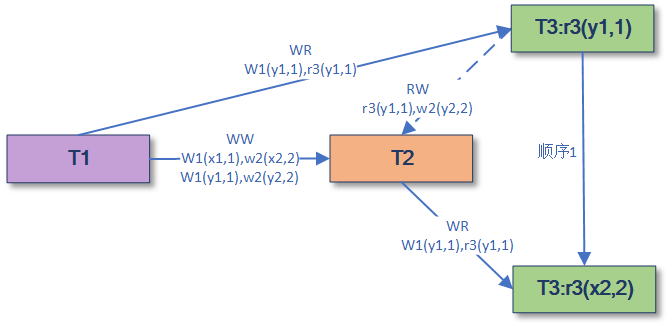

Figure 20. The first part of the figure illustrates case (1) of snapshot reads; the next two parts illustrate case (2). When Ti reads from Tj, the definition ensures that no transaction Tk that writes x commits (ck) between cj and si. This guarantees that the read event ri(xj) does not miss a newer committed version, which prevents anomalies caused by anti-dependencies.

Time-Precedes Order (time-precedes order)

Because the relationship between transactions' start points and commit points cannot be determined from the order in which events appear in the history, the transactions in history H are ordered by the time-precedes order, which is a partial order. The time-precedes order is derived from the history itself (for example, version records and operation records) to determine the order of each transaction's start and end; it is not based on operation timestamps. It has two parts:

Within a transaction: Ti's start point si precedes its commit point ci, written si <t ci (causal order only; concurrency is not considered, and this is not a total order).

Between transactions: for any transactions Ti and Tj in the system, if the storage system's scheduler places Tj's start point sj after Ti's commit point ci, this is written ci <t sj; otherwise sj <t ci. Which case applies can be determined from the read events defined under snapshot reads.

Because the time-precedes order on transactions is a partial order, the order of records in the history does not correspond one-to-one to the real times at which events occur (otherwise it would be a totally ordered record). The start and commit points of different transactions therefore cannot be related from the order of events in the history alone. For example:

Hsi: w1(x1) C1 w3(z3) C3 r2(x1) r2(z0) C2 [z0<<z3; c1 <t s2]. Event w3(z3) is recorded before r2(z0), and T3's commit is recorded before r2(x1), the first event of T2. Even so, the version order together with T2's read r2(z0) (T2 did not see C3) shows that the system did not place s2 after c3 in the time-precedes order: s2 <t c3.
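Adya's snapshot-read and snapshot-write conditions can be checked mechanically once start and commit points are placed in some total order that extends <t. The Python sketch below does this for Hsi. The integer positions are assumptions chosen for illustration (Adya's order itself is only partial), writer 0 stands for the initial state, and the function names are hypothetical. Placing s2 before c3 satisfies the snapshot-read conditions. Copying the recording order (s2 after c3) violates them, which is why the history forces s2 <t c3.

```python
from itertools import combinations

def snapshot_reads_ok(reads, start, commit, order):
    """Check each read (i, obj, j), i.e. ri(xj):
    (1) cj <t si, and (2) for every other writer k of obj:
        si <t ck, or (ck <t si and xk << xj)."""
    for i, obj, j in reads:
        versions = order[obj]
        if not commit[j] < start[i]:
            return False
        for k in versions:
            if k in (i, j):
                continue
            later = start[i] < commit[k]
            older = commit[k] < start[i] and versions.index(k) < versions.index(j)
            if not (later or older):
                return False
    return True

def snapshot_writes_ok(order, start, commit):
    """Transactions that write the same object must not overlap."""
    for versions in order.values():
        writers = [t for t in versions if t != 0]  # skip the initial state
        for a, b in combinations(writers, 2):
            if not (commit[a] < start[b] or commit[b] < start[a]):
                return False
    return True

# Hsi: w1(x1) C1 w3(z3) C3 r2(x1) r2(z0) C2, with x0 << x1 and z0 << z3.
order = {"x": [0, 1], "z": [0, 3]}
reads = [(2, "x", 1), (2, "z", 0)]

# Assumed positions in a total order extending <t; 0 = initial state.
# Placement A: s2 before c3.  Placement B: recording order, s2 after c3.
start_a, commit_a = {1: 0, 3: 2, 2: 3}, {0: -1, 1: 1, 3: 4, 2: 5}
start_b, commit_b = {1: 0, 3: 2, 2: 4}, {0: -1, 1: 1, 3: 3, 2: 5}

ok_a = snapshot_reads_ok(reads, start_a, commit_a, order)
ok_b = snapshot_reads_ok(reads, start_b, commit_b, order)
writes_ok = snapshot_writes_ok(order, start_a, commit_a)
print(ok_a, ok_b, writes_ok)  # True False True
```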

In addition, if the time-precedes order is tied to a unique physical time, that is, it matches the physical times at which transactions occur, client applications can obtain consistency guarantees stronger than PL-2+, approaching PL-3. Once the time-precedes order matches physical time, Ti must read the updates made by every transaction that committed before Ti's real start time.

Concurrent Transactions

Two transactions Ti and Tj are concurrent if each starts before the other ends: si <t cj and sj <t ci.

Figure 21. Identifying concurrent transactions: no particular relation is required between si and sj, or between ci and cj.

Start dependency (Start-Depends): if Tj's end point precedes Ti's start point (cj <t si), Ti start-depends on Tj.

Start-ordered serialization graph (Start-ordered Serialization Graph, SSG(H)): using start dependencies, DSG(H) is modified slightly to obtain SSG(H). The SSG keeps the same nodes and directed edges as the DSG and adds start-dependency edges (marked "s") as further directed edges. The following history satisfies snapshot isolation:

HSI: w1(x1,1) w1(y1,1) C1 w2(x2,2) r3(x1,1) w2(y2,2) r3(y1,1) C2 C3 [x1 << x2, y1<<y2; C1 <t s2, C1 <t s3]

Figure 22. This history satisfies snapshot isolation. T3's first event r3(x1,1) shows that T1 committed before T3 started, while T2's commit point must come after T3's start point. T2 therefore anti-depends on T3 (T3 read x1, which T2 overwrites), and T3 cannot observe T2's update to x.

Proscribed phenomena:

G-SIa: Interference, G-SIb: Missed Effects

G-SIa: Interference

A history exhibits G-SIa if its SSG(H) contains a read or write dependency edge from Ti to Tj without a start-dependency edge from Ti to Tj. Without a start dependency, nothing protects against interference between concurrent transactions, that is, read or write dependencies between transactions that run concurrently.

G-SIb: Missed Effects

A history exhibits G-SIb if its SSG(H) contains a directed cycle with exactly one anti-dependency edge. A cycle formed by one anti-dependency edge together with start-dependency and other dependency edges reveals whether Ti misses the updates of transactions that committed before Ti's start point.

Because it additionally takes start dependencies into account, proscribing G-SIb is stronger than proscribing G-single (as with consistency, the stricter the restriction, the stronger the guarantee).
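The two SSG phenomena can be checked with the same reachability idea used for G-single once start edges are added. In this Python sketch, the integer start and commit positions are assumptions chosen to match the stated constraints (for HSI: c1 <t s2, c1 <t s3, and s3 <t c2). The DSG edges are derived by hand. H0 is the lost-update history from the cursor stability section, with T1 and T2 assumed to be concurrent. HSI exhibits neither phenomenon; H0 exhibits both. Function names are illustrative.

```python
from collections import defaultdict

def start_edges(start, commit):
    """Tj start-depends on Ti when ci <t sj, giving SSG edge (i, j, 's')."""
    return {(i, j, "s") for i in commit for j in start
            if i != j and commit[i] < start[j]}

def reaches(edges, src, dst, kinds):
    """Depth-first search from src to dst over edges whose kind is in `kinds`."""
    adj = defaultdict(list)
    for s, d, k in edges:
        if k in kinds:
            adj[s].append(d)
    seen, stack = set(), [src]
    while stack:
        n = stack.pop()
        if n == dst:
            return True
        if n not in seen:
            seen.add(n)
            stack.extend(adj[n])
    return False

def g_sia(ssg):
    """Interference: a ww/wr edge Ti -> Tj with no start edge Ti -> Tj."""
    return any(k in ("ww", "wr") and (i, j, "s") not in ssg for i, j, k in ssg)

def g_sib(ssg):
    """Missed effects: a cycle with exactly one anti-dependency (rw) edge."""
    return any(k == "rw" and reaches(ssg, j, i, {"ww", "wr", "s"})
               for i, j, k in ssg)

# HSI: assumed positions s1 < c1 < s2 < s3 < c2 < c3.
hsi = {(1, 2, "ww"),   # x1 << x2, y1 << y2
       (1, 3, "wr"),   # T3 reads x1 and y1
       (3, 2, "rw")}   # T2 installs x2, the version after x1
hsi |= start_edges({1: 0, 2: 2, 3: 3}, {1: 1, 2: 4, 3: 5})

# H0 (lost update), T1 and T2 concurrent: s1 < s2 < c2 < c1.
h0 = {(2, 1, "ww"),    # x2 << x1
      (1, 2, "rw")}    # T1 read x0; T2 installed x2
h0 |= start_edges({1: 0, 2: 1}, {1: 3, 2: 2})

print(g_sia(hsi), g_sib(hsi), g_sia(h0), g_sib(h0))  # False False True True
```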

Client perspective:

Compared with PL-3, snapshot isolation only guarantees that all operations of Ti read from the same state s. It does not guarantee that s is the parent state (the immediately preceding state) of the state t produced after Ti executes; in other words, it does not guarantee that no other state exists between s (the snapshot) and t.

As shown in the figure below:

Figure 23. Under the snapshot-read definition, Ti's start point may precede Tk's commit point (si <t ck, with snapshot(Ti) ≤ start(Ti)), so the parent state of t is not s but the state k produced by Tk.

Snapshot-Based Isolation Protocols (snapshot-based protocols)

Depending on requirements and use cases, taking Adya's definition of snapshot isolation as the basis and adjusting its constraints yields several snapshot-based isolation levels of different strengths.

From the client's perspective, these snapshot-based protocols can be compared along three dimensions:

1) Time: whether the protocol's timestamps are based on logical time or physical time.

2) Staleness: whether the protocol allows stale snapshots (that is, whether the snapshot must contain the changes of all transactions committed before a transaction's start time).

3) State completeness: whether the snapshot must contain the complete state of the data store or only needs to be causally consistent.

1. Isolation Level PL-ANSI (ANSI Snapshot Isolation) /

Isolation Level PL-GSI (Generalized Snapshot Isolation, GSI) /

Isolation Level Clock-SI (Clock-Snapshot Isolation)

Isolation level PL-ANSI (ANSI Snapshot Isolation):

from Berenson Put forward [Berenson et al] Is the most original and well-known definition of snapshot isolation . One is based on ANSI Snapshots isolate running transactions Ti, It always comes from somewhere ( Logic ) moment ---- Initial timestamp (start-timestamp) Read data from the generated snapshot composed of the submitted data . ( let me put it another way , The initial timestamp of the snapshot is that the snapshot can be generated at the beginning of the transaction , This initial timestamp can be equal to the time of the first read operation of the transaction, or it can be generated at any logical time before it (ss(Ti)(snapshot(Ti)) ≤ si(start(Ti)) < ci(end(Ti)))). So other transactions are in this transaction T1 The update operation after the initial timestamp of cannot be transacted because it cannot affect the snapshot T1 Perceived . When a transaction T1 A submission timestamp will be set when submission is required (commit-timestamp) And submit . If business Ti When you need to submit , There is another concurrent transaction (Concurrent Transactions)T2( It's business T2 Initial timestamp and commit timestamp and transaction T1 The initial timestamp of and the submission timestamp of overlap ) Already for transaction T1 The data object to be updated has been updated and submitted , On a first come, first served basis ( That is, the first committed transaction wins ) To stop the transaction T1 To prevent the loss of updates .ANSI Snapshot isolation and Adya The biggest difference in the definition of snapshot isolation is ,Adya Is based on chronological order (time-precedes order) There is no direct relationship between the relationship between transactions and timestamp ,ANSI The snapshot isolation definition is more related to the operation of transactions , It is required that the related operations have a full order sort based on timestamp , Therefore ratio Adya The definition of snapshot isolation is stronger . 
To avoid blocking reads and thereby improve system throughput, ANSI snapshot isolation allows a transaction to use an out-of-date snapshot, provided the system can still supply the snapshot data as of the chosen start timestamp.
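The snapshot reads and first-committer-wins rule described above can be sketched with a toy multi-version store. This is a minimal in-memory sketch under stated assumptions: the class names `MVStore`, `Txn` and `WriteConflict` are hypothetical, a single logical counter stands in for the timestamp source, and the snapshot is taken at the start timestamp (picking an older timestamp would model the out-of-date snapshots the definition permits).

```python
from dataclasses import dataclass, field
from itertools import count


class WriteConflict(Exception):
    """Raised when the first-committer-wins rule aborts a transaction."""


class MVStore:
    """Toy multi-version store: each committed write is kept with its commit timestamp."""

    def __init__(self):
        self._clock = count(1)  # one logical clock for start and commit timestamps
        self.versions = {}      # key -> list of (commit_ts, value) in commit order

    def tick(self):
        return next(self._clock)

    def begin(self):
        return Txn(self, start_ts=self.tick())


@dataclass
class Txn:
    store: MVStore
    start_ts: int                               # snapshot(Ti) is the start timestamp here
    writes: dict = field(default_factory=dict)  # buffered writes, invisible to others

    def read(self, key):
        if key in self.writes:                  # a transaction sees its own writes
            return self.writes[key]
        # Latest version committed before the snapshot timestamp.
        visible = [v for ts, v in self.store.versions.get(key, []) if ts < self.start_ts]
        return visible[-1] if visible else None

    def write(self, key, value):
        self.writes[key] = value

    def commit(self):
        # First-committer-wins: abort if a concurrent transaction committed a
        # write to any of our keys after our start timestamp.
        for key in self.writes:
            if any(ts > self.start_ts for ts, _ in self.store.versions.get(key, [])):
                raise WriteConflict(key)
        commit_ts = self.store.tick()
        for key, value in self.writes.items():
            self.store.versions.setdefault(key, []).append((commit_ts, value))
        return commit_ts


store = MVStore()
t0 = store.begin(); t0.write("x", 10); t0.commit()
t1, t2 = store.begin(), store.begin()   # two concurrent transactions
t1.write("x", 11)
t1.commit()                             # T1 commits first and wins
print(t2.read("x"))                     # 10: T2 still reads its own snapshot
t2.write("x", 12)
try:
    t2.commit()
except WriteConflict as e:
    print("T2 aborted on", e)
```

Buffering writes until commit keeps uncommitted data out of every other snapshot; the conflict check only needs the commit timestamps of the keys the transaction wrote.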

Isolation level PL-GSI (Generalized Snapshot Isolation, GSI):

Starting from the observation that ANSI snapshot isolation allows reads from out-of-date snapshots, Elnikety, Fernando Pedone et al. applied snapshot isolation to distributed multi-replica systems and proposed generalized snapshot isolation (GSI). GSI also requires all operations to be totally ordered by timestamp, and it uses the ANSI definition's permission to read from an out-of-date snapshot: snapshot(Ti) need not be the latest, so the commit(Tj) whose writes Ti reads need not carry the largest timestamp among all commits in the history. It keeps the remaining properties that require a transaction to read from a consistent snapshot of committed data. Its consistency guarantee is therefore weaker than that of PL-3 (serializable) isolation. In exchange, by letting clients obtain snapshots from different replicas of the system, it reduces blocking and aborts of reads and writes and improves system efficiency. Compared with ANSI snapshot isolation, GSI defines the relevant events more precisely (again as a total order on the timestamps of the operations in the transaction history), and restates ANSI snapshot isolation in terms of these events:

snapshot(Ti): the time at which Ti's snapshot is taken

start(Ti): the start time of Ti (timestamp of its first operation)

commit(Ti): the commit time of Ti (timestamp of its commit operation)

abort(Ti): the abort time of Ti (timestamp of its abort operation)

end(Ti): the end time of Ti, i.e. commit(Ti) or abort(Ti) (the commit or abort timestamp)

GSI is defined by a read rule and a commit rule:

Read rule: whenever a read event ri(xj) appears in the transaction history H (Ti reads the version of x written by Tj, so Ti read-depends on Tj), the following two conditions must hold:

1) Tj has committed, and its commit event precedes Ti's snapshot: commit(Tj) < snapshot(Ti).

2) For every other committed transaction Tk that also has a write event wk(xk) in H, the commit of Tk must not fall between the commit of Tj and the snapshot of Ti; otherwise Ti would have skipped Tk's newer version of x. That is, either:

Tk commits after Ti's snapshot is taken (snapshot(Ti) < commit(Tk)), or

Tk commits before Tj (commit(Tk) < commit(Tj)).
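The two conditions of the read rule can be checked mechanically for a single read. This is a minimal sketch, assuming integer timestamps and a hypothetical `Txn` record holding only the fields the rule uses; it is not taken from the GSI paper.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Txn:
    name: str
    snapshot: int                  # snapshot(T)
    commit: Optional[int] = None   # commit(T); None if T has not committed
    writeset: set = field(default_factory=set)


def gsi_read_ok(ti, tj, key, history):
    """Check the GSI read rule for r_i(x_j): Ti reads the version of `key` written by Tj."""
    # Condition 1: Tj wrote the key and committed before Ti's snapshot.
    if tj.commit is None or key not in tj.writeset or not tj.commit < ti.snapshot:
        return False
    # Condition 2: no other committed writer Tk of the key commits between
    # commit(Tj) and snapshot(Ti); otherwise Ti skipped a newer visible version.
    for tk in history:
        if tk is ti or tk is tj or tk.commit is None or key not in tk.writeset:
            continue
        if not (ti.snapshot < tk.commit or tk.commit < tj.commit):
            return False
    return True


t1 = Txn("T1", snapshot=0, commit=2, writeset={"x"})
t2 = Txn("T2", snapshot=1, commit=4, writeset={"x"})
fresh = Txn("Ti_fresh", snapshot=5)   # snapshot taken after both commits
stale = Txn("Ti_stale", snapshot=3)   # older snapshot, which GSI permits
history = [t1, t2, fresh, stale]
print(gsi_read_ok(fresh, t1, "x", history))   # False: T2's newer version was skipped
print(gsi_read_ok(fresh, t2, "x", history))   # True
print(gsi_read_ok(stale, t1, "x", history))   # True: T2 committed after this snapshot
```

The stale case shows why GSI is "generalized": reading T1's version is legal as long as the snapshot was taken before T2 committed.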

Commit rule: when Ti commits, no other committed transaction Tj may impact it. Tj impacts Ti if the data sets the two transactions update intersect and Tj commits within Ti's lifetime from snapshot to commit, i.e. writeset(Ti) ∩ writeset(Tj) ≠ ∅ and snapshot(Ti) < commit(Tj) < commit(Ti).
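The "impacts" relation and the commit rule translate directly into a small predicate. A minimal sketch, assuming integer timestamps and a hypothetical `Txn` record; only committed transactions are passed in as potential conflicts.

```python
from dataclasses import dataclass, field


@dataclass
class Txn:
    name: str
    snapshot: int   # snapshot(T)
    commit: int     # commit(T)
    writeset: set = field(default_factory=set)


def impacts(tj, ti):
    """Tj impacts Ti: overlapping write sets AND Tj commits inside Ti's (snapshot, commit) window."""
    return bool(ti.writeset & tj.writeset) and ti.snapshot < tj.commit < ti.commit


def gsi_commit_ok(ti, committed):
    """GSI commit rule: Ti may commit only if no other committed transaction impacts it."""
    return not any(impacts(tj, ti) for tj in committed if tj is not ti)


ti = Txn("Ti", snapshot=2, commit=5, writeset={"x"})
print(gsi_commit_ok(ti, [Txn("Tj", 3, 4, {"x"})]))  # False: same key, commits inside the window
print(gsi_commit_ok(ti, [Txn("Tj", 3, 4, {"y"})]))  # True: disjoint write sets
print(gsi_commit_ok(ti, [Txn("Tj", 0, 1, {"x"})]))  # True: Tj committed before Ti's snapshot
```

Both halves of the condition must hold for a conflict: overlapping data alone, or overlapping time alone, is allowed.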

Because GSI, like ANSI snapshot isolation, still cannot prevent write skew and similar anomalies, it provides only a weaker consistency guarantee than PL-3 isolation. Since a transaction may observe an out-of-date snapshot, the system must, to commit update transactions correctly, check each update transaction's write set against the write sets of transactions that committed after its snapshot was taken, not only against earlier commits. To reach the stronger PL-3 (serializable) guarantee, a corresponding dynamic check is proposed: for any two transactions Ti and Tj, if Tj commits between Ti's snapshot and Ti's commit (snapshot(Ti) < commit(Tj) < commit(Ti)), then the data set read by Ti must not intersect the data set written by Tj. This rule guarantees that the histories produced by a system implementing GSI are serializable, but because it requires checking read sets it carries a significant performance penalty. A static condition is therefore also proposed: for any two update transactions Ti and Tj, either their write sets intersect, or neither transaction's read set intersects the other's write set (writeset(Ti) ∩ writeset(Tj) ≠ ∅ ∨ (readset(Ti) ∩ writeset(Tj) = ∅ ∧ writeset(Ti) ∩ readset(Tj) = ∅)).
In a system that satisfies this condition, two update transactions whose write sets intersect cannot commit concurrently under the GSI commit rule, so they necessarily execute serially. Two update transactions whose write sets are disjoint are guaranteed to have no overlap between one's read set and the other's write set, so there is no direct dependency between them.
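The dynamic rule and the static condition can be compared on the classic write-skew pattern. A minimal sketch, assuming a hypothetical `Txn` record with explicit read and write sets; the static condition uses only the sets (as it would for transaction templates), while the dynamic rule also needs timestamps.

```python
from dataclasses import dataclass, field


@dataclass
class Txn:
    name: str
    readset: set = field(default_factory=set)
    writeset: set = field(default_factory=set)
    snapshot: int = 0
    commit: int = 0


def dynamic_rule_ok(ti, tj):
    """Dynamic rule: if Tj commits inside Ti's (snapshot, commit) window,
    Ti must not have read anything Tj wrote."""
    if ti.snapshot < tj.commit < ti.commit:
        return not (ti.readset & tj.writeset)
    return True


def static_condition(ti, tj):
    """Static condition on two update transactions (only read/write sets matter)."""
    return bool(ti.writeset & tj.writeset) or (
        not (ti.readset & tj.writeset) and not (ti.writeset & tj.readset))


# Write skew: both read x and y, each writes a different item. The GSI commit
# rule lets both commit because their write sets are disjoint.
t1 = Txn("T1", readset={"x", "y"}, writeset={"x"}, snapshot=1, commit=4)
t2 = Txn("T2", readset={"x", "y"}, writeset={"y"}, snapshot=2, commit=3)
print(dynamic_rule_ok(t1, t2))   # False: T2 commits in T1's window and wrote y, which T1 read
print(static_condition(t1, t2))  # False: disjoint write sets but overlapping read/write sets
t3 = Txn("T3", readset={"x"}, writeset={"x"})
print(static_condition(t1, t3))  # True: write sets intersect, so GSI already serializes them
```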

Isolation level PL-Clock-SI (Clock Snapshot Isolation, Clock-SI):

Jiaqing Du et al. approach the problem by decentralizing time: they combine ANSI snapshot isolation with physical clocks, using the local clock of each partition (replica) server instead of a central clock, so that ANSI snapshot isolation can be implemented efficiently in a distributed system. A system implementing Clock-SI is assumed to store its data in partitions, with each server's local clock synchronized through the Network Time Protocol (NTP) so that the absolute clock skew stays within a bounded range. To implement ANSI snapshot isolation, every snapshot must contain all, and only, the data of transactions that committed before snapshot(Ti); each commit produces a new snapshot identified by its commit timestamp, and these commits form a total order. The system must also ensure that concurrent transactions have no write-write conflicts (defined as in GSI's commit rule), and otherwise abort the offending update transaction. Because timestamps come from physical clocks and data is partitioned, a transaction may have to commit data on other partitions, so commits are either local or remote. A local commit is no different from an ordinary commit; a remote (distributed) commit uses two-phase commit (2PC) and passes through a Prepared and a Committing phase, each of which is given a local timestamp, while taking clock skew into account.

A system implementing Clock-SI must do the following:

Snapshot: when a transaction takes its snapshot for reading, the timestamp snapshot(Ti) is read directly from the local clock of the partition.

Read:

The read timestamp is snapshot(Ti), generated by the local clock of the originating partition. When the read must fetch data from another partition (the partition holding the data differs from the one issuing the read, so the read is remote) and snapshot(Ti) is larger than the current time of that partition's local clock, the read waits on the remote partition until that partition's clock has passed snapshot(Ti), i.e. until the clock skew between the two has been absorbed. Then, if a transaction that updated the data is still committing on that partition (in the Prepared or Committing state) with a timestamp below snapshot(Ti), the read waits until that transaction has finished committing (or has aborted). Only then does the read proceed, returning the data of the latest commit before snapshot(Ti). These delays ensure that the snapshot contains the updates of every transaction that committed before it was taken.
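The two waiting conditions of the read step can be expressed as a single decision on the remote partition. This is a simplified sketch, assuming integer clock readings and a hypothetical `Partition` record; it returns "wait" instead of blocking, so a caller would retry after the clock advances or the pending transaction finishes.

```python
from dataclasses import dataclass, field


@dataclass
class Partition:
    clock: int                                    # current local clock reading
    versions: dict = field(default_factory=dict)  # key -> [(commit_ts, value), ...] ascending
    pending: dict = field(default_factory=dict)   # txn -> (prepare_ts, keys): Prepared/Committing


def clock_si_read(part, key, snapshot_ts):
    """One attempt at a Clock-SI read of `key` at `snapshot_ts` on partition `part`.

    Returns ("wait", reason) when the read must be delayed, else ("ok", value).
    """
    # 1. Clock skew: this partition's clock has not yet passed the snapshot
    #    timestamp, so it could still assign a smaller commit timestamp.
    if part.clock <= snapshot_ts:
        return ("wait", "clock")
    # 2. A transaction that wrote `key` is still Prepared/Committing with a
    #    timestamp below the snapshot; it may end up committing below it.
    for txn, (prepare_ts, keys) in part.pending.items():
        if key in keys and prepare_ts < snapshot_ts:
            return ("wait", txn)
    # 3. Return the latest version committed before the snapshot.
    visible = [v for ts, v in part.versions.get(key, []) if ts < snapshot_ts]
    return ("ok", visible[-1] if visible else None)


p = Partition(clock=100, versions={"x": [(50, "a"), (90, "b")]})
print(clock_si_read(p, "x", 120))   # ('wait', 'clock')
p.clock = 130
p.pending["T7"] = (110, {"x"})
print(clock_si_read(p, "x", 120))   # ('wait', 'T7')
del p.pending["T7"]
print(clock_si_read(p, "x", 120))   # ('ok', 'b')
```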

Commit:

A transaction that commits locally (within a single partition) uses the local clock as its commit timestamp and commits directly, after checking locally whether any concurrent transaction in the same period wrote an overlapping data set (a write-write conflict); it commits only if there is no such conflict. A transaction that must commit remotely uses the 2PC protocol for a distributed commit: every partition that performed writes runs the same local check as in the single-partition case and votes with a prepare timestamp, and the maximum prepare timestamp becomes the commit timestamp. Because timestamps from different partitions can be equal and therefore indistinguishable, which would break the total order of commit timestamps, each partition appends its own partition id to its timestamp during the 2PC prepare phase.
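The commit step can be sketched as follows, assuming integer clock readings, a hypothetical `Partition` record that remembers the commit timestamp of each key's newest version, and sequential calls standing in for the 2PC messages. Prepare timestamps are `(clock, partition_id)` pairs, so the maximum is unique even when two clocks read the same value.

```python
from dataclasses import dataclass, field


@dataclass
class Partition:
    pid: int
    clock: int                                       # current local clock reading
    last_commit: dict = field(default_factory=dict)  # key -> commit ts of newest version

    def prepare(self, keys, snapshot_ts):
        """2PC prepare on this partition: certify locally, then vote.

        Returns None (vote abort) on a write-write conflict, otherwise the
        prepare timestamp (local clock, partition id).
        """
        if any(self.last_commit.get(k, -1) > snapshot_ts for k in keys):
            return None
        return (self.clock, self.pid)


def clock_si_commit(writesets, snapshot_ts, partitions):
    """Commit a transaction whose writes are `writesets` (pid -> set of keys).

    A single-partition transaction just uses that partition's clock; a
    multi-partition one takes the maximum prepare timestamp.
    """
    votes = [partitions[pid].prepare(keys, snapshot_ts) for pid, keys in writesets.items()]
    if any(v is None for v in votes):
        return None                                  # some participant voted abort
    commit_ts = max(votes)                           # partition id breaks clock ties
    for pid, keys in writesets.items():
        for k in keys:
            partitions[pid].last_commit[k] = commit_ts[0]
    return commit_ts


parts = {1: Partition(1, clock=105), 2: Partition(2, clock=105)}
print(clock_si_commit({1: {"x"}, 2: {"y"}}, 100, parts))  # (105, 2): equal clocks, pid decides
print(clock_si_commit({1: {"x"}}, 100, parts))            # None: x committed after snapshot 100
```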

Figure 24: Snapshot-creation delays caused by clock skew and by pending commit operations

Clock-SI also allows out-of-date snapshots. The permitted snapshot age ranges from 0 (the snapshot always reflects the latest data) up to the larger of the following two values, which bound the delays the system can otherwise incur:

(1) The time needed to commit a transaction and make it durable on stable storage, plus one network round-trip

(2) The maximum clock skew between partition nodes, minus the one-way network latency between two partitions
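The bound above is simple arithmetic; a worked example makes the two delay sources concrete. The durations below are illustrative assumptions (milliseconds), not measurements, and the helper names are hypothetical.

```python
def snapshot_staleness_bound(commit_durable, rtt, max_skew, one_way):
    """Upper end of the permitted snapshot age: the larger of the two delay sources.

    (1) time to commit and persist a transaction + one network round trip
    (2) maximum clock skew between partitions - one-way network latency
    All durations share one unit (milliseconds in this example).
    """
    return max(commit_durable + rtt, max_skew - one_way, 0.0)


def choose_snapshot_ts(local_clock, delta, bound):
    """Take a snapshot `delta` older than the local clock, clamped to [0, bound]."""
    return local_clock - min(max(delta, 0.0), bound)


bound = snapshot_staleness_bound(commit_durable=2.0, rtt=1.0, max_skew=5.0, one_way=0.5)
print(bound)                                     # 4.5: skew term dominates (5.0 - 0.5 > 2.0 + 1.0)
print(choose_snapshot_ts(1000.0, 10.0, bound))   # 995.5: requested age clamped to the bound
```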

Isolation level PL-StrongSI (Strong Snapshot Isolation)

Strong snapshot isolation (Strong Snapshot Isolation): a transaction history H satisfies strong snapshot isolation if, first, every pair of committed transactions Ti and Tj in H satisfies the ANSI snapshot isolation definition, and second, for every pair of committed transactions Ti and Tj in H such that Tj's commit occurs before Ti's first operation, the timestamp of the commit event cj is smaller than that of Ti's start event si, i.e. commit(Tj) < start(Ti) implies commit(Tj) < snapshot(Ti). Plain snapshot isolation does not guarantee this, because the snapshot may be taken at any point before the transaction starts rather than at its actual (physical) start time; it only requires snapshot(Ti) ≤ start(Ti). A system providing strong snapshot isolation must therefore maintain a total order of transactions in (physical) time: for any pair of transactions where Tj starts after Ti commits, the commit timestamp of the most recently committed transaction is greater than every start or commit timestamp the system has already issued, and Tj observes the complete state of the data store, including Ti's updates. Like strict serializability, strong snapshot isolation orders transactions by physical time: it adds to snapshot isolation the same real-time ordering that strict serializability adds to serializability.
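The extra real-time condition of strong snapshot isolation can be checked pairwise. A minimal sketch, assuming integer timestamps and a hypothetical `Txn` record; it assumes the history already satisfies basic snapshot isolation and only checks the additional condition.

```python
from dataclasses import dataclass


@dataclass
class Txn:
    name: str
    snapshot: int   # snapshot(T)
    start: int      # start(T): timestamp of the first operation
    commit: int     # commit(T)


def strong_si_violations(txns):
    """Pairs (Tj, Ti) where Tj committed before Ti started, yet Ti's snapshot
    was taken before commit(Tj), so Ti cannot see Tj's updates."""
    return [(tj.name, ti.name)
            for ti in txns for tj in txns
            if tj is not ti and tj.commit < ti.start and not tj.commit < ti.snapshot]


t1 = Txn("T1", snapshot=1, start=1, commit=3)
t2 = Txn("T2", snapshot=2, start=5, commit=6)   # stale snapshot: allowed by plain SI
print(strong_si_violations([t1, t2]))           # [('T1', 'T2')]
t2.snapshot = 4                                 # snapshot now taken after T1's commit
print(strong_si_violations([t1, t2]))           # []
```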

Isolation level PL-Strong SSI (Strong Session Snapshot Isolation) /

Isolation level PL-Prefix-consistent SI (Prefix-consistent Snapshot Isolation)

Because strong snapshot isolation requires the entire data store to maintain a single order over all transactions, its performance cost for a system implementing it is too high. The restriction can therefore be relaxed so that a single order is maintained only within the scope of each session (a distributed data store typically has many clients and therefore many sessions), and the system labels each transaction with the session it belongs to, LH(T). A transaction history H satisfies strong session snapshot isolation if, first, every pair of committed transactions in H with the same session label (LH(Ti) = LH(Tj)) satisfies the basic snapshot isolation definition within the session, and second, for every such pair, if Tj commits before Ti's first operation, then Tj's commit event cj precedes Ti's start event si (cj < si). So although strong session snapshot isolation relaxes some restrictions at the level of the whole data store, nothing essential changes for a single client: as with strong snapshot isolation, a transaction can only read a state that was fully complete, in physical time, before the transaction started, and it cannot read from transactions that commit (end) after its start point, while the system must still maintain an expensive total order within each session. If every session contains exactly one transaction, strong session snapshot isolation is equivalent to basic snapshot isolation. If a session is equated with a workflow, strong session snapshot isolation can also be applied to distributed systems, and prefix-consistent snapshot isolation (Prefix-consistent Snapshot Isolation) is derived from GSI in this way.
Prefix-consistent snapshot isolation guarantees that, within a workflow (session), for any two transactions Tj and Ti, if Tj commits before Ti's start point start(Ti) (not snapshot(Ti)), then Ti's snapshot must contain Tj's updates: commit(Tj) < start(Ti) implies commit(Tj) < snapshot(Ti). Equivalently, when Ti read-depends on Tj (Ti reads a version of x written by Tj), there is no other transaction Tk in the same workflow that also wrote x and whose commit(Tk) lies between commit(Tj) and start(Ti). The commit rule is the same as in GSI: two committing transactions must not overlap in both the data dimension (write sets) and the time dimension. Prefix-consistent snapshot isolation makes it possible to provide the guarantees of strong session snapshot isolation correctly in a distributed system.
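The session-scoped version of the real-time condition differs from the strong snapshot isolation check only by a session filter. A minimal sketch, assuming integer timestamps and a hypothetical `Txn` record whose `session` field plays the role of LH(T) (or of the workflow, for prefix-consistent SI).

```python
from dataclasses import dataclass


@dataclass
class Txn:
    name: str
    session: str    # LH(T): session (or workflow) label
    snapshot: int   # snapshot(T)
    start: int      # start(T)
    commit: int     # commit(T)


def session_violations(txns):
    """Same-session pairs (Tj, Ti) with commit(Tj) < start(Ti) but
    commit(Tj) >= snapshot(Ti): Ti's snapshot misses an earlier commit of its own session."""
    return [(tj.name, ti.name)
            for ti in txns for tj in txns
            if tj is not ti and tj.session == ti.session
            and tj.commit < ti.start and not tj.commit < ti.snapshot]


t1 = Txn("T1", "A", snapshot=1, start=1, commit=3)
t2 = Txn("T2", "B", snapshot=2, start=5, commit=6)   # other session: stale snapshot tolerated
t3 = Txn("T3", "A", snapshot=2, start=7, commit=8)   # same session as T1: must see T1
print(session_violations([t1, t2, t3]))               # [('T1', 'T3')]
```

If every transaction had its own session label, the filter would never match and only the basic snapshot isolation rules would remain, as the text notes.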

Isolation level PL-PSI (Parallel Snapshot Isolation, PSI)

Parallel snapshot isolation was proposed by Sovran et al., starting from the needs of geo-distributed storage systems. It is a weaker form of snapshot isolation that drops ANSI snapshot isolation's requirement that the whole system maintain a total order of all commit operations for its snapshots. To improve the scalability of large multi-region distributed data stores, parallel snapshot isolation allows partition nodes in different regions to commit in different orders. PSI allows a transaction to commit at different times at different regional nodes, so the order of operation events in the transaction history at each regional node can differ. A transaction has a commit time at each node: it commits first at its local node and then propagates to remote nodes.

A system providing parallel snapshot isolation must guarantee the following:

Site snapshot read: all operations of a transaction are performed at the transaction's own (origin) site, and they read from a snapshot of the latest committed versions at that site as of the transaction's start (assuming any fork has been resolved).

No write-write conflicts: no two committed transactions may impact each other (see the GSI definition), i.e. there are no write conflicts. If two transactions are concurrent at some node, their write sets must be disjoint.

Commit causality across sites: if at any node Ti commits before Tj starts, then Ti must also commit before Tj at every other node.
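The commit-causality requirement can be checked over per-site event logs. This is a minimal sketch, assuming each site's log is a hypothetical list of `("start" | "commit", txn)` events in local order; a transaction appears as "start" only at its origin site.

```python
def causality_violations(logs):
    """logs: site -> list of ("start" | "commit", txn) events in local order.

    Returns (site, Ti, Tj) where Ti committed before Tj started at some site,
    but at `site` Tj committed while Ti had not yet committed before it.
    """
    # Ordering obligations: Ti committed at some site before Tj started there.
    must_precede = set()
    for events in logs.values():
        committed = set()
        for kind, txn in events:
            if kind == "commit":
                committed.add(txn)
            else:  # "start"
                must_precede.update((ti, txn) for ti in committed)
    violations = []
    for site, events in logs.items():
        pos = {txn: i for i, (kind, txn) in enumerate(events) if kind == "commit"}
        for ti, tj in sorted(must_precede):
            if tj in pos and (ti not in pos or pos[ti] > pos[tj]):
                violations.append((site, ti, tj))
    return violations


bad = {"A": [("commit", "T1"), ("start", "T2"), ("commit", "T2")],
       "B": [("commit", "T2"), ("commit", "T1")]}
print(causality_violations(bad))    # [('B', 'T1', 'T2')]

# Concurrent transactions from different sites may commit in different orders.
fork = {"A": [("start", "T1"), ("commit", "T1"), ("commit", "T2")],
        "B": [("start", "T2"), ("commit", "T2"), ("commit", "T1")]}
print(causality_violations(fork))   # []
```

The second log is exactly the kind of divergence PSI tolerates and ANSI snapshot isolation does not: sites A and B commit T1 and T2 in opposite orders.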

Because parallel snapshot isolation allows commits in different orders at different partition nodes, the data at different nodes can diverge; such divergence is called a fork. Forks fall into two categories: short forks (short fork) and long forks (long fork). In a short fork, a single transaction executes at each node, and the transactions executed concurrently are merged (merge) immediately after they commit. A long fork lasts longer: several transactions execute in sequence at each node while the nodes as a whole run concurrently, and the transactions at the different nodes are merged only after they have executed.

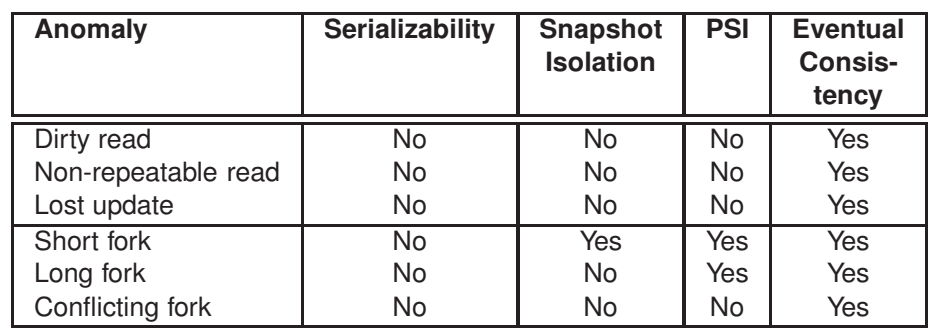

Parallel snapshot isolation compares with other isolation levels as follows:

Figure 25: PSI allows forks (ref: Transactional storage for geo-replicated systems)

The snapshot isolation variants above show that, for large distributed data stores, compromises along three dimensions (time, i.e. whether a single total order is maintained; whether the snapshot is up to date; and the scope over which state integrity is guaranteed) make it possible to implement various forms of snapshot isolation on multi-replica, decentralized architectures, improving the overall efficiency of the system while preserving the correctness real application scenarios require. However, these variants do not share a uniform way of positioning each operation and its events in time, so here Adya's definitions serve as the basis and the GSI definitions as the reference for a general introduction to the variants.

Isolation level PL-FCV (Forward Consistent View)

The forward consistent view relaxes part of the restriction that Adya's snapshot isolation places on snapshot reads when multiple transactions run concurrently.

Proscribed phenomena: G1, G-SIb (Missed Effects)

Because G-SIa is permitted, concurrent transactions may have dependencies on one another: a transaction Ti may observe updates that other transactions committed after Ti's start point, that is, it may read "forward" beyond its start point. Such reads may only observe a consistent state of the data store. G-SIb is a directed cycle, in the graph that adds start dependencies to the DSG, containing exactly one anti-dependency edge (for example, one anti-dependency edge closed by a start dependency). Every G-single cycle is also such a cycle, so proscribing G-SIb is stricter than proscribing G-single, and PL-FCV is therefore stronger than PL-2+.
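The relationship between G-single and G-SIb can be illustrated by enumerating cycles and counting anti-dependency edges. A minimal sketch, assuming edges are hypothetical `(src, dst, kind)` triples with kinds `"ww"`, `"wr"`, `"rw"` (anti-dependency) and `"s"` (start dependency); the brute-force enumeration is only meant for tiny example graphs.

```python
def simple_cycles(edges):
    """Yield every simple cycle as a list of (src, dst, kind) edges.

    Each cycle is reported once, starting from its smallest node.
    """
    out = {}
    for e in edges:
        out.setdefault(e[0], []).append(e)

    def walk(start, node, path, seen):
        for e in out.get(node, []):
            dst = e[1]
            if dst == start:
                yield path + [e]
            elif dst > start and dst not in seen:
                yield from walk(start, dst, path + [e], seen | {dst})

    for start in sorted(out):
        yield from walk(start, start, [], {start})


def cycle_with_one_antidep(edges):
    """True if some cycle contains exactly one anti-dependency ("rw") edge."""
    return any(sum(kind == "rw" for _, _, kind in c) == 1 for c in simple_cycles(edges))


# T2 commits before T1 starts (start dependency T2 -s-> T1), yet T1 reads the
# version of x that T2 overwrote (anti-dependency T1 -rw-> T2): missed effects.
dsg = [("T1", "T2", "rw")]
ssg = dsg + [("T2", "T1", "s")]
print(cycle_with_one_antidep(dsg))   # False: no G-single, so PL-2+ allows it
print(cycle_with_one_antidep(ssg))   # True: G-SIb, so PL-FCV rejects it
```

The same anti-dependency edge is harmless in the DSG alone and forms a forbidden cycle once the start dependency is added, which is why PL-FCV is strictly stronger than PL-2+.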

Client perspective:

The forward consistent view suits applications with many concurrent write operations in which the data store can still guarantee data consistency, improving the overall performance of the application.

Isolation level PL-3U (Update Serializability)

In many systems, the number of read-only things is far greater than that of written things ( for example :web Website, etc ), So if this kind of system maintains all operations in PL-3 Levels of isolation are too expensive . for example : Reduce the isolation level for read operations to PL-2+ For many systems, it is often enough . By lowering the level of read-only things , Only ensure that all updates submitted are serializable , To update serialization PL-3U.

Update serializability (PL-3U) requires the following condition:

No update conflict misses: if transaction Ti depends on Tj, then Ti must observe the updates of Tj and of every update transaction that Tj depends or anti-depends on. (Because anti-dependencies are included, this is stronger than the no-depend-misses condition, which covers only dependencies.)

Proscribed phenomena:

G1, G-update

G-update:

A history H and a transaction Ti exhibit G-update if the DSG built from all update transactions of H plus Ti (the other read-only transactions may be left out) contains a cycle with one or more anti-dependency edges.

Hn3U: r1(S0, Open) w1(X1, 50) w1(Y1, 50) c1 r2(S0, Open) w2(X2, 55) w2(Y2, 55) c2 w3(S3, Close) c3 rr(S3, Close) rr(X1, 50) rr(Y1, 50) cr [S0 << S3, X1 << X2, Y1 << Y2]

Figure 26: In history Hn3U, the read-only transaction Tr reads X1 and Y1, whose next versions are written by T2, so Tr anti-depends on T2. T1 and T2 both read S0, whose next version is written by T3, so both anti-depend on T3. Tr also reads S3, written by T3. The cycle Tr → T2 → T3 → Tr therefore contains two anti-dependency edges. PL-2+ proscribes only cycles with exactly one anti-dependency edge (G-single), so it allows this history, whereas PL-3U proscribes G-update and does not allow it. Compared with PL-3, PL-3U does not require the graph to include every read-only transaction. Some cycles in a PL-3 DSG pass through more than one read-only transaction (two or more) as well as update transactions; the graph considered by PL-3U contains only one of those read-only transactions at a time, so the cycle cannot form there. In such cases the system is not fully serializable: if a client of the data store ran these read-only transactions and compared their results directly, it would find that they observed the update transactions in inconsistent orders. PL-3U deviates from PL-3 only in this situation; in all other cases it provides the same guarantees as PL-3.
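The dependency analysis of Hn3U can be reproduced by building its DSG and testing both phenomena. A minimal sketch, assuming hypothetical `(src, dst, kind)` edge triples derived by hand from the history (initial versions written by T0 are omitted); the cycle enumeration is brute force and only suitable for tiny graphs.

```python
def simple_cycles(edges):
    """Yield each simple cycle once (as a list of edges), starting from its smallest node."""
    out = {}
    for e in edges:
        out.setdefault(e[0], []).append(e)

    def walk(start, node, path, seen):
        for e in out.get(node, []):
            dst = e[1]
            if dst == start:
                yield path + [e]
            elif dst > start and dst not in seen:
                yield from walk(start, dst, path + [e], seen | {dst})

    for start in sorted(out):
        yield from walk(start, start, [], {start})


def count_rw(cycle):
    return sum(kind == "rw" for _, _, kind in cycle)


def g_single(edges):
    """Cycle with exactly one anti-dependency edge (proscribed by PL-2+)."""
    return any(count_rw(c) == 1 for c in simple_cycles(edges))


def g_update(edges, update_txns, ti):
    """Cycle with at least one anti-dependency edge in the DSG restricted to
    all update transactions plus Ti (proscribed by PL-3U)."""
    keep = set(update_txns) | {ti}
    sub = [e for e in edges if e[0] in keep and e[1] in keep]
    return any(count_rw(c) >= 1 for c in simple_cycles(sub))


edges = [
    ("T1", "T2", "ww"),   # X1 << X2, Y1 << Y2
    ("T1", "T3", "rw"),   # T1 read S0, T3 wrote the next version S3
    ("T2", "T3", "rw"),   # T2 read S0, T3 wrote the next version S3
    ("T3", "Tr", "wr"),   # Tr read S3
    ("T1", "Tr", "wr"),   # Tr read X1 and Y1
    ("Tr", "T2", "rw"),   # Tr read X1/Y1, T2 wrote the next versions X2/Y2
]
updates = {"T1", "T2", "T3"}
print(g_single(edges))                  # False: the only cycle has two rw edges, PL-2+ allows it
print(g_update(edges, updates, "Tr"))   # True: PL-3U rejects the history
```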

From the client's point of view: compared with PL-3, PL-3U (update serializability) only requires the client to handle read-only transactions specially in certain scenarios (if it needs to).

The interrelationship between isolation levels

Summary of isolation levels stronger than PL-2 (Intermediate Isolation Levels)

References:

Adya, A. Weak Consistency: A Generalized Theory and Optimistic Implementations for Distributed Transactions (PhD thesis, MIT). https://pmg.csail.mit.edu/papers/adya-phd.pdf

Berenson, H., et al. A Critique of ANSI SQL Isolation Levels. Microsoft Research technical report MSR-TR-95-51 (microsoft.com)

Adya, A., Liskov, B., and O'Neil, P. Generalized Isolation Level Definitions (ICDE 2000). https://pmg.csail.mit.edu/papers/icde00.pdf

Crooks, N., et al. Seeing is Believing: A Client-Centric Specification of Database Isolation. http://www.cs.cornell.edu/lorenzo/papers/Crooks17Seeing.pdf

Seeing is believing: a client-centric specification of database isolation. The Morning Paper (blog summary)

Sovran, Y., et al. Transactional storage for geo-replicated systems

Daudjee, K., and Salem, K. Lazy database replication with snapshot isolation

Du, J., et al. Clock-SI: Snapshot Isolation for Partitioned Data Stores Using Loosely Synchronized Clocks

Elnikety, S., et al. Database Replication Using Generalized Snapshot Isolation

GeeksforGeeks. Concurrency Control in DBMS. https://www.geeksforgeeks.org/concurrency-control-in-dbms/?ref=lbp

Original site copyright notice

This article was created by [InfoQ]; please include a link to the original when reposting. Thank you.

https://yzsam.com/2022/162/202206111504186886.html
