
How to ensure double-write consistency between the cache and the database?

2022-07-26 07:28:00 【Li xiaoenzi】

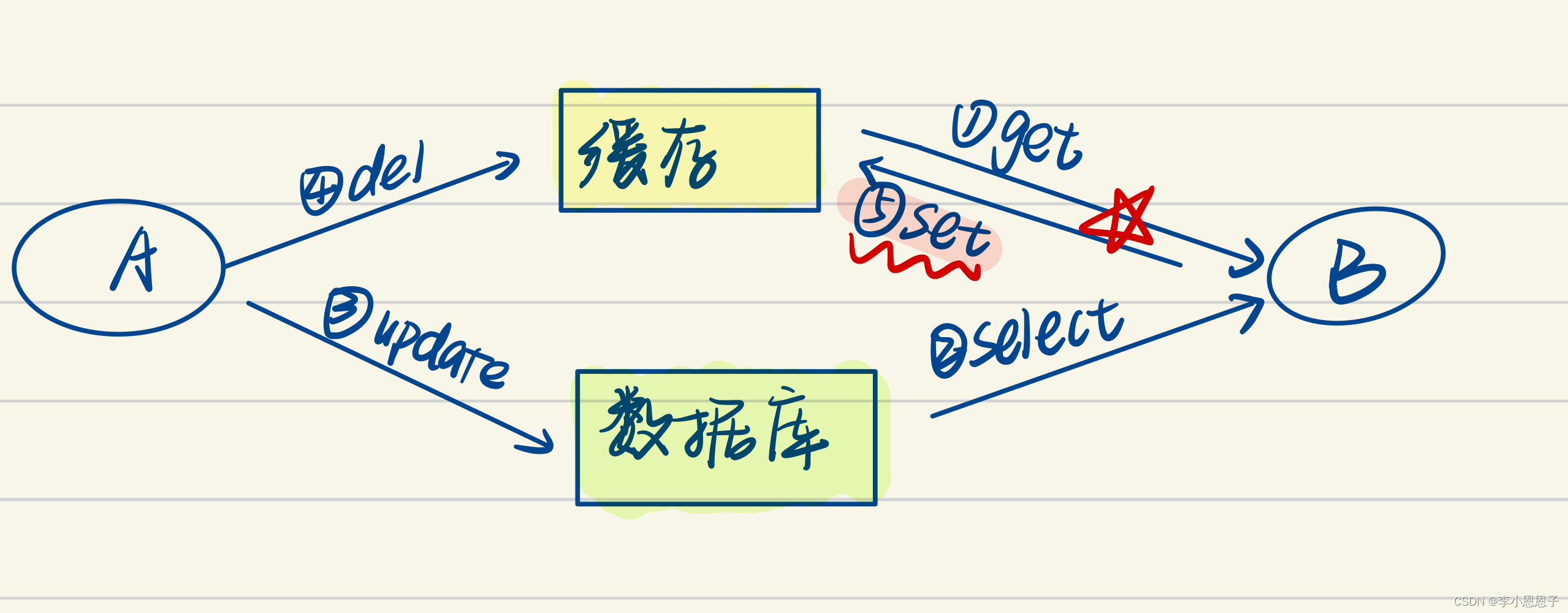

At present, most high concurrency systems use redis+mysql Database to ensure high performance , So how is the process of updating cache and database realized ?


Categories

There are roughly four ways to keep the cache and the database consistent under double writes:

① Update the cache first, then update the database

② Update the database first, then update the cache

③ Delete the cache first, then update the database

④ Update the database first, then delete the cache

Analysis

Two questions need to be considered: ① Is it better to update the cache or to delete it? ② Should the database or the cache be operated on first?

- Is it better to update the cache or to delete it?

Advantage of updating the cache: every data change is written to the cache, so the query hit rate is high.

Disadvantage of updating the cache: updating the cache is relatively expensive, and frequent updates add load to the system. In write-heavy scenarios the cache may be updated many times without being read much, so most of those updates serve no practical purpose.

Advantage of deleting the cache: it is simple. The cached data is just deleted.

Disadvantage of deleting the cache: the next query will miss, so the data has to be read from the database and loaded back into the cache.

In summary, deleting the cache is the better choice.
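The delete-then-reload behaviour described above can be sketched as follows. This is a minimal illustration, not a production implementation: the two dictionaries are assumed stand-ins for Redis and MySQL, and `read` and `invalidate` are hypothetical helper names.

```python
from typing import Optional

# In-memory stand-ins for Redis and MySQL (assumptions for illustration only).
cache = {}
database = {"user:1": "Alice"}


def read(key: str) -> Optional[str]:
    """Return the value for key, loading it into the cache on a miss."""
    if key in cache:
        return cache[key]        # cache hit
    value = database.get(key)    # cache miss: fall back to the database
    if value is not None:
        cache[key] = value       # reload the cache for later reads
    return value


def invalidate(key: str) -> None:
    """Delete the cached copy; nothing is recomputed at write time."""
    cache.pop(key, None)


read("user:1")                # first read misses and warms the cache
database["user:1"] = "Bob"    # the row changes in the database
invalidate("user:1")          # delete instead of rewriting the cached value
latest = read("user:1")       # miss: reloads the current row from the database
print(latest)
```

The cost of a write is a single delete, and the cost of rebuilding the entry is paid only if someone actually reads the key again.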

- Should the database or the cache be operated on first?

First, compare what happens when the second step fails, for deleting the cache first versus updating the database first:

① Delete the cache first, then update the database

- Process A deletes the cache - success

- Process A updates the database - failure

- Process B queries the cache, finds nothing, and goes to the database

- Process B queries the database - success

- Process B updates the cache - success

In the end the cache and the database are consistent, but both hold the original data rather than the new data.

② Update the database first, then delete the cache

- Process A updates the database - success

- Process A deletes the cache - failure

- Process B queries the cache - success, but because the cache was not deleted, it reads old data

In the end the database and the cache are inconsistent.

Both orders have problems when a step fails, so this comparison alone does not show which is better. In practice, when the second step fails, a retry mechanism is used to recover. The retry mechanism usually puts the failed operation on a message queue and performs it again asynchronously.

With a retry mechanism in place, compare the problems that can still occur when no step fails:

① Delete the cache first, then update the database

- Process A deletes the cache - success

- Process B queries the cache, finds nothing, and goes to the database

- Process B queries the database - success, and gets old data

- Process B updates the cache - success, writing the old data into the cache

- Process A updates the database - success, writing the new data into the database

Both of process A's steps succeeded, but because the two processes run concurrently, process B accessed the cache between those two steps. The end result is that the cache holds old data while the database holds new data, so the two are inconsistent.
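The interleaving above can be replayed step by step. This is a single-threaded sketch that fixes the order of operations by hand; the dictionaries are assumed stand-ins for the cache and the database, and the key `"k"` is hypothetical.

```python
cache = {"k": "old"}
database = {"k": "old"}

# Process A, step 1: delete the cache.
cache.pop("k", None)

# Process B: cache miss, so read the database (still old) and write it back.
value_seen_by_b = cache.get("k")
if value_seen_by_b is None:
    value_seen_by_b = database["k"]
    cache["k"] = value_seen_by_b

# Process A, step 2: update the database.
database["k"] = "new"

print(cache["k"], database["k"])  # old new
```

Nothing deletes the stale entry afterwards, so it stays in the cache until it expires or the key is written again.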

② Update the database first, then delete the cache

- Process A updates the database - success

- Process B queries the cache - success, but reads old data

- Process A deletes the cache - success

In the end the cache and the database are consistent, and both reflect the latest data. Process B did read old data, and other processes may do the same if they read the cache between A's two steps. But those two steps execute quickly, so the impact is small. Once both steps have completed, later reads no longer run into the problem process B had.
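The same kind of hand-ordered replay shows why this order is less harmful. As before, the dictionaries are assumed stand-ins for the cache and the database, and process C is a hypothetical later reader added for illustration.

```python
cache = {"k": "old"}
database = {"k": "old"}

# Process A, step 1: update the database.
database["k"] = "new"

# Process B: reads the cache between A's two steps and sees stale data.
value_seen_by_b = cache.get("k")

# Process A, step 2: delete the cache.
cache.pop("k", None)

# Process C (a later reader): cache miss, reloads the new value.
value_seen_by_c = cache.get("k")
if value_seen_by_c is None:
    value_seen_by_c = database["k"]
    cache["k"] = value_seen_by_c

print(value_seen_by_b, value_seen_by_c)  # old new
```

The stale read is limited to the window between A's two steps, and the stores converge afterwards.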

Summary

Updating the database first and then deleting the cache is the approach with the least impact. If the second step fails, a retry mechanism can be used to resolve it.

Extension - Delayed double delete

As shown earlier, deleting the cache first and then updating the database can leave the data inconsistent even when neither step fails. Delayed double delete addresses this:

- Delete the cache

- Update the database

- Sleep n milliseconds

- Delete the cache

After blocking for a while, the cache is deleted a second time, which removes any inconsistent data written to the cache in the meantime. The exact delay should be set by estimating how long the relevant operations take in your own business.
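The four steps can be sketched as one write function. This is an illustration under assumptions: the dictionaries stand in for the cache and the database, the function name and the `sleep` parameter are hypothetical, and the `sleep` hook exists only so the demo can deterministically simulate a concurrent reader writing stale data back during the pause.

```python
import time

cache = {"k": "old"}      # stand-in for Redis (assumption)
database = {"k": "old"}   # stand-in for MySQL (assumption)


def write_with_delayed_double_delete(key, value, delay_seconds=0.05,
                                     sleep=time.sleep):
    """Delete the cache, update the database, wait, delete the cache again."""
    cache.pop(key, None)     # first delete
    database[key] = value    # update the database
    sleep(delay_seconds)     # give in-flight readers time to finish
    cache.pop(key, None)     # second delete removes any stale write-back


def stale_reader_during_pause(_seconds):
    """Demo hook: a concurrent reader writes back the old value it read."""
    cache["k"] = "old"


write_with_delayed_double_delete("k", "new", sleep=stale_reader_during_pause)
print("k" in cache, database["k"])  # False new
```

With the default `sleep=time.sleep` the caller blocks for the whole delay, which is the cost this approach pays for the extra safety.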

Updating the database first and then deleting the cache can also go wrong even when neither step fails:

- Process B queries the cache just as the entry expires, finds nothing, and reads old data from the database

- Process A updates the database

- Process A deletes the cache

- Process B writes the old data back to the cache

The probability of this happening is very low. It requires a read request to arrive at the exact moment the cache entry expires, and that read request must take longer to write back to the cache than the database update takes. Normally, writing back to the cache is faster than updating the database.

If this extreme case does need to be handled, the delayed double delete strategy applies here too. After updating the database and deleting the cache, either start a new thread that waits n milliseconds and deletes the cache again, or put the delete request on a message queue and have it processed later as a delayed message. Either way, the later deletion ensures the cache ends up with new data.
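The "new thread" variant might be sketched with `threading.Timer` from the Python standard library, so the writer does not block during the delay. The dictionaries, the function name `update_then_delete`, and the 50 ms delay are assumptions for illustration, and the stale write-back is simulated by hand.

```python
import threading

cache = {"k": "old"}      # stand-in for Redis (assumption)
database = {"k": "old"}   # stand-in for MySQL (assumption)


def update_then_delete(key, value, delay_seconds=0.05):
    """Update the database, delete the cache, and schedule a second delete."""
    database[key] = value
    cache.pop(key, None)                       # immediate delete
    timer = threading.Timer(delay_seconds,     # delayed delete in a new thread
                            cache.pop, args=(key, None))
    timer.daemon = True
    timer.start()
    return timer


timer = update_then_delete("k", "new")
cache["k"] = "old"   # simulate a slow reader writing stale data back late
timer.join()         # demo only: wait for the delayed delete to run
print("k" in cache, database["k"])
```

In a multi-instance deployment a delayed message on a message queue is usually the more robust choice, since an in-process timer is lost if the process restarts before it fires.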
