当前位置:网站首页>Detailed explanation of decision tree

Detailed explanation of decision tree

2022-07-23 13:42:00 【TT ya】

Beginner little rookie , I hope it's like taking notes and recording what I've learned , Also hope to help the same entry-level people , I hope the big guys can help correct it ~ Tort made delete .

Catalog

Two 、 The composition of the decision tree

3、 ... and 、 The establishment of decision tree ( Based on information gain )

1、 Calculate the information entropy of the root node

2、 Calculate the information gain of the attribute

3、 Next, let's repeat 1,2 Continue to find appropriate attribute nodes

Four 、 Another division criterion of decision tree —— Gain rate (C4.5 Decision tree algorithm )

5、 ... and 、 Another division criterion of decision tree —— gini index (CART Decision tree )

2、 Decision tree establishment method ( Categorical regression is available )

2、 Pruning and its basic strategies

7、 ... and 、 Continuous and missing values

1、 Continuous value processing

8、 ... and 、 Multivariate decision trees

One 、 Mathematical basis

1、 Information entropy

(1) The basic definition

Suppose the sample set D share N class , The first k The proportion of class samples is  , be D The entropy of information is :

, be D The entropy of information is :![]()

Information entropy describes the expectation of the amount of information that an event may produce before the result comes out , It describes uncertainty .

The greater the entropy of information , The greater the uncertainty .H(D) The smaller the value of , be D The higher the purity .

notes :

(1) When calculating information entropy : If p = 0, be

= 0

(2)Ent(D) The minimum value of is 0, The maximum is

As shown in the figure below —— Binary source entropy function ( ):

):

(2) Conditional entropy

(3) Relevant laws

if X,Y Are independent of each other ,

H(Y|Y)=0

2、 Information gain

Information gain is a statistic , Used to describe the ability of an attribute to distinguish data samples . The larger the information gain , Then the more concise the decision tree will be . Here, the degree of information gain is measured by the change degree of information entropy . The formula is as follows :

Two 、 The composition of the decision tree

1、 Decision node

Nodes that branch through conditional judgment . Such as : Attribute values in a sample ( The eigenvalue ) Compare with the value on the decision node , So as to judge its flow direction .

2、 Leaf node

Nodes without children , Represents the final decision result .

3、 Depth of the decision tree

The maximum number of layers for all nodes .

The decision tree has a certain hierarchical structure , The number of levels of the root node is 0, From below, the number of sub node layers of each layer +1.

3、 ... and 、 The establishment of decision tree ( Based on information gain )

Let's take an example , It's more convenient

According to the following information, build a decision tree to predict whether to loan . We can see that there is 4 A factor : occupation , Age , Income and education .

1、 Calculate the information entropy of the root node

“ yes ” Occupy  ;“ no ” Occupy

;“ no ” Occupy

2、 Calculate the information gain of the attribute

(1) occupation

H(" occupation ") =

IG(D," occupation ") = H(D) - H(" occupation ") = 0.066

(2) Age ( With 35 Age is the boundary )

H(" Age ") =

IG(D," Age ") = H(D) - H(" Age ") = 0

(3) income ( With 10000 As a boundary )

H(" income ") =

IG(D," income ") = H(D) - H(" income ") = 0.266

(4) Education ( Take high school as the boundary )

H(" Education ") =

IG(D," Education ") = H(D) - H(" Education ") = 0.266

Select the attribute with the largest information gain as the partition attribute , Namely choice “ income ”

3、 Next, let's repeat 1,2 Continue to find appropriate attribute nodes

Determine the second attribute node

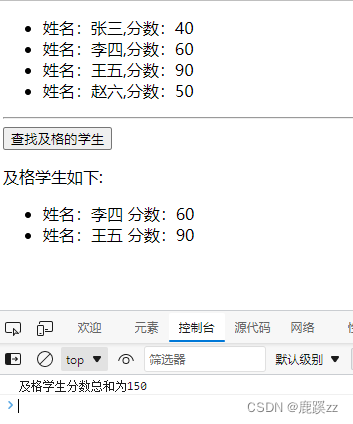

Step one :“ yes ” Occupy 0.5;“ no ” Occupy 0.5, therefore H=1

Step two :

Obviously , When the degree is in high school or above , Whether the loan is no ; When the degree is below high school , Whether the loan is yes .

So don't forget , Come straight to

In this way, the decision tree is built .

Four 、 Another division criterion of decision tree —— Gain rate (C4.5 Decision tree algorithm )

1、 The reason for introducing

Take the example above , We can see “ Education ” One column if we don't partition , Will produce 6 Branches , Each branch node contains only one sample . But such a decision tree does not have generalization ability , There is no effective prediction for new samples .

The information gain criterion has a preference for attributes with more values , To reduce the possible adverse effects of this preference , Some decision tree algorithms do not take information gain as the basis for the selection of optimal partition attributes , And choose the gain rate .

2、 Definition

among  It's called attribute a Of “ Intrinsic value ”

It's called attribute a Of “ Intrinsic value ”

attribute a The value is

, among

Express D All in attributes a The upper value is

A sample set of .

attribute a The greater the number of possible values of (V The bigger it is ),IV(a) The value of is usually larger .

3、 Example

Take the example above —— Calculation “ income ” Information gain rate

The above has been obtained

H(" income ") =

IG(D," income ") = H(D) - H(" income ") = 0.266

IV(" income ") =

4、 Be careful

The gain rate criterion has a preference for attributes with fewer values .

Therefore, the decision tree building method based on gain rate : First, find out the attributes whose information gain is higher than the average level from the candidate partition attributes , Then choose the one with the highest gain rate ( Instead of directly using the gain rate as the comparison standard ).

5、 ... and 、 Another division criterion of decision tree —— gini index (CART Decision tree )

1、 Definition

(1) Gini value

Gini(D) Reflected from the dataset D Two samples randomly selected from , The probability of the inconsistency of its class marks .

Gini(D) The smaller it is , Data sets D The higher the purity , The less uncertainty .

(2) gini index

2、 Decision tree establishment method ( Categorical regression is available )

In the candidate attribute set , The attribute with the smallest Gini index after selecting which is the optimal partition attribute .

6、 ... and 、 Pruning

1、 Put forward the reason

The decision tree may have too many branches , As a result, some characteristics of the training set itself are regarded as the general properties of all data, resulting in over fitting . The more complex the decision tree is , The higher the degree of over fitting . Therefore, we take the initiative to remove some branches to reduce the risk of over fitting .

2、 Pruning and its basic strategies

(1) prune : Pruning refers to deleting all the child nodes of a subtree , The root node acts as the leaf node .

(2) Basic strategy : Pre pruning and post pruning

3、 pre-pruning

(1) practice

In the process of decision tree generation , Each decision node was originally based on information gain 、 Purity indicators such as information gain rate or Gini index , The higher the value , Arrange nodes with higher priority . Due to pre pruning operation , Therefore, before dividing each node, it is necessary to judge whether the node is pruned , namely : Use the validation set to get the result according to the partition rules of the node . If the accuracy of the verification set is improved , No clipping is performed , The division is determined ; If the accuracy of the verification set remains unchanged or decreases , Then cut , And mark the current node as a leaf node .

(2) Specific examples

For example, in the above example “ Education ”

Let's choose the second one 5 Samples are validation sets

If not divided : The accuracy of the validation set is 50%( Half of it is , Half no ); If divided : Verify set accuracy 100%.

So we need to divide , No pruning .

(3) Advantages and disadvantages

advantage : Pre pruning makes many branches of the decision tree that have little relevance do not expand , This not only reduces the risk of over fitting , It also significantly reduces the training time cost and test time cost of the decision tree .

shortcoming : The current division of some branches can not improve the generalization ability , It may even lead to a temporary decline in generalization ability , However, the subsequent division based on it may improve the performance . Pre pruning is based on “ greedy ” The essence forbids these branches to expand , It brings the risk of under fitting to the pre pruning decision tree .

4、 After pruning

(1) practice

A decision tree has been generated from the training set , Then, from bottom to top, the decision node ( Non leaf node ) Use the test set to investigate , If the subtree corresponding to this node is replaced by a leaf node, the accuracy of the verification set can be improved ( This algorithm is similar to pre pruning ), Replace the subtree with leaf nodes , The generalization ability of the decision tree is improved .

(2) Advantages and disadvantages

advantage : Post pruning decision trees usually retain more branches than pre pruning decision trees . In general , The risk of under fitting of post pruning decision tree is very small , Generalization ability is often better than pre pruning decision tree .

shortcoming : The post pruning process is carried out after generating the decision tree , And all decision nodes in the tree should be inspected one by one from the bottom up , Therefore, its training time cost is much larger than that of non pruned decision tree and pre pruned decision tree .

7、 ... and 、 Continuous and missing values

1、 Continuous value processing

(1) Put forward the reason

Take a look at our example above , Some attribute values are discrete , Some are continuous . The number of values of continuous attributes is not limited , Therefore, nodes cannot be divided directly according to the values of continuous attributes .

(2) practice —— Continuous attribute discretization technology

The easiest way to do it is to dichotomy .

Extract all possible partition nodes

Given the sample set D And continuous properties a, hypothesis a stay D In the n Different values , Sort these values from small to large , Write it down as  . The selection formula of dividing points is :

. The selection formula of dividing points is : ( Altogether n-1 Points of division ).

( Altogether n-1 Points of division ).

Select the best partition node

Have n-1 After dividing nodes , You need to select the best dividing point , Then we can examine these partition points like discrete attributes . For example, calculate information gain , Choose which partition node gets the largest information gain .

2、 Missing value processing

(1) Put forward the reason

In the process of obtaining samples , It is inevitable that some attribute data will be missing in the final sample set for some reasons .

(2) practice

When there is very little missing data , Generally, those missing data are discarded directly ; And when there are many missing data , Simple discarding is a great waste of samples , Then deal with it in a certain way .

When there are many missing data :

Modify the calculation formula of information gain :

among :

- D Represents the entire sample ,

Indicates samples that do not contain missing values ;

- ρ Indicates completeness , Is the number of samples without missing values / The total number of samples ;

- k Is the value number of this attribute ;

Similar to the previous formula

, It refers to the proportion of the attribute value in all non missing samples . Let's say I have a “ Colour and lustre ” attribute , This property has 2 A value of : Black and white , share 10 A sample , Those with missing values are 3 individual . Examples without missing values are black 5 individual , White yes 2 individual . Then the value of black is

; White is

.

8、 ... and 、 Multivariate decision trees

The previous studies are all univariate decision trees , That is, each decision-making node only judges one attribute . For multivariable decision trees , Every decision node , Is a suitable linear classifier , That is, a group of classification rules composed of multiple attributes .

The construction of this linear classifier is based on our previous classification algorithms ( Take any node example :a* Age +b* income <c)

Such a decision tree will be relatively complex , Longer training time .

Nine 、python Realization

from sklearn.datasets import load_iris

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

from sklearn import tree

iris = load_iris()# Dataset import

features = iris.data# Attribute characteristics

labels = iris.target# Category labels

train_features, test_features, train_labels, test_labels = train_test_split(features, labels, test_size=0.3, random_state=1)# Training set , Test set classification

clf = tree.DecisionTreeClassifier(criterion='entropy',max_depth=3)

clf = clf.fit(train_features, train_labels)#X,Y They are attribute features and classification label

test_labels_predict = clf.predict(test_features)# Predict the label of the test set

score = accuracy_score(test_labels, test_labels_predict)# Compare the predicted results with the actual results

print("CART Accuracy of classification tree %.4lf" % score)# Output results

dot_data = tree.export_graphviz(clf, out_file='iris_tree.dot')# Generate decision tree visualization dot file

Output results :

Accuracy of decision tree 0.9556I also generated one 'iris_tree.dot' file .

Enter the following command at the terminal under this directory :

dot -Tpng iris_tree.dot -o iris_tree.png

You can generate a 'iris_tree.dot' The decision tree image corresponding to the file

You are welcome to criticize and correct in the comment area , thank you ~

边栏推荐

- In depth interpretation of EVM's ecological Empire

- 浅谈Anroid设备的CPU类型以及so文件的放置目录

- Debug No5 basic lighting

- Day 8 notes

- QT creator.Pro file adds the corresponding library according to the kit

- 中国在又一个新兴科技领域领先美国,站在科技创新制高点

- Problem solving: script file 'scripts\pip script py‘ is not present.

- Smart canteen data analysis system

- NFT 交易市场的格局之变:从一家独大到百家争鸣

- Remote editing and debugging with vscode

猜你喜欢

基于BIM+3DGIS的智慧城市基础设施管理

The principle of Google interview questions is to analyze 12 table tennis balls, one of which is defective. Weigh it with a balance for 3 times to find it

Bit synchronization process of CAN controller

网易白帽子黑客训练营笔记(2)

Day 11 notes

In depth interpretation of EVM's ecological Empire

Method of entering mathematical formula into mark down document

vs2019:constexpr 函数“qCountLeadingZeroBits”不能生成常量表达式

轻重链剖分/树链剖分

ES6——周考题

随机推荐

Uncaught (in promise) Neo4jError: WebSocket connection failure. Due to security constraints in your

ES6——周考题

C#做一个简单浏览器

PHP gets the current timestamp three bit MS MS timestamp

PHP framework MVC class code audit

C语言插入排序(直接插入排序)

数据库-视图详探

ROS中引用和输出消息类型

Successful joint commissioning of Vientiane Aoke and CoDeSys Technology

动态规划每日一练(1)

Running matlab program on GPU

Qt Creator .pro文件根据kit添加对应库

离屏渲染 &FBO

Learn about canvas

云解决方案,为什么选择 AMD EPYC?

Notes on the ninth day

Knowledge map: basic concepts

docker redis

Shell运算符、$((运算式))” 或 “$[运算式]、expr方法、条件判断、test condition、[ condition ]、两个整数之间比较、按照文件权限进行判断、按照文件类型进行判断

ModuleNotFoundError: No module named ‘setuptools_ rust‘

= 0

= 0

, among

, among  Express D All in attributes a The upper value is

Express D All in attributes a The upper value is  A sample set of .

A sample set of . Indicates samples that do not contain missing values ;

Indicates samples that do not contain missing values ; Similar to the previous formula

Similar to the previous formula  , It refers to the proportion of the attribute value in all non missing samples . Let's say I have a “ Colour and lustre ” attribute , This property has 2 A value of : Black and white , share 10 A sample , Those with missing values are 3 individual . Examples without missing values are black 5 individual , White yes 2 individual . Then the value of black is

, It refers to the proportion of the attribute value in all non missing samples . Let's say I have a “ Colour and lustre ” attribute , This property has 2 A value of : Black and white , share 10 A sample , Those with missing values are 3 individual . Examples without missing values are black 5 individual , White yes 2 individual . Then the value of black is  ; White is

; White is  .

.