当前位置:网站首页>Google waymo proposed r4d: remote distance estimation using reference target

Google waymo proposed r4d: remote distance estimation using reference target

2022-06-24 13:51:00 【3D vision workshop】

Click on the above “3D Visual workshop ”, choice “ Star standard ”

The dry goods arrive at the first time

Author Huang Yu

Source computer vision deep learning and automatic driving

arXiv Upload on 6 month 10 Japanese papers "R4D: UTILIZING REFERENCE OBJECTS FOR LONGRANGE DISTANCE ESTIMATION", From Google WayMo company , Published in ICLR‘22.

Estimating the distance of the target is a key safety task of autonomous driving . Existing methods and datasets focus on short-range objectives , While ignoring equally important long-range goals . This paper introduces a new method of distance estimation , Validate with two data sets . then , Put forward R4D, A framework for accurately estimating the distance of a remote target from a reference target with known distance in the scene .

R4D Draw inspiration from human perception , Connect the target to all reference targets to build the diagram . The edge in the graph is opposite to the target - Coding with reference to the relative distance information between targets . Then note the importance of the module to measure the reference objectives , And combine them into a distance prediction for the target .

Experiments on two proposed datasets show that , Compared with existing baseline methods ,R4D The effectiveness and robustness of the system are significantly improved .

Estimating the distance between the target and the vehicle is very important for several autonomous driving tasks , Including changing lanes 、 Route planning 、 Speed adjustment 、 Collision avoidance, etc . Although existing methods and datasets focus on short-range targets , But know the distance of the long-distance target , That is, targets beyond the range of typical lidar ∼80 rice ( As shown in the figure )- For highway driving 、 Heavy truck driving and wet road driving are necessary .

According to the U.S. Department of transportation , The standard speed limit is 65 miles / Hours on the country road ∼ In an emergency , The distance for a passenger car to stop completely is 145 rice , It greatly exceeds the typical lidar sensing range . Heavy trucks or snow, ice and rain , The required stopping distance will increase significantly . for example , For truck transportation and wet road driving , The stopping distance is from 145 Rice increased to 183 Rice and 278 rice .

Besides , Considering the sudden sharp braking on the highway is unsafe , The target distance estimation beyond the minimum stopping distance is still very important , So as to provide enough time for gradual deceleration or lane change . therefore , In order to have enough time to respond appropriately and ensure safety , A auto drive system is required to estimate the distance to a remote target .

This key task is called remote distance estimation . say concretely , Given short-range lidar signal and camera image , The output of this task is the distance of the distant target ( Out of lidar range ). In accordance with existing practice , Measure the distance between the camera and the center of the target along the optical axis of the camera . For this new mission , Two datasets are introduced , Pseudo remote KITTI Data set and Waymo Open data set - Remote tags .

because KITTI The dataset does not provide the true distance of the remote target , Pseudo remote KITTI A dataset is a derived dataset , Deleted 40 Lidar points beyond meters , And will 40 All targets beyond meters are considered remote targets . what's more , stay Waymo A new large-scale data set is constructed on the basis of open data set , Contains real remote targets with annotations ( Distance from 80 M to 300 rice ).

All in all , Although these two data sets are in “ Remote ”(40 Meter or 80 Meters above ) There is a difference in the definition of , But they all include lidar points 、 Camera images and distance tags for distant targets .

Neither lidar nor camera can solve this long-range range estimation problem alone , Achieve the required accuracy . Most existing lidar technologies cannot meet the requirements of long-range sensing . according to Waymo and KITTI Autopilot car data set , The maximum range of the lidar is only 80 Rice or so , Less than the working distance required for the above scenario .

Although some advanced lidar systems claim to achieve a longer sensing range , for example Waymo The fifth generation lidar system and Velodyne Alpha PrimeTM, The sensing range can reach 300 rice , But lidar points are sparse at long distances , Therefore, it is easier to be blocked . therefore , Lidar alone is not enough to cover all security - Critical autonomous vehicle applications .

On the other hand , The camera can perceive the target at a greater range , And capture rich semantic information , Such as target appearance 、 Geometric and contextual cues . However , It does not by itself provide in-depth information . The classical geometry based algorithm can estimate the standard target according to the pixel size in the camera image ( Such as car 、 truck ) Distance of . However , Due to the error of scale estimation , These methods produce inaccurate results on long-range targets .

The result of the appearance based method in the long distance target is not satisfactory . This method relies on a single appearance cue to estimate the distance in the scene , Ignoring the background or other relevant signals . From a long distance , The target is visually small , This results in less appearance feature information . Although neither lidar nor camera can solve the problem of long-range range estimation alone , But these two signals provide additional clues to the task .

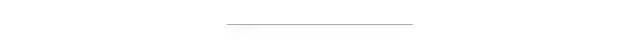

R4D Training a model to locate a long-range target at a known distance ( Designated target ), The specified target and reference are represented as a graph structure . As shown in the figure , Define the target as a node , These edges connect the target to the reference target . By extracting the target - Reference resources (Tar-Ref) The embedded , Propagate reference information to a remote designated destination .R4D Then all the targets - Reference embedded information is fed back to the attention module , This module weighs the relative importance of different reference information , And combine them into a distance prediction to fuse the information from different references .

Inspired by the observation that humans estimate the distance of a target relative to other references ,R4D Remote distance estimation using reference objects . The reference can be any combination of targets or points , The distance between these targets or points and the autonomous vehicle is known and accurate , For example, laser radar detection 、 Targets and map features detected by other sensors . The specified target and reference are represented as the figure above “Raw Inputs” The figure shown in the empty box . Specify that the target and its reference are nodes of the graph . The edge connects the specified target to its reference target , Code the pairwise relationship .

R4D The detailed architecture is shown in the figure : For modeling purposes - Refer to pairing relationship , It is recommended to extract joint embedding and geography - Distance embedding , Encode visual and geometric relationships respectively ; then , Inspired by the intuition of the different importance of the reference goals , Introduce an attention module , Selectively polymerize into pairs ; Last ,R4D Training through supplementary supervision : The relative distance between the target and its reference .

The monocular camera is used as the main sensor to detect the remote designated target , The short-range target detected by lidar is used as a reference . It is worth noting that ,R4D Not specifically designed for lidar or monocular imagery , It can be easily extended to other sensors and references .

1 Modeling pairwise relationships

Joint embedding

Visual cues for pairwise relationship modeling should be based on the specified target 、 Refer to the target and the scenario between the two targets . therefore , This paper presents a simple method to extract feature embedding from the union frame covering two targets .

Geography - Distance embedding

To provide geometric cues , Form the input with the following :

Specify the 2D frame center coordinates of the target and the reference target , And the relative position offset between them .

Specify the 2D border size of the target and the reference target , And the relative proportion between them .

Laser radar 3D The reference distance provided by the target detector .

then , Send this input to the multilayer perceptron , Generate geography - Distance embedding .

Given a specified goal 、 Reference resources 、 Union and geography - Distance embedding , Connect them together , Form the final target - Embedding of references , The embedded pair specifies the target - Model with reference to the relationship between objectives .

2 Attention based information aggregation

Combine pairs of specified targets - Reference target embedding , Estimate the distance of the specified target . A simple method is to average all references of the same specified target . However , Intuitively , As the result of the experiment , Reference goals are not equally important . for example , When locating a distant car in the upper right corner of the image , The car in the lower left corner of the image may not help much .

In order to guide the model to focus on the most important reference objectives , Introduce an attention based module . As shown in the figure , follow VectorNet To build embeddedness with local and global information . say concretely , use MLP And average pooled extraction global embedding ( As shown in the figure, yellow ).

then , Global embedding and original specified target - Reference embedments are connected together ( Blue in the picture ). Considering these big picture - Local embedding , Note that the module uses a full connection layer and softmax The layer predicts and normalizes the importance weights for each reference . Last , Use these weights to fuse the original specified targets - Reference embedded , Finally, we get an embedded .

3 Monitor relative distance

Embed prediction of specified target distance from specified target , Than from other indirect clues ( Such as reference embedding ) It is easier to predict the specified target distance . In order to encourage the model to learn the pairwise relationship between the specified goal and the reference goal , Not a short-circuit lead , The author provides additional supervision here . The design of this additional supervision is similar to the residual representation , Residual representation is widely used in computer vision , To help optimize the process .

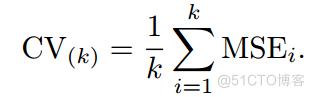

say concretely , In the training phase , Specify goals for each - Reference target embedding adds a relative ( Or residual ) Distance head . Specify the relative distance between the target and the reference target ∆d Given by the following formula ∆d = dt − dr, among dt Is the distance from the specified target .

original KITTI Data set containing RGB Images 、 LIDAR point cloud 、 2D and 3D borders and other per pixel annotations . With these rich comments ,KITTI Benchmarks for autonomous driving tasks have been developed on the dataset , Including scene flow estimation 、 Depth estimation 、 Detection and segmentation .

Even though KITTI Data sets provide LIDAR point clouds , However, it does not provide distance marking beyond the sensing range of lidar . Pseudo remote KITTI Data sets , From the original KITTI Derived from a dataset . By assuming that the effective detection range of lidar is only 40 rice , Remove further LIDAR Points . Targets beyond this effective sensing range are considered as remote designated targets , The distance of other targets is known and provided as input .

It is worth noting that , There is no overlap between the above specified target and the target with known distance . As agreed , The original training data is divided into two subsets , For training and verification respectively . Images that do not contain any remote targets will be deleted from the dataset . therefore , Pseudo remote KITTI The data set includes training set and verification set respectively 2181 Images 4233 Vehicles , as well as 2340 Images 4033 Vehicles . This derived dataset is relatively small , what's more , It does not contain real remote targets .

In view of the above pseudo remote KITTI Limitations of data sets , Here we build a new remote data set , The data set is built on Waymo Based on open data sets , Including distance from autonomous vehicles 300 Meters of vehicles . Its annotation is generated as follows .

First , Create... For targets within lidar range 3D box . then , Rough positioning with radar derived signals , Extend the lidar frame to the remote . Due to the low radar resolution , Smooth target trajectory constraint is adopted for marking , In order to ensure that 3D The box is consistent in time and space . Last , Given a long-range target 3D box , Calculate the distance to the autonomous vehicle .

in general , As shown in the figure , get 49,056 Zhang Hanhan 187,938 Training image of a remote vehicle , as well as 3,578 Zhang Hanhan 10,483 Verification images of remote vehicles . These images span different times of the day , Including the dawn 、 Day 、 Dusk and night .

Distance enhancement is designed to encourage R4D Learn from pairing relationships , And prevent using only a single short-circuit prompt ( Target embedding ). By emphasizing the correlation between pairwise embedding and distance prediction , The guidance model focuses on the relative distance between the reference target and the specified target . say concretely , Keep the relative distance fixed , Disturbance reference distance , The model is expected to predict the target distance under the same disturbance .

for example , Pictured (a) Shown , The relative distance between the specified target and the reference target is 120 rice , If the reference target distance is 80 rice , The model should predict 200 The target distance of meters (= 80 rice +120 rice ). Pictured (b) Shown , When the reference distance provided is disturbed ( For example, add 20 rice ) when , The model should predict 220 rice (= 100 rice +120 rice ) As the target distance , To maintain 120 The correct relative distance of meters . This helps the model not over adapt to the appearance cues provided by the specified target , More robust to minor changes in camera parameters or field of view .

Similar enhancement techniques have been successfully used to prevent short-circuit learning in other deep learning tasks . During training , Gaussian distribution X Middle sampling distance label disturbance ∼ N(μ,σ2),μ=0,σ=200. For pseudo remote KITTI Data sets , use σ=50.

The experimental results are as follows :

This article is only for academic sharing , If there is any infringement , Please contact to delete .

3D Visual workshop boutique course official website :3dcver.com

1. Multi sensor data fusion technology for automatic driving field

2. For the field of automatic driving 3D Whole stack learning route of point cloud target detection !( Single mode + Multimodal / data + Code )

3. Thoroughly understand the visual three-dimensional reconstruction : Principle analysis 、 Code explanation 、 Optimization and improvement

4. China's first point cloud processing course for industrial practice

5. laser - Vision -IMU-GPS The fusion SLAM Algorithm sorting and code explanation

6. Thoroughly understand the vision - inertia SLAM: be based on VINS-Fusion The class officially started

7. Thoroughly understand based on LOAM Framework of the 3D laser SLAM: Source code analysis to algorithm optimization

8. Thorough analysis of indoor 、 Outdoor laser SLAM Key algorithm principle 、 Code and actual combat (cartographer+LOAM +LIO-SAM)

10. Monocular depth estimation method : Algorithm sorting and code implementation

11. Deployment of deep learning model in autopilot

12. Camera model and calibration ( Monocular + Binocular + fisheye )

13. blockbuster ! Four rotor aircraft : Algorithm and practice

14.ROS2 From entry to mastery : Theory and practice

15. The first one in China 3D Defect detection tutorial : theory 、 Source code and actual combat

blockbuster !3DCVer- Academic paper writing contribution Communication group Established

Scan the code to add a little assistant wechat , can Apply to join 3D Visual workshop - Academic paper writing and contribution WeChat ac group , The purpose is to communicate with each other 、 Top issue 、SCI、EI And so on .

meanwhile You can also apply to join our subdivided direction communication group , At present, there are mainly 3D Vision 、CV& Deep learning 、SLAM、 Three dimensional reconstruction 、 Point cloud post processing 、 Autopilot 、 Multi-sensor fusion 、CV introduction 、 Three dimensional measurement 、VR/AR、3D Face recognition 、 Medical imaging 、 defect detection 、 Pedestrian recognition 、 Target tracking 、 Visual products landing 、 The visual contest 、 License plate recognition 、 Hardware selection 、 Academic exchange 、 Job exchange 、ORB-SLAM Series source code exchange 、 Depth estimation Wait for wechat group .

Be sure to note : Research direction + School / company + nickname , for example :”3D Vision + Shanghai Jiaotong University + quietly “. Please note... According to the format , Can be quickly passed and invited into the group . Original contribution Please also contact .

▲ Long press and add wechat group or contribute

▲ The official account of long click attention

3D Vision goes from entry to mastery of knowledge : in the light of 3D In the field of vision Video Course cheng ( 3D reconstruction series 、 3D point cloud series 、 Structured light series 、 Hand eye calibration 、 Camera calibration 、 laser / Vision SLAM、 Automatically Driving, etc )、 Summary of knowledge points 、 Introduction advanced learning route 、 newest paper Share 、 Question answer Carry out deep cultivation in five aspects , There are also algorithm engineers from various large factories to provide technical guidance . meanwhile , The planet will be jointly released by well-known enterprises 3D Vision related algorithm development positions and project docking information , Create a set of technology and employment as one of the iron fans gathering area , near 4000 Planet members create better AI The world is making progress together , Knowledge planet portal :

Study 3D Visual core technology , Scan to see the introduction ,3 Unconditional refund within days

There are high quality tutorial materials in the circle 、 Answer questions and solve doubts 、 Help you solve problems efficiently

Feel useful , Please give me a compliment ~

边栏推荐

猜你喜欢

每日一题day8-515. 在每个树行中找最大值

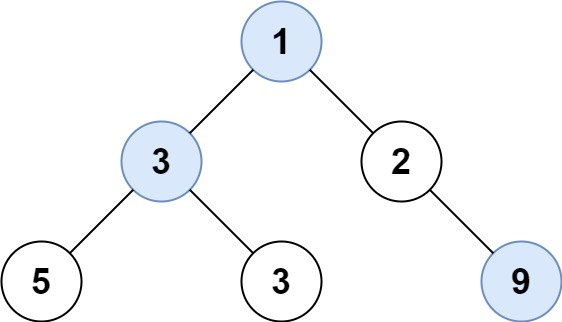

吉时利静电计宽测量范围

快手实时数仓保障体系研发实践

These default routes and static routes can not be configured and deployed. What kind of network workers are they!

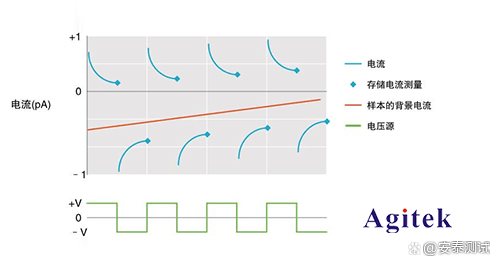

杰理之红外滤波【篇】

《中国数据库安全能力市场洞察,2022》报告研究正式启动

Hands on data analysis unit 3 model building and evaluation

How to manage tasks in the low code platform of the Internet of things?

知识经济时代,教会你做好知识管理

Activity lifecycle

随机推荐

杰理之检测 MIC 能量自动录音自动播放参考【篇】

金鱼哥RHCA回忆录:DO447管理清单和凭据--为访问清单主机创建机器凭据

Vim 常用快捷键

These default routes and static routes can not be configured and deployed. What kind of network workers are they!

Operation of simulated examination platform for examination questions of coal production and operation units (safety production management personnel) in 2022

2022年烟花爆竹生产单位安全生产管理人员考试题模拟考试题库模拟考试平台操作

数据科学家面临的七大挑战及解决方法

10 reduce common "tricks"

【R语言数据科学】(十四):随机变量和基本统计量

Kotlin anonymous function and lambda

数学之英文写作——基本中英文词汇(几何与三角的常用词汇)

Can a team do both projects and products?

一个团队可以既做项目又做产品吗?

常识知识点

One hour is worth seven days! Ingenuity in the work of programmers

杰理之无缝循环播放【篇】

What is the difference between sap QM and UD for inspection lots with hum?

[sdx62] wcn685x IPA failure analysis and solution

SAP QM qac1 transaction code cannot modify the quantity in the inspection lot containing Hu

The first open source MySQL HTAP database in China will be released soon, and the three highlights will be notified in advance