
R language book learning 03: "R Language Data Analysis in Simple Terms", Chapter 10 (association rules) and Chapter 11 (random forest)

2022-06-11 21:52:00 【Deep bamboo breeze】

1. Association rules

1.1 Overview

Association rule mining is one of the simpler approaches to big data problems and is an unsupervised learning algorithm. It recognizes patterns without any prior knowledge of what those patterns are, and it can be applied to scenarios such as personalized recommendation.

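An association rule has two parts, the antecedent (if) and the consequent (then). The antecedent is an item found in the data, and the consequent is an item found in combination with the antecedent. Rules are created by analyzing the data for frequent if/then patterns and using support and confidence to identify the most important relationships. Support is the fraction of transactions that contain all items of the rule, and confidence is the fraction of transactions containing the antecedent that also contain the consequent.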
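As a small worked illustration of support and confidence, the sketch below computes both by hand in base R for the rule "if bread then butter". The basket data and the helper name `support` are made up for this example and do not come from the book.

```r
# Five toy baskets (hypothetical data, for illustration only)
baskets <- list(
  c("bread", "milk"),
  c("bread", "butter", "milk"),
  c("bread", "butter"),
  c("milk"),
  c("bread", "butter", "jam")
)

# Fraction of baskets that contain every item in `items`
support <- function(items) {
  mean(sapply(baskets, function(b) all(items %in% b)))
}

# Rule: if {bread} then {butter}
supp_rule <- support(c("bread", "butter"))   # baskets holding both items
conf_rule <- supp_rule / support("bread")    # share of bread baskets that also hold butter

c(support = supp_rule, confidence = conf_rule)
```

Three of the five baskets contain both items, and three of the four bread baskets also contain butter, so the rule has support 0.6 and confidence 0.75.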

1.2 Code implementation

First, use summary() to show details of the data, inspect() to view the transactions, and itemFrequency() to see how often each item appears.
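A minimal sketch of this exploration step follows. The notes do not say which data set the `data` object holds, so the example assumes the `Groceries` transactions that ship with the arules package as a stand-in.

```r
library(arules)

# Groceries ships with arules; it stands in for the article's `data` object
data("Groceries")

summary(Groceries)                 # size, density, and most frequent items
inspect(Groceries[1:3])            # print the first three transactions

# Relative frequency (support) of every single item
freq <- itemFrequency(Groceries)
head(sort(freq, decreasing = TRUE), 5)
```

Sorting the frequencies is a quick way to pick a sensible minimum support before mining, since a threshold above the most frequent item's support would return nothing.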

Use the eclat() function to mine frequent itemsets. The first argument is the data set, and the parameter argument specifies the thresholds, here a minimum support of 0.075 and a maximum itemset length of 15.

freq.itemsets<-eclat(data,parameter=list(supp=0.075,maxlen=15))

Then use the apriori() function to build association rules. The first argument is the data set, and parameter specifies the thresholds a rule must meet, such as the minimum support and the minimum confidence.

gro<-apriori(data,parameter=list(support=0.006,confidence=0.25,minlen=2))

Then use the summary() function to view and evaluate the rules.
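The two mining steps can be tried end to end with the sketch below. It reuses the thresholds from the text but, as an assumption, runs them on the `Groceries` data set bundled with arules rather than on the unspecified `data` object.

```r
library(arules)

# Stand-in for the article's `data` object (an assumption)
data("Groceries")

# Frequent itemsets with support >= 0.075 and at most 15 items each
freq.itemsets <- eclat(Groceries, parameter = list(supp = 0.075, maxlen = 15))
inspect(sort(freq.itemsets, by = "support"))

# Rules with at least two items, support >= 0.006 and confidence >= 0.25
gro <- apriori(Groceries,
               parameter = list(support = 0.006, confidence = 0.25, minlen = 2))

summary(gro)                                     # rule counts and quality measures
inspect(head(sort(gro, by = "confidence"), 5))   # five most confident rules
```

Setting minlen=2 excludes rules with an empty antecedent, which would otherwise appear for any item frequent enough to pass the confidence threshold on its own.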

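1.3 Visualization

The arulesViz package can be used to visualize the rules.

library(arulesViz)

plot(berryrules,method="graph")

Here berryrules is a rules object that these notes do not define; a rules object such as gro can be passed in the same way. The treemap package can be used to draw a treemap.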

1.4 Summary

Association rules are used to discover latent relationships in data. Unlike clustering algorithms, which measure the relationship between data points by similarity, association rules carry a certain causal flavor: an antecedent implies a consequent. A rule still describes co-occurrence in the data rather than proven causation.

2. Random forests

2.1 Basic concepts

Random forest is an ensemble machine learning model and a special case of the Bagging algorithm. A random forest consists of multiple decision trees, which were covered in an earlier set of book notes (No. 01).
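To make the Bagging idea concrete, here is a minimal sketch that builds an ensemble of decision trees by hand. It assumes the rpart package for the individual trees and uses made-up toy data. It shows plain bagging only: a real random forest additionally samples a random subset of features at every split.

```r
library(rpart)  # recommended package included with standard R installations

set.seed(42)

# Toy data (hypothetical): the label depends mostly on x1
n <- 200
toy <- data.frame(x1 = runif(n), x2 = runif(n))
toy$y <- factor(ifelse(toy$x1 + rnorm(n, sd = 0.1) > 0.5, 1, 0))

# Bagging step 1: fit each tree on a bootstrap resample of the rows
n_trees <- 25
trees <- lapply(seq_len(n_trees), function(i) {
  boot <- toy[sample(n, n, replace = TRUE), ]
  rpart(y ~ ., data = boot, method = "class")
})

# Bagging step 2: every tree votes and the majority class wins
votes <- sapply(trees, function(tr) {
  as.character(predict(tr, newdata = toy, type = "class"))
})
bagged <- apply(votes, 1, function(v) names(which.max(table(v))))

mean(bagged == as.character(toy$y))  # training accuracy of the ensemble
```

Averaging many trees trained on different resamples reduces the variance of a single deep tree, which is the main motivation for the ensemble.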

2.2 Code implementation

The most common package for building random forests is randomForest. Its randomForest() function builds a random forest model, and its three main parameters are formula (the model formula), ntree (the number of decision trees in the forest), and mtry (the number of features sampled when splitting a decision tree node).

library(randomForest)

library(tidyverse)

tmp<-data.frame(x1=runif(100,0,1),x2=runif(100,0,1),x3=runif(100,0,1),x4=runif(100,0,1),x5=runif(100,0,1),x6=runif(100,0,1),x7=runif(100,0,1),x8=runif(100,0,1),y=sample(c(1,0),100,TRUE))

tmp$y<-as.factor(tmp$y)

rf<-randomForest(y~.,data=tmp,proximity=TRUE)

The variable importance of a random forest can be visualized with the varImpPlot() function. Calling plot() directly on the model plots the error rates against the number of trees.
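The sketch below puts these pieces together on simulated data of the same shape as tmp. The ntree and mtry values are illustrative choices rather than values from the book, and importance=TRUE is added so that the permutation-based measure is also computed.

```r
library(randomForest)

set.seed(1)

# Simulated data shaped like the article's tmp: 8 uniform features, random binary label
tmp <- data.frame(matrix(runif(800), ncol = 8))
names(tmp) <- paste0("x", 1:8)
tmp$y <- as.factor(sample(c(1, 0), 100, replace = TRUE))

# ntree and mtry set explicitly (illustrative values)
rf <- randomForest(y ~ ., data = tmp, ntree = 500, mtry = 3, importance = TRUE)

importance(rf)   # matrix of importance measures, one row per feature
varImpPlot(rf)   # dot chart of the same measures
plot(rf)         # OOB and per-class error rates against the number of trees
```

Because the label here is pure noise, no feature should stand out in the importance plot and the out-of-bag error should stay close to chance.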

Use the tuneRF() function to search for the optimal number of features (mtry). Here x is the set of features used to train the model, y is the label, mtryStart is the value of mtry at which the search starts, ntreeTry is the number of trees used at each tuning step, and stepFactor is the factor by which mtry is inflated or deflated at each iteration.

set.seed(1)

mtry<-tuneRF(x=tmp[,-9],y=tmp[,9])

pre<-predict(rf,newdata=tmp,type="prob")
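A fuller sketch of the tuning and prediction steps follows, with the tuneRF() parameters from the text spelled out. The specific values (mtryStart=2, ntreeTry=200, stepFactor=2, improve=0.01) are assumptions chosen for illustration, and the data is simulated in the same shape as tmp.

```r
library(randomForest)

set.seed(1)

# Simulated data shaped like the article's tmp: 8 uniform features, random binary label
tmp <- data.frame(matrix(runif(800), ncol = 8))
names(tmp) <- paste0("x", 1:8)
tmp$y <- as.factor(sample(c(1, 0), 100, replace = TRUE))

# Search over mtry: start at 2, multiply or divide by 2 at each step
tuned <- tuneRF(x = tmp[, -9], y = tmp[, 9],
                mtryStart = 2, ntreeTry = 200, stepFactor = 2,
                improve = 0.01, trace = FALSE, plot = FALSE)

# First column holds the mtry values tried, second column the OOB error
best_mtry <- tuned[which.min(tuned[, 2]), 1]

# Refit with the selected mtry and predict class probabilities
rf <- randomForest(x = tmp[, -9], y = tmp[, 9], mtry = best_mtry, ntree = 200)
pre <- predict(rf, newdata = tmp, type = "prob")
head(pre)
```

Each row of pre holds the share of trees voting for each class. Predicting on the training rows is optimistic; calling predict(rf, type="prob") without newdata returns out-of-bag estimates instead.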

边栏推荐

- 科普 | NFT的类型有哪些(上)

- Redis Foundation

- EndnoteX9简介及基本教程使用说明

- RPA超自动化 | 农耕记携手云扩加速财务智能化运营

- How to use the transaction code sat to find the name of the background storage database table corresponding to a sapgui screen field

- How to import workflows provided on SAP API hub to sap BTP

- 学习位段(1)

- Codeworks round 739 (Div. 3) problem solving Report

- Leetcode-322- change exchange

- 联调这夜,我把同事打了...

猜你喜欢

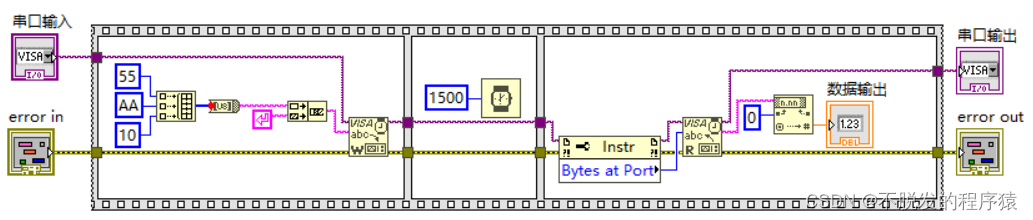

LabVIEW controls Arduino to realize infrared ranging (advanced chapter-6)

学习位段(1)

Leetcode-43- string multiplication

RPA超自动化 | 农耕记携手云扩加速财务智能化运营

Matlab: 文件夹锁定问题的解决

Leetcode-155-minimum stack

高考结束,人生才刚刚开始,10年职场老鸟给的建议

Leetcode-32- longest valid bracket

Release of version 5.6 of rainbow, add multiple installation methods, and optimize the topology operation experience

![[today in history] June 11: the co inventor of Monte Carlo method was born; Google launched Google Earth; Google acquires waze](/img/eb/147d4aac20639d50b204dcf424c9e2.png)

[today in history] June 11: the co inventor of Monte Carlo method was born; Google launched Google Earth; Google acquires waze

随机推荐

Building a custom CNN model: identifying covid-19

C语言实现迷宫问题

Leetcode-110-balanced binary tree

Why microservices are needed

How to view the installation date of the win system

【学术相关】申请审核制下,到双一流大学读博的难度有多大?

LabVIEW控制Arduino实现红外测距(进阶篇—6)

Flutter series: detailed explanation of container layout commonly used in flutter

Supplementary questions for the training ground on September 11, 2021

揭秘爆款的小程序,为何一黑到底

C语言实现八种排序(3)

Example of using zypper command

Codeforces Round #742 (Div. 2) F. One-Four Overload

如何使用事物码 SAT 查找某个 SAPGUI 屏幕字段对应的后台存储数据库表的名称试读版

In the future, cloud expansion technology is expected to be selected as a specialized, special and new enterprise in Shanghai

实现栈和队列

建造者模式

Leetcode-129- sum of numbers from root node to leaf node

【历史上的今天】6 月 11 日:蒙特卡罗方法的共同发明者出生;谷歌推出 Google 地球;谷歌收购 Waze

The same efficiency tool for leading enterprises to promote smart finance. Let's have a quick look?