How to ensure message ordering, prevent message loss, and avoid duplicate consumption

2022-06-11 00:43:00 【Wenxiaowu】

How to ensure the order of messages

Business scenario: we need to synchronize data from one MySQL database to another using the binlog. Suppose the binlog records an insert, an update, and a delete on the same row, and we write these three operations to the MQ as three messages in that order. Consumed in that order, the row ends up deleted in the target database. However, if the three messages are consumed in the order delete, insert, update, the row ends up stored in the target database, which leaves the data inconsistent. Let's look at how to preserve message order in RabbitMQ.

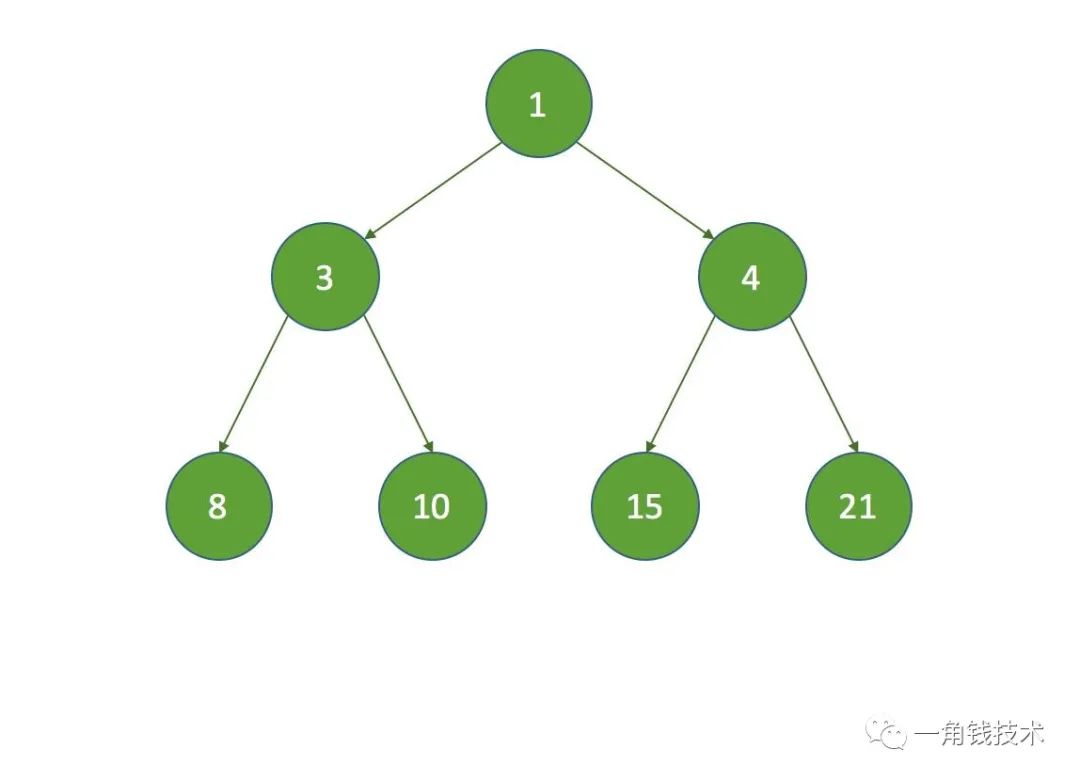

Note: each message in a queue is delivered to only one consumer, but when several consumers read from the same queue, the order in which they process messages is not guaranteed. As shown in the figure above, if the messages are consumed in the order B, A, C, the row ends up saved in the database. That is not the result we expect, and if it happens often, the data in the target database is wrong and the synchronization is pointless.

As shown in the figure, there are two ways to preserve message order with RabbitMQ. The first is to split the traffic across multiple queues, each with exactly one consumer, so that messages that must stay in order all go to the same queue; this means more queues, which is somewhat cumbersome. The second is to keep a single queue with a single consumer, have that consumer line messages up in in-memory queues, and then dispatch them to different workers downstream.
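Both approaches depend on sending related messages to the same queue. The sketch below shows one possible way to do that, by hashing a business key to a fixed queue index. The names `NUM_QUEUES`, `queue_index`, and `route`, and the `row-1`/`row-2` keys, are hypothetical, and plain Python lists stand in for RabbitMQ queues or in-memory queues.

```python
import zlib
from collections import defaultdict

NUM_QUEUES = 3  # hypothetical number of queues (or in-memory queues)


def queue_index(key: str, num_queues: int = NUM_QUEUES) -> int:
    """Map a business key (e.g. a row's primary key) to a fixed queue."""
    # crc32 is stable across processes, unlike the built-in hash() for str.
    return zlib.crc32(key.encode("utf-8")) % num_queues


def route(messages):
    """Distribute (key, operation) pairs into per-queue FIFO lists."""
    queues = defaultdict(list)
    for key, op in messages:
        queues[queue_index(key)].append((key, op))
    return queues


# Interleaved binlog events for two rows.
binlog_events = [
    ("row-1", "insert"), ("row-2", "insert"),
    ("row-1", "update"), ("row-2", "delete"),
    ("row-1", "delete"),
]
queues = route(binlog_events)

# Every operation on row-1 sits in one queue, in its original order.
row1_ops = [op for key, op in queues[queue_index("row-1")] if key == "row-1"]
```

Because each queue is drained by a single consumer (or a single worker), operations on the same row are applied in the order they were written, while different rows can still be processed in parallel.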

How to ensure that messages are not lost?

1. The producer loses the message while sending it to the MQ

Solution: enable confirm mode on the producer side. Each message you write is assigned a unique id, and once the message has been written to RabbitMQ, RabbitMQ sends back an ack telling you the message is OK.
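The bookkeeping behind confirm mode can be sketched without a broker. The following is a minimal illustration, not RabbitMQ's client API: `ConfirmTracker` and the `send` callback are hypothetical names, and the resend-on-nack step is an assumption about how a producer might react to a negative acknowledgement.

```python
import uuid


class ConfirmTracker:
    """Tracks published messages until the broker confirms them."""

    def __init__(self):
        self.pending = {}  # message id -> body still awaiting confirmation

    def publish(self, body, send):
        """Assign a unique id, remember the message, then hand it to `send`."""
        msg_id = str(uuid.uuid4())
        self.pending[msg_id] = body
        send(msg_id, body)
        return msg_id

    def on_ack(self, msg_id):
        """Broker confirmed the write: stop tracking the message."""
        self.pending.pop(msg_id, None)

    def on_nack(self, msg_id, send):
        """Broker reported a failure: resend the message under the same id."""
        if msg_id in self.pending:
            send(msg_id, self.pending[msg_id])


sent = []  # stand-in for the network: records every send attempt


def send(msg_id, body):
    sent.append((msg_id, body))


tracker = ConfirmTracker()
first = tracker.publish("insert row-1", send)
second = tracker.publish("update row-1", send)

tracker.on_ack(first)          # first message is safely stored
tracker.on_nack(second, send)  # second message is sent again
```

Anything left in `pending` is a message the producer cannot yet assume the broker has stored.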

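2. The MQ has received a message and is holding it in memory, then goes down before the message is consumed, so the data is lost

Solution: enable persistence in the MQ so that in-memory data is written to disk. In RabbitMQ this means declaring the queue as durable and publishing the messages as persistent.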

3. The consumer has just received the message and crashes before processing it, but the MQ believes the consumer has finished

Solution: use the ack mechanism that RabbitMQ provides. Put simply, turn off RabbitMQ's automatic ack (this is done through an API call) and send the ack from your own code only once you are sure processing is complete. If the consumer dies before it finishes, no ack is sent, so RabbitMQ considers the message unprocessed and assigns it to another consumer. The message is not lost.
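The effect of acknowledging only after processing can be shown with a toy in-memory queue. This is a simplified model rather than RabbitMQ's real behavior or API; `SimpleQueue`, `consume`, and `consumer_died` are hypothetical names.

```python
from collections import deque


class SimpleQueue:
    """Toy broker queue: a message is removed only after an explicit ack."""

    def __init__(self, messages):
        self.ready = deque(messages)
        self.unacked = {}  # delivery tag -> message delivered but not acked
        self._next_tag = 0

    def get(self):
        """Deliver the next message and keep it as unacked."""
        msg = self.ready.popleft()
        self._next_tag += 1
        self.unacked[self._next_tag] = msg
        return self._next_tag, msg

    def ack(self, tag):
        """Consumer finished processing: forget the message."""
        del self.unacked[tag]

    def consumer_died(self):
        """Requeue everything the dead consumer never acknowledged."""
        for tag in sorted(self.unacked, reverse=True):
            self.ready.appendleft(self.unacked.pop(tag))


def consume(queue, handler):
    tag, msg = queue.get()
    handler(msg)    # may raise before ack() is reached
    queue.ack(tag)  # ack only after processing succeeded


def crash(msg):
    raise RuntimeError("consumer crashed mid-processing")


q = SimpleQueue(["order-1"])
processed = []

try:
    consume(q, crash)          # first consumer dies before acking
except RuntimeError:
    q.consumer_died()          # broker notices and requeues the message

consume(q, processed.append)   # a second consumer handles it
```

Note that the same mechanism means a message can be delivered more than once, which is why the consumer also has to be idempotent.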

How to ensure that messages are not consumed repeatedly

Business scenario: suppose a system consumes a message and inserts a row into the database. If the same message is delivered twice, you insert two rows, and the data is wrong. But if, on the second delivery, you check whether the message has already been consumed and discard it if so, only one row is kept and the data stays correct.

A message delivered twice leaves only one row in the database. This is what makes the system idempotent.

Idempotency, put simply, means that when the same data or the same request is sent to you many times, the resulting data does not change and no errors are introduced.

Solution:

If you are writing a row to the database, first look it up by primary key. If the row already exists, do not insert it again; just update it.

If you are writing to Redis, there is no problem: every write is a set, which is naturally idempotent.

If neither scenario applies, things are a little more complicated. Have the producer attach a globally unique id to every message, something like an order id. On the consumer side, look that id up, for example in Redis, to see whether the message has been consumed before. If it has not, process it and then write the id to Redis. If it has, do not process it, so the same message is never handled twice.
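The id check described above might look like the following sketch. A Python `set` stands in for Redis and a list stands in for the database table; `handle`, `processed_ids`, `rows`, and the `order-1001` style ids are hypothetical.

```python
processed_ids = set()  # stand-in for a Redis set of consumed message ids
rows = []              # stand-in for the target database table


def handle(message):
    """Process a message at most once, keyed by its globally unique id."""
    msg_id = message["id"]
    if msg_id in processed_ids:
        return False                 # already consumed: skip it
    rows.append(message["payload"])  # the actual business operation
    processed_ids.add(msg_id)        # record the id after success
    return True


results = [handle(m) for m in [
    {"id": "order-1001", "payload": "create order 1001"},
    {"id": "order-1001", "payload": "create order 1001"},  # redelivery
    {"id": "order-1002", "payload": "create order 1002"},
]]
```

In this single-process sketch the check and the write cannot interleave. With several consumers sharing one store, the lookup and the write would need to be a single atomic operation to avoid two consumers both passing the check.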

You can also rely on a unique key in the database to ensure that duplicate data is never inserted. Because of the unique key constraint, inserting a duplicate row only raises an error and leaves no dirty data in the database.
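As a small illustration of the unique key approach, the sketch below uses Python's built-in `sqlite3` module with an in-memory database. The `orders` table, its columns, and `insert_order` are hypothetical; the same idea applies to a unique key in MySQL.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id TEXT PRIMARY KEY, amount INTEGER)")


def insert_order(order_id, amount):
    """Insert once; a duplicate key raises IntegrityError and is ignored."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO orders (order_id, amount) VALUES (?, ?)",
                (order_id, amount),
            )
        return True
    except sqlite3.IntegrityError:
        return False


first = insert_order("order-1001", 50)
second = insert_order("order-1001", 50)  # same message delivered again
count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

The second insert fails on the primary key constraint, so the table still holds a single row for `order-1001`.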
