
Meituan Takes First Place on the FewCLUE Few-Shot Learning Leaderboard: Prompt Learning + Self-Training in Practice

2022-06-12 18:51:00 【Meituan technical team】

Recently, FSL++, the few-shot learning model built by the semantic understanding team of the NLP Center in Meituan's Search and NLP Department, took first place on FewCLUE, the authoritative benchmark for Chinese few-shot language understanding. It ranked first on the natural language inference task (OCNLI) and, with very few samples (only a little over 100 per category), exceeded human accuracy on the news classification (TNEWS) and scientific literature classification (CSLDCP) tasks.

1 Overview

CLUE (Chinese Language Understanding Evaluation)<sup>[1]</sup> is the authoritative leaderboard for Chinese language understanding. It covers text classification, sentence-pair relations, reading comprehension, and many other semantic analysis and understanding subtasks, and has had a considerable influence on both academia and industry.

FewCLUE<sup>[2,3]</sup> is the CLUE sub-leaderboard dedicated to evaluating Chinese few-shot learning. It aims to combine the generality and strong generalization ability of pre-trained language models to explore the best few-shot learning models and their practice for Chinese. Some FewCLUE datasets have only a little over 100 labeled samples, so the benchmark measures how well a model generalizes with very few labeled samples. Since its release it has attracted participants from NetEase, WeChat AI, Alibaba, the IDEA research institute, the Inspur Artificial Intelligence Research Institute, and other companies and research institutes. Not long ago, FSL++, the few-shot learning model from the semantic understanding team of the NLP Center in Meituan's Search and NLP Department, reached the top of the FewCLUE leaderboard and achieved state-of-the-art (SOTA) results.

2 Method

Although large-scale pre-trained models achieve very good results on major tasks, they still need a lot of annotated data for specific tasks. Meituan's businesses contain a rich variety of NLP scenarios, which often carry high manual annotation costs. In the early stage of a business, or when a new requirement must be launched quickly, labeled samples are often insufficient, and the traditional Pretrain + Fine-Tune deep learning approach often fails to reach the required metrics. It is therefore necessary to study model training in few-shot scenarios.

This article presents FSL++, a joint training scheme that combines large models with few-shot learning. It integrates model structure selection, large-scale pre-training, sample augmentation, ensemble learning, and self-training, and achieved excellent results on the FewCLUE leaderboard, the authoritative benchmark for Chinese language understanding. Performance on some tasks exceeds the human level, while on others (such as CLUEWSC) there is still room for improvement.

After FewCLUE was released, NetEase Fuxi used its self-developed EET model<sup>[4]</sup>, strengthened the model's semantic understanding through a second round of pre-training, and then added templates for multi-task learning. The Erlangshen model from the IDEA research institute<sup>[5]</sup> trains a large model on top of BERT with more advanced pre-training techniques, and adds a Masked Language Model (MLM) objective with a dynamic Mask strategy as an auxiliary task during downstream fine-tuning. These methods all use Prompt Learning as the basic task architecture. Compared with these self-developed large models, our method mainly adds sample augmentation, ensemble learning, and self-training on top of the Prompt Learning framework, which greatly improves task performance and robustness. It can also be applied to a wide range of pre-trained models, which makes it more flexible and convenient.

The overall structure of FSL++ is shown in Figure 2. For each task, the FewCLUE dataset provides 160 labeled samples and nearly 20,000 unlabeled samples. In this FewCLUE practice, we first build multi-template Prompt Learning in the Fine-Tune stage and apply augmentation strategies such as adversarial training, contrastive learning, and Mixup to the labeled data. Because these strategies rest on different augmentation principles, the resulting models can be considered significantly different from one another, so ensembling them should give better results. After training with the augmentation strategies we therefore have several weakly supervised models, which we use to predict on the unlabeled data and obtain pseudo-label distributions. We then combine the pseudo-label distributions predicted by the different augmented models into a single overall pseudo-label distribution for the unlabeled data, rebuild multi-template Prompt Learning, apply the augmentation strategies again, and pick the best strategy. Our experiments currently run only one iteration. More iterations are possible, but the gain shrinks as the number of iterations grows and is no longer significant.

2.1 Enhanced Pre-training

Pre-trained language models are trained on large unlabeled corpora. For example, RoBERTa<sup>[6]</sup> is trained on more than 160 GB of text, including encyclopedias, news articles, literary works, and Web content. The representations learned by these models achieve excellent performance on tasks whose datasets vary in size and come from many sources.

FSL++ uses RoBERTa-large as its base model and adopts two continued pre-training methods: Domain-Adaptive Pretraining (DAPT)<sup>[7]</sup>, which brings in domain knowledge, and Task-Adaptive Pretraining (TAPT)<sup>[7]</sup>, which brings in task knowledge. DAPT starts from the pre-trained model, continues training the language model on a large amount of unlabeled in-domain text, and then fine-tunes it on the dataset of the target task.

Continuing pre-training on text from the target domain improves the language model, especially on downstream tasks related to that domain, and the more relevant the pre-training text is to the task domain, the larger the improvement. In this practice, we ended up using a RoBERTa Large model pre-trained on the 100 GB CLUE Vocab<sup>[8]</sup> corpus, which covers entertainment programs, sports, health, international affairs, movies, celebrities, and other domains. TAPT starts from the pre-trained model and adds a small amount of unlabeled text directly related to the task for further pre-training. For TAPT, the pre-training data we chose was the unlabeled data that the FewCLUE leaderboard provides for each task.

In addition, for sentence-pair tasks such as the Chinese natural language inference task OCNLI and the Chinese dialogue short-text matching task BUSTM, we initialize from model parameters pre-trained on other sentence-pair datasets, such as the Chinese natural language inference dataset CMNLI and the Chinese short-text similarity dataset LCQMC. Compared with starting directly from the original model, this also yields some improvement.

2.2 Model Structure

FewCLUE contains several task forms, and we chose a suitable model structure for each. In text classification and machine reading comprehension (MRC) tasks, the category words themselves carry information, so these tasks are better modeled in Masked Language Model (MLM) form. Sentence-pair tasks judge the relationship between two sentences and more closely resemble the Next Sentence Prediction (NSP)<sup>[9]</sup> task. We therefore chose the PET<sup>[10]</sup> model for classification and reading comprehension tasks and the EFL<sup>[11]</sup> model for sentence-pair tasks. EFL can construct negative samples through global sampling and thereby learn a more robust classifier.

2.2.1 Prompt Learning

The main goal of Prompt Learning is to minimize the gap between the pre-training objective and the downstream fine-tuning objective. Existing pre-training tasks usually include an MLM loss, but downstream tasks typically do not use MLM and instead introduce a new classifier, which makes pre-training and downstream training inconsistent. Prompt Learning introduces no additional classifier or other parameters. Instead, it concatenates a template (Template: a language fragment attached to the input so that the task takes MLM form) and uses a label-word mapping (Verbalizer: a word in the vocabulary chosen for each label, which sets the prediction target of the MLM task), so that the model can be applied to downstream tasks with only a few samples.

Take the e-commerce review sentiment analysis task EPRSTMT shown in Figure 3 as an example. Given the text "This movie is really good, it's worth watching a second time!", traditional text classification attaches a classifier to the Embedding at the [CLS] position and maps it to a binary label (0: negative, 1: positive). This approach has to train a new classifier in a few-shot scenario, and it is hard to get good results. The Prompt Learning approach instead creates the template "This is a [MASK] review.", concatenates it with the original text, has the language model predict the word at the [MASK] position during training, and then maps that word to the corresponding category (good: positive, bad: negative).
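The template and label-word mapping described above can be sketched in a few lines. This is a toy illustration only: `fake_probs` stands in for the probabilities a masked language model would assign at the [MASK] position, the function names are hypothetical, and placing the template before the text is an assumption.

```python
# Toy sketch of PET-style prompting: a template plus a verbalizer.
TEMPLATE = "This is a [MASK] review."
VERBALIZER = {"positive": "good", "negative": "bad"}  # label -> label word


def build_prompt(text, template=TEMPLATE):
    """Concatenate the template with the original text (order is an assumption)."""
    return f"{template} {text}"


def classify(mask_word_probs, verbalizer=VERBALIZER):
    """Return the label whose label word is most probable at [MASK].

    mask_word_probs maps word -> probability and stands in for the MLM head
    output at the [MASK] position (made-up values, not real model output).
    """
    return max(verbalizer, key=lambda label: mask_word_probs.get(verbalizer[label], 0.0))


prompt = build_prompt("This movie is really good, it's worth watching a second time!")
fake_probs = {"good": 0.71, "bad": 0.04, "long": 0.02}
label = classify(fake_probs)
```

No new classifier head appears anywhere in the sketch: the only task-specific pieces are the template string and the label-to-word dictionary, which is why the approach suits few-shot settings.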

With so little data, it is sometimes hard to identify the best-performing template and label-word mapping. Multi-template and multi-label-word designs can therefore be used: design several templates and combine their results into the final prediction, or design a one-to-many label-word mapping in which one label corresponds to several words. For the example above, the following template combinations can be designed (left: multiple templates for the same sentence; right: multi-label-word mapping).

Task example
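One simple way to combine several templates with a one-to-many label-word mapping is sketched below. The averaging rule, the label words, and the probability values are all assumptions made for illustration; the article does not state how FSL++ merges template outputs.

```python
def label_scores(mask_word_probs, verbalizer):
    """Sum the [MASK] probabilities of every word mapped to each label."""
    return {
        label: sum(mask_word_probs.get(word, 0.0) for word in words)
        for label, words in verbalizer.items()
    }


def ensemble_templates(per_template_probs, verbalizer):
    """Average label scores over templates, then normalize so they sum to 1."""
    totals = {label: 0.0 for label in verbalizer}
    for probs in per_template_probs:
        for label, score in label_scores(probs, verbalizer).items():
            totals[label] += score / len(per_template_probs)
    z = sum(totals.values()) or 1.0
    return {label: score / z for label, score in totals.items()}


# Hypothetical one-to-many verbalizer and made-up [MASK] probabilities
# produced by two different templates for the same sentence.
verbalizer = {"positive": ["good", "great"], "negative": ["bad", "poor"]}
per_template = [
    {"good": 0.5, "great": 0.2, "bad": 0.1},
    {"good": 0.3, "poor": 0.4, "bad": 0.1},
]
dist = ensemble_templates(per_template, verbalizer)
```

Here the second template alone would vote "negative", but the averaged distribution still favors "positive", which is the kind of smoothing that makes multi-template designs less sensitive to one poorly chosen template.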

2.2.2 EFL

The EFL model concatenates the two sentences and attaches a classifier to the Embedding at the [CLS] position of the output layer to make the prediction. Besides the training-set samples, EFL also constructs negative samples during training: in each Batch, sentences are randomly chosen from other data as negative samples, which serves as data augmentation. Although EFL needs to train a new classifier, there are many public textual entailment and sentence-pair datasets, such as CMNLI and LCQMC. The model can first continue training (continue-train) on these samples, and the learned parameters can then be transferred to the few-shot scenario and further fine-tuned on the FewCLUE task datasets.
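A minimal sketch of the negative-sample construction described above, assuming sentence pairs are plain strings and that a randomly drawn sentence can be treated as unrelated (a noisy heuristic). The function name, the `num_neg` parameter, and the example sentences are hypothetical.

```python
import random


def build_efl_batch(pairs, pool, num_neg=1, seed=0):
    """Build (sentence_a, sentence_b, label) triples for one batch.

    pairs: related (sentence_a, sentence_b) pairs from the training set.
    pool:  sentences from other data, used to draw random negatives.
    label: 1 = related, 0 = randomly constructed negative.
    """
    rng = random.Random(seed)
    batch = []
    for a, b in pairs:
        batch.append((a, b, 1))
        # Never reuse the pair's own sentences as a negative.
        candidates = [s for s in pool if s not in (a, b)]
        for neg in rng.sample(candidates, min(num_neg, len(candidates))):
            batch.append((a, neg, 0))
    return batch


pairs = [
    ("How do I reset my password?", "I forgot my password, how can I change it?"),
    ("Where is my order?", "When will my package arrive?"),
]
pool = [b for _, b in pairs] + ["Is the restaurant open on Sunday?"]
batch = build_efl_batch(pairs, pool)
```

Each triple would then be encoded as one concatenated input and scored by the [CLS] classifier.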

Task example

2.3 Data Augmentation

Data augmentation methods fall mainly into sample augmentation and Embedding augmentation. In NLP, the goal of data augmentation is to expand the text data without changing its semantics. The main methods include simple text replacement and using a language model to generate similar sentences. We tried EDA and similar methods to expand the text data, but changing a single word can flip the meaning of the whole sentence, and the replaced text carries a lot of noise, so it is hard to generate enough augmented data with simple rule-based sample changes. Embedding augmentation no longer operates on the input text. It works at the Embedding level instead, improving the robustness of the model by adding perturbations or interpolation to the Embedding.

In this practice we therefore mainly use Embedding augmentation. The strategies we use are Mixup<sup>[12]</sup>, Manifold-Mixup<sup>[13]</sup>, adversarial training (AT)<sup>[14]</sup>, and the contrastive learning method R-Drop<sup>[15]</sup>. For a detailed introduction to these strategies, see our earlier technical blog post "Small sample learning and its application in meituan scene"<sup>[16]</sup>.

Mixup constructs new combined samples and combined labels through a simple linear transformation of the input data, which strengthens the generalization ability of the model. On a variety of supervised and semi-supervised tasks, Mixup can substantially improve generalization. Mixup can be viewed as a regularization operation: it requires the combined features the model produces at the feature level to satisfy a linear constraint, and uses that constraint to regularize the model. Intuitively, when the model's input is a linear combination of two other inputs, its output should be the same linear combination of the outputs obtained by feeding those two inputs in separately, which in effect requires the model to be approximately a linear system.
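The linear combination behind Mixup can be written out directly. The sketch below follows the formulation in the Mixup paper<sup>[12]</sup>, where the mixing weight is drawn from a Beta(alpha, alpha) distribution; plain Python lists stand in for embedding vectors and one-hot labels, and the value of `alpha` is an arbitrary choice for illustration.

```python
import random


def mixup(x_i, y_i, x_j, y_j, lam):
    """Return lam * (x_i, y_i) + (1 - lam) * (x_j, y_j), element by element."""
    x = [lam * a + (1 - lam) * b for a, b in zip(x_i, x_j)]
    y = [lam * a + (1 - lam) * b for a, b in zip(y_i, y_j)]
    return x, y


def sample_lambda(alpha=0.5, rng=random):
    """Draw the mixing weight from Beta(alpha, alpha)."""
    return rng.betavariate(alpha, alpha)


# Two toy samples with one-hot labels, mixed with a fixed weight of 0.75.
x_mix, y_mix = mixup([1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0], lam=0.75)
```

The mixed label is a soft label, so the training loss has to accept a probability distribution as its target rather than a single class index.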

Manifold Mixup generalizes the Mixup operation above to features. Because features carry higher-order semantic information, interpolating along their dimensions may produce more meaningful samples. In models such as BERT<sup>[9]</sup> and RoBERTa<sup>[6]</sup>, a layer index k is chosen at random and Mixup interpolation is applied to the feature representation of that layer. Ordinary Mixup interpolates at the Embedding of the output layer, whereas Manifold Mixup adds the same interpolation at a random layer inside the language model's Transformers structure.
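To make the "random layer k" idea concrete, here is a toy forward pass in which two inputs run separately through the first k layers, are interpolated, and then continue as a single hidden state. The two "layers" are stand-in functions chosen for illustration, not Transformer layers.

```python
import random


def manifold_mixup_forward(layers, x_i, x_j, lam, k):
    """Run both inputs through layers[:k], mix the hidden states, then finish."""
    h_i, h_j = x_i, x_j
    for layer in layers[:k]:
        h_i, h_j = layer(h_i), layer(h_j)
    h = [lam * a + (1 - lam) * b for a, b in zip(h_i, h_j)]
    for layer in layers[k:]:
        h = layer(h)
    return h


def double(v):
    return [2.0 * a for a in v]


def relu(v):
    return [max(0.0, a) for a in v]


layers = [double, relu]  # toy stand-ins for encoder layers
k = random.Random(0).randrange(len(layers) + 1)  # random mixing depth
out_random = manifold_mixup_forward(layers, [1.0, -1.0], [-1.0, 1.0], 0.5, k)
out_input = manifold_mixup_forward(layers, [1.0, -1.0], [-1.0, 1.0], 0.5, k=0)
out_top = manifold_mixup_forward(layers, [1.0, -1.0], [-1.0, 1.0], 0.5, k=2)
```

Because the toy network is nonlinear, mixing before the layers (`out_input`) and after them (`out_top`) gives different results, which is exactly why the choice of layer matters.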

Adversarial training adds small perturbations to the input samples that significantly increase the model's Loss, and trains a model that can correctly recognize both the original samples and the adversarial samples. The basic principle is to construct adversarial samples by adding perturbations and feed them to the model for training, which improves robustness against adversarial samples and also improves performance and generalization. An adversarial sample needs two properties:

- Relative to the original input, the added perturbation is small.

- It can cause the model to make a mistake.

Adversarial training serves two purposes: improving the model's robustness to malicious attacks and improving its generalization ability.
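The article does not say which adversarial training variant FSL++ uses. As one common instance, the sketch below applies an FGM-style perturbation, a step of fixed norm `epsilon` along the gradient of the loss with respect to the embedding, to a toy logistic model whose gradient can be written analytically. The model, the values, and `epsilon` are all illustrative assumptions.

```python
import math


def loss_and_grad(e, w, y):
    """Logistic loss for label y in {-1, +1} and its gradient w.r.t. embedding e."""
    margin = y * sum(a * b for a, b in zip(w, e))
    loss = math.log1p(math.exp(-margin))
    coeff = -y / (1.0 + math.exp(margin))  # derivative of the loss w.r.t. w.e
    return loss, [coeff * a for a in w]


def fgm_perturb(e, grad, epsilon=0.1):
    """Move e by epsilon along the normalized gradient (small, loss-increasing)."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm == 0.0:
        return list(e)
    return [a + epsilon * g / norm for a, g in zip(e, grad)]


e, w, y = [1.0, 2.0], [0.5, -0.5], 1
clean_loss, grad = loss_and_grad(e, w, y)
e_adv = fgm_perturb(e, grad, epsilon=0.1)
adv_loss, _ = loss_and_grad(e_adv, w, y)
total_loss = clean_loss + adv_loss  # train on clean and adversarial samples together
```

The perturbation has norm `epsilon`, so it satisfies the "small" property, and it raises the loss, which is the first-order proxy for "makes the model wrong".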

R-Drop applies Dropout to the same sentence twice and forces the output probabilities of the different sub-models produced by Dropout to be consistent. Dropout works well, but it makes training and inference inconsistent. To alleviate this inconsistency, R-Drop regularizes Dropout: it constrains the output distributions produced by the two sub-models by introducing a KL-divergence loss as the distribution metric, so that the two distributions generated from the same sample within a Batch are as close as possible. Concretely, for each training sample, R-Drop minimizes the KL divergence between the output probabilities of the sub-models generated by different Dropout masks. As a training idea, R-Drop can be used in most supervised or semi-supervised training and is very general.
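The R-Drop objective for a single sample can be sketched as follows, using the form given in the R-Drop paper<sup>[15]</sup>: the negative log-likelihood of both forward passes plus a weighted symmetric KL term. The two probability vectors stand in for the outputs of two Dropout passes, and the value of `alpha` is an illustrative assumption.

```python
import math


def kl(p, q):
    """KL(p || q) for discrete distributions; assumes every q[i] > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def cross_entropy(p, target):
    """Negative log-likelihood of the gold class index."""
    return -math.log(p[target])


def r_drop_loss(p1, p2, target, alpha=1.0):
    """NLL of both passes plus alpha times the symmetric KL between them."""
    nll = cross_entropy(p1, target) + cross_entropy(p2, target)
    sym_kl = 0.5 * (kl(p1, p2) + kl(p2, p1))
    return nll + alpha * sym_kl


# Made-up outputs of two Dropout passes over the same sentence.
p1, p2 = [0.7, 0.3], [0.6, 0.4]
loss = r_drop_loss(p1, p2, target=0, alpha=1.0)
```

When the two passes agree exactly, the KL term vanishes and the loss reduces to ordinary cross-entropy on both passes.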

Of the three augmentation strategies we use, Mixup linearly interpolates two samples at the Embedding of the language model's output layer and at the output of a random Transformers layer inside the language model; adversarial training adds a small perturbation to the sample; and contrastive learning applies Dropout to the same sentence twice to form a positive pair, then uses KL divergence to keep the two sub-models consistent. All three strategies operate on the Embedding to strengthen generalization, and the models obtained with different strategies have different preferences, which sets up the ensemble learning in the next step.

2.4 Ensemble Learning & Self-Training

Ensemble learning combines several weakly supervised models to obtain a better, more comprehensive strongly supervised model. The underlying idea is that even if one weak classifier makes a wrong prediction, the other weak classifiers can correct it. If the models being combined differ significantly, ensembling usually gives better results.

Self-training uses a small amount of labeled data and a large amount of unlabeled data to train the model jointly. A trained classifier first predicts labels for all the unlabeled data. The labels with high confidence are then selected as pseudo-labeled data, which is combined with the manually labeled training data to retrain the classifier.

Ensemble learning + self-training is a scheme that can exploit both multiple models and unlabeled data. The general steps of ensemble learning are: train several different weakly supervised models, use each to predict the label probability distribution of the unlabeled data, and compute the weighted sum of these distributions to obtain the pseudo-label probability distribution of the unlabeled data. Self-training here means training one model to combine the others. Its general steps are: train multiple Teacher models, then have a Student model learn the Soft Prediction of the high-confidence samples in the pseudo-label probability distribution. The Student model serves as the final strong learner.
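The two steps above, a weighted sum of Teacher distributions followed by selection of high-confidence soft targets for the Student, can be sketched as below. Equal weights and the threshold value are assumptions made for illustration; the article does not give the weights or thresholds used in FSL++.

```python
def ensemble_distribution(model_probs, weights=None):
    """Weighted average of the label distributions predicted by several models."""
    n = len(model_probs)
    weights = weights or [1.0 / n] * n
    total = sum(weights)
    num_classes = len(model_probs[0])
    return [
        sum(w * p[c] for w, p in zip(weights, model_probs)) / total
        for c in range(num_classes)
    ]


def select_soft_targets(unlabeled, teacher_outputs, threshold=0.75):
    """Keep (text, soft distribution) pairs whose top probability passes threshold."""
    selected = []
    for text, probs in zip(unlabeled, teacher_outputs):
        dist = ensemble_distribution(probs)
        if max(dist) >= threshold:
            selected.append((text, dist))
    return selected


# Made-up predictions from two Teacher models on two unlabeled texts.
unlabeled = ["text A", "text B"]
teacher_outputs = [
    [[0.9, 0.1], [0.7, 0.3]],  # Teachers agree on class 0
    [[0.6, 0.4], [0.4, 0.6]],  # Teachers disagree, so the ensemble is uncertain
]
soft_targets = select_soft_targets(unlabeled, teacher_outputs)
```

The Student would then be trained against the full soft distribution of each selected sample rather than a hard label.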

In this FewCLUE practice, we first build multi-template Prompt Learning in the Fine-Tune stage and apply augmentation strategies such as adversarial training, contrastive learning, and Mixup to the labeled data. Because these strategies rest on different augmentation principles, the resulting models can be considered significantly different, so ensembling them should give better results.

After training with the augmentation strategies, we have several weakly supervised models, which we use to predict on the unlabeled data and obtain pseudo-label distributions. We then combine the distributions predicted by the different augmented models into one overall pseudo-label distribution for the unlabeled data. When filtering the pseudo-labeled data, we do not necessarily pick the samples with the highest confidence: if every augmented model gives a sample high confidence, the sample is probably easy to learn and not necessarily of much value.

We combine the confidences given by the augmented models and try to choose samples that have high confidence but are not easy to learn (for example, samples on which the models' predictions are not all consistent). We then use the union of the labeled data and the pseudo-labeled data to rebuild multi-template Prompt Learning, apply the augmentation strategies again, and pick the best strategy. Our experiments currently run only one iteration. More iterations are possible, but the gain shrinks as the number of iterations grows and is no longer significant.

3 Experimental Results

3.1 Datasets

The FewCLUE leaderboard provides 9 tasks: 4 text classification tasks, 2 sentence-pair tasks, and 3 reading comprehension tasks. The text classification tasks are e-commerce review sentiment analysis, scientific literature classification, news classification, and App description topic classification. They cover short-text binary classification, short-text multi-class classification, and long-text multi-class classification. Some of these tasks have many categories, more than 100, and suffer from class imbalance. The sentence-pair tasks are natural language inference and short-text matching. The reading comprehension tasks are idiom reading comprehension (multiple-choice cloze), keyword discrimination, and pronoun disambiguation. Each task generally provides about 160 labeled samples and about 20,000 unlabeled samples. Because the long-text classification task has many categories and is very difficult, more labeled data is provided for it. Detailed task data is shown in Table 4.

3.2 Experimental Comparison

Table 5 compares the experimental results of different models and parameter scales. In the RoBERTa Base experiments, the PET/EFL models outperform the traditional direct Fine-Tune model by 2-28 percentage points (PP). Building on PET/EFL, we ran experiments on RoBERTa Large to explore how large models behave in few-shot scenarios. Compared with RoBERTa Base, the large model improves results by 0.5-13 PP. To make better use of domain knowledge, we further experimented with RoBERTa Large Clue, a model with enhanced pre-training on the CLUE dataset. The large model infused with domain knowledge improves results by a further 0.1-9 PP. All subsequent experiments are therefore based on RoBERTa Large Clue.

Table 6 shows the results of data augmentation and ensemble learning on the PET/EFL models. Even on the large model, the data augmentation strategies bring a gain of 0.8-9 PP, and ensemble learning & self-training improve performance by a further 0.4-4 PP.

In the ensemble learning + self-training step, we tried several filtering strategies:

- Select the samples with the highest confidence. This strategy brings a gain of less than 1 PP. Many of the pseudo-labeled samples with the highest confidence are ones on which the models agree with high confidence. These samples are easy to learn, so adding them yields limited benefit.

- Select samples that are both high-confidence and contested (at least one model's prediction disagrees with the others, but the overall confidence across models exceeds threshold 1). This strategy avoids particularly easy samples, and the threshold keeps out too much dirty data. It brings a gain of 0-3 PP.

- Combine the two strategies above. If the models' predictions for a sample agree, we select samples whose confidence is below threshold 2. If at least one model disagrees with the others, we select samples whose confidence is above threshold 3. This selects high-confidence samples to keep the output reliable while also selecting contested samples so that the filtered pseudo-labeled samples are reasonably hard to learn. It brings a gain of 0.4-4 PP.
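The three filtering strategies can be expressed as simple predicates over per-model predictions. In this sketch, "overall confidence" is taken to be the averaged probability of the ensemble label, and the threshold values are placeholders; neither detail is specified in the article.

```python
def summarize(model_probs):
    """Return (label, confidence, agree) for one unlabeled sample.

    label: argmax of the averaged distribution; confidence: its averaged
    probability; agree: True if every model's own argmax equals label.
    """
    n = len(model_probs)
    avg = [sum(p[c] for p in model_probs) / n for c in range(len(model_probs[0]))]
    label = max(range(len(avg)), key=avg.__getitem__)
    votes = [max(range(len(p)), key=p.__getitem__) for p in model_probs]
    return label, avg[label], all(v == label for v in votes)


def select(all_probs, strategy, top_k=1, t1=0.6, t2=0.9, t3=0.6):
    """Return indices of the samples kept by the named strategy."""
    stats = [summarize(p) for p in all_probs]
    if strategy == "top_confidence":  # strategy 1: most confident samples
        order = sorted(range(len(stats)), key=lambda i: stats[i][1], reverse=True)
        return sorted(order[:top_k])
    if strategy == "contested":  # strategy 2: disagreement plus threshold 1
        return [i for i, (_, c, agree) in enumerate(stats) if not agree and c > t1]
    if strategy == "combined":  # strategy 3: thresholds 2 and 3
        return [
            i for i, (_, c, agree) in enumerate(stats)
            if (agree and c < t2) or (not agree and c > t3)
        ]
    raise ValueError(f"unknown strategy: {strategy}")


# Made-up predictions from three models on four unlabeled samples.
all_probs = [
    [[0.99, 0.01], [0.98, 0.02], [0.97, 0.03]],  # unanimous, very confident
    [[0.90, 0.10], [0.80, 0.20], [0.40, 0.60]],  # contested, fairly confident
    [[0.70, 0.30], [0.60, 0.40], [0.65, 0.35]],  # unanimous, less confident
    [[0.55, 0.45], [0.45, 0.55], [0.40, 0.60]],  # contested, low confidence
]
kept_top = select(all_probs, "top_confidence", top_k=1)
kept_contested = select(all_probs, "contested", t1=0.6)
kept_combined = select(all_probs, "combined", t2=0.9, t3=0.6)
```

On this toy data the first strategy keeps only the trivially easy sample, while the combined strategy drops it and keeps the two samples that are confident enough to trust but not unanimous and near-certain.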

4 Applying the Few-Shot Learning Strategy in Meituan Scenarios

Meituan's businesses contain a rich variety of NLP scenarios, and some tasks can be framed as text classification or sentence-pair tasks. The few-shot learning strategy described above has been applied to various Meituan-Dianping scenarios, in the hope of training better models when data is scarce. In addition, the strategy has been widely applied to the NLP algorithm capabilities of Meituan's internal natural language processing (NLP) platform and has been deployed in many business scenarios with significant gains. Engineers inside Meituan can try the NLP Center's capabilities through this platform.

Text classification tasks

Medical aesthetics topic classification: notes on Meituan and Dazhong Dianping are classified by topic into 8 categories: novelty seeking, store visits, reviews, real cases, treatment process, pitfall avoidance, effect comparison, and popular science. When a user clicks a topic, the corresponding notes are returned. This is live on the encyclopedia page and the plan-page experience sharing of the medical aesthetics channel in the Meituan and Dazhong Dianping Apps. With 2,989 training samples, few-shot learning improved accuracy by 1.8 PP, reaching 89.24%.

Travel guide recognition: mines travel guides from UGC and notes to supply travel guide content, and is used in the guide module of the refined scenic-spot search to recall notes that describe travel guides. With 384 training samples, few-shot learning improved accuracy by 2 PP, reaching 87%.

Learning City text classification: Learning City (Meituan's internal knowledge base) contains a large amount of user text, which is divided into 17 categories. The existing model was trained on 700 samples. Few-shot learning improved its accuracy by 2.5 PP, reaching 84%.

Project screening: the review list pages of businesses such as LE life services and beauty currently mix all reviews together, which makes it hard for users to quickly find decision-making information. More structured classification labels are therefore needed. In these two businesses, few-shot learning reached 95%+ accuracy with 300-500 samples (gains of 1.5-4 PP across several datasets).

Sentence-pair tasks

Medical aesthetics efficacy tagging: recalls notes from Meituan and Dazhong Dianping by efficacy. Efficacy types include hydration, skin whitening, face slimming, wrinkle removal, and so on. It is live on the medical aesthetics channel page, where 110 efficacy types need to be tagged. With only 2,909 training samples, few-shot learning reached 91.88% accuracy (a gain of 2.8 PP).

Medical aesthetics brand tagging: upstream brand companies want to publicize and market their products, and content marketing is one of the mainstream and effective ways to do so. Brand tagging recalls, for each brand such as "Yifu spring" and "You can only", the notes that describe that brand in detail. There are 103 brands in total, and the feature is live in the medical aesthetics brand hall. With only 1,676 training samples, few-shot learning reached 88.59% accuracy (a gain of 2.9 PP).

5 Summary

For this leaderboard submission, we built a semantic understanding model based on RoBERTa and improved it through enhanced pre-training, the PET/EFL models, data augmentation, and ensemble learning & self-training. The model can handle text classification, sentence-pair inference, and several reading comprehension tasks.

Taking part in this evaluation gave us a deeper understanding of algorithms and research on few-shot natural language understanding, and let us thoroughly test how well cutting-edge algorithms work on Chinese, laying the foundation for further algorithm research and deployment. In addition, the task scenarios in this dataset closely resemble the business scenarios of Meituan's Search and NLP Department, and many of the model's strategies have been applied directly to real businesses.

About the authors

Luo Ying, Xu Jun, Xie Rui, and Wu Wei, all from the NLP Center of Meituan's Search and NLP Department.

References

- [1] FewCLUE GitHub project page

- [2] FewCLUE leaderboard page

- [3] CLUE GitHub project page

- [4] https://github.com/NetEase-FuXi/EET

- [5] https://github.com/IDEA-CCNL/Fengshenbang-LM

- [6] Liu, Yinhan, et al. "Roberta: A robustly optimized bert pretraining approach." arXiv preprint arXiv:1907.11692 (2019).

- [7] Gururangan, Suchin, et al. "Don't stop pretraining: adapt language models to domains and tasks." arXiv preprint arXiv:2004.10964 (2020).

- [8] Xu, Liang, Xuanwei Zhang, and Qianqian Dong. "CLUECorpus2020: A large-scale Chinese corpus for pre-training language model." arXiv preprint arXiv:2003.01355 (2020).

- [9] Devlin, Jacob, et al. "Bert: Pre-training of deep bidirectional transformers for language understanding." arXiv preprint arXiv:1810.04805 (2018).

- [10] Schick, Timo, and Hinrich Schütze. "It's not just size that matters: Small language models are also few-shot learners." arXiv preprint arXiv:2009.07118 (2020).

- [11] Wang, Sinong, et al. "Entailment as few-shot learner." arXiv preprint arXiv:2104.14690 (2021).

- [12] Zhang, Hongyi, et al. "mixup: Beyond empirical risk minimization." arXiv preprint arXiv:1710.09412 (2017).

- [13] Verma, Vikas, et al. "Manifold mixup: Better representations by interpolating hidden states." International Conference on Machine Learning. PMLR, 2019.

- [14] Verma, Vikas, et al. "Manifold mixup: Better representations by interpolating hidden states." International Conference on Machine Learning. PMLR, 2019.

- [15] Wu, Lijun, et al. "R-drop: regularized dropout for neural networks." Advances in Neural Information Processing Systems 34 (2021).

- [16] Small sample learning and its application in meituan scene

Read more technical articles of meituan technical team

front end | Algorithm | Back end | data | Security | Operation and maintenance | iOS | Android | test

| Reply to the official account menu bar dialog box 【2021 necessities 】、【2020 necessities 】、【2019 necessities 】、【2018 necessities 】、【2017 necessities 】 Other keywords , You can view the collection of technical articles of meituan technical team over the years .

边栏推荐

- leetcode:6096. Success logarithm of spells and potions [sort + dichotomy]

- 【历史上的今天】6 月 12 日:美国进入数字化电视时代;Mozilla 的最初开发者出生;3Com 和美国机器人公司合并

- Yoloe target detection notes

- Review of MySQL (VIII): Transactions

- CVPR 2022 oral Dalian Institute of technology proposed SCI: a fast and powerful low light image enhancement method

- SCI Writing - Methodology

- 2022.6.12 - leetcode. 89.

- 快速复制浏览器F12中的请求到Postman/或者生成相关语言的对应代码

- A story on the cloud of the Centennial Olympic Games belonging to Alibaba cloud video cloud

- 用一个性能提升了666倍的小案例说明在TiDB中正确使用索引的重要性

猜你喜欢

Difference between rxjs of() and of ({})

![leetcode:5270. Minimum path cost in Grid [simple level DP]](/img/c5/37fd1878e92f95340926e0ea75f150.png)

leetcode:5270. Minimum path cost in Grid [simple level DP]

leetcode:5289. 公平分发饼干【看数据范围 + dfs剪枝】

【历史上的今天】6 月 12 日:美国进入数字化电视时代;Mozilla 的最初开发者出生;3Com 和美国机器人公司合并

Review of MySQL (IX): index

Research Report on the overall scale, major manufacturers, major regions, products and applications of Electric Screwdrivers in the global market in 2022

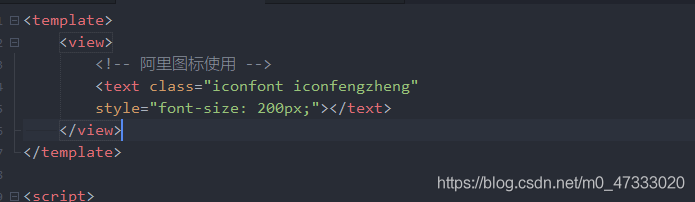

uniapp使用阿里图标

论大型政策性银行贷后,如何数字化转型 ?-亿信华辰

基于Halcon的矩形卡片【手动绘制ROI】的自由测量

How to modify the authorization of sina Weibo for other applications

随机推荐

在思科模擬器Cisco Packet Tracer實現自反ACL

[Huawei cloud stack] [shelf presence] issue 10: difficulties and solutions of it monitoring and diagnosis in the cloud scenario of government enterprise hybrid in the cloud native Era

吃饭咯 干锅肥肠 + 掌中宝!

MySQL advanced learning notes

Experiment 10 Bezier curve generation - experiment improvement - interactive generation of B-spline curve

国内如何下载Vega

Shenzhen has been shut down for 7 days since March 14. Home office experience | community essay solicitation

MySQL数据库(28):变量 variables

A story on the cloud of the Centennial Olympic Games belonging to Alibaba cloud video cloud

【图像去噪】基于各向异性滤波实现图像去噪附matlab代码

Review of MySQL (VIII): Transactions

Review of MySQL (I): go deep into MySQL

yoloe 目标检测使用笔记

Research Report on the overall scale, major manufacturers, major regions, products and application segmentation of swimming fins in the global market in 2022

Review of MySQL (IX): index

即时配送的订单分配策略:从建模和优化-笔记

Delivery lead time lightweight estimation practice - Notes

Leetcode 474. One and zero

2022.6.12 - leetcode. 89.

机器学习在美团配送系统的实践:用技术还原真实世界-笔记