当前位置:网站首页>语料库数据处理个案实例(句子检索相关个案)

语料库数据处理个案实例(句子检索相关个案)

2022-06-24 06:46:00 【Triumph19】

7.8 句子检索相关个案

- 本小节我们讨论两个句子检索相关的个案。第一个个案,我们检索文本中含有某个单词或某类单词的句子。第二个个案,我们检索文本中的被动句。

7.8.1 检索所有含有某单词的句子

- 在本个案中,我们首先检索ge.txt文本中含有"children"一词的句子。我们允许c字母大写或小写。请看下面的代码:

import re

file_in = open(r'D:\works\文本分析\leopythonbookdata-master\texts\ge.txt','r')

file_out = open(r'D:\works\文本分析\ge_children.txt','a')

for line in file_in.readlines():

if re.search(r'[Cc]hildren',line):

file_out.write(line)

file_in.close()

file_out.close()

7.8.2 检索所有含有以"-tional"结尾的句子

- 下面我们检索ge.txt文本中含有以"-tional"结尾的句子。请看下面的代码。与上面的代码相比,我们做了两点改动。一是将写出的文本名改成了"ge_tional.txt",二是将检索的正则表达式改成了r’\w+tional\b’,其中’\b’表示词的边界。

import re

file_in = open(r'D:\works\文本分析\leopythonbookdata-master\texts\ge.txt','r')

file_out = open(r'D:\works\文本分析\ge_tional.txt','a')

for line in file_in.readlines():

if re.search(r'\w+tional\b',line):

file_out.write(line+'\n')

file_in.close()

file_out.close()

7.9 实现Rrange软件功能

- 学术英语词汇研究是应用语言学和学术用途英语领域的重要研究问题。Nation(2001)将词汇分成常用词汇、学术词汇、科技词汇、罕见词等几类。Nation(2001)认为,学习者往往先学习常用词汇,然后再学习科技词汇,最后学习科技术语和罕见词。常用词汇一般指West(1953)开发的通用词表(General Service List,GSL)包含的英语中最常用的2000个词汇(或词族)。学者们也开发了诸多学术英语词表,以帮助研究者学习和使用学术英语词汇,其中,Coxhead(2000)开发的学术英语词表(Academic Word List,AWL)是近年来影响最大的学术英语词表,该词表包括570个高频学术词汇(或词族)。为了更好地帮助研究者将AWL词表应用于研究和教学资料开发,Paul Natio还开发了Range软件。该软件内置GSL中最常见1000个词汇(一下简称GSL1000)、GSL中最常见2000个词汇(一下简称GSL2000)和AWL三个词表。Range软件可以分析语料库文本中的哪些词汇属于GSL1000、GSL2000和AWL词表,并汇报三个词表词汇的数量及其占文本所有词汇数量的百分比(覆盖率)。

- 学术英语词汇研究是应用语言学和学术用途英语领域的重要研究课题。为实现Range软件的功能,大致需要四个步骤。首先,需要读入文本,建立文本的词频表;其次,读入GSL1000.txt、GSL2000.txt和AWL.txt三个词表;再次,分析读入文本的哪些词汇分属于上述三个词表,并统计它们的数量和所占百分比;最后,将结果写出到新建的文本文件中。下面,我们将根据上述步骤分四个部分介绍展示代码内容。

- 下面是代码的第一部分,建立ge.txt文本的单词频次表。我们首先读入ge.txt文本,然后通过正则表达式’\W’将所有非字母和数字部分替换成空格,再通过split()函数进行分词,并将分词后的单词存储到all_words列表中。接下来,我们定义一个空的字典wordlist_freq_dict,以制作ge.txt文本单词的频次表。我们循环遍历all_words列表中的单词,如果单词在字典的键(key)中,则其频次加1;否则,其频次为1。这样,我们将单词存储为字典的键,而词频为字典的值(value)。

(1)建立ge.txt文本的单词词频表

# Recognizing_gsl_awl_words.py,part 1

# the following is to make the wordlist with freq

# and store the info in a dictionary (wordlist_freq_dict)

import re

file_in = open(r'D:\works\文本分析\leopythonbookdata-master\texts\ge.txt','r')

all_words = []

for line in file_in.readlines():

line2 = line.lower()

line3 = re.sub(r'\W',r' ',line2) #将非字母和数字部分替换为空格

wordlist = line3.split()

for word in wordlist:

all_words.append(word)

wordlist_freq_dict = {

}

for word in all_words:

if word in wordlist_freq_dict.keys():

wordlist_freq_dict[word] += 1

else:

wordlist_freq_dict[word] = 1

file_in.close()

(2)读入词表

- 下面是代码的第二部分,读入GSL1000.txt、GSL2000.txt和AWL.txt三个词表。我们首先建立一个空的字典gsl_awl_dict,然后分别读取上述三个词表文件,将词表的单词存储为字典的键,将三个词表单词所对应的字典键分别定义为1,2,3。

# Recognizing_gsl_awl_words.py,Part 2

# the following is to read the GSL and the AWL words

# and save them in a dictionary

gsl1000_in = open(r'D:\works\文本分析\leopythonbookdata-master\texts\GSL1000.txt','r')

gsl2000_in = open(r'D:\works\文本分析\leopythonbookdata-master\texts\GSL2000.txt','r')

awl_in = open(r'D:\works\文本分析\leopythonbookdata-master\texts\AWL.txt','r')

gsl_awl_dict = {

}

for word in gsl1000_in.readlines():

gsl_awl_dict[word.strip()] = 1

for word in gsl2000_in.readlines():

gsl_awl_dict[word.strip()] = 2

for word in awl_in.readlines():

gsl_awl_dict[word.strip()] = 3

gsl1000_in.close()

gsl2000_in.close()

awl_in.close()

(3)分析ge.txt中的词汇属于那个词表

- 下面是代码的第三部分,分析读入文本的哪些词汇分属于上述三个词表,并统计它们的数量和所占百分比。我们首先分别定义四个空的字典变量,以分别储存上述三个词表的单词、频次(gsl1000_words,gsl2000_words,awl_words)和上述三个词表以外的单词(other_words)。首先,循环遍历wordlist_freq_dict的键,也就是ge.txt文本的单词(wordlist_freq_dict.keys()),如果单词不在GSL和AWL此表中(if word not in gsl_awl_dict.keys()),则other_words字典的键为该单词,值为4(other_words[word] = 4);如果单词在GSL_1000中,则gsl1000_words字典的键为该值,值为该单词在ge.txt文本中的频次;依次类推。

# Recognizing_gsl_awl_words.py,part 3-1

# the following is to categorize the words in wordlist_freq_dict

# into dictionaries of GSL1000 words,GSL2000 words,AWL words or others

gsl1000_words = {

}

gsl2000_words = {

}

awl_words = {

}

other_words = {

}

for word in wordlist_freq_dict.keys():

if word not in gsl_awl_dict.keys():

other_words[word] = 4

elif gsl_awl_dict[word] == 1:

gsl1000_words[word] = wordlist_freq_dict[word] #统计ge.txt出现的gsl1000中的单词次数

elif gsl_awl_dict[word] == 2:

gsl2000_words[word] = wordlist_freq_dict[word]

elif gsl_awl_dict[word] == 3:

awl_words[word] = wordlist_freq_dict[word]

(4)统计ge.txt中各类词(常用词、学术词汇、罕见词)次数

- 然后,我们计算各个词表单词的总频次,并将它们分别存储到gsl1000_freq_total等变量中。其计算方法为,先定义一个值为0的变量(如gsl1000_freq_total = 0),如果某单词再GSL1000中,则其频次加其再ge.txt中的频次。

# Recognizing_gsl_awl_words.py,part 3-2

# compute freq total

gsl1000_freq_total = 0

gsl2000_freq_total = 0

awl_freq_total = 0

other_freq_total = 0

for word in gsl1000_words:

gsl1000_freq_total += wordlist_freq_dict[word]

for word in gsl2000_words:

gsl2000_freq_total += wordlist_freq_dict[word]

for word in awl_words:

awl_freq_total += wordlist_freq_dict[word]

for word in other_words:

other_freq_total += wordlist_freq_dict[word]

(4)统计ge.txt中各类词词形和词数(常用词、学术词汇、罕见词)的数量

- 接下来,计算各个词表单词词形的数量,计算方法比较简单,单词词形的数量即是各字典的长度。然后,将各部分单词总频次相加,以计算ge.txt文本单词的总频次;将各部分单词词形数量相加,以计算ge.txt文本单词词形的总数量。

# Recognizing_gsl_awl_words.py,part 3-3

# to compute the number of words in gsl1000,gsl2000,awl and other words

gsl1000_num_of_words = len(gsl1000_words)

gsl2000_num_of_words = len(gsl2000_words)

awl_num_of_words = len(awl_words)

other_num_of_words = len(other_words)

# 计算ge.txt中总的单词数量

freq_total = gsl1000_freq_total + gsl2000_freq_total + awl_freq_total + other_freq_total

# 计算ge.txt中总的词形数

num_of_words_total = gsl1000_num_of_words + gsl2000_num_of_words + awl_num_of_words + other_num_of_words

- 下面是代码的第四部分,将结果写出到range_wordlist_results.txt文本中。首先打开一个写出range_wordlist_results.txt文本的文件句柄。然后,在文本中写出文本的标题’RESULTS OF WORD ANALYSIS’,标题后面空两行’\n\n’。然后,分别写出文本的总词形数量和各部分的词形数量。请注意,写出到文本的内容必须是字符串,因此,当写出内容为数值变量时,必须先用str()函数将其转换为字符串,如str(num_of_words_total)。

# Recognizing_gsl_awl_words.py,part 4-1

# the following is to write out the results

# first,define the file to save the results

file_out = open(r'D:\works\文本分析\range_wordlist_results.txt','a')

# then,write out the results

file_out.write('RESULTS OF WORD ANALYSIS\n\n')

file_out.write('Total No. of word types in Great Expectations: ' + str(num_of_words_total) + '\n\n')

file_out.write('Total No. of GSL1000 word types : ' + str(gsl1000_num_of_words) + '\n\n')

file_out.write('Total No. of GSL2000 word types : ' + str(gsl2000_num_of_words) + '\n')

file_out.write('Total No. of AWL word types : ' + str(awl_num_of_words) + '\n')

file_out.write('Total No. of other word types : ' + str(other_num_of_words) + '\n')

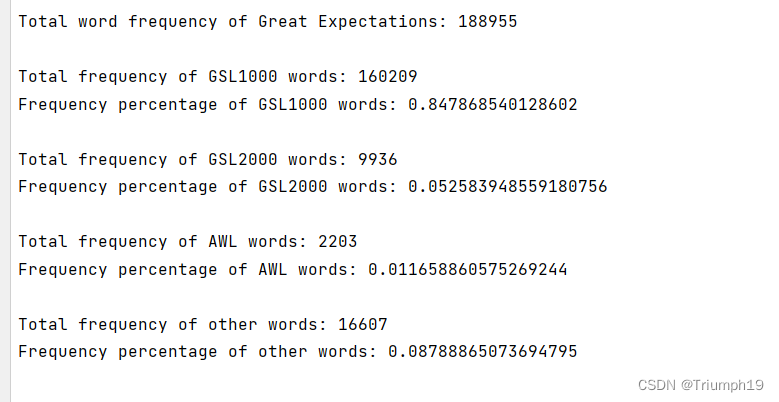

- 接下来,分别写出文本单词总频次、各部分单词的总频次和各部分单词频次所占总频次的百分比。有一个问题需要注意:由于各部分单词频次和总频次均为整数数值,在Python2.7中,整数型数值相除的结果依然为整数型数值,因此,在计算百分比时,需先将整数型数值转换成浮点型数值,如float(freq_total)。

# Recognizing_gsl_words.py,part 4-2

file_out.write('\n\n')

file_out.write('Total word frequency of Great Expectations: ' + str(freq_total) + '\n\n')

file_out.write('Total frequency of GSL1000 words: ' + str(gsl1000_freq_total) + '\n')

file_out.write('Frequency percentage of GSL1000 words: ' + str(gsl1000_freq_total / float(freq_total)) + '\n\n')

file_out.write('Total frequency of GSL2000 words: ' + str(gsl2000_freq_total) + '\n')

file_out.write('Frequency percentage of GSL2000 words: ' + str(gsl2000_freq_total / float(freq_total)) + '\n\n')

file_out.write('Total frequency of AWL words: ' + str(awl_freq_total) + '\n')

file_out.write('Frequency percentage of AWL words: ' + str(awl_freq_total / float(freq_total)) + '\n\n')

file_out.write('Total frequency of other words: ' + str(other_freq_total) + '\n')

file_out.write('Frequency percentage of other words: ' + str(other_freq_total / float(freq_total)) + '\n')

(5)统计ge.txt文本单词总频次以及各部分单词频次

- 最后,我们分别将这三个词表的单词及频次和三个词表以外的单词及频次写出到结果文件。

# Recognizing_gsl_awl_words.py, Part 4-3

# write out the GSL1000 words

file_out.write('\n\n')

file_out.write('##########\n')

file_out.write('Words in GSL1000\n\n')

for word in sorted(gsl1000_words.keys()):

file_out.write(word + '\t' + str(gsl1000_words[word]) + '\n')

# write out the GSL2000 words

file_out.write('\n\n')

file_out.write('##########\n')

file_out.write('Words in GSL2000\n\n')

for word in sorted(gsl2000_words.keys()):

file_out.write(word + '\t' + str(gsl2000_words[word]) + '\n')

# write out the AWL words

file_out.write('\n\n')

file_out.write('##########\n')

file_out.write('Words in AWL\n\n')

for word in sorted(awl_words.keys()):

file_out.write(word + '\t' + str(awl_words[word]) + '\n')

# write out other words

file_out.write('\n\n')

file_out.write('##########\n')

file_out.write('Other words\n\n')

for word in sorted(other_words.keys()):

file_out.write(word + '\t' + str(wordlist_freq_dict[word]) + '\n')

file_out.close()

- 有结果可见,Great Expectations文本共有词形数10764个,总单词数(总频次188955)个,其中GSL1000词汇占84.78%,GSL2000词汇占5.26%,AWL词汇占1.17%,其他词汇占8.79%。根据先前词汇研究相关结果,学术文本中AWL词汇大致占学术文本总频次8%~10%。而Great Expectations文本为小说体裁,因此其AWL词汇仅占1.17%。

边栏推荐

猜你喜欢

![(cve-2020-11978) command injection vulnerability recurrence in airflow DAG [vulhub range]](/img/33/d601a6f92b1b73798dceb027263223.png)

(cve-2020-11978) command injection vulnerability recurrence in airflow DAG [vulhub range]

鸿蒙os开发三

Blue Bridge Cup seven segment code (dfs/ shape pressing + parallel search)

![[equalizer] bit error rate performance comparison simulation of LS equalizer, def equalizer and LMMSE equalizer](/img/45/61258aa20cd287047c028f220b7f7a.png)

[equalizer] bit error rate performance comparison simulation of LS equalizer, def equalizer and LMMSE equalizer

How to open the soft keyboard in the computer, and how to open the soft keyboard in win10

Analog display of the module taking software verifies the correctness of the module taking data, and reversely converts the bin file of the lattice array to display

How can win11 set the CPU performance to be fully turned on? How does win11cpu set high performance mode?

jarvisoj_ level2

When MFC uses the console, the project path cannot have spaces or Chinese, otherwise an error will be reported. Lnk1342 fails to save the backup copy of the binary file to be edited, etc

RDD的执行原理

随机推荐

A case of bouncing around the system firewall

后疫情时代下,家庭服务机器人行业才刚启航

Global and Chinese market of Earl Grey tea 2022-2028: Research Report on technology, participants, trends, market size and share

[learn FPGA programming from scratch -42]: Vision - technological evolution of chip design in the "post Moorish era" - 1 - current situation

《canvas》之第1章 canvas概述

Anaconda 中使用 You Get

智能指针备注

Actual target shooting - skillfully use SMB to take down the off-line host

What challenges does the video streaming media platform face in transmitting HD video?

MySQL case: analysis of full-text indexing

A summary of the posture of bouncing and forwarding around the firewall

bjdctf_ 2020_ babystack

Global and Chinese market of inline drip irrigation 2022-2028: Research Report on technology, participants, trends, market size and share

(cve-2020-11978) command injection vulnerability recurrence in airflow DAG [vulhub range]

New ways to play web security [6] preventing repeated use of graphic verification codes

What is a CC attack? How to judge whether a website is attacked by CC? How to defend against CC attacks?

Q & A on cloud development cloudbase hot issues of "Huage youyue phase I"

tuple(元组)备注

[Lua language from bronze to king] Part 2: development environment construction +3 editor usage examples

[frame rate doubling] development and implementation of FPGA based video frame rate doubling system Verilog