
Spark Persistence Strategy: Cache Optimization

2022-07-26 08:31:00 【StephenYYYou】


Persistence strategies for RDDs

When an RDD needs to be reused frequently, Spark can persist it. There are two methods for this, persist() and cache(), as shown below:

// Scala
myRDD.persist()  // persist with the default storage level
// or, equivalently:
myRDD.cache()

Why use persistence?

RDDs are evaluated lazily, and their intermediate results are not kept by default. After an action computes RDD2 from RDD1, the data of RDD1 is not retained in memory. If a later operation needs RDD1 again, Spark walks back up the lineage, rereads the source data, recomputes RDD1, and only then continues with the new computation. This adds disk I/O and computation cost. Persistence keeps the data around so that the next action can reuse it.
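To make the recomputation visible, the sketch below counts how often a map function runs when the same RDD feeds two actions, once without persistence and once with cache(). It assumes spark-core on the classpath and a local master; the object name RecomputeDemo and the doubling map are made up for illustration. The counters are LongAccumulators, whose updates inside transformations are only exact when no task is retried, which holds for a small local run like this one.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD
import org.apache.spark.util.LongAccumulator

// Hypothetical demo: how many times does the map function run when one RDD
// is used by two actions, with and without cache()?
object RecomputeDemo {
  def run(): (Long, Long) = {
    val sc = new SparkContext(
      new SparkConf().setMaster("local[2]").setAppName("RecomputeDemo"))
    try {
      val plainCalls = sc.longAccumulator("plainCalls")
      val cachedCalls = sc.longAccumulator("cachedCalls")

      // Stand-in for an expensive transformation; every call bumps the counter.
      def build(calls: LongAccumulator): RDD[Int] =
        sc.parallelize(1 to 1000, 4).map { x => calls.add(1); x * 2 }

      // Without persistence, each action recomputes the lineage from the source.
      val plain = build(plainCalls)
      plain.count()
      plain.sum()

      // With cache(), the first action stores the partitions in memory and
      // the second action reads them back instead of recomputing them.
      val cached = build(cachedCalls).cache()
      cached.count()
      cached.sum()
      cached.unpersist()

      (plainCalls.sum, cachedCalls.sum)
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit = {
    val (withoutCache, withCache) = run()
    println(s"map calls without cache: $withoutCache, with cache: $withCache")
  }
}
```

With data this small every partition fits in memory, so the cached run should report half as many map calls as the plain run.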

Source code of cache and persist

// Excerpt from RDD.scala (org.apache.spark.rdd.RDD)
def persist(newLevel: StorageLevel): this.type = {
  if (isLocallyCheckpointed) {
    // This means the user previously called localCheckpoint(), which should have already
    // marked this RDD for persisting. Here we should override the old storage level with
    // one that is explicitly requested by the user (after adapting it to use disk).
    persist(LocalRDDCheckpointData.transformStorageLevel(newLevel), allowOverride = true)
  } else {
    persist(newLevel, allowOverride = false)
  }
}

/**
 * Persist this RDD with the default storage level (`MEMORY_ONLY`).
 */
def persist(): this.type = persist(StorageLevel.MEMORY_ONLY)

/**
 * Persist this RDD with the default storage level (`MEMORY_ONLY`).
 */
def cache(): this.type = persist()

As the source code shows, cache() simply calls persist() with no arguments, so it is enough to study persist. The no-argument persist() uses StorageLevel.MEMORY_ONLY as its default level. Let's look at the source of the StorageLevel class next.
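Because cache() only forwards to persist(), both end up in the private persist(newLevel, allowOverride) overload, which refuses to change the level of an RDD that already has one (local checkpointing being the exception) and throws an UnsupportedOperationException. The sketch below checks this, assuming spark-core and a local master; the object name CacheLevelDemo is illustrative.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.storage.StorageLevel

// Hypothetical demo: cache() assigns MEMORY_ONLY, and a different level is
// only accepted after unpersist() has reset the RDD to NONE.
object CacheLevelDemo {
  def run(): (StorageLevel, StorageLevel, Boolean, StorageLevel) = {
    val sc = new SparkContext(
      new SparkConf().setMaster("local[1]").setAppName("CacheLevelDemo"))
    try {
      val rdd = sc.parallelize(1 to 10)
      val initial = rdd.getStorageLevel       // NONE: nothing persisted yet

      rdd.cache()
      val afterCache = rdd.getStorageLevel    // MEMORY_ONLY, the persist() default

      // Requesting another level on an already persisted RDD is rejected.
      val rejected =
        try { rdd.persist(StorageLevel.MEMORY_AND_DISK); false }
        catch { case _: UnsupportedOperationException => true }

      // unpersist() sets the level back to NONE, so a new level is accepted.
      rdd.unpersist()
      rdd.persist(StorageLevel.MEMORY_AND_DISK)
      (initial, afterCache, rejected, rdd.getStorageLevel)
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit = println(run())
}
```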

// Excerpt from StorageLevel.scala (companion object of org.apache.spark.storage.StorageLevel)
/**
 * Various [[org.apache.spark.storage.StorageLevel]] defined and utility functions for creating
 * new storage levels.
 */
object StorageLevel {
  val NONE = new StorageLevel(false, false, false, false)
  val DISK_ONLY = new StorageLevel(true, false, false, false)
  val DISK_ONLY_2 = new StorageLevel(true, false, false, false, 2)
  val MEMORY_ONLY = new StorageLevel(false, true, false, true)
  val MEMORY_ONLY_2 = new StorageLevel(false, true, false, true, 2)
  val MEMORY_ONLY_SER = new StorageLevel(false, true, false, false)
  val MEMORY_ONLY_SER_2 = new StorageLevel(false, true, false, false, 2)
  val MEMORY_AND_DISK = new StorageLevel(true, true, false, true)
  val MEMORY_AND_DISK_2 = new StorageLevel(true, true, false, true, 2)
  val MEMORY_AND_DISK_SER = new StorageLevel(true, true, false, false)
  val MEMORY_AND_DISK_SER_2 = new StorageLevel(true, true, false, false, 2)
  val OFF_HEAP = new StorageLevel(true, true, true, false, 1)

  /**
   * :: DeveloperApi ::
   * Return the StorageLevel object with the specified name.
   */
  @DeveloperApi
  def fromString(s: String): StorageLevel = s match {
    case "NONE" => NONE
    case "DISK_ONLY" => DISK_ONLY
    case "DISK_ONLY_2" => DISK_ONLY_2
    case "MEMORY_ONLY" => MEMORY_ONLY
    case "MEMORY_ONLY_2" => MEMORY_ONLY_2
    case "MEMORY_ONLY_SER" => MEMORY_ONLY_SER
    case "MEMORY_ONLY_SER_2" => MEMORY_ONLY_SER_2
    case "MEMORY_AND_DISK" => MEMORY_AND_DISK
    case "MEMORY_AND_DISK_2" => MEMORY_AND_DISK_2
    case "MEMORY_AND_DISK_SER" => MEMORY_AND_DISK_SER
    case "MEMORY_AND_DISK_SER_2" => MEMORY_AND_DISK_SER_2
    case "OFF_HEAP" => OFF_HEAP
    case _ => throw new IllegalArgumentException(s"Invalid StorageLevel: $s")
  }

  /**
   * :: DeveloperApi ::
   * Create a new StorageLevel object.
   */
  @DeveloperApi
  def apply(
      useDisk: Boolean,
      useMemory: Boolean,
      useOffHeap: Boolean,
      deserialized: Boolean,
      replication: Int): StorageLevel = {
    getCachedStorageLevel(
      new StorageLevel(useDisk, useMemory, useOffHeap, deserialized, replication))
  }

  /**
   * :: DeveloperApi ::
   * Create a new StorageLevel object without setting useOffHeap.
   */
  @DeveloperApi
  def apply(
      useDisk: Boolean,
      useMemory: Boolean,
      deserialized: Boolean,
      replication: Int = 1): StorageLevel = {
    getCachedStorageLevel(new StorageLevel(useDisk, useMemory, false, deserialized, replication))
  }

  // ... remaining members omitted
}

As you can see, a StorageLevel is defined by the following parameters:

| Parameter: type (default) | Meaning |
| --- | --- |
| useDisk: Boolean | Whether to persist to disk |
| useMemory: Boolean | Whether to persist in memory |
| useOffHeap: Boolean | Whether to use off-heap memory (outside the JVM heap) |
| deserialized: Boolean | Whether to keep the data as deserialized Java objects (false means it is stored in serialized form) |
| replication: Int (1) | Number of replicas, for fault tolerance |

From these flags we get the following levels:

- NONE: no persistence; this is the storage level of an RDD that has not been persisted
- DISK_ONLY: cached on disk only
- DISK_ONLY_2: cached on disk only, with 2 replicas
- MEMORY_ONLY: cached in memory only, as deserialized Java objects
- MEMORY_ONLY_2: cached in memory only, with 2 replicas
- MEMORY_ONLY_SER: cached in memory only, in serialized form
- MEMORY_ONLY_SER_2: cached in memory only, in serialized form, with 2 replicas
- MEMORY_AND_DISK: cached in memory; partitions that do not fit are stored on disk
- MEMORY_AND_DISK_2: like MEMORY_AND_DISK, with 2 replicas
- MEMORY_AND_DISK_SER: like MEMORY_AND_DISK, but stored in serialized form
- MEMORY_AND_DISK_SER_2: like MEMORY_AND_DISK_SER, with 2 replicas
- OFF_HEAP: cached in off-heap memory (outside the JVM heap), in serialized form
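The mapping from names to flags can be checked directly, without a SparkContext, since StorageLevel exposes useDisk, useMemory, useOffHeap, deserialized and replication as accessors. The sketch below assumes spark-core on the classpath; the object StorageLevelFlags and its describe helper are hypothetical names for illustration.

```scala
import org.apache.spark.storage.StorageLevel

// Hypothetical helper: prints the constructor flags behind predefined levels.
object StorageLevelFlags {
  def describe(level: StorageLevel): String =
    s"disk=${level.useDisk} memory=${level.useMemory} offHeap=${level.useOffHeap} " +
      s"deserialized=${level.deserialized} replication=${level.replication}"

  def main(args: Array[String]): Unit = {
    val names = Seq("NONE", "DISK_ONLY", "MEMORY_ONLY", "MEMORY_ONLY_SER",
      "MEMORY_AND_DISK", "MEMORY_AND_DISK_SER_2", "OFF_HEAP")
    // fromString maps a level name to the predefined StorageLevel object.
    names.foreach(name => println(s"$name: ${describe(StorageLevel.fromString(name))}"))

    // apply(useDisk, useMemory, deserialized, replication) builds a level from flags;
    // StorageLevel equality compares the flags, so this matches the constant.
    val custom = StorageLevel(true, true, false, 2)
    println(custom == StorageLevel.MEMORY_AND_DISK_SER_2)
  }
}
```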

Serialization works somewhat like compression: it saves storage space but adds CPU cost, because the data has to be serialized when it is stored and deserialized every time it is read. The default replication factor is 1; keeping a second replica guards against data loss and improves fault tolerance. OFF_HEAP keeps the RDD outside the JVM heap, which lowers garbage-collection overhead. Early Spark versions stored it in Tachyon (now Alluxio), while newer versions use Spark's own off-heap memory, which has to be enabled explicitly; it is enough to be aware of it. DISK_ONLY needs no further explanation. The rest of this post compares MEMORY_ONLY and MEMORY_AND_DISK.
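In Spark 2.x and later, off-heap storage is controlled by the configuration keys spark.memory.offHeap.enabled and spark.memory.offHeap.size; the size must be positive when the feature is enabled. The sketch below persists an RDD with OFF_HEAP under that assumption; the 64m size, the data, and the object name OffHeapDemo are arbitrary choices for a local run, not recommendations.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.storage.StorageLevel

// Hypothetical demo: persist an RDD with OFF_HEAP after enabling off-heap memory.
object OffHeapDemo {
  def run(): (Long, Long, StorageLevel) = {
    val conf = new SparkConf()
      .setMaster("local[2]")
      .setAppName("OffHeapDemo")
      .set("spark.memory.offHeap.enabled", "true") // disabled by default
      .set("spark.memory.offHeap.size", "64m")     // must be > 0 when enabled
    val sc = new SparkContext(conf)
    try {
      val pairs = sc.parallelize(1 to 100000, 4).map(i => (i % 10, i.toLong))
      pairs.persist(StorageLevel.OFF_HEAP)         // stored serialized, outside the JVM heap

      val total = pairs.count()                     // first action materializes the blocks
      val groups = pairs.reduceByKey(_ + _).count() // later actions read the cached blocks
      (total, groups, pairs.getStorageLevel)
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit = println(run())
}
```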

MEMORY_ONLY and MEMORY_AND_DISK

MEMORY_ONLY: the RDD is cached in memory only. Partitions that do not fit in memory are not cached; each time they are needed they are recomputed from the lineage, which may mean rereading the source data.

MEMORY_AND_DISK: partitions are kept in memory as far as possible; those that do not fit are written to disk and read back from there when needed, which avoids recomputation.

Intuitively, MEMORY_ONLY looks less efficient because evicted partitions must be recomputed. In practice, however, recomputation runs in memory and is often no slower than reading the partition back from disk, so MEMORY_ONLY is usually the default choice. Only when the computation that produces the intermediate RDD is particularly expensive is MEMORY_AND_DISK the better option.
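The rule of thumb above can be written down as a small helper. In the sketch below, levelFor is a hypothetical function (not part of Spark) that returns MEMORY_AND_DISK only when recomputation is expected to be expensive; the groupByKey plus per-group math merely stands in for a costly intermediate result. It assumes spark-core and a local master.

```scala
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.storage.StorageLevel

object ChooseLevelDemo {
  // Hypothetical helper: MEMORY_ONLY by default, MEMORY_AND_DISK when
  // recomputing a lost partition would be expensive.
  def levelFor(expensiveToRecompute: Boolean): StorageLevel =
    if (expensiveToRecompute) StorageLevel.MEMORY_AND_DISK else StorageLevel.MEMORY_ONLY

  def run(): (Long, Long, StorageLevel) = {
    val sc = new SparkContext(
      new SparkConf().setMaster("local[2]").setAppName("ChooseLevelDemo"))
    try {
      // Stand-in for an expensive intermediate result: a shuffle (groupByKey)
      // followed by per-group computation.
      val perKey = sc.parallelize(1 to 20000, 4)
        .map(i => (i % 100, i))
        .groupByKey()
        .mapValues(vs => vs.map(v => math.sqrt(v.toDouble)).sum)
        .persist(levelFor(expensiveToRecompute = true))

      val keys = perKey.count()                      // first action fills the cache
      val positive = perKey.filter(_._2 > 0).count() // served from memory or disk
      val level = perKey.getStorageLevel
      perKey.unpersist()                             // release the cache when done
      (keys, positive, level)
    } finally {
      sc.stop()
    }
  }

  def main(args: Array[String]): Unit = println(run())
}
```

Where to draw the line for "expensive" is a judgment call; Spark's RDD programming guide gives similar advice, suggesting spilling to disk only when the functions that computed the dataset are expensive or filter out a large amount of data.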

Summary

边栏推荐

- 2022/7/18 exam summary

- [C language] programmer's basic skill method - "creation and destruction of function stack frames"

- Regular expression job

- Data validation typeerror: qiao Validate is not a function

- Kotlin program control

- flex三列布局

- 2022-7-4 personal qualifying 1 competition experience

- 内存管理-动态分区分配方式模拟

- 请问现在flinkcdc支持sqlserver实例名方式连接吗?

- 23.10 Admin features

猜你喜欢

![[GUI] swing package (window, pop-up window, label, panel, button, list, text box)](/img/05/8e7483768a4ad2036497cac136b77d.png)

[GUI] swing package (window, pop-up window, label, panel, button, list, text box)

Flitter imitates wechat long press pop-up copy recall paste collection and other custom customization

内存管理-动态分区分配方式模拟

外卖小哥,才是这个社会最大的托底

有点牛逼,一个月13万+

Kotlin operator

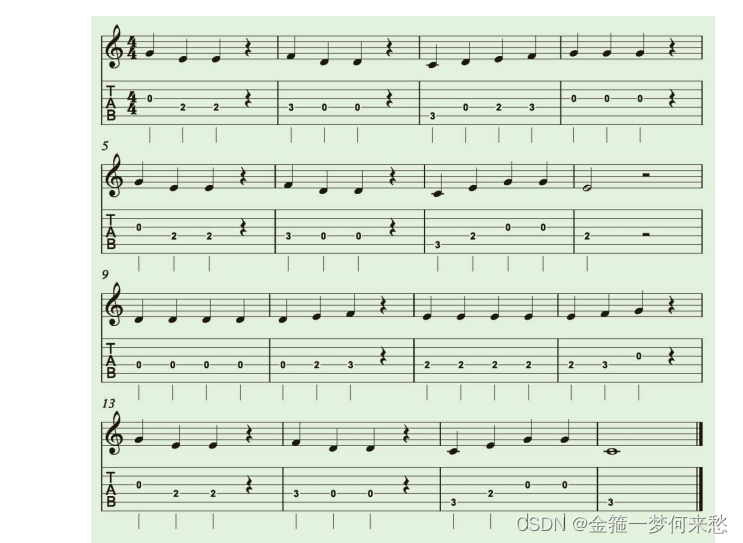

基础乐理 节奏联系题,很重要

基于Raft共识协议的KV数据库

Team members participate in 2022 China multimedia conference

Bee guitar score high octave and low octave

随机推荐

Flutter upgrade 2.10

Error handling response: Error: Syntax error, unrecognized expression: .c-container /deep/ .c-contai

Flutter WebView jitter

Prefix infix suffix expression (written conversion)

【时间复杂度空间复杂度】

Kotlin operator

Why reserve a capacitor station on the clock output?

flink oracle cdc 读取数据一直为null,有大佬知道么

SPSS用KMeans、两阶段聚类、RFM模型在P2P网络金融研究借款人、出款人行为规律数据

Kotlin function

Lesson 3: gcc compiler

vscode 实用快捷键

22-07-12 personal training match 1 competition experience

shell编程

Special lecture 2 dynamic planning learning experience (should be updated for a long time)

Template summary

OSPF summary

关于期刊论文所涉及的一些概念汇编+期刊查询方法

Mysql/mariadb (Galera multi master mode) cluster construction

22-07-16 personal training match 3 competition experience