Spark Kernel (Execution Principles): Environment Preparation / Spark Job Submission Process

2022-06-13 03:34:00 【TRX1024】

To understand how a Spark job gets from submission to execution, split the process into two stages:

The first stage runs outside the YARN cluster. It covers job submission, up to the point where the job has been handed over to the YARN cluster.

The second stage runs inside the YARN cluster and involves resource allocation, the AM, the Driver, the Executors, and other components.

I. Stage One: Job Submission

1. The submit script

A Spark job is usually submitted with spark-submit:

```bash
# Run on a Spark standalone cluster
./bin/spark-submit \
  --class org.apache.spark.examples.SparkPi \
  --master spark://207.184.161.138:7077 \
  --executor-memory 20G \
  --total-executor-cores 100 \
  /path/to/examples.jar \
  1000
```

2. The spark-submit command is itself a shell script. Its contents:

```bash
#!/usr/bin/env bash

# (comments omitted)

if [ -z "${SPARK_HOME}" ]; then
  source "$(dirname "$0")"/find-spark-home
fi

# disable randomized hash for string in Python 3.3+
export PYTHONHASHSEED=0

exec "${SPARK_HOME}"/bin/spark-class org.apache.spark.deploy.SparkSubmit "$@"
```

3. The script actually invokes org.apache.spark.deploy.SparkSubmit, which is the entry point for submitting the job:

```scala
def doSubmit(args: Array[String]): Unit = {
  // Initialize logging if it hasn't been done yet. Keep track of whether logging needs to
  // be reset before the application starts.
  val uninitLog = initializeLogIfNecessary(true, silent = true)

  val appArgs = parseArguments(args)
  if (appArgs.verbose) {
    logInfo(appArgs.toString)
  }
  appArgs.action match {
    case SparkSubmitAction.SUBMIT => submit(appArgs, uninitLog)
    case SparkSubmitAction.KILL => kill(appArgs)
    case SparkSubmitAction.REQUEST_STATUS => requestStatus(appArgs)
    case SparkSubmitAction.PRINT_VERSION => printVersion()
  }
}
```

This method does two things:

- Parses the command-line arguments
- Calls submit()
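The parse-then-dispatch shape of doSubmit() can be illustrated with a small standalone sketch. This is a simplified model, not Spark's code: the object, the `ParsedArgs` type, and the returned strings are hypothetical stand-ins, and only the `--kill`, `--status`, and `--version` flags of spark-submit are recognized, with everything else defaulting to a submit.

```scala
// Simplified model of SparkSubmit's parse-then-dispatch flow.
// All names here are hypothetical stand-ins, not Spark's real classes.
object SubmitDispatchSketch {
  sealed trait Action
  case object Submit extends Action
  case object Kill extends Action
  case object RequestStatus extends Action
  case object PrintVersion extends Action

  final case class ParsedArgs(action: Action, target: Option[String])

  // Step 1: turn the raw command-line arguments into a structured value.
  def parseArguments(args: List[String]): ParsedArgs = args match {
    case "--kill" :: id :: _   => ParsedArgs(Kill, Some(id))
    case "--status" :: id :: _ => ParsedArgs(RequestStatus, Some(id))
    case "--version" :: _      => ParsedArgs(PrintVersion, None)
    case _                     => ParsedArgs(Submit, None) // default action
  }

  // Step 2: dispatch on the parsed action, mirroring the match in doSubmit().
  def dispatch(parsed: ParsedArgs): String = parsed.action match {
    case Submit        => "submit application"
    case Kill          => s"kill ${parsed.target.getOrElse("?")}"
    case RequestStatus => s"status of ${parsed.target.getOrElse("?")}"
    case PrintVersion  => "print version"
  }

  def main(args: Array[String]): Unit =
    println(dispatch(parseArguments(args.toList)))
}
```

Because `Action` is a sealed trait, the compiler can check that the match in `dispatch` covers every action.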

4. submit() submits the job to YARN

```scala
// Prepare the launch environment by setting up the appropriate classpath, system properties,
// and application arguments for running the child main class based on the cluster manager
// and the deploy mode.
val (childArgs, childClasspath, sparkConf, childMainClass) = prepareSubmitEnvironment(args)
```

submit() prepares Spark for submission and then hands the job over:

- Prepares the launch environment
- The YarnClusterApplication class creates a client, which calls submitApplication() to submit the job to YARN

5. At this point the job has been submitted to YARN's ResourceManager, and stage one ends.
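How the child main class ends up being YarnClusterApplication can be sketched as a small decision function. This is a simplified model under stated assumptions: the object and function names are hypothetical, only the client deploy mode and the YARN cluster mode are covered, and every other combination is left out rather than guessed.

```scala
// Hypothetical, reduced model of how the child main class is chosen.
// Only two cases are modeled; other cluster managers are out of scope.
object ChildMainClassSketch {
  val YarnClusterSubmitClass = "org.apache.spark.deploy.yarn.YarnClusterApplication"

  def childMainClass(master: String, deployMode: String, userMainClass: String): Option[String] =
    (master, deployMode) match {
      // Client mode: the user's own main class runs directly in the submitting JVM.
      case (_, "client")       => Some(userMainClass)
      // YARN cluster mode: a wrapper class submits the application to YARN.
      case ("yarn", "cluster") => Some(YarnClusterSubmitClass)
      // Anything else is not modeled in this sketch.
      case _                   => None
    }
}
```

For example, `childMainClass("yarn", "cluster", "org.apache.spark.examples.SparkPi")` yields the YarnClusterApplication class name, which is why the user's main class only starts later, inside the cluster.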

II. Stage Two: Inside the YARN Cluster

The flow chart again:

Legend:

【RM】 marks an operation performed by the RM

【AM】 marks an operation performed by the AM

【Executor】 marks an operation performed by an Executor process on an NM

Steps:

1.【RM】 The ResourceManager starts the ApplicationMaster (AM) process

The client asks the RM for a Container in which to start the AM process

Inside the ApplicationMaster:

- There is a YarnRMClient for communicating with the RM
- There is an nmClient for communicating with the other nodes

2.【AM】 The AM starts the Driver thread according to the parameters and initializes the SparkContext

3.【AM】 The AM connects to the RM through the YarnRMClient, registers itself, and requests resources

4.【RM】 The RM returns the list of available resources

Container resources are allocated according to preferred locations and other related parameters

5.【AM】 The AM handles the allocated containers

By this point the AM has the information for the containers assigned to it

It runs the allocated containers (that is, it asks the designated NMs to start them)

6.【AM】 Using a thread pool, the AM starts an Executor process in each allocated container

7.【Executor】 Execution enters the Executor

Important properties:

- driver: a reference used to communicate with the Driver running in the AM
- ExecutorEnv: the Executor's environment
- MessageLoop: the inbox and outbox

8.【Executor】 Through the driver reference, the Executor registers itself with the Driver (reverse registration)

9.【AM】 The Driver replies that the Executor was registered successfully

10.【Executor】 The Executor compute object is created and started
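Steps 8 to 10 form a small handshake, which the following standalone sketch models with plain method calls in place of RPC. The class and message names are hypothetical and only loosely inspired by Spark's; rejecting a duplicate executor ID is an assumption added to show a failure reply.

```scala
import scala.collection.mutable

// Minimal in-memory model of the reverse-registration handshake.
// Names are hypothetical; real Spark uses RPC endpoints instead of direct calls.
object ReverseRegistrationSketch {
  sealed trait Message
  final case class RegisterExecutor(executorId: String, cores: Int) extends Message
  case object RegisteredExecutor extends Message
  final case class RegisterExecutorFailed(reason: String) extends Message

  // Driver side: remembers which executors have registered.
  final class DriverEndpoint {
    private val executors = mutable.Map.empty[String, Int]

    // Step 9: reply with success, or with a failure for a duplicate ID (assumed rule).
    def receive(msg: RegisterExecutor): Message =
      if (executors.contains(msg.executorId)) {
        RegisterExecutorFailed(s"Duplicate executor ID: ${msg.executorId}")
      } else {
        executors(msg.executorId) = msg.cores
        RegisteredExecutor
      }

    def registeredIds: Set[String] = executors.keySet.toSet
  }

  // Executor side: holds a reference to the driver and registers itself on start.
  final class ExecutorBackend(id: String, cores: Int, driver: DriverEndpoint) {
    var executorStarted: Boolean = false

    def onStart(): Message = {
      val reply = driver.receive(RegisterExecutor(id, cores)) // step 8
      reply match {
        case RegisteredExecutor => executorStarted = true     // step 10
        case _                  => ()                         // stay idle on failure
      }
      reply
    }
  }
}
```

The registration is called "reverse" because the Executor, which was launched on behalf of the Driver, is the side that initiates contact.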

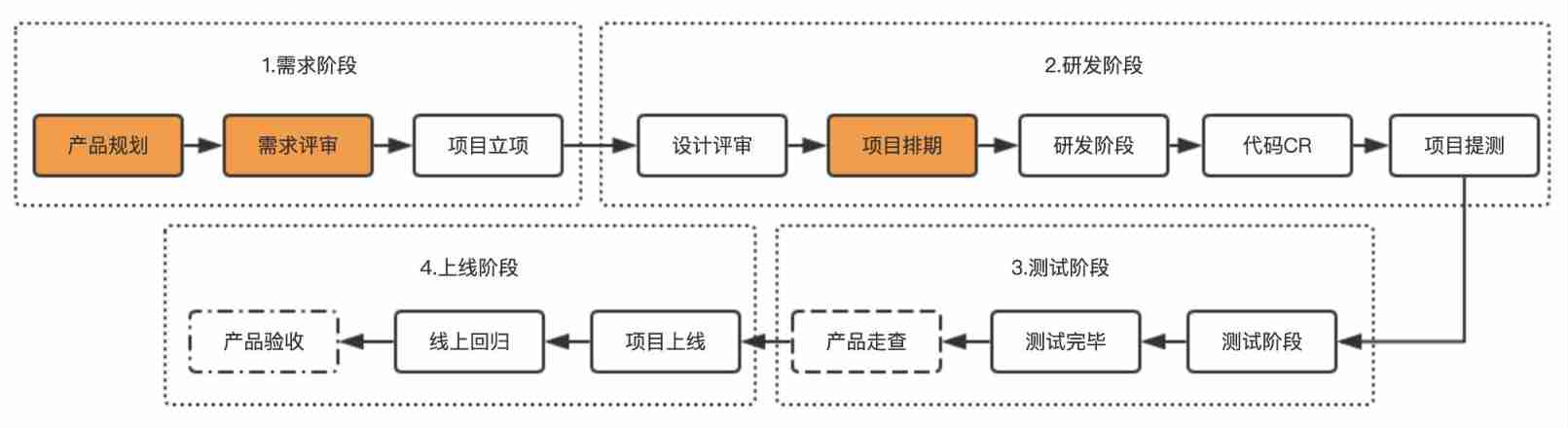

III. Spark's General Execution Flow

The figure above shows Spark's general execution flow. It captures the basic submission process of a Spark application in a deployment.

The process follows these core steps:

1) After the job is submitted, the Driver program starts first;

2) The Driver then registers the application with the cluster manager;

3) The cluster manager then allocates Executors and starts them;

4) The Driver begins executing the main function. Spark queries are lazily evaluated: only when an Action operator is reached does Spark work backwards through the lineage, divide the job into Stages at wide dependencies, and create one TaskSet per Stage. Each TaskSet contains multiple Tasks, and Executors with available resources are looked up to schedule them;

5) Following the data locality principle, each Task is dispatched to a designated Executor for execution. While tasks run, the Executors keep communicating with the Driver and report on task progress.
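Steps 4 and 5 can be made concrete with a small standalone sketch. It assumes a purely linear lineage (real lineages are graphs), and it reduces locality to a single rule, prefer an Executor on the host that holds the data, whereas Spark distinguishes several locality levels. The object, types, and function names are hypothetical.

```scala
// Hypothetical sketch of stage division and locality-aware executor choice.
object StageSplitSketch {
  // One operator in a linear lineage; `wide` marks a wide (shuffle) dependency
  // on its parent.
  final case class Op(name: String, wide: Boolean)

  // Step 4: walk backwards from the final operator and cut a stage boundary at
  // every wide dependency. The wide operator itself opens the downstream stage.
  def splitIntoStages(lineage: List[Op]): List[List[String]] = {
    val (stages, current) =
      lineage.foldRight((List.empty[List[String]], List.empty[String])) {
        case (op, (done, cur)) =>
          val stage = op.name :: cur
          if (op.wide) (stage :: done, Nil) else (done, stage)
      }
    if (current.isEmpty) stages else current :: stages
  }

  // Step 5: prefer a free executor on the task's preferred host, else any free one.
  // `freeExecutors` maps executor ID to host name.
  def pickExecutor(preferredHost: Option[String],
                   freeExecutors: Map[String, String]): Option[String] =
    preferredHost
      .flatMap(h => freeExecutors.collectFirst { case (id, host) if host == h => id })
      .orElse(freeExecutors.keys.headOption)
}
```

For a word-count style lineage of textFile, flatMap, map, reduceByKey, and filter, where only reduceByKey is wide, `splitIntoStages` produces two stages: the first three operators, then reduceByKey together with filter.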
