当前位置:网站首页>Monte Carlo search tree (UCT) based on confidence upper bound to realize four sub chess

Monte Carlo search tree (UCT) based on confidence upper bound to realize four sub chess

2022-07-26 22:39:00 【biyezuopin】

Monte Carlo search tree based on upper bound of confidence (UCT) Realize four chess

Algorithm

This experiment adopts Monte Carlo search tree with upper bound of confidence (UCT) Realization , The algorithm is briefly described as follows :

Each node in the search tree represents a situation . For a node x,x Of the i Child node

Representation node x The situation is in the i The next situation formed after the listing . Search with the current situation as the root . For each node , Count all games based on the situation represented by this node and its child nodes , Record the total number of games n And the total number of wins m, Then the estimation of the winning probability of this node is

. In order to estimate the exact probability of winning , Enough game experiments are needed . Every experiment starts from the situation represented by the roots , Implement the following strategies :

If the current situation in the search tree has been node x Express , And all the sub situations produced by this situation in one step , All have been x The child nodes of represent , select UCB The drop corresponding to the largest child node in the upper bound of confidence . If you remember the node x The total number of trials and the number of wins recorded are n and m,x Child nodes of

among c Is the scale factor , In this study c=1, The specific value will be discussed later . here “ win victory ” It refers to the player represented by the current node ;

If the current situation in the search tree has been node x Express , But the secondary situation caused by a certain end of this situation , No reason x The child nodes of represent , Then choose to execute this settlement , And expand new nodes on the tree to record this new situation ;

If the current situation is not represented by any node in the search tree , Then perform a random drop . but ① If you execute a certain position, you can win directly , or ② If you don't implement a certain fall, you will fall directly , Then execute this settlement .

Update the information recorded by the nodes on the tree after each test . After performing enough tests , Select all child nodes of the root node to estimate the winning rate

Will converge to the real winning rate , Choose the biggest one as the formal decision .

UCT The algorithm balances exploration and utilization : When the number of attempts of a strategy is small , Its upper bound of confidence is high , The algorithm will try enough strange decisions ( Explore ); When a strategy is estimated to have a high winning rate , The upper limit of confidence is also high , The algorithm will focus on these strategies , Avoid wasting too much computation in a situation where the winning rate is too small ( utilize ).

The above algorithm is not naive UCT Algorithm , There are two improvements worth noting :

- If the node x No child nodes yet , Not all its child nodes are expanded at one time , Instead, it expands one by one ( See steps above 2), Avoid wasting too much computation at one time when expanding a node .

- step 3 Not a random strategy , But can perceive the situation that the next step is bound to win or lose . It doesn't take much calculation to decide whether to win or lose , But this action effectively avoids the blindness of pure random strategy .

Measurement and evaluation

To evaluate this algorithm , The following measurements were made . Although the random noise is large , But it can also explain some problems .

Monte Carlo algorithm requires a certain number of experiments , Estimate the winning rate

To converge to the real winning rate . In order to analyze whether the number of experiments performed by the algorithm is sufficient , Select several references AI Play chess with it , Each group of data is measured 20 Round game , Half of them are pioneers , The other half is the backhand . Measure program run time ( Proportional to the number of tests ) The relationship with the winning rate is as follows :

| reference AI;; The elapsed time | 10.dll | 20.dll | 30.dll | 40.dll | 50.dll | 60.dll | 70.dll | 80.dll | 90.dll | 100.dll |

|---|---|---|---|---|---|---|---|---|---|---|

| 0.5s | 1 | 1 | N/A | 1 | 0.95 | 0.95 | 0.95 | 0.85 | 0.95 | 0.85 |

| 1.0s | 1 | 1 | N/A | 1 | 0.95 | 1 | 1 | 1 | 0.95 | 0.65 |

| 1.5s | 1 | 1 | N/A | 0.9 | 1 | 1 | 0.95 | 1 | 1 | 0.6 |

| 2.0s | 1 | 0.95 | N/A | 1 | 1 | 0.95 | 0.85 | 1 | 1 | 0.75 |

| 2.5s | 1 | 0.95 | N/A | 1 | 1 | 0.95 | 1 | 0.95 | 1 | 0.75 |

It can be seen that ,0.5s~2.5s Within the scope of , There is no statistical difference between the winning rate and the running time . It can be considered that the number of tests is sufficient .

UCB There is a proportional coefficient in the upper bound of confidence c, To determine the best c Value , Make the following measurements . Still measure each group of data 20 Round game , Half of them are pioneers , The other half is the backhand .

| reference AI;c | 70.dll | 80.dll | 90.dll | 100.dll |

|---|---|---|---|---|

| 0.1 | 0 | 0.1 | 0.05 | 0 |

| 0.4 | 0.9 | 1 | 0.85 | 0.55 |

| 0.7 | 0.95 | 0.95 | 1 | 0.75 |

| 1.0 | 1 | 0.85 | 0.95 | 0.8 |

| 1.3 | 1 | 1 | 1 | 0.7 |

| 1.6 | 1 | 0.95 | 0.95 | 0.5 |

| 1.9 | 0.95 | 0.9 | 0.75 | 0.6 |

The plot :

It can be seen that c≤0.5 or c≥1.5 The winning rate decreased significantly , but 0.5<c<1.5 There is no significant difference in the winning rate . In this experiment, we choose c=1 As the final parameter .

Other attempts to improve

Except in “ Algorithm ” In addition to the two improvements described in the section , I also tried other improvement methods as follows , But experiments show that the effect is not good , Therefore, it has not been adopted in the final version .

UCT One disadvantage of the algorithm is : A better decision must be tried enough times , Its value can be reflected in the estimated winning rate

in , A lot of computation is wasted . This is because the winning rate of a node is obtained by the weighted average of the winning rates of all its child nodes , Instead of the maximum winning rate of its child nodes .

I try to set the winning probability of a node as a random variable , Suppose it follows a normal distribution N(μ,σ), With μ+σ As an alternative, the upper bound of confidence is searched by Monte Carlo . The winning probability of each node is defined as the maximum possible winning probability of its child nodes . Although the distribution to which the maximum of some normal distributions obey has no analytical solution , But it can be fitted by simple function , Through this method, the winning probability of each node in the tree can be estimated , Finally, choose the decision with the greatest expectation of winning .

Experiments show that , This method is not as successful as the naive Algorithm . I think it may be caused by the following reasons :

- “ Measurement and evaluation ” The analysis in the first section shows , The number of attempts in the naive algorithm is enough ,UCT The amount of wasted computation has not had too many negative effects ;

- The maximum values of some normal distribution variables no longer obey the normal distribution , According to this method, it is estimated that there will be deviation .

Description of code implementation

In the code ,Board Class is used to manage the current situation , Maintain the location and top Location information ;Search Class is used to execute UCT Search for .Strategy.cpp For interacting with the framework .

Method estimation will produce deviation .

Description of code implementation

In the code ,Board Class is used to manage the current situation , Maintain the location and top Location information ;Search Class is used to execute UCT Search for .Strategy.cpp For interacting with the framework .

边栏推荐

- Does Guosen Securities charge for opening a mobile account? Is it safe to open an account?

- what is qrc in qt

- Day07 MySql知识点再总结与多表查询

- [IO Development Notes] smart cloud intelligent watering device practice (3) - automatic code generation and transplantation

- DTS搭载全新自研内核,突破两地三中心架构的关键技术|腾讯云数据库

- Sequence table implementation

- The potential of emerging markets is unlimited, and advance.ai risk control products help Chinese offshore enterprises build a solid foundation for safe development

- 挖财钱堂和启牛学堂哪个更好一些?是安全的吗

- 国产DRAM年底将量产,但前路依旧漫漫!

- 毕业5年,从信息管理转行软件测试工程师,我的月薪终于突破了12k

猜你喜欢

Docker uses mysql:5.6 and owncloud image to build a personal network disk, install and build a private warehouse harbor

yolov1

v-model语法糖的实现

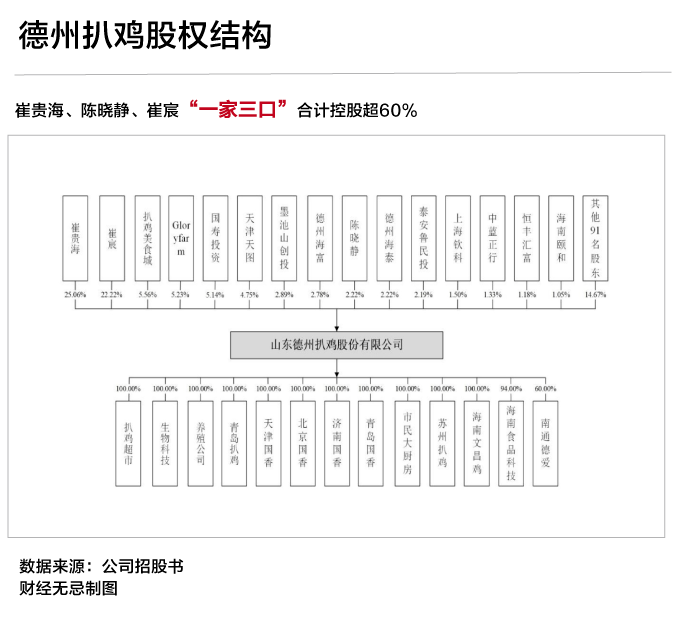

Sell 700million a year, and get ready for the IPO of Dezhou braised chicken

【Io开发笔记】机智云智能浇花器实战(3)-自动生成代码移植

测试开发是开发吗?

LeetCode 122:买卖股票的最佳时机 II

Those environment configurations and plug-ins of idea

Internet celebrity spicy bars can't catch young people

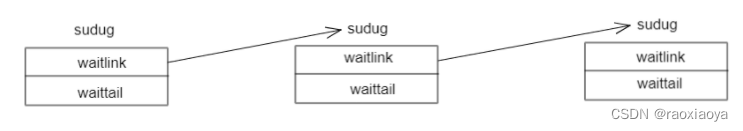

golang中的信号量的实现原理

随机推荐

缓存数据库Memcached

A few pictures help you clarify "China's financial institution system"

IDEA的那些环境配置及插件

JMeter -- response Chinese garbled code processing

DAO 的发展状态

v-model语法糖的实现

[IO Development Notes] smart cloud intelligent watering device practice (3) - automatic code generation and transplantation

毕业5年,从信息管理转行软件测试工程师,我的月薪终于突破了12k

Sell 700million a year, and get ready for the IPO of Dezhou braised chicken

摩尔定律的新推力,英特尔先进封装技术详解!

面试 必备

Iptables prevents nmap scanning and enables incremental backup of binlog

【HCIP】OSPF 路由计算

Day07 MySql知识点再总结与多表查询

Detailed explanation of SQL secondary injection

Use ECs and OSS to set up personal network disk

Luo Xu talks with Siemens wanghaibin: advanced manufacturing requires benefits from Digitalization

Module 8 (message queue MySQL table storing message data)

华为Atlas900揭秘:集成数千颗昇腾910芯片,算力堪比50万台PC!

浮动引起的高度塌陷问题