当前位置:网站首页>RSP: An Empirical Study of remote sensing pre training

RSP: An Empirical Study of remote sensing pre training

2022-06-11 16:32:00 【Computer Vision Research Institute】

Pay attention to the parallel stars

Never get lost

Institute of computer vision

official account ID|ComputerVisionGzq

Study Group | Scan the code to get the join mode on the homepage

Computer Vision Institute column

author :Edison_G

Deep learning has greatly affected the research in the field of remote sensing image analysis .

Deep learning has greatly affected the research in the field of remote sensing image analysis . However , Most existing remote sensing depth models use ImageNet Pre training weight initialization , Among them, there is inevitably a large domain gap between natural images and aerial images , This may limit the fine-tuning performance of downstream remote sensing scene tasks .

So , Jingdong Exploration Research Institute and Wuhan University 、 University of Sydney With the help of the largest remote sensing scene so far MillionAID, Training from scratch includes convolutional neural networks (CNN) And vision which has shown good performance in natural image computer vision tasks Transformer(Vision Transformer) The Internet , A series of basic backbone models of remote sensing pre training based on supervised learning are obtained for the first time . And further study ImageNet Preliminary training (IMP) And remote sensing pre training (RSP) Including semantic segmentation 、 The impact of a series of downstream tasks including target detection .

The experimental results confirm the advanced Vision Transformer Series model ViTAE Superiority in remote sensing missions , And found RSP It is unique in the effectiveness of remote sensing tasks and the perception of related semantics . The experimental results further show that RSP It will be affected by the difference between upstream and downstream tasks , These findings put forward new requirements for large-scale remote sensing data sets and pre training methods .

01

Research background

In recent years , Depth learning has the advantage of automatically extracting depth features that reflect the inherent attributes of objects , It has made an impressive breakthrough in the field of computer vision , Remote sensing is no exception . In the field of remote sensing , The most commonly used depth model is convolutional neural network (CNN). at present , Almost all remote sensing depth models are the most famous image data sets in the field of computer vision ImageNet-1K Pre-training on the data set , This data set comes from 1,000 Millions of real-world images in different categories enable the model to learn powerful representations . Then these pre trained models can be used as the backbone network of remote sensing tasks for further fine-tuning .

Although these models have achieved remarkable results in remote sensing missions , But there are still some problems to be studied . Intuitively speaking , Compared to natural images , The remote sensing image is in the perspective 、 Color 、 texture 、 Layout 、 There is obviously a large domain gap in terms of objects . Previous methods try to narrow this gap by further fine-tuning the pre training model on the remote sensing image data set . However ,ImageNet Preliminary training (IMP) The introduced system deviation has a side effect on the performance that can not be ignored . On the other hand , We noticed that , With the progress of Remote Sensing Technology , A variety of sensors capture a wealth of remote sensing images , Can be used for pre training . As a representative example ,MillionAID It is by far the largest remote sensing image data set , It is from Google Earth, which contains multiple sensor images (GE) It's collected on the website , And have similar ImageNet-1K Millions of images , This makes remote sensing pre training (RSP) Make it possible .

RSP Be able to train the depth model from scratch , This means that candidate models need not be limited to off the shelf CNN. therefore , In this paper , We also studied vision Transformer(Vision Transformer) The backbone network , They have shown amazing performance in the field of computer vision . And CNN Compared with convolution which is good at local modeling ,Vision Transformer The bulls in (MHSA) It can flexibly capture different global contexts . lately , The Exploration Institute proposed ViTAE The model explores convolution and MHSA The parallel structure of , To simultaneously model locality and long-range dependencies , stay ImageNet Good results have been achieved in classification tasks and downstream visual tasks . Besides , It also extracts multi-scale features by expanding convolution module and hierarchical design , This is important for computer vision downstream tasks , Especially in the task of remote sensing image understanding , All have important value . So we studied CNN And hierarchy Vision Transformer The network goes through RSP after , In scene recognition 、 Semantic segmentation 、 Fine tuning performance on remote sensing tasks such as target detection and change detection . To achieve these goals , We conducted extensive experiments on nine popular data sets , And draw some useful conclusions .RSP It is a new research direction in remote sensing image understanding , But it's still in the exploratory stage , Especially based on Vision Transformer The pre training method of this new network architecture . We hope this research can fill this gap , And provide useful insights for future research .

02

MillionAID, ViTAE and ViTAEv2 Introduction to

1.MillionAID

MillionAID It is by far the largest data set in the field of remote sensing . It contains 100,0848 Non overlapping scenes , Yes 51 class , Each category has about 2,000-45,000 Images . This data set is from Google Earth , Including but not limited to SPOT、IKONOS、WorldView and Landsat Series of sensors , Therefore, the image resolution is different . The maximum resolution can reach 0.5m, The smallest is 153m. Image sizes range from 110*110 To 31,672*31,672. The data sets are RGB Images , It is very suitable for training typical visual neural network models .

2.ViTAE and ViTAEv2

ViTAE It is an advanced technology recently proposed by the exploration and Research Institute Vision Transformer Model , It has a deep and narrow design , Downsampling quickly at the beginning of the network , Then deepen the network , Reduce model size and computing costs while improving performance .ViTAE The model first passes through three Reduction Cell Down sample the input image to 1/16 The resolution of the . And ViT similar , Before adding a location code , take class token With the third Reduction Cell The output connection of . Then stack multiple Normal Cell, And always maintain the resolution of the feature map . the last one Normal Cell Of class token Input to the linear layer for classification .ViTAE Model in ImageNet Excellent classification performance on datasets , But it is inconvenient like CNN That produces hierarchical intermediate features , So as to migrate to segmentation 、 Other downstream tasks such as detection and attitude estimation ( There are some new technologies to solve this problem , for example ViTDet, And has achieved good results , Please pay attention to our recurrence Repo:https://github.com/ViTAE-Transformer/ViTDet).

On this basis , The exploration research institute proposed ViTAEv2, It USES a ResNet and Swin And other popular backbone networks . stay ViTAEv2 in , The network is divided into four stages . Each phase begins with Reduction Cell Take the next sample , Then stack multiple Normal Cell Feature transformation . At the last stage Normal Cell Then use the global average pooling layer to replace class token. When fine tuning downstream tasks , The pooling layer is removed , The rest of the network is connected to the decoder of the corresponding task . chart 2 Show the original ViTAE and ViTAEv2 The network architecture of .

Reduction Cell and Normal Cell yes ViTAE The two most important modules in , They are based on typical Transformer Modules .Reduction Cell It is used for down sampling and provides multi-scale context . say concretely , In the input normalization layer and MHSA Before layer , These features are reduced by a pyramid (PRM). The module contains multiple parallel extended convolutions with different expansion rates , The step size controls the spatial downsampling rate . stay PRM after , Features from parallel branches are connected in the channel dimension .PRM take CNN The scale invariance of ViTAE, Local modeling is done by inputting PRM At the same time, the features of are sent to the parallel convolution module (PCM) Middle to finish .PCM Located in and containing PRM and MHSA In an additional branch with parallel global dependency paths , It consists of three successive convolution layers . By adjusting the stride ,PCM Lower sampling rate and PRM identical . come from MHSA、PCM And the three characteristics of the original residual branch in the input feedforward network (FFN) Before the addition fusion . It should be noted that ,Normal Cell and Reduction Cell Having a similar structure , But not including PRM modular .

suffer Swin Transformer Inspired by the ,ViTAEv2 Above cell Some of MHSA Is replaced by a window MHSA(WMHSA) To reduce computing costs . Considering that the feature size becomes smaller in the later stage , There is no need to divide features with windows . therefore , Only the first two stages MHSA By WMHSA replace . It should be noted that ,ViTAEv2 Adopted WMHSA No need to be like Swin Transformer That's how you do the cyclic offset , because WMHSA Is in PRM The merging of multiscale features is performed on , The information exchange between different regions has been realized through the overlapping receptive fields of expanded convolution . Besides , Because convolution has been able to encode position information ,ViTAEv2 There is no need to use relative position coding .ViTAE and ViTAEv2 Different from cell The detailed structure and comparison of 3 Shown .

In this study , We mainly evaluate the original ViTAE Of “Small” edition , be known as ViTAE-S. Corresponding , We also used ViTAEv2-S Model , Because it has excellent representation ability and better mobility for downstream tasks .

03

Implementation of remote sensing pre training

1. Determine the pre training model

We first determine the use of RSP Type of depth model . So , We from MillionAID A mini training set and a mini evaluation set are built in the official training set , There were 9775 and 225 Zhang image . notes : The latter group is selected randomly from each category 5 Images to balance categories . about CNN, Using the classic ResNet-50 . Because this study mainly discusses RSP Under the CNN and Vision Transformer The performance of the model , Therefore, we also evaluated a series of typical Vision Transformer Network of , Include DeiT-S 、PVT-S and Swin-T. One consideration in selecting a particular version of the model is to ensure that these models are consistent with ResNet-50 as well as ViTAE-S The model has similar parameters . Besides , in consideration of ViT It's vision Transformer The most basic model of , We chose the minimum version ViT-B Model for reference .

surface II The results of each model are shown , It can be seen that , Even though ViT-B The maximum number of parameters is , But its performance is not as good as the classic ResNet-50.DeiT-S The worst , Because we did not use the teacher model to assist the training . Because our task is to use remote sensing images for pre training , Therefore, obtaining the corresponding teacher model can be regarded as our goal rather than the premise . By introducing the design paradigm of feature pyramid ,PVT-S And ViT-B Improved accuracy compared to . On this basis , original ViTAE-S The model further considers the traditions of locality and scale invariance CNN With inductive bias .

However , Due to the early down sampling module (Reduction Cell, RC) The feature resolution in is large , More calculations are needed , So it takes more training time .Swin-T By restricting... In a fixed window MHSA To solve this problem , Window offset is used to implicitly promote the communication between windows .ViTAEv2 This kind of window multi head self attention is introduced (Window MHSA, WMHSA), And because convolution bypass has been able to promote cross window information exchange , Thus, the window offset and relative position coding operations are omitted . Final ,ViTAEv2-S For optimal performance , And 2.3% Of top-1 The accuracy rate exceeds the second place .

Based on the above results , The specific procedure for selecting candidate models is as follows . First , We choose ResNet-50 As a rule CNN The representative network in . After remote sensing pre training ResNet-50, A new set of data can be provided on a series of remote sensing data sets CNN Reference baseline . Due to the low accuracy 、 There are many parameters , We have no choice DeiT-S and ViT-B Model as a candidate model . Besides , Due to stacking Transformer The design of the , They are difficult to migrate to downstream tasks .( There are some new technologies to solve this problem , for example ViTDet, And has achieved good results , Please pay attention to our recurrence Repo:https://github.com/ViTAE-Transformer/ViTDet).

Swin Transformer Also has the PVT The characteristic pyramid structure of , And USES the WMHSA Replace the overall situation MHSA, Save memory and computation . because Swin-T Of top-1 Accuracy greater than PVT And requires less training time , So we also chose Swin-T As a candidate model . about ViTAE Model , We choose the model with the highest performance , namely ViTAEv2-S, With the expectation that in the follow-up task ( Such as remote sensing scene recognition ) It has good performance .

2. Get the right weight

After determining the above candidate models , We do them RSP To get the weight of the pre training . say concretely , To keep category balance , We are MillionAID Randomly select from each category of the dataset 1,000 Zhang image , Form inclusion 51,000 Verification set of images , And contain 50,000 Picture of ImageNet The size of the validation set is quite , And put the rest 949,848 Images for training .

To get the right pre training weights , We are training algebra in different ways (epoch) Under the configuration of ViTAEv2-S Model . Results such as table III Shown . It can be observed that the model is about 40 individual epoch Start performance saturation after , Because with training 20 individual epoch comparison ,top-1 The accuracy has only improved 0.64%, And the next 20 individual epoch Only brought 0.23% The gain of . therefore , We chose to train first 40 individual epoch The network weight of is taken as ViTAEv2-S Of RSP Parameters , And apply to subsequent tasks . Intuition , Models that perform well on large-scale pre training data sets will also perform well on downstream tasks . therefore , We also used the "pass" in downstream missions 100 individual epoch Network weight of training . These models are suffixed with “E40” and “E100” Express .

about ResNet-50 and Swin-T, We followed Swin Your training settings , That is, the model is trained 300 individual epoch. In the experiment , We observed that Swin-T-E120 On the verification set top-1 The accuracy is roughly equivalent to ViTAEv2-S-E40. therefore , We also chose Swin-T-E120 Training weights . Again , We also chose the final network weight Swin-T-E300 As and ViTAEv2-S-E100 Comparison . To make the experiment Fair , The use of 40 individual epoch Trained ResNet-50 and Swin-T The weight of , Because they are associated with ViTAEv2-S-E40 After the same training Algebra .

The final pre training model is listed in table IV in . It can be seen that , The accuracy of the verification set almost increases with the training epoch To increase by . however ,Swin-T-E300 Its performance is slightly lower than Swin-T-E120. For all that , We still have Swin-T-E300 Model . Because the model sees more samples in the training stage , It may have a stronger generalization ability .

04

Fine tuning experiments on downstream tasks

1. Scene recognition

Quantitative experiments : surface V The above candidate models and others that are pre trained using different methods are shown SOTA Result of method . Bold words in the last three groups indicate the best results in each group , and “*” Represents the best of all models ( It has the same meaning in other tasks ). And ImageNet In the process of the training ResNet-50 comparison , Our remote sensing pre training ResNet-50 Improved accuracy in all settings . These results mean RSP It brings a better starting point for the optimization of the subsequent fine-tuning process . Again ,RSP-Swin-T Performance on three settings is better than IMP-Swin-T, Comparable results have also been obtained on the other two settings . Besides , Compared with other complex methods ,ResNet-50 and Swin-T Use only RSP Weight without changing the network structure has achieved competitive results , Thus the value of remote sensing pre training is proved .

Besides , In comparison ImageNet In the process of the training ResNet-50 and Swin-T when , We can find out IMP-Swin-T Better performance on all settings , because Vision Transformer Have stronger context modeling ability . But through RSP After the weight is initialized ,ResNet Become more competitive . because ViTAEv2-S At the same time, it has the ability of local modeling and remote dependency modeling , No matter what IMP and RSP, It is superior to... In almost all settings ResNet-50 and Swin-T. Besides ,RSP-ViTAEv2-S In addition AID (5:5) Best performance on almost all settings except .

Qualitative experiments : chart 4 It shows the response of different regions of images from various scenes of different evaluation models . And IMP-ResNet-50 comparison ,RSP-ResNet-50 Focus more on important goals . It means RSP be conducive to ResNet-50 Learn to better express , Thanks to MillionAID A large number of semantically similar remote sensing images provided in the dataset . It's amazing ,IMP-Swin-T The model focuses on the background area , But after RSP after , Its foreground response has been significantly enhanced .ViTAEv2-S By combining CNN And visual converter , Both local and global context capture capabilities , Realize the overall perception of the whole scene .RSP-ViTAEv2-S Not only focus on the main object , Relevant areas in the background are also considered . On the foreground object ,RSP-ViTAEv2-S Can also give higher attention , In a complex scene where objects are distributed ,RSP-ViTAEv2-S It can form a unified and complete feature representation , Effectively perceive the overall information of the scene .

2. Semantic segmentation

Quantitative experiments : surface VII Shows the adoption of UperNet When the framework , Our approach and others SOTA Method in iSAID Segmentation results on dataset . It can be seen that , Transfer the backbone network from ResNet-50 Change to Swin-T, Then change to ViTAEv2-S when , Improved performance . The results are consistent with the above scene recognition results , Indicates visual Transformer Have better presentation ability . On the other hand , after ImageNet In the process of the training IMP-Swin-T Achieved competitive results , and IMP-ViTAEv2-S stay iSAID Best performance on datasets . surface VII It also shows RSP The advantage of the model is to perceive some categories with clear remote sensing semantics , for example “ bridge ”, This is consistent with the findings of previous scene recognition tasks .

Qualitative experiments : chart 6 Shown in Potsdam Data sets with different pre trained backbone networks UperNet Some visual segmentation results of segmentation model . For strip-shaped features , Its length is long , The model is required to capture long-range context , And the width is narrow , The local perception ability of the model is also required , and ViTAEv2 The network will CNN The locality and scale invariance of are introduced into Vision Transfomer In the network , At the same time, it has CNN and Transformer The advantages of , Therefore, global and local awareness can be realized at the same time . therefore , Only ViTAEv2-S Successfully connected the long strip of low vegetation ( As shown in the red box ).

3. object detection

Quantitative experiments : surface VIII Shows The result of target detection experiment . In challenging DOTA On dataset , It can be seen that advanced ORCN Detection framework , use ResNet-50 or Swin-T The backbone network model performs well .ViTAEv2-S By introducing CNN And scale invariance , Achieved amazing performance , take ORCN The baseline has been improved by nearly 2% mAP. Another thing to note is ,RSP The performance on these three backbone networks is better than IMP.RSP-ViTAEv2-S In general mAP Than IMP-ViTAEv2-S high , because RSP stay “ bridge ” And including “ The helicopter ” and “ The plane ” Has significant advantages in the aircraft category including , In other categories , The gap between the two models is not very large .

Qualitative experiments : chart 7 Visualization DOTA Use... On test sets ViTAEv2-S Backbone network ORCN Some test results of the model . Red box , When objects are densely distributed ,RSP-ViTAEv2-S You can still predict the correct object categories , and IMP-ViTAEv2-S Being confused by dense context and making wrong predictions . For long strips “ bridge ” Category ,IMP-ViTAEv2-S There is a missed inspection ( See yellow box ), and RSP-ViTAEv2-S The model successfully detects the object with a higher confidence score , This again echoes previous findings .

4. change detection

Quantitative experiments : surface X In this paper, we show the application of different pre training backbone networks BIT Quantitative experimental results of the framework on change detection task . You can see , Self supervised SeCo Pre training weights perform well on this task , although SeCo The goal of is to realize the seasonal invariance feature learning through comparative learning , But because it adopts the method of multi head subspace embedding to encode the change features , Therefore, it can still learn the feature expression sensitive to seasonal changes in specific branches . For all that , adopt IMP or RSP In the process of the training ViTAEv2-S Better performance than SeCo-ResNet-50, Shows the benefits of using an advanced backbone network . Compared with other methods ,ViTAEv2-S Achieved the best performance , It shows that Vision Transformer The potential of the model in the field of Remote Sensing .

Under different tasks through different models RSP and IMP Performance comparison under , We can infer that the granularity of change detection should be between segmentation and detection , Because although it is a split task , But there are only two categories , There is no need to identify specific semantic categories .

Qualitative experiments : chart 8 Some visual change detection results are shown . It can be seen that ,IMP Of ResNet-50 and Swin-T It can not well detect the changes of the roads in the natural scene . use RSP It can partially alleviate this problem .SeCo-ResNet-50 The detection of Road area is further improved , This is different from the table X The results are the same in . Compared with the above model ,ViTAEv2-S The model captures the road details effectively . In the scene of artificial change ,ViTAEv2-S The model solves the problem of object adhesion in all other model results , This shows that ViTAEv2-S The feature of is more discriminative in distinguishing objects and backgrounds .

5. Comprehensive comparison of different remote sensing pre training backbone networks

Last , We made a comprehensive comparison RSP Performance of different backbone networks on all tasks . say concretely , We average the scores of all data sets for each task , Results such as table XI. You can find , Pre training more epoch The backbone of usually performs better on downstream tasks , Because they get a stronger representation . Although there are exceptions , For example, pre training 300 Generation Swin-T The performance of model in object detection task is not as good as that of pre training 120 The corresponding model of generation , This implies that task differences are also important . Combined with the CNN and Vision Transformer Superior ViTAEv2-S The model shows the best performance on all tasks .

05

Conclusion

In this study , We are in the largest remote sensing data set MillionAID Based on CNN and Vision Transformer Remote sensing pre training problem , And comprehensively evaluate their application in scene recognition 、 Semantic segmentation 、 Performance on four downstream tasks of object detection and change detection , And connect them with ImageNet Pre training and others SOTA Methods for comparison . Through comprehensive analysis of the experimental results , We come to the following conclusion :

(1) With the traditional CNN The model compares , Vision Transformer Excellent performance in a series of remote sensing missions , especially ViTAEv2-S This will CNN The inherent inductive bias of is introduced into Vision Transformer Advanced model of , The best performance is achieved in almost all settings for these tasks .

(2) classic IMP Enable the depth model to learn more general representations . therefore ,IMP When processing remote sensing image data , Competitive baseline results can still be produced .RSP Have produced a comparable IMP An equivalent or better result , And because the data difference between the upstream pre training task and the downstream task is reduced , So in some specific categories ( for example “ bridge ” and “ The plane ”) Better performance on .

(3) The difference between tasks is right RSP The performance of . If the representation required for a specific downstream task is closer to the upstream pre training task ( For example, scene recognition ), be RSP It usually leads to better performance .

We hope that this research can provide the remote sensing community with information about the use of advanced Vision Transformer And remote sensing pre training . For the convenience of everyone , All remote sensing pre training models and related codes have been open source , See https://github.com/ViTAE-Transformer/ViTAE-Transformer-Remote-Sensing . in addition , About using non hierarchical Vision Transformer Research progress in the application of models to downstream tasks , Can pay attention to ViTDet The method of and our recurrence code :https://github.com/ViTAE-Transformer/ViTDet . We will be in ViTAE-Transformer-Remote-Sensing The official repo The corresponding results are constantly updated in .

Thesis link :https://arxiv.org/abs/2204.02825

Project address :https://github.com/ViTAE-Transformer/ViTAE-Transformer-Remote-Sensing

reference

[1] D.Wang, J. Zhang, B.Du, G-S.Xia and and D. Tao, “An Empirical Study of Remote Sensing Pretraining”, arXiv preprint, axXiv: 2204: 02825, 2022.

[2] Y. Long, G.-S. Xia, S. Li, W. Yang, M. Y. Yang, X. X. Zhu, L. Zhang, and D. Li, “On creating benchmark dataset for aerial image interpretation: Reviews, guidances and million-aid,” IEEE JSTARS, vol. 14, pp. 4205–4230, 2021.

[3] Y. Xu, Q. Zhang, J. Zhang, and D. Tao, “Vitae: Vision transformer advanced by exploring intrinsic inductive bias,” NeurIPS, vol. 34, 2021.

[4] Q. Zhang, Y. Xu, J. Zhang, and D. Tao, “Vitaev2: Vision transformer advanced by exploring inductive bias for image recognition and beyond,” arXiv preprint arXiv:2202.10108, 2022.

[5] T. Xiao, Y. Liu, B. Zhou, Y. Jiang, and J. Sun, “Unified perceptual parsing for scene understanding,” in ECCV, 2018, pp. 418–434.

[6] X. Xie, G. Cheng, J. Wang, X. Yao, and J. Han, “Oriented r-cnn for object detection,” in ICCV, October 2021, pp. 3520–3529.

[7] H. Chen, Z. Qi, and Z. Shi, “Remote Sensing Image Change Detection With Transformers,” IEEE TGRS., vol. 60, p.3095166, Jan. 2022.

[8] Y. Li, H. Mao, R. Girshick, K. He. Exploring Plain Vision Transformer Backbones for Object Detection[J]. arXiv preprint arXiv:2203.16527, 2022.

THE END

Please contact the official account for authorization.

The learning group of computer vision research institute is waiting for you to join !

ABOUT

Institute of computer vision

The Institute of computer vision is mainly involved in the field of deep learning , Mainly devoted to face detection 、 Face recognition , Multi target detection 、 Target tracking 、 Image segmentation and other research directions . The Research Institute will continue to share the latest paper algorithm new framework , The difference of our reform this time is , We need to focus on ” Research “. After that, we will share the practice process for the corresponding fields , Let us really experience the real scene of getting rid of the theory , Develop the habit of hands-on programming and brain thinking !

VX:2311123606

Previous recommendation

In recent years, several good papers have implemented the code ( With source code download )

Based on hierarchical self - supervised learning, vision Transformer Scale to gigapixel images

YOLOS: Rethink through target detection Transformer( With source code )

Fast YOLO: For real-time embedded target detection ( Attached thesis download )

边栏推荐

- 面试经典题目:怎么做的性能测试?【杭州多测师】【杭州多测师_王sir】

- [learn FPGA programming from scratch -17]: quick start chapter - operation steps 2-5- VerilogHDL hardware description language symbol system and program framework (both software programmers and hardwa

- Complete test process [Hangzhou multi tester] [Hangzhou multi tester \wang Sir]

- web网页设计实例作业 ——河南美食介绍(4页) web期末作业设计网页_甜品美食大学生网页设计作业成品

- C# 启动一个外部exe文件,并传入参数

- 从0到1了解Prometheus

- How the autorunner automated test tool creates a project -alltesting | Zezhong cloud test

- 微信小程序时间戳转化时间格式+时间相减

- [sword finger offer] 21 Adjust array order so that odd numbers precede even numbers

- Data enhancement

猜你喜欢

Ruiji takeout project (III) employee management business development

pycharm和anaconda的基础上解决Jupyter连接不上Kernel(内核)的问题--解决方案1

What is a generic? Why use generics? How do I use generics? What about packaging?

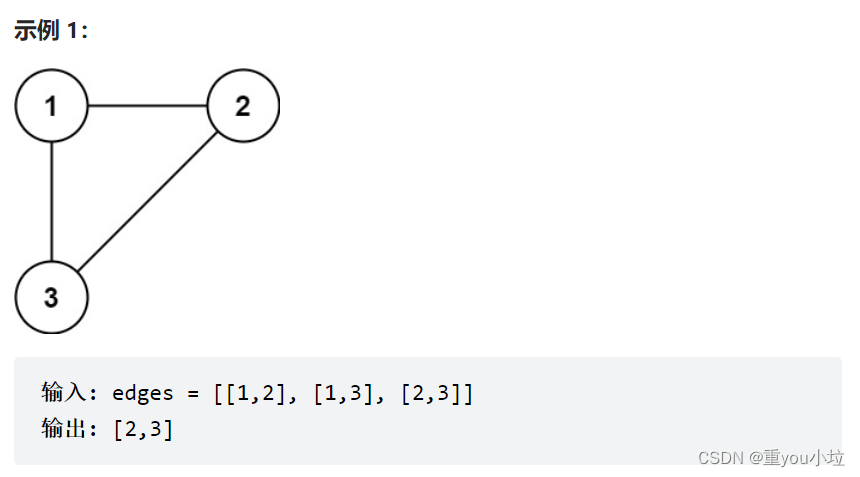

leetcode684. 冗余连接(中等)

leetcode417. 太平洋大西洋水流问题(中等)

What happened to the frequent disconnection of the computer at home

leetcode785. 判断二分图(中等)

如何优化 Compose 的性能?通过「底层原理」寻找答案 | 开发者说·DTalk

【剑指Offer】22.链表中倒数第K节点

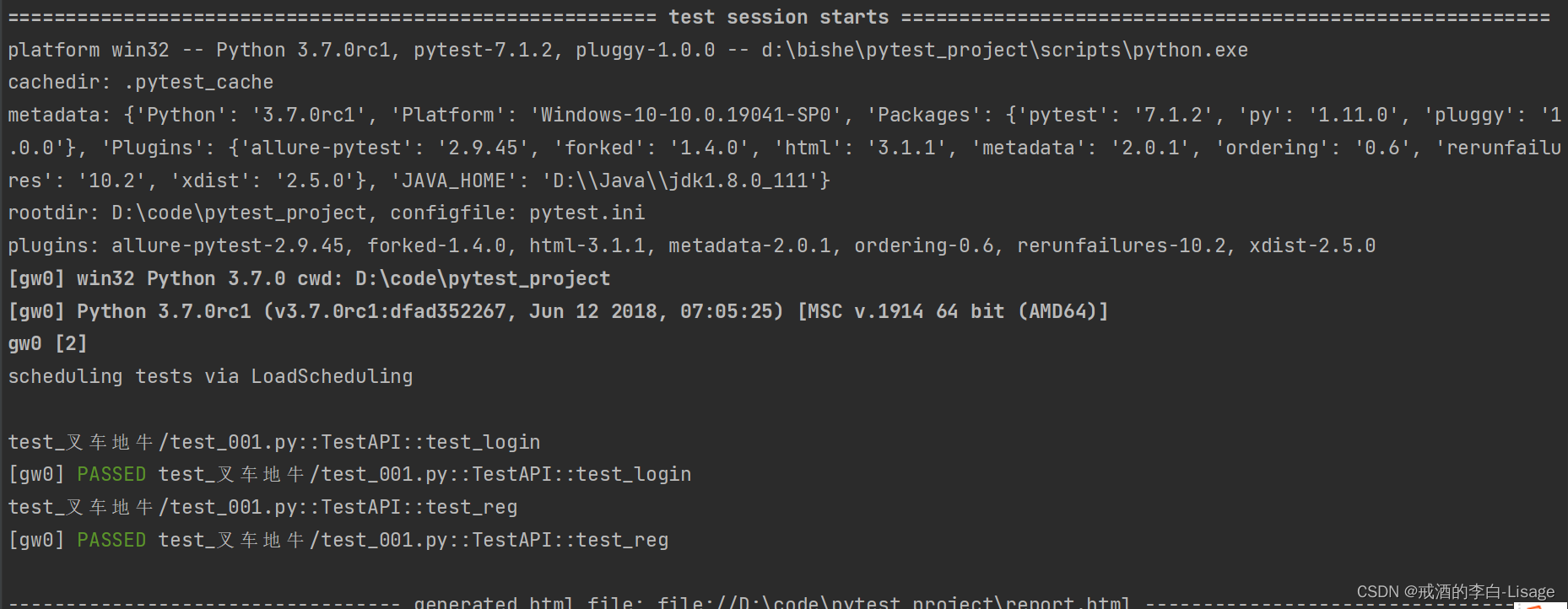

Pytest测试框架基础篇

随机推荐

面试高频算法题---最长回文子串

What happened to the frequent disconnection of the computer at home

Production problem troubleshooting reference

leetcode-141. Circular linked list

leetcode-141.环形链表

Leetcode 1974. 使用特殊打字机键入单词的最少时间(可以,一次过)

学生网站模板棕色蛋糕甜品网站设计——棕色蛋糕甜品店(4页) 美食甜品网页制作期末大作业成品_生鲜水果网页设计期末作业

How the autorunner automated test tool creates a project -alltesting | Zezhong cloud test

unittest 如何知道每个测试用例的执行时间

MySQL快速入门实例篇(入内不亏)

2022G1工业锅炉司炉考题及模拟考试

2022安全员-A证考试题模拟考试题库模拟考试平台操作

[sword finger offer] 21 Adjust array order so that odd numbers precede even numbers

LeetCode——42. 接雨水(双指针)

问题 AC: 中国象棋中的跳马问题

Laravel 8 uses passport for auth authentication and token issuance

【pytest学习】pytest 用例执行失败后其他不再执行

leetcode417. Pacific Atlantic current problems (medium)

大龄码农从北京到荷兰的躺平生活

2022 national question bank and mock examination for safety officer-b certificate