[Point Cloud Paper Reading Marathon, Frontier Edition 13] - GAPNet: Graph Attention based Point Neural Network for Exploiting Local Feature

2022-07-03 08:53:00 【LingbinBu】

GAPNet: Graph Attention based Point Neural Network for Exploiting Local Feature of Point Cloud

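Abstract

- Method: the paper proposes GAPNet, a new neural network for point clouds that learns local geometric representations by embedding a graph attention mechanism into stacked Multi-Layer-Perceptron (MLP) layers

- Introduces the GAPLayer, which learns an attention feature for each point by assigning different weights to different neighbors

- Uses a multi-head mechanism so that the GAPLayer can aggregate different features from independent heads

- Proposes an attention pooling layer over the neighborhood to obtain a local signature, which improves the robustness of the network

- Code: TensorFlow implementation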

Method

Let $X=\left\{x_{i} \in \mathbb{R}^{F}, i=1,2, \ldots, N\right\}$ be the input point cloud set. In this paper $F=3$, i.e. each point is represented by its coordinates $(x, y, z)$.

GAPLayer

Local structure representation

Because point clouds in real applications contain a very large number of points, connecting every pair of points is impractical. Instead, a directed graph $G=(V, E)$ is built with $k$-nearest neighbors, where $V=\{1,2, \ldots, N\}$ is the set of nodes, $E \subseteq V \times N_{i}$ is the set of edges, and $N_{i}$ is the neighborhood set of point $x_{i}$. The edge feature is defined as $y_{ij}=\left(x_{i}-x_{ij}\right)$, where $i \in V$, $j \in N_{i}$, and $x_{ij}$ denotes the neighboring point $x_{j}$ of $x_{i}$.
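A minimal NumPy sketch of this graph construction, not the authors' TensorFlow code. Assumptions: brute-force pairwise distances (fine for small $N$, but quadratic in memory), and a point is not counted as its own neighbor; the function name `knn_edge_features` is hypothetical.

```python
import numpy as np


def knn_edge_features(points, k):
    """Build a directed kNN graph and the edge features y_ij = x_i - x_ij.

    points: (N, F) array. Returns (idx, edges), where idx is an (N, k) array of
    neighbor indices sorted by distance and edges is an (N, k, F) array.
    Assumption: a point is excluded from its own neighborhood.
    """
    points = np.asarray(points, dtype=float)
    # Pairwise squared Euclidean distances, shape (N, N)
    diff = points[:, None, :] - points[None, :, :]
    dist2 = np.sum(diff ** 2, axis=-1)
    # Exclude self-loops by giving each point an infinite distance to itself
    np.fill_diagonal(dist2, np.inf)
    # Indices of the k closest points per row, nearest first
    idx = np.argsort(dist2, axis=1)[:, :k]
    # y_ij = x_i - x_ij, broadcasting x_i over its k neighbors
    edges = points[:, None, :] - points[idx]
    return idx, edges


pts = np.array([[0, 0, 0], [1, 0, 0], [0, 2, 0], [5, 5, 5]])
idx, y = knn_edge_features(pts, k=2)
# idx[0] is [1, 2]; y[0, 0] is x_0 - x_1 = [-1, 0, 0]
```

For large point clouds, a KD-tree such as `scipy.spatial.cKDTree` avoids materializing the $N \times N$ distance matrix.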

Single-head GAPLayer

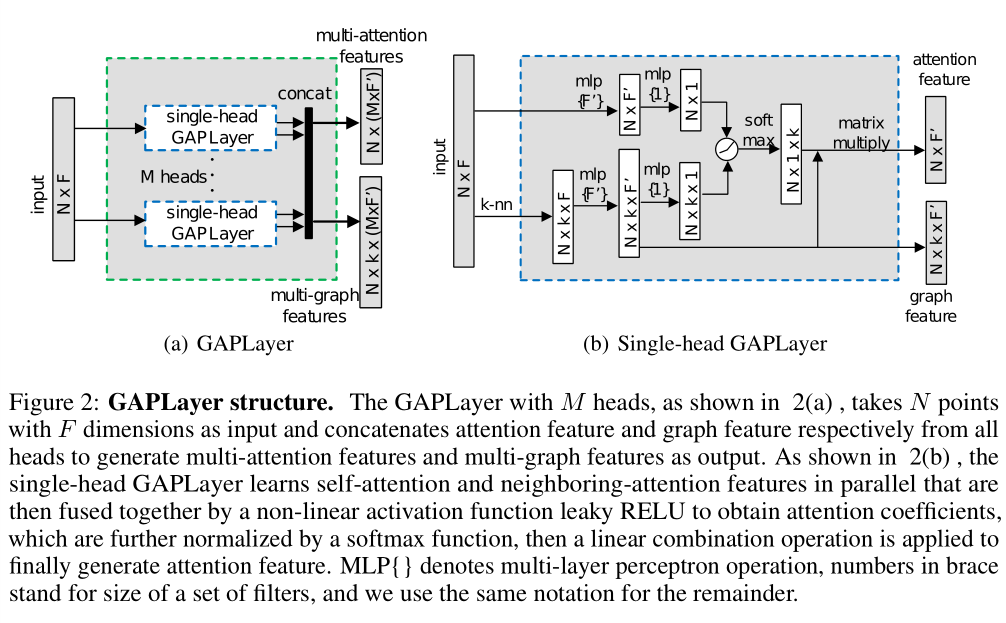

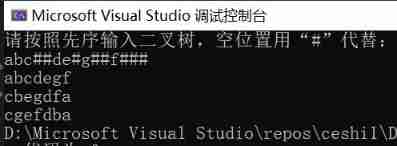

The structure of the single-head GAPLayer is shown in Fig. 2(b).

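To assign attention to each neighbor, a self-attention mechanism and a neighboring-attention mechanism are proposed to obtain the attention coefficients from each point to its neighbors, as shown in Fig. 1. Specifically, the self-attention mechanism learns self-coefficients from each point's own geometric information, while the neighboring-attention mechanism learns local-coefficients from its neighborhood.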

As an initialization step, the vertices and edges of the point cloud are encoded, i.e. mapped to higher-dimensional features with output dimension $F'$:

$$
\begin{aligned}
x_{i}^{\prime} &= h\left(x_{i}, \theta\right) \\
y_{ij}^{\prime} &= h\left(y_{ij}, \theta\right)
\end{aligned}
$$

where $h()$ is a parameterized non-linear function, chosen in the experiments to be a single-layer neural network, and $\theta$ is the set of learnable filter parameters.
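The encoding step can be sketched as one dense layer applied to the last axis, so the same weights encode vertices of shape $(N, F)$ and edges of shape $(N, k, F)$. This follows the notation above with a single shared $\theta$; the ReLU nonlinearity, the random initialization and the name `encode` are assumptions for illustration.

```python
import numpy as np


def encode(features, weight, bias):
    """h(., theta): one fully connected layer over the last axis, F -> F'.

    Assumption: ReLU is the nonlinearity. Works for any leading shape, so the
    same call encodes vertices (N, F) and edge features (N, k, F).
    """
    return np.maximum(features @ weight + bias, 0.0)


rng = np.random.default_rng(0)
N, k, F, F_out = 5, 4, 3, 16
theta_w = rng.normal(size=(F, F_out))   # shared learnable weights (random here)
theta_b = np.zeros(F_out)
x = rng.normal(size=(N, F))             # vertices x_i
y = rng.normal(size=(N, k, F))          # stand-in edge features y_ij
x_enc = encode(x, theta_w, theta_b)     # x'_i, shape (N, F')
y_enc = encode(y, theta_w, theta_b)     # y'_ij, shape (N, k, F')
```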

The final attention coefficients are obtained by fusing the self-coefficients $h\left(x_{i}^{\prime}, \theta\right)$ and the local-coefficients $h\left(y_{ij}^{\prime}, \theta\right)$, where $h\left(x_{i}^{\prime}, \theta\right)$ and $h\left(y_{ij}^{\prime}, \theta\right)$ are single-layer neural networks with a 1-dimensional output, and LeakyReLU() is the activation function:

$$
c_{ij}=\operatorname{LeakyReLU}\left(h\left(x_{i}^{\prime}, \theta\right)+h\left(y_{ij}^{\prime}, \theta\right)\right)
$$
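A sketch of the coefficient computation. Each of the two 1-output layers is modeled as a linear projection (weight vectors `a_self` and `a_nbr`, hypothetical names, no bias), and the LeakyReLU negative slope of 0.2 is an assumption borrowed from common graph attention practice, not a value stated in the text.

```python
import numpy as np


def leaky_relu(z, slope=0.2):
    """LeakyReLU; the 0.2 negative slope is an assumption."""
    return np.where(z > 0, z, slope * z)


def attention_logits(x_enc, y_enc, a_self, a_nbr):
    """c_ij = LeakyReLU(h(x'_i) + h(y'_ij)).

    x_enc: (N, F') encoded vertices, y_enc: (N, k, F') encoded edges,
    a_self, a_nbr: (F',) weights of the two single-output linear layers.
    Returns an (N, k) array of unnormalized coefficients.
    """
    self_coef = x_enc @ a_self      # (N,)   self-coefficients
    local_coef = y_enc @ a_nbr      # (N, k) local-coefficients
    # Broadcast each point's self-coefficient across its k neighbors
    return leaky_relu(self_coef[:, None] + local_coef)


x_enc = np.array([[1.0, 0.0]])
y_enc = np.array([[[0.0, 1.0], [0.0, -3.0]]])
c = attention_logits(x_enc, y_enc, np.ones(2), np.ones(2))
```

Here the self-coefficient is 1 and the local-coefficients are 1 and -3, so the sums 2 and -2 become 2 and -0.4 after LeakyReLU.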

The coefficients are normalized with a softmax over each point's neighborhood:

$$
\alpha_{ij}=\frac{\exp\left(c_{ij}\right)}{\sum_{k \in N_{i}} \exp\left(c_{ik}\right)}
$$
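The normalization runs over the neighbor axis only, so the weights of each point sum to 1. A sketch using the usual max-subtraction trick for numerical stability (the function name is hypothetical):

```python
import numpy as np


def neighbor_softmax(c):
    """alpha_ij = exp(c_ij) / sum_k exp(c_ik), normalized over each point's neighbors.

    c: (N, k) coefficients. Subtracting the row maximum does not change the
    result mathematically but prevents overflow in exp for large logits.
    """
    z = c - c.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


alpha = neighbor_softmax(np.array([[0.0, 0.0], [1000.0, 0.0]]))
# Each row sums to 1; the first row is [0.5, 0.5], the second is essentially [1, 0]
```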

The goal of the single-head GAPLayer is to compute a contextual attention feature for each point. To this end, the normalized coefficients are used to update the vertex features $\hat{x}_{i} \in \mathbb{R}^{F^{\prime}}$:

$$
\hat{x}_{i}=f\left(\sum_{j \in N_{i}} \alpha_{ij} y_{ij}^{\prime}\right)
$$

where $f()$ is a non-linear activation function; ReLU is used in the experiments.
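Note that the sum weights the encoded edge features $y_{ij}^{\prime}$, not the neighbor coordinates themselves. A short sketch of this update with $f$ = ReLU (hypothetical function name):

```python
import numpy as np


def aggregate(alpha, y_enc):
    """x_hat_i = ReLU(sum_j alpha_ij * y'_ij).

    alpha: (N, k) normalized coefficients, y_enc: (N, k, F') encoded edge
    features. Returns the (N, F') attention features.
    """
    weighted = np.einsum("nk,nkf->nf", alpha, y_enc)  # attention-weighted sum over neighbors
    return np.maximum(weighted, 0.0)                   # f = ReLU


alpha = np.array([[0.25, 0.75]])
y_enc = np.array([[[4.0, -4.0], [0.0, -4.0]]])
x_hat = aggregate(alpha, y_enc)  # weighted sum [1, -4] -> ReLU -> [1, 0]
```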

Multi-head mechanism

To capture sufficient structural information and stabilize the network, $M$ independent single-head GAPLayers are concatenated, producing multi-attention features with $M \times F^{\prime}$ channels:

$$
\hat{x}_{i}^{\prime}=\big\|_{m=1}^{M} \hat{x}_{i}^{(m)}
$$

As shown in Fig. 2, the outputs of the multi-head GAPLayer are the multi-attention features and the multi-graph features. $\hat{x}_{i}^{(m)}$ is the attention feature of the $m$-th head, $M$ is the number of heads, and $\|$ denotes concatenation along the feature channels.
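A self-contained NumPy sketch of a full multi-head GAPLayer, not the authors' TensorFlow implementation. Each head has its own randomly initialized parameters (dictionary keys are hypothetical), and both the attention features $\hat{x}_{i}^{(m)}$ and the encoded edge features $y_{ij}^{\prime(m)}$ are concatenated along the channel axis. Assumptions: ReLU for $h$ and $f$, linear single-output layers for the two coefficient branches, and a LeakyReLU slope of 0.2.

```python
import numpy as np


def single_head(x, y, params, slope=0.2):
    """One GAPLayer head (sketch, not the reference implementation).

    x: (N, F) vertices, y: (N, k, F) edge features y_ij = x_i - x_ij.
    params: dict with W (F, F'), b (F',), a_self (F',), a_nbr (F',);
    these names are hypothetical. Returns (x_hat, y_enc).
    """
    W, b = params["W"], params["b"]
    x_enc = np.maximum(x @ W + b, 0.0)             # x'_i, (N, F')
    y_enc = np.maximum(y @ W + b, 0.0)             # y'_ij, (N, k, F')
    c = (x_enc @ params["a_self"])[:, None] + y_enc @ params["a_nbr"]  # (N, k)
    c = np.where(c > 0, c, slope * c)              # LeakyReLU
    e = np.exp(c - c.max(axis=1, keepdims=True))
    alpha = e / e.sum(axis=1, keepdims=True)       # softmax over neighbors
    x_hat = np.maximum(np.einsum("nk,nkf->nf", alpha, y_enc), 0.0)  # f = ReLU
    return x_hat, y_enc


def multi_head(x, y, heads):
    """Run M independent heads and concatenate their outputs channel-wise."""
    outs = [single_head(x, y, p) for p in heads]
    attn = np.concatenate([o[0] for o in outs], axis=-1)   # (N, M * F')
    graph = np.concatenate([o[1] for o in outs], axis=-1)  # (N, k, M * F')
    return attn, graph


def random_head(rng, f_in, f_out):
    """Randomly initialized parameters for one head (illustration only)."""
    return {
        "W": rng.normal(size=(f_in, f_out)),
        "b": np.zeros(f_out),
        "a_self": rng.normal(size=f_out),
        "a_nbr": rng.normal(size=f_out),
    }


rng = np.random.default_rng(0)
N, k, F, F_out, M = 6, 3, 3, 8, 4
x = rng.normal(size=(N, F))
y = rng.normal(size=(N, k, F))   # stand-in for real kNN edge features
heads = [random_head(rng, F, F_out) for _ in range(M)]
attn, graph = multi_head(x, y, heads)
print(attn.shape, graph.shape)  # (6, 32) (6, 3, 32)
```

Because the heads share no parameters, the $m$-th block of $F'$ channels in `attn` is exactly the output of head $m$ on its own.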

Attention pooling layer

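To improve the stability and performance of the network, an attention pooling layer is defined on the multi-graph features: for each head, it takes the channel-wise maximum over the neighbors of each point, and the results of all heads are concatenated: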

$$
Y_{i}=\big\|_{m=1}^{M} \max_{j \in N_{i}} y_{ij}^{\prime(m)}
$$
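A minimal sketch of the pooling formula, assuming each head's multi-graph features are stored as an $(N, k, F')$ array (the function name is hypothetical):

```python
import numpy as np


def attention_pooling(graph_feats_per_head):
    """Y_i = ||_m max_{j in N_i} y'_ij^(m).

    graph_feats_per_head: list of M arrays of shape (N, k, F'), one per head.
    Returns an (N, M * F') local signature.
    """
    # Channel-wise max over the neighbor axis for each head, then concatenate heads
    return np.concatenate([g.max(axis=1) for g in graph_feats_per_head], axis=-1)


g1 = np.array([[[1.0, 5.0], [3.0, 2.0]]])    # head 1: N=1, k=2, F'=2
g2 = np.array([[[0.0, -1.0], [-2.0, 4.0]]])  # head 2
Y = attention_pooling([g1, g2])  # -> [[3, 5, 0, 4]]
```

Because the max is taken channel by channel, applying it directly to the already concatenated $(N, k, M F')$ multi-graph tensor gives the same result; keeping the heads separate just mirrors the formula.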

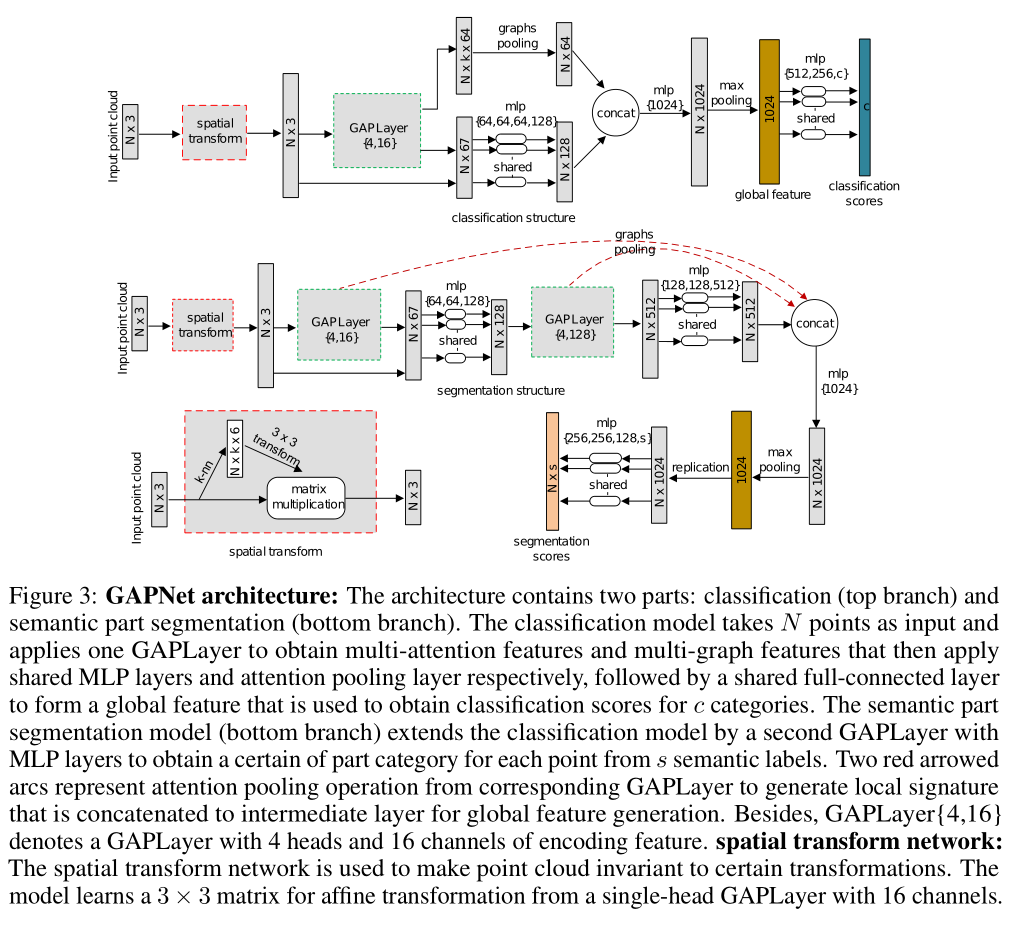

GAPNet architecture

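The architecture differs from PointNet in three ways:

- An attention-aware spatial transform network is used to make the point cloud invariant to certain transformations

- Instead of processing each point independently, it extracts local features

- The attention pooling layer produces a local signature, which is concatenated with intermediate layers to obtain the global descriptor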

Experiments

Classification

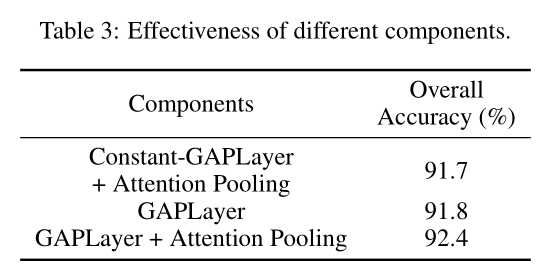

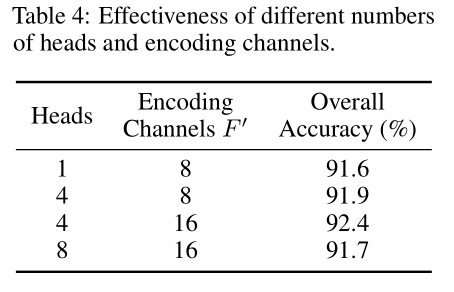

Ablation study

Semantic part segmentation

边栏推荐

- Using variables in sed command

- Find the combination number acwing 886 Find the combination number II

- Use the interface colmap interface of openmvs to generate the pose file required by openmvs mvs

- cres

- The difference between if -n and -z in shell

- 低代码起势,这款信息管理系统开发神器,你值得拥有!

- Noip 2002 popularity group selection number

- What is an excellent fast development framework like?

- Recommend a low code open source project of yyds

- State compression DP acwing 291 Mondrian's dream

猜你喜欢

LeetCode 515. 在每个树行中找最大值

Find the combination number acwing 885 Find the combination number I

AcWing 786. 第k个数

状态压缩DP AcWing 291. 蒙德里安的梦想

数位统计DP AcWing 338. 计数问题

状态压缩DP AcWing 91. 最短Hamilton路径

Binary tree traversal (first order traversal. Output results according to first order, middle order, and last order)

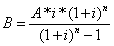

Mortgage Calculator

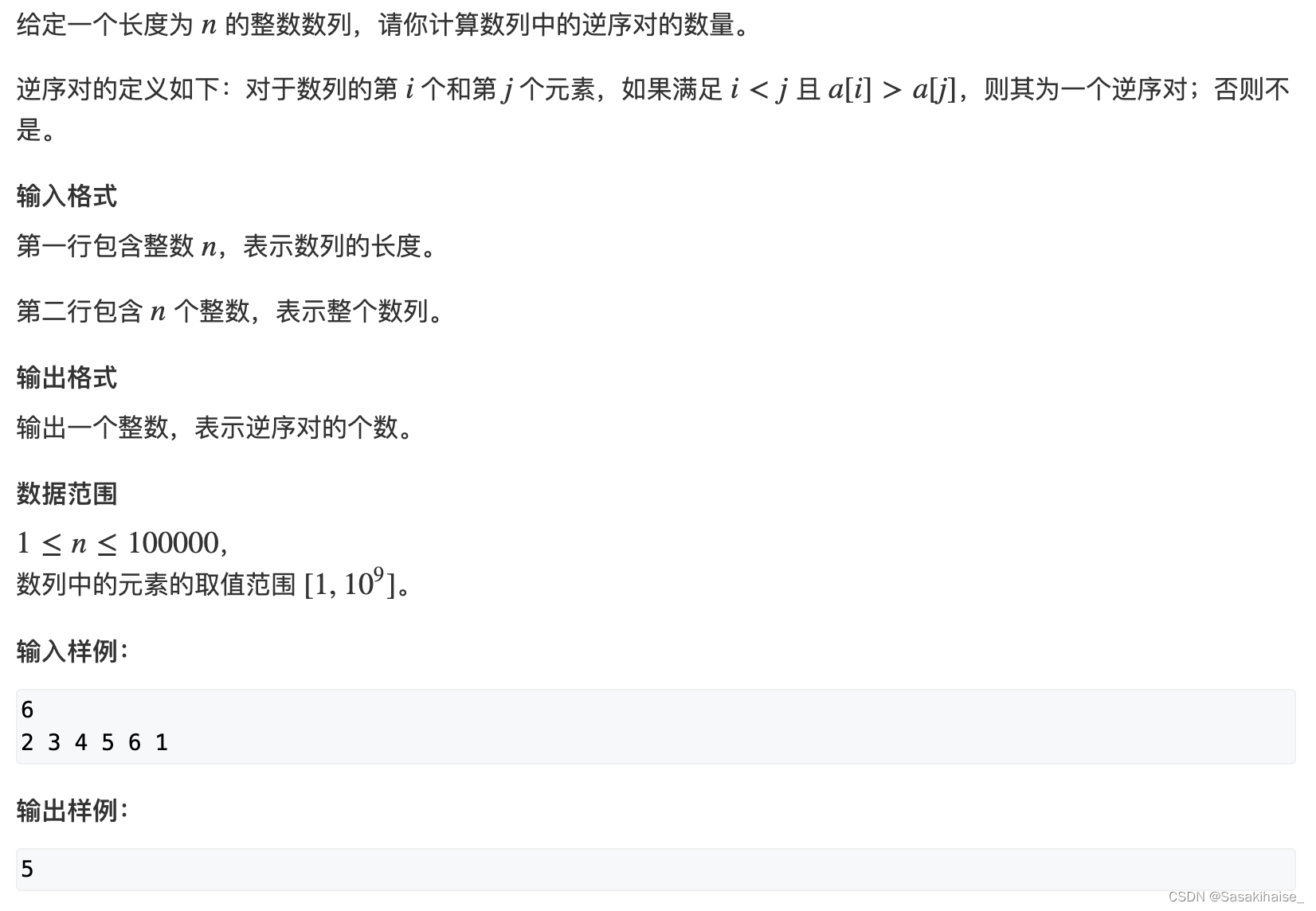

AcWing 788. Number of pairs in reverse order

How to place the parameters of the controller in the view after encountering the input textarea tag in the TP framework

随机推荐

Use the interface colmap interface of openmvs to generate the pose file required by openmvs mvs

Try to reprint an article about CSDN reprint

LeetCode 241. Design priorities for operational expressions

How to delete CSDN after sending a wrong blog? How to operate quickly

【点云处理之论文狂读经典版14】—— Dynamic Graph CNN for Learning on Point Clouds

高斯消元 AcWing 883. 高斯消元解线性方程组

【点云处理之论文狂读前沿版8】—— Pointview-GCN: 3D Shape Classification With Multi-View Point Clouds

String splicing method in shell

Digital management medium + low code, jnpf opens a new engine for enterprise digital transformation

Memory search acwing 901 skiing

LeetCode 1089. 复写零

What is an excellent fast development framework like?

浅谈企业信息化建设

【点云处理之论文狂读经典版10】—— PointCNN: Convolution On X-Transformed Points

Digital statistics DP acwing 338 Counting problem

状态压缩DP AcWing 91. 最短Hamilton路径

Sword finger offer II 091 Paint the house

What is the difference between sudo apt install and sudo apt -get install?

How to use Jupiter notebook

LeetCode 75. 颜色分类