Detailed explanation of label smoothing, with PyTorch and TensorFlow implementations

2022-06-23 02:52:00 【Goose】

Definition

Label smoothing, like L1 and L2 regularization and dropout, is a regularization method in machine learning. It is usually applied to classification problems, where it keeps the model from making overconfident predictions during training and so mitigates poor generalization.

Background

For classification problems, we usually assume that the target class in a training label vector has probability 1 and every non-target class has probability 0. The conventional one-hot encoded label vector $y$ is

$$y_i = \begin{cases} 1, & i = \text{target} \\ 0, & i \neq \text{target} \end{cases}$$

When training the network, we minimize the loss function $H(y,p) = -\sum_{i=1}^{K} y_i \log p_i$, where $p_i$ is obtained by applying the softmax function to the logits vector $z$ that the model outputs just before the softmax:

$$p_i = \frac{\exp(z_i)}{\sum_{j=1}^{K} \exp(z_j)}$$
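As a minimal sketch of the loss just described, the softmax and the cross-entropy can be written in a few lines of plain Python. The helper names `softmax` and `cross_entropy` and the example logits are chosen here for illustration and are not from the original article.

```python
import math

def softmax(logits):
    """Convert a list of logits z into probabilities p (numerically stable)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(y, p):
    """H(y, p) = -sum_i y_i * log(p_i); terms with y_i == 0 contribute nothing."""
    return -sum(y_i * math.log(p_i) for y_i, p_i in zip(y, p) if y_i > 0)

logits = [2.0, 1.0, 0.1]   # illustrative logits for K = 3 classes
one_hot = [1.0, 0.0, 0.0]  # class 0 is the target
probs = softmax(logits)
loss = cross_entropy(one_hot, probs)  # equals -log(probs[0]) for a one-hot target
```

With a one-hot target only the target term survives, so the loss depends solely on the probability assigned to the correct class.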

During training, one-hot labels encourage the model to push the predicted probability of the target class toward 1 and that of the non-target classes toward 0. Because the predicted probabilities come from a softmax over the logits vector, this drives the target-class logit $z_i$ toward infinity: the model keeps learning in the direction where the gap between the logit of the correct label and the logits of the wrong labels grows without bound. Overly large logit gaps make the model less adaptable and overconfident in its predictions.
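The unbounded growth can be checked numerically. The sketch below assumes a simplified setting in which the target logit exceeds every other logit by the same amount `gap` (a hypothetical parameter introduced for this example); the one-hot cross-entropy is then $\log(1 + (K-1)e^{-\text{gap}})$.

```python
import math

def one_hot_loss(gap, num_classes=3):
    """Cross-entropy for a one-hot target when the target logit exceeds
    every other logit by `gap`: -log p_target = log(1 + (K - 1) * exp(-gap))."""
    return math.log1p((num_classes - 1) * math.exp(-gap))

gaps = [0.0, 2.0, 5.0, 10.0, 20.0]
losses = [one_hot_loss(g) for g in gaps]
# The loss keeps shrinking as the gap grows and never reaches zero,
# so gradient descent keeps pushing the logits further apart.
```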

When the training data does not cover all cases, this causes the network to overfit and generalize poorly. In addition, some labels in real data may be inaccurate, so using cross-entropy against hard labels as the objective is not necessarily optimal.

Mathematical definition

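Label smoothing mixes in a uniform distribution, replacing the traditional one-hot label vector $y$ with the updated label vector $\hat{y}$:

$$\hat{y}_i = (1-\alpha)\, y_i + \frac{\alpha}{K}$$

where $K$ is the total number of classes and $\alpha$ is a small hyperparameter (usually 0.1), that is,

$$\hat{y}_i = \begin{cases} 1-\alpha+\alpha/K, & i = \text{target} \\ \alpha/K, & i \neq \text{target} \end{cases}$$

The code later in this article uses a close variant that assigns $1-\alpha$ to the target class and $\alpha/(K-1)$ to each of the other classes.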

In this way, the smoothed label distribution amounts to adding noise to the true distribution. It keeps the model from becoming overconfident about the correct label and narrows the gap between the output values for the correct and incorrect classes, which reduces overfitting and improves generalization.
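A minimal sketch of the smoothing step, assuming the common convention $\hat{y} = (1-\alpha)\,y + \alpha/K$ (the function name `smooth_labels` and the example numbers are illustrative, not from the original article):

```python
import math

def smooth_labels(target, num_classes, alpha=0.1):
    """Return (1 - alpha) * one_hot(target) + alpha / K as a list."""
    uniform = alpha / num_classes
    return [(1.0 - alpha) + uniform if i == target else uniform
            for i in range(num_classes)]

def cross_entropy(y, p):
    """H(y, p) = -sum_i y_i * log(p_i)."""
    return -sum(y_i * math.log(p_i) for y_i, p_i in zip(y, p) if y_i > 0)

K = 4
y_smooth = smooth_labels(target=2, num_classes=K, alpha=0.1)
# y_smooth is approximately [0.025, 0.025, 0.925, 0.025]

# An extremely confident prediction is penalised under smoothed targets,
# whereas predicting the smoothed distribution itself gives the lowest loss.
overconfident = [1e-6, 1e-6, 1.0 - 3e-6, 1e-6]
loss_overconfident = cross_entropy(y_smooth, overconfident)
loss_matched = cross_entropy(y_smooth, y_smooth)
```

Because the non-target classes now carry a small positive weight, a prediction that sends their probabilities toward 0 is punished, which is what stops the logit gap from growing indefinitely.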

Effect

The NeurIPS 2019 paper When Does Label Smoothing Help? explains experimentally why label smoothing works. It points out that label smoothing makes the cluster of each class more compact, increasing the distance between classes and reducing the distance within a class, which improves generalization. It also improves model calibration (how well the confidence of the model's predictions aligns with their accuracy). In model distillation, however, label smoothing can degrade performance.
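Calibration can be made concrete with a metric. One common choice is the expected calibration error (ECE), which bins predictions by confidence and averages the gap between accuracy and mean confidence in each bin. The sketch below is a plain-Python illustration on made-up predictions; the function name, the bin count and the data are assumptions for this example and are not taken from the article or the paper's code.

```python
def expected_calibration_error(confidences, correct, num_bins=10):
    """ECE: weighted average over confidence bins of |accuracy - mean confidence|."""
    n = len(confidences)
    bins = [[] for _ in range(num_bins)]
    for conf, ok in zip(confidences, correct):
        # Equal-width bins over [0, 1]; conf == 1.0 falls into the last bin.
        idx = min(int(conf * num_bins), num_bins - 1)
        bins[idx].append((conf, ok))
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / n) * abs(accuracy - avg_conf)
    return ece

# Made-up predictions: the model's top-class confidence and whether it was right.
confidences = [0.95, 0.95, 0.95, 0.95, 0.65, 0.65]
correct = [True, True, False, False, True, False]
ece = expected_calibration_error(confidences, correct)
```

A perfectly calibrated model has an ECE of 0; an overconfident one, as in the made-up data here, reports confidences well above its accuracy.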

From the definition of label smoothing, we can see that it encourages the network to choose the correct class while keeping the difference between the correct class and each of the wrong classes the same. With hard targets, by contrast, the differences for the different wrong classes can vary widely. Based on this, the authors conclude that label smoothing encourages the penultimate-layer activations to be close to the template of the correct class and equally distant from the templates of the wrong classes.

The authors designed a visualization scheme to demonstrate this: (1) pick three classes; (2) find an orthonormal basis of the plane that passes through the templates of these three classes; (3) project the penultimate-layer activations onto this plane. They ran four groups of experiments. The first used CIFAR-10/AlexNet (dataset/model) with the three classes “airplane”, “automobile” and “bird”.

In the visualization, after adding label smoothing (the last two plots), each class clusters more tightly and its distance to the other classes is roughly the same. The second experiment used CIFAR-100/ResNet-56 (dataset/model) with the three classes “beaver”, “dolphin” and “otter”, and gave similar results.

The third experiment used ImageNet/Inception-v4 (dataset/model) with the three classes “meerkat”, “tench” and “cleaver”.

Because ImageNet has many fine-grained classes, it can be used to test the relationship between similar classes. For the fourth experiment the authors chose “toy poodle”, “miniature poodle” and “tench”: the first two classes are very similar, and the last, very different class is shown in blue in the figure.

When hard targets are used, the two similar classes lie close to each other. Label smoothing, however, forces each example to be equidistant from the templates of all remaining classes, which leaves the two similar classes farther apart in the last two plots and, to some extent, loses information.
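The three-step visualization scheme described above can be sketched in plain Python. This is only an illustration under stated assumptions: the templates are taken to be the last-layer weight vectors of the three classes, the plane is anchored at the first template, and the 4-dimensional vectors are made up; none of the helper names come from the article or the paper's code.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def normalize(u):
    norm = math.sqrt(dot(u, u))
    return [a / norm for a in u]

def plane_basis(w1, w2, w3):
    """Orthonormal basis (e1, e2) of the plane through three class templates,
    built by Gram-Schmidt on the edge vectors w2 - w1 and w3 - w1."""
    e1 = normalize(sub(w2, w1))
    v = sub(w3, w1)
    proj = dot(v, e1)
    e2 = normalize([a - proj * b for a, b in zip(v, e1)])
    return e1, e2

def project(activation, origin, e1, e2):
    """2-D coordinates of a penultimate-layer activation in the plane."""
    d = sub(activation, origin)
    return dot(d, e1), dot(d, e2)

# Hypothetical 4-D templates (rows of the last layer's weights) for three classes.
w1, w2, w3 = [1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]
e1, e2 = plane_basis(w1, w2, w3)
point = project([0.9, 0.1, 0.0, 0.3], w1, e1, e2)  # one illustrative activation
```

Plotting the projected points for many examples of the three classes, colored by class, gives the kind of scatter plot the experiments describe.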

Code implementation

PyTorch code

    import torch
    import torch.nn as nn

    class LabelSmoothing(nn.Module):
        def __init__(self, size, smoothing=0.0):
            super().__init__()
            # Sum the KL divergence over all elements
            # (reduction="sum" replaces the deprecated size_average=False).
            self.criterion = nn.KLDivLoss(reduction="sum")
            self.confidence = 1.0 - smoothing  # value for the target class ("i = y" case)
            self.smoothing = smoothing
            self.size = size
            self.true_dist = None

        def forward(self, x, target):
            """
            x: input of shape (N, M), N samples and M classes,
               holding the log-probability log P of each class
            target: class indices of shape (N,)
            """
            assert x.size(1) == self.size
            # Fill every entry with the value for the non-target classes ("otherwise" case).
            true_dist = torch.full_like(x, self.smoothing / (self.size - 1))
            # Along dim 1, write `confidence` at the column given by each target index.
            true_dist.scatter_(1, target.unsqueeze(1), self.confidence)
            self.true_dist = true_dist
            return self.criterion(x, true_dist)

    # num_labels is the number of classes, defined elsewhere.
    loss_function = LabelSmoothing(num_labels, 0.1)

TensorFlow code

    import tensorflow as tf

    def smoothing_cross_entropy(logits, labels, vocab_size, confidence):
        with tf.name_scope("smoothing_cross_entropy"):
            num_others = tf.cast(vocab_size - 1, tf.float32)
            # Low confidence is given to all non-true labels, uniformly.
            low_confidence = (1.0 - confidence) / num_others
            # Normalizing constant is the best cross-entropy value with soft targets.
            # We subtract it just for readability, makes no difference on learning.
            normalizing = -(
                confidence * tf.math.log(confidence) +
                num_others * low_confidence * tf.math.log(low_confidence + 1e-20))
            soft_targets = tf.one_hot(
                tf.cast(labels, tf.int32),
                depth=vocab_size,
                on_value=confidence,
                off_value=low_confidence)
            # In TF 2.x this function behaves like the TF 1.x *_v2 variant.
            xentropy = tf.nn.softmax_cross_entropy_with_logits(
                labels=soft_targets, logits=logits)
            return xentropy - normalizing
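The two listings optimize the same quantity up to a constant. As a short worked derivation (the notation is introduced here for illustration: $\hat{y}$ is the smoothed target, $p$ the softmax output, $c$ the value of `confidence` and $K$ the number of classes):

```latex
\begin{aligned}
D_{\mathrm{KL}}(\hat{y}\,\|\,p)
  &= \sum_{i=1}^{K} \hat{y}_i \log \hat{y}_i - \sum_{i=1}^{K} \hat{y}_i \log p_i
   = H(\hat{y}, p) - H(\hat{y}), \\
H(\hat{y})
  &= -\Bigl( c \log c + (K-1)\,\tfrac{1-c}{K-1} \log \tfrac{1-c}{K-1} \Bigr).
\end{aligned}
```

The second line is the `normalizing` term of the TensorFlow function (ignoring its small `1e-20` guard), so `xentropy - normalizing` is the KL divergence that `nn.KLDivLoss` computes in the PyTorch class, where it is additionally summed over the batch. Since $H(\hat{y})$ does not depend on the model, the gradients are the same as for plain cross-entropy with soft targets.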

边栏推荐

- Push RTMP stream using ffmpeg

- What is ISBN code and how to make it

- 8. greed

- Analysis of ThreadLocal

- 2022-02-05: the k-th decimal number of dictionary order. Given integers n and K, find 1

- Related concepts of TTF, TOF, woff and woff2

- Learning notes of recommendation system (1) - Collaborative Filtering - Theory

- Troubleshooting and optimization of easynvr version 5.0 Video Square snapshot not displayed

- Windows system poisoning, SQL Server database file recovery rescue and OA program file recovery

- Soft exam information system project manager_ Information system comprehensive testing and management - Senior Information System Project Manager of soft test 027

猜你喜欢

Spark broadcast variables and accumulators (cases attached)

WebService details

5. concept of ruler method

Soft exam information system project manager_ Contract Law_ Copyright_ Implementation Regulations - Senior Information System Project Manager of soft exam 030

What is sitelock? What is the function?

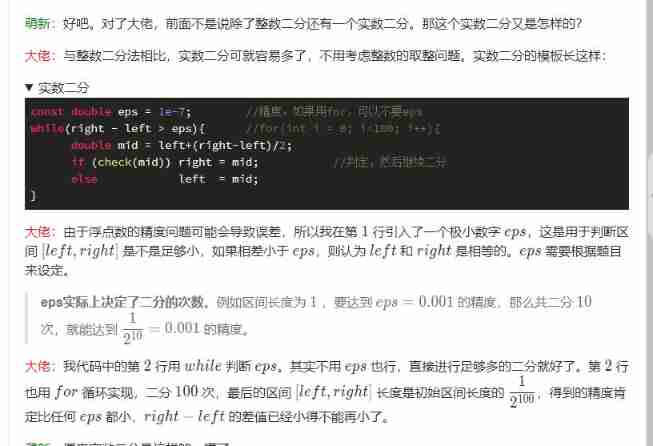

6. template for integer and real number dichotomy

Understand GB, gbdt and xgboost step by step

Microservice Optimization: internal communication of microservices using grpc

Quick sorting C language code + auxiliary diagram + Notes

How to store, manage and view family photos in an orderly manner?

随机推荐

Salesforce fileUpload (I) how to configure the file upload function

Applet control version update best practices

Deep scan log4j2 vulnerability using codesec code audit platform

Push RTMP stream using ffmpeg

Docker installs mysql5.7 and mounts the configuration file

PNAs: power spectrum shows obvious bold resting state time process in white matter

Analysis of ThreadLocal

The difference between script in head and body

Vulnhub DC-5

How to use pictures in Excel in PPT template

[data preparation and Feature Engineering] data cleaning

Schedule tasks to periodically restart remote services or restart machines

Im web demo invite end hang up error avoidance

Extract NTDs with volume shadow copy service dit

Precision loss problem

Operate attribute chestnut through reflection

Pyqt5 installation and use

2022-01-30: minimum good base. For a given integer n, if K (k) of n

What is ISBN code and how to make it

Reading redis source code (III) initialization and event cycle