当前位置:网站首页>One article combs multi task learning (mmoe/ple/dupn/essm, etc.)

One article combs multi task learning (mmoe/ple/dupn/essm, etc.)

2022-06-24 16:40:00 【Alchemy notes】

When you're making models , Often focus on the optimization of a specific index , Such as click through rate model , Just optimize AUC, Do a binary model , Just optimize f-score. However , This ignores the information gain and effect improvement that the model can bring by learning other tasks . By sharing vector representations in different tasks , We can greatly improve the generalization effect of the model in various tasks . This method is what we're going to talk about today - Multi task learning (MTL).

So how to determine whether it's multi task learning ? You don't need to look at the whole structure of the model , Just look at loss Function , If loss Contains many items , Each is a different goal , This model is multi task learning . Sometimes , Although your model is only an optimization goal , It can also be done through multitasking , Enhance the generalization effect of the model . For example, click through rate model , We can do this by adding transformation samples , Building AIDS loss( Estimated conversion ), So as to improve the generalization of the click through rate model .

Why multitasking is effective ? for instance , A model has learned to distinguish colors , If this model is directly applied to the classification task of vegetables and meat ? It's easy to learn that the green ones are vegetables , The other, more likely, is meat . Is regularization multitasking ? Regularized, optimized loss Not only does it have its own return / Classification produces loss, also l1/l2 Produced loss, Because we think " It's right and just fitting " The parameters of the model should be sparse , And it's not easy to be too big , To put this assumption into the model to learn , So we have the regularization term , Nature is also an extra task .

MTL Two methods

The first is hard parameter sharing, As shown in the figure below :

Relatively simple , The first few floors dnn Share... For each task , Then separate out the different tasks layers. This method effectively reduces the risk of over fitting : The more tasks the model learns at the same time , In the sharing layer, the model needs to learn a common embedded expression to make every task perform better , So as to reduce the risk of over fitting .

The second is soft parameter sharing, As shown in the figure below :

In this way , Each task has its own model , It has its own parameters , But there are restrictions on the parameters between different models , The parameters of different models must be similar , There will be a distance Describe the similarity between parameters , Will be added to the model learning as an additional task , Similar to the regularization term .

Multitasking can improve , Mainly due to the following reasons :

- Implicit data enhancement : Each task has its own sample , With multitasking learning , The sample size of the model will increase a lot . And the data is noisy , If you study alone A Mission , The model will put A The noise of data is also learned , If it's multitasking , Because the model requires B The task also needs to learn well , It's going to ignore A The noise of the mission , Empathy , Modeling A I'll ignore it when I'm in the middle of it B The noise of the mission , So multi task learning can learn a more accurate embedded expression .

- Focus on : If the task has a lot of data noise , Very little data and very high dimensions , The model can't distinguish the related features from the non related features . Multi task learning can help models focus on useful features , Because different tasks will reflect the correlation between characteristics and tasks .

- Feature information theft : There are some features in the mission B It's easy to learn in English , On mission A It's hard to learn in English , The main reason is the mission A The interaction with these features is more complex , And for the task A For example, other features may hinder the learning of some features , So through multitasking learning , The model can learn every important feature efficiently .

- Expression bias :MTL Make the model learn the vector representation that all tasks prefer . This will also help to extend the model to new tasks in the future , Because it's assumed that space performs well for enough training tasks , Also good at learning new tasks .

- Regularization : For a task , The learning of other tasks will regularize the task .

Multi task deep learning model

Deep Relationship Networks:

From the chart , We can see that the first few convolution layers are pre trained , The latter layers share parameters , Used to learn the connections between different tasks , Finally, independent dnn Modules are used to learn about tasks .

Fully-Adaptive Feature Sharing:

From the other extreme , Here's a bottom-up approach , Start with a simple network , In the training process, the network is greedily and dynamically expanded by using the grouping criteria of similar tasks . Greedy methods may not be able to find a globally optimal model , And each branch is only assigned to one task, which makes the model unable to learn the complex interaction between tasks .

cross-stitch Networks:

As mentioned above soft parameter sharing, The model is two completely separate model structures , The structure uses cross-stitch Unit to let the separated model learn the relationship between different tasks , As shown in the figure below , By means of pooling After the layer and full connection layer are added respectively cross-stitch Linear fusion of the feature expression learned earlier , And then output to the following convolution / Fully connected module .

A Joint Many-Task Model:

As shown in the figure below , The predefined hierarchy is made up of NLP Task composition , Low level structures are learned through word level tasks , So do the analysis , Block labeling, etc . The structure of the intermediate level is learned through the task of parsing level , Such as syntactic dependency . High level structure is learned through semantic level tasks .

weighting losses with uncertainty:

Considering the uncertainty of correlation between different tasks , Multi task loss function based on Gaussian likelihood maximization , Adjust the relative weight of each task in the cost function . The structure is shown in the following figure , Regression of pixel depth 、 Semantic and instance segmentation .

sluice networks:

The following model summarizes the deep learning based MTL Method , Such as hard parameter sharing and cross-stitch The Internet 、 Block sparse regularization method , And recently created the task hierarchy NLP Method . The model can learn which layers and subspaces should be shared , And at which layers the network learns the best representation of the input sequence .

ESSM:

In the e-commerce scene , Transformation refers to the process from Click to purchase . stay CVR Estimate when , We often have two problems : Sample bias and data coefficients . Sample bias refers to the difference between training and test samples , Take e-commerce for example , The model trains with click data , And the estimate is the entire sample space . The problem of data sparsity is even more serious , There are very few samples per click , Even less transformation , So we can learn from the idea of multi task learning , Introducing assisted learning tasks , fitting pCTR and pCTCVR(pCTCVR = pCTR * pCVR), As shown in the figure below :

- about pCTR Come on , Exposure events with click behavior can be taken as positive samples , Exposure events without click behavior are taken as negative samples

- about pCTCVR Come on , We can take the exposure events with both click behavior and purchase behavior as positive samples , Others as negative samples

- about pCVR Come on , Only the gradients in the sample with no exposure click can be returned to main task In the network

The other two subnetworks are embedding Layers are shared , because CTR The training sample size of the task is much larger than CVR The training sample size of the task , So it can alleviate the sparsity problem of training data .

DUPN:

The model is divided into behavior sequence layer 、Embedding layer 、LSTM layer 、Attention layer 、 Downstream multitasking layer (CTR、LTR、 Fashionistas focus on Forecasting 、 The strength and quantity of users' purchase ). As shown in the figure below

MMOE:

As shown in the figure below , Model (a) Most common , Shared the underlying network , It is connected with the full connection layer of different tasks . Model (b) Different experts can extract different features from the same input , By a Gate( similar ) attention structure , The features extracted by experts are filtered out to each task The most relevant feature , Finally, the full connection layer of different tasks is connected .MMOE The idea is for different tasks , Need information extracted by different experts , So each task needs an independent gate.

PLE:

Even if passed MMoE In this way, the negative transfer phenomenon is alleviated , The seesaw phenomenon is still widespread ( Seesaw phenomenon refers to when the correlation between multiple tasks is not strong , Information sharing will affect the effect of the model , There will be a task that becomes more generalized , Another weakening phenomenon ).PLE The essence is MMOE Improved version , There are some expert It's mission specific , There are some expert Is Shared , Here's the picture CGC framework , For the task A for , adopt A Of gate hold A Of expert And shared expert To merge , To learn A.

Final PLE The structure is as follows , Integrated with customized expert and MMOE, Stack multiple layers CGC framework , As shown below :

边栏推荐

- A memory leak caused by timeout scheduling of context and goroutine implementation

- Tencent on the other hand, I was puzzled by the "horse race" problem

- Go deep into the implementation principle of go language defer

- Saying "Dharma" Today: the little "secret" of paramter and localparam

- Yuanqi forest started from 0 sugar and fell at 0 sugar

- 山金期货安全么?期货开户都是哪些流程?期货手续费怎么降低?

- What is thermal data detection?

- [idea] dynamic planning (DP)

- How to perform concurrent stress testing on RTSP video streams distributed by audio and video streaming servers?

- What is the difference between a network card and a port

猜你喜欢

Cognition and difference of service number, subscription number, applet and enterprise number (enterprise wechat)

A survey on model compression for natural language processing (NLP model compression overview)

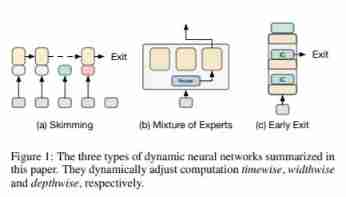

A survey on dynamic neural networks for natural language processing, University of California

Problems encountered in the work of product manager

A survey of training on graphs: taxonomy, methods, and Applications

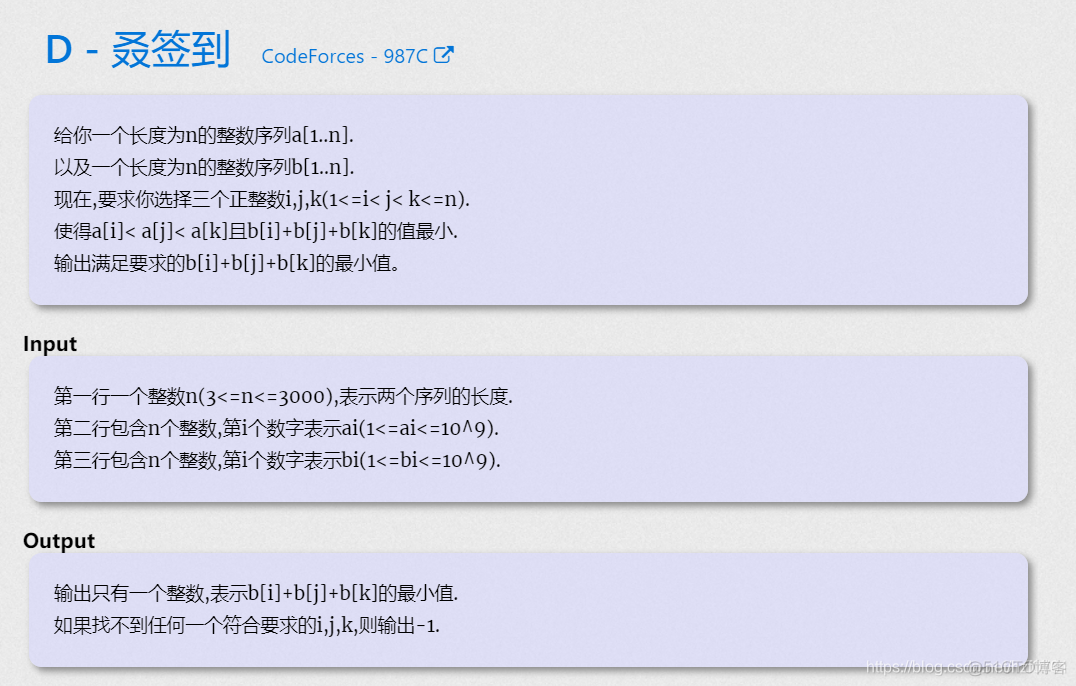

C. Three displays codeforces round 485 (Div. 2)

Applet - use of template

Ui- first lesson

MySQL Advanced Series: Locks - Locks in InnoDB

There are potential safety hazards Land Rover recalls some hybrid vehicles

随机推荐

How does easydss, an online classroom / online medical live on demand platform, separate audio and video data?

Cloud + community [play with Tencent cloud] video solicitation activity winners announced

API documents are simple and beautiful. It only needs three steps to open

What is the difference between get and post? After reading it, you won't be confused and forced, and you won't have to fight with your friends anymore

Introduction of thread pool and sharing of practice cases

AI video structured intelligent security platform easycvr intelligent security monitoring scheme for protecting community residents

Teach you to write a classic dodge game

Kubernetes characteristic research: sidecar containers

Page scrolling effect library, a little skinny

AI video structured intelligent security platform easycvr realizes intelligent security monitoring scheme for procuratorate building

FPGA project development: experience sharing of lmk04821 chip project development based on jesd204b (I)

Activeindex selection and redirection in the menu bar on the right of easycvs

D. Solve the maze (thinking +bfs) codeforces round 648 (Div. 2)

Comparison of jmeter/k6/locust pressure measuring tools (not completed yet)

Pageadmin CMS solution for redundant attachments in website construction

Week7 weekly report

An error is reported during SVN uploading -svn sqlite[s13]

Istio FAQ: virtualservice route matching sequence

Snowflake algorithm implemented in go language

炒期货在哪里开户最正规安全?怎么期货开户?