
Build the first neural network with PyTorch and optimize it

2022-06-28 08:36:00 【Sol-itude】

I've been learning PyTorch. This time I followed a tutorial to build a neural network for the classic CIFAR10 dataset. Let's look at the principle first.

The input has 3 channels of 32×32 pixels. It passes through 3 convolutions, 3 max-pooling layers, 1 flatten layer and two linear layers, giving ten outputs (one per CIFAR10 class).
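To see where the `1024` in `Linear(1024,64)` comes from, here is a small sketch that applies the standard PyTorch output-size formulas for `Conv2d` (dilation 1) and `MaxPool2d` (default stride equal to the kernel size, no padding). The helpers `conv_out` and `pool_out` are hypothetical names for illustration, not part of PyTorch.

```python
def conv_out(size, kernel, padding=0, stride=1):
    """Spatial output size of a Conv2d layer with dilation 1."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    """Spatial output size of MaxPool2d when stride == kernel (the default) and padding is 0."""
    return (size - kernel) // kernel + 1


size, channels = 32, 3                      # CIFAR10 input: 3 x 32 x 32
for out_channels in (32, 32, 64):           # conv1, conv2, conv3
    size = conv_out(size, kernel=5, padding=2)  # 5x5 kernel with padding 2 keeps the size
    size = pool_out(size, kernel=2)             # 2x2 max pooling halves it
    channels = out_channels
    print(f"{channels} x {size} x {size}")

flat = channels * size * size               # what Flatten() hands to the first Linear layer
print("flatten ->", flat)
```

Each 5×5 convolution with `padding=2` preserves the spatial size, so only the three poolings shrink it: 32 → 16 → 8 → 4, and 64 × 4 × 4 = 1024.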

The code is as follows:

```python
from torch import nn
from torch.nn import Conv2d, MaxPool2d, Flatten, Linear


class NetWork(nn.Module):
    def __init__(self):
        super(NetWork, self).__init__()
        # Shapes in the comments are (channels, height, width) for one image
        self.conv1 = Conv2d(3, 32, 5, padding=2)    # 3x32x32 -> 32x32x32
        self.maxpool1 = MaxPool2d(2)                # -> 32x16x16
        self.conv2 = Conv2d(32, 32, 5, padding=2)   # -> 32x16x16
        self.maxpool2 = MaxPool2d(2)                # -> 32x8x8
        self.conv3 = Conv2d(32, 64, 5, padding=2)   # -> 64x8x8
        self.maxpool3 = MaxPool2d(2)                # -> 64x4x4
        self.flatten = Flatten()                    # -> 1024
        self.linear1 = Linear(1024, 64)             # 1024 = 64*4*4
        self.linear2 = Linear(64, 10)               # one output per CIFAR10 class

    def forward(self, x):
        x = self.conv1(x)
        x = self.maxpool1(x)
        x = self.conv2(x)
        x = self.maxpool2(x)
        x = self.conv3(x)
        x = self.maxpool3(x)
        x = self.flatten(x)
        x = self.linear1(x)
        x = self.linear2(x)
        return x


network = NetWork()
print(network)
```

We can also inspect the network in TensorBoard (remember the imports):

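```python
import torch
from torch.utils.tensorboard import SummaryWriter

# Dummy batch: 64 images, 3 channels, 32x32 pixels
x = torch.ones((64, 3, 32, 32))
output = network(x)  # shape (64, 10)

writer = SummaryWriter("logs_seq")
writer.add_graph(network, x)  # trace the model and write its graph to logs_seq
writer.close()
```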

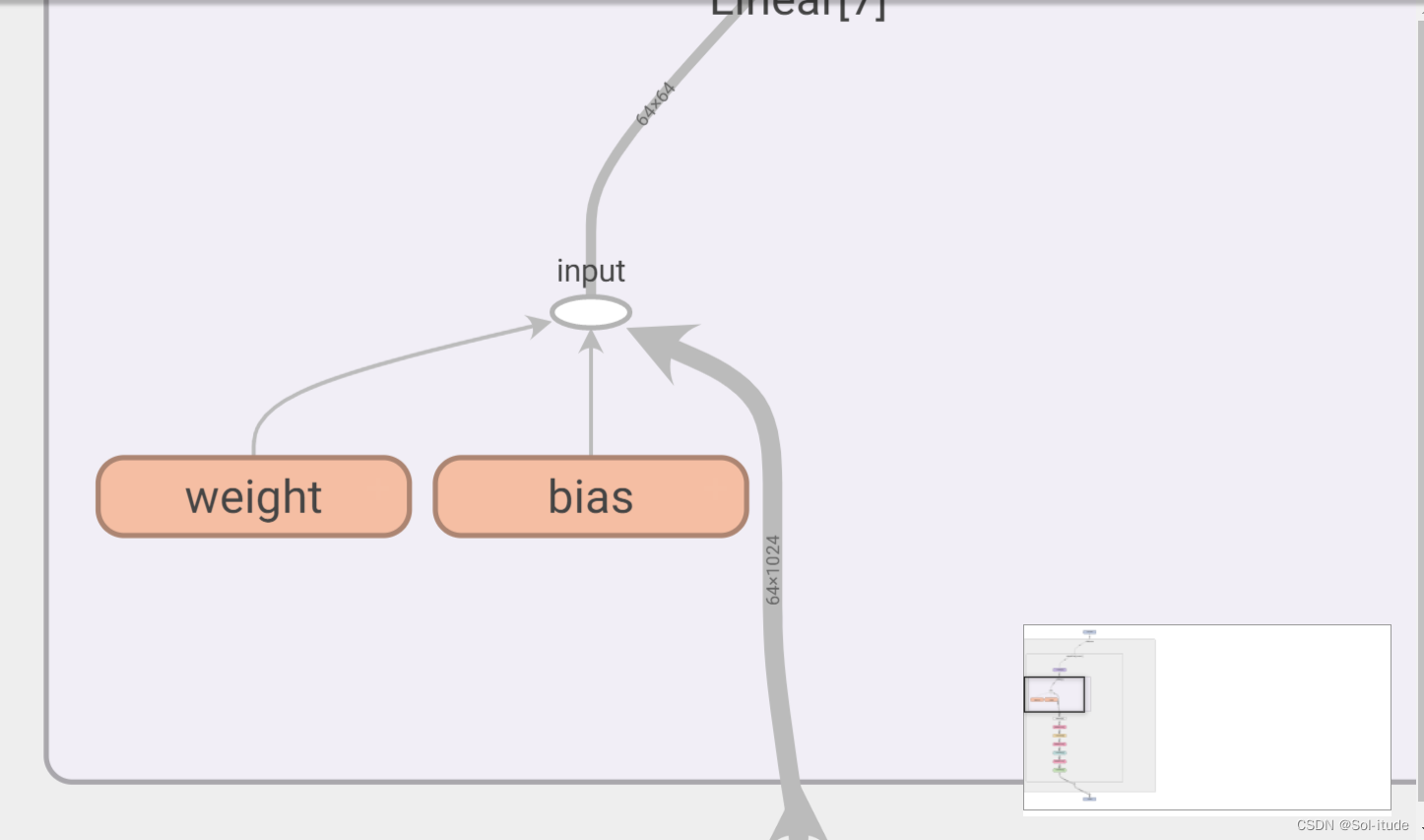

This is what it looks like in TensorBoard (start it with `tensorboard --logdir=logs_seq` and open the address it prints).

Open the NetWork node to expand it; you can zoom in to see the individual layers.

The network's outputs differ from the true labels. This error is measured by a loss function (here cross-entropy), and we use gradient descent to reduce it.
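A minimal pure-Python sketch of the idea, for a single toy sample: it computes the per-sample cross-entropy `-log(softmax(logits)[target])` (what `nn.CrossEntropyLoss` computes for one image), uses its gradient `softmax(logits) - one_hot(target)`, and repeatedly steps against that gradient. As a simplifying assumption, the ten logits themselves are treated as the parameters; in the real network `backward()` pushes the gradient further back into the convolution and linear weights. The values of `target`, `lr` and the step count are arbitrary.

```python
import math


def softmax(logits):
    m = max(logits)                              # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, target):
    """Per-sample cross-entropy: -log of the probability given to the true class."""
    return -math.log(softmax(logits)[target])


def grad_wrt_logits(logits, target):
    """Gradient of the cross-entropy w.r.t. the logits: softmax - one_hot(target)."""
    probs = softmax(logits)
    return [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]


logits = [0.0] * 10    # ten classes, all equally likely at the start
target = 3             # true class of this toy sample
lr = 0.5               # learning rate

losses = []
for _ in range(5):
    losses.append(cross_entropy(logits, target))
    g = grad_wrt_logits(logits, target)
    # Gradient descent step: move every parameter against its gradient
    logits = [z - lr * gz for z, gz in zip(logits, g)]

print([round(value, 4) for value in losses])
```

The first loss is ln 10 ≈ 2.3026 (a uniform guess over ten classes), and each step lowers it, which is the same behaviour the training loop below shows at the scale of the whole dataset.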

The code is as follows:

```python
import torch
import torchvision
from torch import nn
from torch.nn import Sequential, Conv2d, MaxPool2d, Flatten, Linear
from torch.utils.data import DataLoader

# CIFAR10 test split (10,000 images), converted to tensors
dataset = torchvision.datasets.CIFAR10("./dataset2", train=False,
                                       transform=torchvision.transforms.ToTensor(),
                                       download=True)
dataloader = DataLoader(dataset, batch_size=1)


class NetWork(nn.Module):
    def __init__(self):
        super(NetWork, self).__init__()
        # The same layers as in the first version, chained with Sequential
        # so that forward() no longer has to call each layer by hand
        self.model1 = Sequential(
            Conv2d(3, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 32, 5, padding=2),
            MaxPool2d(2),
            Conv2d(32, 64, 5, padding=2),
            MaxPool2d(2),
            Flatten(),
            Linear(1024, 64),
            Linear(64, 10)
        )

    def forward(self, x):
        x = self.model1(x)
        return x


loss = nn.CrossEntropyLoss()
network = NetWork()
optim = torch.optim.SGD(network.parameters(), lr=0.01)  # stochastic gradient descent as the optimizer

for epoch in range(20):  # 20 epochs
    running_loss = 0.0
    for data in dataloader:
        imgs, targets = data
        outputs = network(imgs)
        result_loss = loss(outputs, targets)
        optim.zero_grad()        # clear the gradients left over from the previous step
        result_loss.backward()   # compute new gradients
        optim.step()             # update the weights
        # Adding the tensor keeps its autograd history (hence grad_fn in the output);
        # result_loss.item() would accumulate a plain float instead
        running_loss = running_loss + result_loss
    print(running_loss)
```
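The `optim.zero_grad()` call is needed because PyTorch accumulates gradients: each `backward()` adds into a parameter's `.grad` instead of overwriting it. A tiny sketch with a single illustrative scalar parameter `w` shows the effect:

```python
import torch

w = torch.tensor(2.0, requires_grad=True)

loss = 3 * w            # d(loss)/dw = 3
loss.backward()
first = w.grad.item()

loss = 3 * w
loss.backward()         # no reset: the new gradient is added to the old one
second = w.grad.item()

w.grad.zero_()          # reset the gradient by hand
loss = 3 * w
loss.backward()
third = w.grad.item()

print(first, second, third)
```

Without the reset, the second gradient is 6 instead of 3, so every optimizer step would use the sum of all previous gradients. In recent PyTorch versions `optimizer.zero_grad()` sets each `.grad` to `None` by default rather than filling it with zeros; either way the next `backward()` starts from a clean gradient.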

My computer's GPU is an RTX 2060, which is fairly old. Three epochs took about 1 minute, and it was so slow that I stopped the run after those three.
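As written, the training loop never moves the model or the data to the GPU (there is no `.to(device)` or `.cuda()` call), so PyTorch runs it on the CPU. Below is a minimal sketch of the usual device-handling pattern, under some assumptions of my own: random tensors stand in for CIFAR10 so it runs without a download, the batch size is raised to 64 (with `batch_size=1` every epoch is 10,000 tiny steps), and the loss is accumulated with `.item()`. The names `device`, `loss_fn`, `optimizer` and `epoch_losses` are illustrative.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

# Use the GPU when PyTorch can see one, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Random stand-in for CIFAR10 (assumption: only the shapes matter here)
images = torch.randn(256, 3, 32, 32)
labels = torch.randint(0, 10, (256,))
dataloader = DataLoader(TensorDataset(images, labels), batch_size=64)

model = nn.Sequential(
    nn.Conv2d(3, 32, 5, padding=2), nn.MaxPool2d(2),
    nn.Conv2d(32, 32, 5, padding=2), nn.MaxPool2d(2),
    nn.Conv2d(32, 64, 5, padding=2), nn.MaxPool2d(2),
    nn.Flatten(), nn.Linear(1024, 64), nn.Linear(64, 10),
).to(device)  # move all parameters to the chosen device once

loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

epoch_losses = []
for epoch in range(3):
    running_loss = 0.0
    for imgs, targets in dataloader:
        # Every batch has to live on the same device as the model
        imgs, targets = imgs.to(device), targets.to(device)
        loss = loss_fn(model(imgs), targets)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        running_loss += loss.item()  # plain float, no autograd history kept
    epoch_losses.append(running_loss)
    print(f"epoch {epoch}: {running_loss:.4f}")
```

Note that with `batch_size=64` each printed value sums one mean loss per batch, so it is not directly comparable to the per-image totals printed by the original loop.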

Output:

```text
tensor(18733.7539, grad_fn=<AddBackward0>)
tensor(16142.7451, grad_fn=<AddBackward0>)
tensor(15420.9199, grad_fn=<AddBackward0>)
```

The loss gets smaller with every epoch. For real use, though, 20 epochs are too few; when my new computer arrived, I ran 100 epochs.
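Because `batch_size=1` and the CIFAR10 test split has 10,000 images, each printed total is a sum of 10,000 per-image cross-entropy losses. Dividing by 10,000 gives the average loss per image, which can be compared with ln 10 ≈ 2.303, the loss of a model that assigns equal probability to all ten classes. A small worked calculation using only the three totals reported above:

```python
import math

# Epoch totals from the output above (each is a sum of 10,000 per-image losses)
epoch_totals = [18733.7539, 16142.7451, 15420.9199]
num_images = 10_000          # size of the CIFAR10 test split used for training here

per_image = [total / num_images for total in epoch_totals]
chance = math.log(10)        # cross-entropy of a uniform guess over 10 classes

for epoch, value in enumerate(per_image, start=1):
    print(f"epoch {epoch}: mean loss {value:.4f} (uniform guess: {chance:.4f})")
```

All three averages (about 1.87, 1.61 and 1.54) are below ln 10, so the network already does better than a uniform guess after the first epoch.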

边栏推荐

- App automated testing appium Tutorial Part 1 - advanced supplementary content

- Oracle RAC -- understanding of VIP

- Installing MySQL under Linux

- After installing NRM, the internal/validators js:124 throw new ERR_ INVALID_ ARG_ TYPE(name, ‘string‘, value)

- Why MySQL cannot insert Chinese data in CMD

- 利尔达低代码数据大屏,铲平数据应用开发门槛

- Superimposed ladder diagram and line diagram and merged line diagram and needle diagram

- AWS builds a virtual infrastructure including servers and networks (2)

- DELL R730服务器开机报错:[XXX] usb 1-1-port4: disabled by hub (EMI?), re-enabling...

- Large current and frequency range that can be measured by Rogowski coil

猜你喜欢

与普通探头相比,差分探头有哪些优点

Build an integrated kubernetes in Fedora

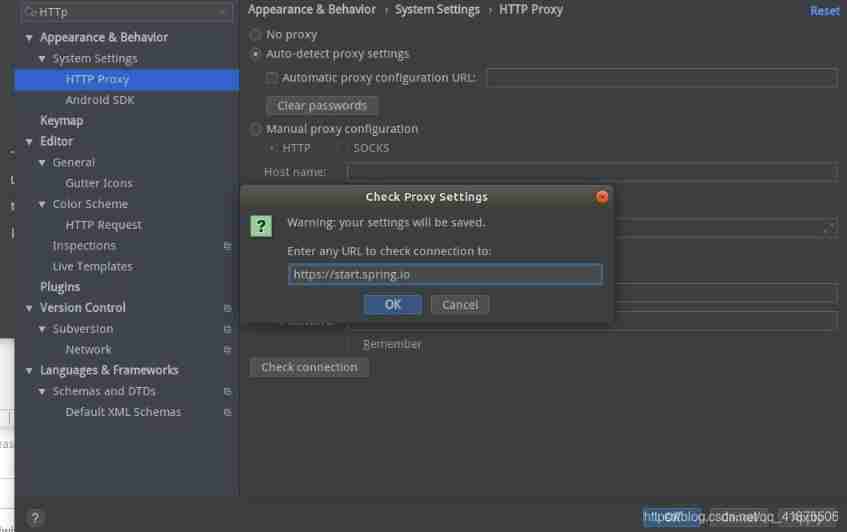

Idea related issues

Webrtc advantages and module splitting

Introduction, compilation, installation and deployment of Doris learning notes

Operating principle of Rogowski coil

Installing mysql5.7 under Windows

![[learning notes] matroid](/img/e3/4e003f5d89752306ea901c70230deb.png)

[learning notes] matroid

![Dell r730 server startup error: [xxx] USB 1-1-port4: disabled by hub (EMI?), re-enabling...](/img/90/425965ca4b3df3656ce2a5f4230c4b.jpg)

Dell r730 server startup error: [xxx] USB 1-1-port4: disabled by hub (EMI?), re-enabling...

Discussion on the application of GIS 3D system in mining industry

随机推荐

Build an integrated kubernetes in Fedora

B_ QuRT_ User_ Guide(26)

After installing NRM, the internal/validators js:124 throw new ERR_ INVALID_ ARG_ TYPE(name, ‘string‘, value)

如何抑制SiC MOSFET Crosstalk(串擾)?

[introduction to SQL in 10 days] day5+6 consolidated table

PLSQL installation under Windows

Trailing Zeroes (II)

抖音服务器带宽有多大,才能供上亿人同时刷?

yaml json

A - deep sea exploration

Installing MySQL under Linux

What are the advantages of a differential probe over a conventional probe

RAC enable archive log

ffmpeg推流报错Failed to update header with correct duration.

Understanding of CUDA, cudnn and tensorrt

On the solution of insufficient swap partition

Ffmpeg (I) AV_ register_ all()

webrtc优势与模块拆分

Super Jumping! Jumping! Jumping!

Not so Mobile