Loss functions

2022-06-28 08:23:00 【Beiluo sect XY】

This post collects the loss functions I have come across. For more losses and their implementations, see also the loss module of the mmdet project.

Cross entropy

Cross entropy is the standard loss for multi-class classification. It is the mean of -y*log(P) over the samples; when the predicted probability P of the correct class is 1, the loss is 0. In BERT's MLM pre-training task, the ignore_index argument is used so that the loss is computed only at some positions (the roughly 15% of positions that are masked). In the actual calculation the label is converted to one-hot, so at positions where y=0 the term -y*log(P) is 0 and does not contribute to the loss. See the example highlighted in red in the following post: Cross entropy loss and binary cross entropy loss _ Plane train Barrett's blog - CSDN Blog
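To make the effect of ignore_index concrete, here is a minimal sketch in plain Python (no PyTorch needed). The helper names log_softmax and cross_entropy are my own, and the value -100 is used as the ignored label only for illustration. The sketch averages over the positions that are not ignored, which is how the mean reduction behaves when some targets are skipped.

```python
import math

def log_softmax(row):
    """Log-softmax of one row of scores, shifted by the max for stability."""
    m = max(row)
    log_sum = m + math.log(sum(math.exp(v - m) for v in row))
    return [v - log_sum for v in row]

def cross_entropy(logits, targets, ignore_index=-100):
    """Mean of -log P(correct class) over positions whose label is not ignore_index."""
    losses = [-log_softmax(row)[t]
              for row, t in zip(logits, targets)
              if t != ignore_index]
    return sum(losses) / len(losses)

logits = [[0.2, 0.3, 0.4],
          [-0.02, -0.13, 0.2],
          [0.5, -0.3, 0.73]]

full = cross_entropy(logits, [0, 2, 1])
masked = cross_entropy(logits, [0, -100, 1])  # the middle position is skipped
print(round(full, 4), round(masked, 4))
```

The first value uses all three positions; the second divides by 2 instead of 3 because one position is ignored.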

The calculation proceeds in three steps: softmax, then log, then NLLLoss.

softmax outputs values between 0 and 1.

log maps them to the range from negative infinity to 0; softmax and log can be combined into a single F.log_softmax operation.

NLLLoss picks the log output at the position of the correct class, averages over the samples, and negates the result, so its output lies between 0 and positive infinity.
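One practical reason to prefer the combined operation is numerical stability. The following plain-Python sketch compares a two-step log(softmax(x)) with the log-sum-exp form. The function names are hypothetical, and the sketch does not claim to reproduce PyTorch's internal implementation.

```python
import math

def naive_log_softmax(row):
    """log(softmax(row)) computed in two separate steps."""
    exps = [math.exp(v) for v in row]          # overflows for large scores
    total = sum(exps)
    return [math.log(e / total) for e in exps]

def stable_log_softmax(row):
    """The same quantity via the log-sum-exp trick: subtract the row max first."""
    m = max(row)
    log_sum = m + math.log(sum(math.exp(v - m) for v in row))
    return [v - log_sum for v in row]

small = [0.2, 0.3, 0.4]
print(naive_log_softmax(small))
print(stable_log_softmax(small))    # same values as the naive version

large = [1000.0, 1001.0, 1002.0]
try:
    naive_log_softmax(large)
except OverflowError as err:
    print("naive version failed:", err)
print(stable_log_softmax(large))    # still finite
```

For small scores both versions agree; for large scores only the shifted version returns a result.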

There are several equivalent ways to compute it. Every variant in the example below gives loss = tensor(1.3077).

import torch
import torch.nn as nn
import torch.nn.functional as F

# input = torch.randn(3,3)
# print(input)
input = torch.tensor([[0.2, 0.3, 0.4],
                      [-0.02, -0.13, 0.2],
                      [0.5, -0.3, 0.73]])
target = torch.tensor([0, 2, 1])
target1 = torch.FloatTensor([[1, 0, 0],
                             [0, 0, 1],
                             [0, 1, 0]])

##############################################################
"""
softmax + only the probability at the correct class position is used
nn.Softmax-torch.log-nn.NLLLoss
=F.log_softmax-nn.NLLLoss
=nn.CrossEntropyLoss
=F.cross_entropy
"""
# 1. softmax - log - nllloss
softmax = nn.Softmax(dim=1)  # softmax along each row (over the classes)
nllloss = nn.NLLLoss()
softmax_log = torch.log(softmax(input))
softmax_log2 = F.log_softmax(input, dim=1)
"""
print(softmax_log)
tensor([[-1.2019, -1.1019, -1.0019],
        [-1.1448, -1.2548, -0.9248],
        [-0.9962, -1.7962, -0.7662]])
"""
loss1 = nllloss(softmax_log, target)
print(loss1)
loss2 = nllloss(softmax_log2, target)
print(loss2)

# 2. nllloss = pick the value at each target position, average, then negate
loss3 = -(softmax_log[0][target[0]] +
          softmax_log[1][target[1]] +
          softmax_log[2][target[2]]) / 3
print(loss3)

# 3. Call CrossEntropyLoss / cross_entropy directly
crossEntropyLoss = nn.CrossEntropyLoss()
loss4 = crossEntropyLoss(input, target)
print(loss4)
loss5 = F.cross_entropy(input, target)
print(loss5)

Binary cross entropy (two-class cross entropy)

It is suited to binary classification and multi-label classification tasks. Cross entropy applies softmax and then uses only the value at the correct position to compute the loss. Binary cross entropy treats the classes as independent: it applies sigmoid and then uses the values at all positions, averaging -(y*log(P) + (1-y)*log(1-P)). When y is 0 or 1, one of the two added terms is always 0.
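The formula can be evaluated either on the sigmoid output or directly on the raw score. The sketch below, in plain Python with hypothetical helper names, checks that the two forms agree and shows why a "with logits" variant is attractive: the raw-score form stays finite even when sigmoid saturates. It is an illustration of the algebra, not a copy of any library's implementation.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def bce_from_prob(p, y):
    """-(y*log(p) + (1-y)*log(1-p)) for a single probability p."""
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))

def bce_from_logit(x, y):
    """Algebraically equal form that works on the raw score x."""
    return max(x, 0.0) - x * y + math.log1p(math.exp(-abs(x)))

for x, y in [(0.2, 1.0), (0.3, 0.0), (-0.13, 0.0), (0.73, 1.0)]:
    a = bce_from_prob(sigmoid(x), y)
    b = bce_from_logit(x, y)
    print(f"x={x:+.2f} y={y:.0f}  via sigmoid: {a:.6f}  via logit: {b:.6f}")

# For a very confident wrong prediction, sigmoid(x) rounds to exactly 1.0 and
# log(1 - p) is undefined, while the logit form stays finite (close to 40).
print(bce_from_logit(40.0, 0.0))
```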

"""

sigmoid + the probabilities of all classes are used
torch.sigmoid - F.binary_cross_entropy
=torch.sigmoid - nn.BCELoss
=F.binary_cross_entropy_with_logits
=nn.BCEWithLogitsLoss
"""
softmax = nn.Softmax(dim=1)
print('softmax=', softmax(input))
sigmoid = torch.sigmoid(input)  # same result as F.sigmoid
print('sigmoid=', sigmoid)
# softmax= tensor([[0.3006, 0.3322, 0.3672],
#                  [0.3183, 0.2851, 0.3966],
#                  [0.3693, 0.1659, 0.4648]])
# sigmoid= tensor([[0.5498, 0.5744, 0.5987],
#                  [0.4950, 0.4675, 0.5498],
#                  [0.6225, 0.4256, 0.6748]])
loss6 = F.binary_cross_entropy(sigmoid, target1)
print(loss6)
loss7 = F.binary_cross_entropy_with_logits(input, target1)
print(loss7)
loss = nn.BCELoss(reduction='mean')  # expects probabilities, so sigmoid is applied first
loss8 = loss(sigmoid, target1)
print(loss8)
loss = nn.BCEWithLogitsLoss()  # expects raw scores and applies sigmoid internally
loss9 = loss(input, target1)
print(loss9)

def binary_cross_entropyloss(prob, target, weight=None):
    if weight is None:
        weight = torch.ones_like(target)  # element-wise weight, all ones by default
    loss = -weight * (target * torch.log(prob) + (1 - target) * torch.log(1 - prob))
    # print('torch.numel(target)=', torch.numel(target))  # number of elements: 9
    loss = torch.sum(loss) / torch.numel(target)  # average over all elements
    return loss

loss10 = binary_cross_entropyloss(sigmoid, target1)
print(loss10)

Balanced cross entropy (Balanced Cross-Entropy): setting a hyperparameter

It is often used in semantic segmentation to handle the imbalance between positive and negative samples. (Binary cross entropy also lets you set a parameter that weights the positive samples, for example pos_weight in nn.BCEWithLogitsLoss.)

Balanced and focal weighting can be seen as the same kind of idea: both are modifications that can be applied to various losses. Adding a coefficient beta (B) to -(y*log(P) + (1-y)*log(1-P)) turns it into -(B*y*log(P) + (1-B)*(1-y)*log(1-P)).

Reference: "Implementing Balanced Cross-Entropy in PyTorch", fpan98's blog - CSDN Blog

In the following example, beta is 1 - sum(y)/(w*h), the fraction of negative positions in the sample. In practice you can also set it yourself as a hyperparameter.
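As a small illustration of the hyperparameter variant, here is a sketch in plain Python where beta is passed in directly. The function name balanced_bce, the default beta=0.75, and the choice to average rather than sum are my own assumptions for the example.

```python
import math

def balanced_bce(probs, targets, beta=0.75):
    """Mean of -(beta*y*log(p) + (1-beta)*(1-y)*log(1-p)) over all elements."""
    total = 0.0
    for p, y in zip(probs, targets):
        total -= beta * y * math.log(p) + (1 - beta) * (1 - y) * math.log(1 - p)
    return total / len(probs)

# One positive among four elements, all predicted with probability 0.3.
probs = [0.3, 0.3, 0.3, 0.3]
targets = [1, 0, 0, 0]

# beta = 0.5 weights both terms equally (half of the plain BCE value).
print(balanced_bce(probs, targets, beta=0.5))
# A larger beta makes the missed positive dominate the loss.
print(balanced_bce(probs, targets, beta=0.75))
# beta derived from the data, as in the example in this post: 1 - sum(y)/n.
beta_from_data = 1 - sum(targets) / len(targets)
print(beta_from_data, balanced_bce(probs, targets, beta=beta_from_data))
```

With few positives, the data-derived beta is large, so the rare positive term is weighted up and the many negative terms are weighted down.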

input = torch.tensor([[0.2, 0.3, 0.4],
                      [-0.02, -0.13, 0.2],
                      [0.5, -0.3, 0.73]])
target2 = torch.FloatTensor([[1, 0, 0],
                             [1, 1, 1],
                             [0, 1, 1]])

def balanced_loss(input, target):
    # input holds probabilities (sigmoid outputs), not raw scores
    input = input.view(input.shape[0], -1)
    target = target.view(target.shape[0], -1)
    loss = 0.0
    for i in range(input.shape[0]):
        # In this example beta is tensor(0.6667), tensor(0.), tensor(0.3333) for the three rows
        beta = 1 - torch.sum(target[i]) / target.shape[1]  # fraction of non-1 positions in the sample
        print('beta=', beta)
        # Bound the logs below by -100 so that log(0) cannot produce -inf
        x = torch.max(torch.log(input[i]), torch.tensor([-100.0]))
        y = torch.max(torch.log(1 - input[i]), torch.tensor([-100.0]))
        l = -(beta * target[i] * x + (1 - beta) * (1 - target[i]) * y)
        print('l=', l)
        loss += torch.sum(l)
    return loss

loss11 = balanced_loss(sigmoid, target2)

Balanced cross entropy (Balanced Cross-Entropy): fixing the positive-to-negative ratio with online hard example mining

In this example, the number of negative samples that take part in the loss is limited to at most 3 times the number of positive samples, and the hardest negatives (those with the largest loss) are the ones selected.

Another of my posts also covers online hard example mining and its code: PSENet based on an FPN built on a PyTorch ResNet, Beiluo sect XY's blog - CSDN Blog
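The selection rule can be sketched on a flat list of per-pixel losses in plain Python. The function name ohem_balanced_mean and the loss values are hypothetical; only the rule itself (all positives, plus the largest negatives up to 3 times the positive count) comes from the description above.

```python
def ohem_balanced_mean(losses, labels, negative_ratio=3.0, eps=1e-6):
    """Average all positive losses plus the largest negative losses.

    At most negative_ratio * (number of positives) negatives are kept.
    """
    positive = [v for v, y in zip(losses, labels) if y == 1]
    negative = [v for v, y in zip(losses, labels) if y == 0]
    keep = min(len(negative), int(len(positive) * negative_ratio))
    hardest = sorted(negative, reverse=True)[:keep]
    return (sum(positive) + sum(hardest)) / (len(positive) + keep + eps), hardest

# Hypothetical per-pixel losses: 1 positive and 6 negatives.
losses = [0.9, 0.05, 0.7, 0.1, 0.4, 0.02, 0.3]
labels = [1, 0, 0, 0, 0, 0, 0]

mean_loss, hardest = ohem_balanced_mean(losses, labels)
print(hardest)    # the 3 negatives with the largest loss
print(mean_loss)
```

The three easy negatives (0.05, 0.1, 0.02) are dropped, so they no longer dilute the average.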

class BalanceCrossEntropyLoss(nn.Module):
    '''
    Balanced cross entropy loss.
    Shape:
        - Input: :math:`(N, 1, H, W)`
        - GT: :math:`(N, 1, H, W)`, same shape as the input
        - Mask: :math:`(N, H, W)`, same spatial shape as the input
        - Output: scalar.

    Examples::

        >>> m = nn.Sigmoid()
        >>> loss = nn.BCELoss()
        >>> input = torch.randn(3, requires_grad=True)
        >>> target = torch.empty(3).random_(2)
        >>> output = loss(m(input), target)
        >>> output.backward()
    '''

    def __init__(self, negative_ratio=3.0, eps=1e-6):
        super(BalanceCrossEntropyLoss, self).__init__()
        self.negative_ratio = negative_ratio
        self.eps = eps

    def forward(self,
                pred: torch.Tensor,
                gt: torch.Tensor,
                mask: torch.Tensor,
                return_origin=False):
        '''
        Args:
            pred: shape :math:`(N, 1, H, W)`, the prediction of network
            gt: shape :math:`(N, 1, H, W)`, the target
            mask: shape :math:`(N, H, W)`, the mask indicates positive regions
        '''
        positive = (gt * mask).byte()
        negative = ((1 - gt) * mask).byte()
        positive_count = int(positive.float().sum())
        # Keep at most negative_ratio times as many negatives as positives
        negative_count = min(int(negative.float().sum()),
                             int(positive_count * self.negative_ratio))
        loss = nn.functional.binary_cross_entropy(
            pred, gt, reduction='none')[:, 0, :, :]
        positive_loss = loss * positive.float()
        negative_loss = loss * negative.float()
        # Online hard example mining: keep only the negatives with the largest loss
        negative_loss, _ = torch.topk(negative_loss.view(-1), negative_count)
        balance_loss = (positive_loss.sum() + negative_loss.sum()) /\
            (positive_count + negative_count + self.eps)
        if return_origin:
            return balance_loss, loss
        return balance_loss

Focal Loss

Focal loss focuses training on hard samples: each term is reweighted according to the probability P output by the model, which adds one more hyperparameter, gamma.

-(B*((1-P)**gamma)*y*log(P) + (1-B)*(P**gamma)*(1-y)*log(1-P))
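To see what the extra factor does, the sketch below evaluates the formula for single positive samples in plain Python. The function names and the values alpha=0.25 and gamma=2.0 are chosen only for illustration. For a positive sample the factor (1-P)**gamma is the fraction of the balanced cross entropy term that survives.

```python
import math

def focal_term(p, y, alpha=0.25, gamma=2.0):
    """Focal loss of one prediction p (probability of the positive class)."""
    if y == 1:
        return -alpha * (1 - p) ** gamma * math.log(p)
    return -(1 - alpha) * p ** gamma * math.log(1 - p)

def balanced_term(p, y, alpha=0.25):
    """The same term with gamma = 0, i.e. balanced cross entropy."""
    return focal_term(p, y, alpha=alpha, gamma=0.0)

for p in (0.95, 0.6, 0.1):   # easy, medium and hard positive samples
    ratio = focal_term(p, 1) / balanced_term(p, 1)
    print(f"P={p:.2f}  focal={focal_term(p, 1):.5f}  kept fraction={ratio:.4f}")
```

An easy sample (P close to 1) keeps only a tiny fraction of its loss, while a hard sample keeps most of it, so hard samples dominate the total.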

class BCEFocalLosswithLogits(nn.Module):
    def __init__(self, gamma=0.2, alpha=0.6, reduction='mean'):
        super(BCEFocalLosswithLogits, self).__init__()
        self.gamma = gamma
        self.alpha = alpha
        self.reduction = reduction

    def forward(self, logits, target):
        # logits: [N, H, W], target: [N, H, W]
        prob = torch.sigmoid(logits)  # convert raw scores to probabilities
        alpha = self.alpha
        gamma = self.gamma
        loss = - alpha * (1 - prob) ** gamma * target * torch.log(prob) - \
            (1 - alpha) * prob ** gamma * (1 - target) * torch.log(1 - prob)
        if self.reduction == 'mean':
            loss = loss.mean()
        elif self.reduction == 'sum':
            loss = loss.sum()
        return loss

L = BCEFocalLosswithLogits()
# The module applies sigmoid itself, so pass the raw scores rather than the sigmoid output
loss12 = L(input, target2)

Weighting based on the number of samples

Count the samples of each class to get the frequency p with which the class occurs, then compute the weight of each class as 1/log(a+p), where a is a smoothing hyperparameter.
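A minimal sketch of this weighting in plain Python follows. The function name class_weights, the label list, and the value a = 1.02 are assumptions made for the example. Note that a should be greater than 1 so that the logarithm stays positive for every frequency.

```python
import math
from collections import Counter

def class_weights(labels, a=1.02):
    """Weight each class by 1 / log(a + p), p being the class frequency."""
    counts = Counter(labels)
    total = len(labels)
    return {cls: 1.0 / math.log(a + n / total) for cls, n in sorted(counts.items())}

# Hypothetical label list: class 0 is common, class 2 is rare.
labels = [0] * 80 + [1] * 15 + [2] * 5
weights = class_weights(labels)
for cls, w in weights.items():
    print(cls, round(w, 3))
```

The rarer the class, the larger its weight; the smoothing term a keeps the weight of a very rare class from growing without bound.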

Dice loss

It is suited to imbalanced positive and negative samples and is commonly used in semantic segmentation when the numbers of foreground and background pixels differ greatly. It is computed as

1 - 2*abs(y*p)/(abs(y)+abs(p))

In the actual calculation y is always greater than or equal to 0, and p is greater than or equal to 0 after sigmoid, so the code has no abs function. The code below uses the squared variant of the denominator, sum(p*p) + sum(y*y), with a small constant added to avoid division by zero.

See also the PSENet post mentioned above, which has code combining online hard example mining (positive-to-negative ratio controlled at 1:3) with dice loss.
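A worked example on a tiny flattened mask shows how the loss behaves when foreground pixels are rare. This is a plain-Python sketch; the name dice_loss_1d and the sample masks are hypothetical, and the squared denominator follows the variant described above.

```python
def dice_loss_1d(pred, target, smooth=0.001):
    """1 - 2*sum(p*y) / (sum(p*p) + sum(y*y)) for one flattened sample."""
    inter = sum(p * y for p, y in zip(pred, target))
    denom = sum(p * p for p in pred) + sum(y * y for y in target) + 2 * smooth
    return 1 - 2 * inter / denom

target = [1, 1, 0, 0, 0, 0, 0, 0]   # 2 foreground pixels, 6 background pixels

print(dice_loss_1d([1, 1, 0, 0, 0, 0, 0, 0], target))   # perfect overlap, close to 0
print(dice_loss_1d([0, 0, 0, 0, 0, 0, 0, 0], target))   # foreground missed, equals 1
print(dice_loss_1d([1, 0, 0, 0, 0, 0, 0, 0], target))   # half of the foreground found
```

Predicting all background gets 6 of 8 pixels right, yet the dice loss is at its maximum, which is why it copes well with a dominant background.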

def dice_loss(input, target):
    # Flatten each sample to a vector: [N, ...] -> [N, D]
    input = input.contiguous().view(input.size()[0], -1)
    target = target.contiguous().view(target.size()[0], -1).float()
    a = torch.sum(input * target, 1)         # intersection
    b = torch.sum(input * input, 1) + 0.001  # small constant avoids division by zero
    c = torch.sum(target * target, 1) + 0.001
    d = (2 * a) / (b + c)                    # dice coefficient per sample
    return 1 - d

L1 loss

A regression loss. It ignores the sign of the error and is computed as the mean of abs(y-p).

from torch.nn import L1Loss

loss = L1Loss()
loss13 = loss(input, target1)
print(loss13)
# The same value computed by hand: mean absolute difference
tmp = torch.abs(input - target1)
loss14 = torch.sum(tmp) / torch.numel(tmp)
print(loss14)

Loss becomes NaN

The typical symptom: the loss decreases normally at the start of training, then NaN appears sporadically, and eventually every value is NaN. This is typically a symptom of exploding gradients, and it can happen with all kinds of models and all kinds of losses. Some cases reported by other bloggers:

"Problems and solutions when training YOLOv3 on your own VOC-format data", Gary zdh's blog - CSDN Blog

The author fixed it by changing the learning rate schedule to lower the learning rate.

"pytorch MultiheadAttention produces NaN", qq_37650969's blog - CSDN Blog

The author ran into the case where an entire row of the attention matrix is masked.

The causes include:

1) The loss is divided by NaN (or by zero)

2) log(0) is evaluated in the loss, for example in binary cross entropy, loss = -(y*ln(p)+(1-y)*ln(1-p))

3) All inputs to softmax are negative infinity, so the output is NaN

Common solutions include:

1) Add a small number to the cross entropy input p, which bounds the range on one side

crossentropy(out+1e-8, target)

2) Bound the range on both sides

q_logits = torch.clamp(q_logits, min=1e-7, max=1 - 1e-7) # clamp to a truncation interval
q_log_prob = torch.nn.functional.log_softmax(q_logits, dim=1)

(This interval fits values that are probabilities; raw scores fed to log_softmax would need a wider interval.)

3) Lower the learning rate to reduce oscillation

4) Check whether the loss function is appropriate for the task

5) Check whether the annotations have problems
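The log(0) cause and the clamping fix can be reproduced in a few lines of plain Python for a hand-written binary cross entropy. Floating-point tensor libraries return -inf for log(0), whereas Python's math.log raises an error, so the sketch uses a small hypothetical helper, ieee_log, to mimic the tensor behavior. The eps value is the one from the clamp in solution 2).

```python
import math

def ieee_log(x):
    """log that returns -inf at 0, as floating-point tensor libraries do."""
    return math.log(x) if x > 0 else float("-inf")

def bce(p, y):
    return -(y * ieee_log(p) + (1 - y) * ieee_log(1 - p))

def bce_clamped(p, y, eps=1e-7):
    p = min(max(p, eps), 1 - eps)    # two-sided clamp, as in solution 2)
    return bce(p, y)

# The prediction is exactly right (p = 1, y = 1), yet the naive loss is NaN:
# the second term is 0 * log(0) = 0 * (-inf), which is NaN.
print(bce(1.0, 1.0))
print(bce_clamped(1.0, 1.0))     # a tiny finite number

# A confidently wrong prediction gives inf without the clamp.
print(bce(0.0, 1.0))
print(bce_clamped(0.0, 1.0))     # large but finite
```

Once a single NaN or inf enters the loss, the gradients and then the weights become NaN as well, which matches the "sporadic first, then everywhere" pattern described above.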

Q: Why do the formulas not take the mean, while the code often does?

A: The code averages the loss over the samples in a batch; it is not averaging within a single sample. reduction can be set to 'none', 'mean', or 'sum'.
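The three reduction modes amount to the following, shown here as a plain-Python sketch on a list of per-sample losses. The function name reduce_loss is hypothetical; the numbers are the per-sample cross entropy values of the three-sample example earlier in this post.

```python
def reduce_loss(per_sample, reduction="mean"):
    """Apply a 'none' / 'mean' / 'sum' reduction to a list of per-sample losses."""
    if reduction == "none":
        return list(per_sample)
    if reduction == "sum":
        return sum(per_sample)
    if reduction == "mean":
        return sum(per_sample) / len(per_sample)
    raise ValueError(f"unknown reduction: {reduction}")

# Per-sample cross entropy of the three-sample example earlier in this post.
per_sample = [1.2019, 0.9248, 1.7962]
print(reduce_loss(per_sample, "none"))
print(reduce_loss(per_sample, "sum"))
print(reduce_loss(per_sample, "mean"))   # close to the 1.3077 reported earlier
```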

Q: What methods are there for handling class imbalance?

A: Resampling, weighting by the number of samples, balanced loss with a positive/negative hyperparameter, balanced loss with a controlled positive-to-negative ratio, and dice loss.

Recommended reading:

PyTorch learning notes (5): cross entropy loss and Focal Loss - Jianshu

边栏推荐

- Unity 获取当前物体正前方,一定角度、距离的坐标点

- 【学习笔记】搜索

- Children's unit of 2022 Paris fashion week ended successfully at Wuhan station on June 19

- 解决npm ERR! Unexpected end of JSON input while parsing near问题

- The maximum number of Rac open file descriptors, and the processing of hard check failure

- Not so Mobile

- 探讨gis三维系统在矿山行业中的应用

- App automated testing appium tutorial 2 - ADB command

- Super Jumping! Jumping! Jumping!

- 图像翻译/Transformer:ITTR: Unpaired Image-to-Image Translation with Transformers用Transfor进行非配对图像对图像的转换

猜你喜欢

The preliminary round of the sixth season of 2022 perfect children's model Foshan competition area came to a successful conclusion

Almost Union-Find(带权并查集)

Build an integrated kubernetes in Fedora

Trigonometric transformation formula

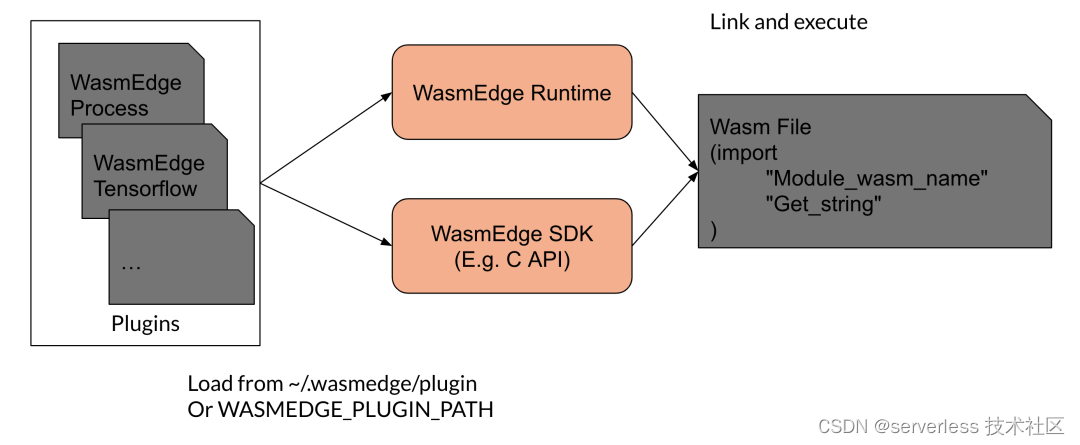

WasmEdge 0.10.0 发布!全新的插件扩展机制、Socket API 增强、LLVM 14 支持

Redis cluster deployment and application scenarios

PLSQL installation under Windows

Prometheus + grafana + MySQL master-slave replication + host monitoring

B_QuRT_User_Guide(27)

The micro kernel zephyr is supported by many manufacturers!

随机推荐

Set<String>

Discussion on the application of GIS 3D system in mining industry

三体攻击(三维拆分加二分)

Prometheus monitoring (I)

为什么函数模板没有偏特化?

NLP sequence can completely simulate human brain intelligence

Jenkins' common build trigger and hook services (V)

About RAC modifying scan IP

VMware Workstation related issues

【学习笔记】搜索

B_ QuRT_ User_ Guide(27)

Idea related issues

Solution: selenium common. exceptions. WebDriverException: Message: ‘chromedriver‘ execu

Installing mysql5.7 under Windows

Sword finger offer 03 Duplicate number in array

块级元素上下左右居中的两个小技巧

开户券商怎么选择?网上开户是否安全么?

Buffer pool in MySQL

App automated testing appium Tutorial Part 1 - advanced supplementary content

【力扣10天SQL入门】Day4 组合查询 & 指定选取