Using the Stanford Parser (intelligent language processing) to implement a tokenizer

2022-07-23 07:50:00 【Wu Nian】

Yesterday I looked into the Stanford Parser, with the idea of plugging its intelligent word segmentation into a Lucene tokenizer. Because the project schedule was tight, the investigation is incomplete and the code still has bugs. If you are interested in the same idea, I hope you can improve on it.

Lucene version: 4.10.3. Required JAR packages: stanford-parser-3.3.0-models.jar and stanford-parser.jar.

First, build a test class for the analyzer. The code is as follows:

    package main.test;

    import java.io.IOException;
    import java.io.StringReader;

    import org.apache.lucene.analysis.Analyzer;
    import org.apache.lucene.analysis.TokenStream;
    import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
    import org.apache.lucene.analysis.tokenattributes.OffsetAttribute;

    public class AnalyzerTest {

        public static void analyzer(Analyzer analyzer, String text) {
            try {
                System.out.println("Analyzer name: " + analyzer.getClass());
                // Obtain the token stream
                TokenStream tokenStream = analyzer.tokenStream("", new StringReader(text));
                tokenStream.reset();
                while (tokenStream.incrementToken()) {
                    CharTermAttribute cta1 = tokenStream.getAttribute(CharTermAttribute.class);
                    OffsetAttribute ofa = tokenStream.getAttribute(OffsetAttribute.class);
                    // Position increment attribute: stores the distance between terms
                    // PositionIncrementAttribute pia = tokenStream.getAttribute(PositionIncrementAttribute.class);
                    // System.out.print(pia.getPositionIncrement() + ":");
                    System.out.print("[" + ofa.startOffset() + "-" + ofa.endOffset() + "]-->" + cta1.toString() + "\n");
                }
                tokenStream.end();
                tokenStream.close();
            } catch (IOException e) {
                e.printStackTrace();
            }
        }

        public static void main(String[] args) {
            // Sample sentence, shown here in translation; the xinhua model
            // loaded by the tokenizer expects Chinese text.
            String chText = "Tsinghua university students say they are studying the origin of life";
            Analyzer analyzer = new NlpHhcAnalyzer();
            analyzer(analyzer, chText);
        }
    }

Next, define a new analyzer by extending the Analyzer class and overriding its createComponents method, which returns a TokenStreamComponents. Note that in Lucene 4.x, TokenStreamComponents bundles into a single component the tokenizer and filters that Lucene 3.x handled separately.

    package main.test;

    import java.io.Reader;

    import org.apache.lucene.analysis.Analyzer;

    public class NlpHhcAnalyzer extends Analyzer {

        @Override
        protected TokenStreamComponents createComponents(String fieldName, Reader reader) {
            // Wrap the custom tokenizer (class aaa); no filters are added
            return new TokenStreamComponents(new aaa(reader));
        }
    }

Finally, implement the new Tokenizer class, aaa. I did not have time to debug this part thoroughly, so treat it as a starting point; readers who have time are welcome to improve it.

    package main.test;

    import java.io.IOException;
    import java.io.Reader;
    import java.util.ArrayList;
    import java.util.Collection;
    import java.util.List;

    import org.apache.lucene.analysis.Tokenizer;
    import org.apache.lucene.analysis.tokenattributes.CharTermAttribute;
    import org.apache.lucene.analysis.tokenattributes.OffsetAttribute;
    import org.apache.lucene.util.AttributeFactory;

    import edu.stanford.nlp.parser.lexparser.LexicalizedParser;
    import edu.stanford.nlp.trees.Tree;
    import edu.stanford.nlp.trees.TypedDependency;
    import edu.stanford.nlp.trees.international.pennchinese.ChineseGrammaticalStructure;

    public class aaa extends Tokenizer {

        private static final String MODEL_PATH =
                "edu/stanford/nlp/models/lexparser/xinhuaFactoredSegmenting.ser.gz";

        // Term text attribute
        private final CharTermAttribute termAtt = addAttribute(CharTermAttribute.class);
        // Term offset attribute
        private final OffsetAttribute offsetAtt = addAttribute(OffsetAttribute.class);

        private final LexicalizedParser lp = LexicalizedParser.loadModel(MODEL_PATH);

        // Terms produced by the parser for the current input
        private final List<String> terms = new ArrayList<String>();
        // Index of the next term to emit
        private int index;
        // End offset of the last emitted term
        private int finalOffset;

        public aaa(Reader in) {
            super(in);
        }

        protected aaa(AttributeFactory factory, Reader input) {
            super(factory, input);
        }

        @Override
        public void reset() throws IOException {
            super.reset();
            terms.clear();
            index = 0;
            finalOffset = 0;

            // Read the whole input until EOF (the first draft read a fixed 100 characters)
            StringBuilder sb = new StringBuilder();
            char[] buffer = new char[1024];
            int n;
            while ((n = input.read(buffer)) != -1) {
                sb.append(buffer, 0, n);
            }
            String str = sb.toString();
            if (str.trim().isEmpty()) {
                return;
            }

            // Parse once per input instead of on every incrementToken() call
            Tree t = lp.parse(str);
            ChineseGrammaticalStructure gs = new ChineseGrammaticalStructure(t);
            Collection<TypedDependency> tdl = gs.typedDependenciesCollapsed();
            for (TypedDependency td : tdl) {
                terms.add(td.dep().nodeString().trim());
            }
        }

        @Override
        public boolean incrementToken() throws IOException {
            // Clear all term attributes
            clearAttributes();
            if (index >= terms.size()) {
                // Returning false signals that all terms have been emitted
                return false;
            }
            String term = terms.get(index++);
            // Set the term text (this also sets its length)
            termAtt.setEmpty().append(term);
            // Set the term offsets. Assumption: the dependencies come back in
            // sentence order and the terms are contiguous in the input.
            int start = finalOffset;
            int end = start + term.length();
            offsetAtt.setOffset(correctOffset(start), correctOffset(end));
            // Record the end position of the last term
            finalOffset = end;
            // Returning true signals that a term is available
            return true;
        }

        @Override
        public void end() throws IOException {
            super.end();
            offsetAtt.setOffset(correctOffset(finalOffset), correctOffset(finalOffset));
        }
    }
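
The bookkeeping that a tokenizer like aaa needs (read the whole input, hand out one term per call, track each term's offsets) can be tried out without Lucene or the Stanford Parser. The following is only a minimal sketch, assuming the segmented terms are already available as a list. TokenCursorSketch, Token, readAll and nextToken are hypothetical names invented for illustration, and the offsets are found with a forward String.indexOf search rather than taken from any parser output.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper; not part of Lucene or the Stanford Parser. */
final class TokenCursorSketch {

    /** One term plus its [start, end) character offsets in the source text. */
    static final class Token {
        final String term;
        final int start;
        final int end;

        Token(String term, int start, int end) {
            this.term = term;
            this.start = start;
            this.end = end;
        }
    }

    private final List<Token> tokens = new ArrayList<>();
    private int next = 0;

    /** Aligns each term against the text, searching forward from the previous match. */
    TokenCursorSketch(String text, List<String> terms) {
        int from = 0;
        for (String term : terms) {
            if (term.isEmpty()) {
                continue; // nothing to emit
            }
            int start = text.indexOf(term, from);
            if (start < 0) {
                continue; // term does not occur verbatim after the previous one; skip it
            }
            int end = start + term.length();
            tokens.add(new Token(term, start, end));
            from = end;
        }
    }

    /** Reads the reader until EOF rather than a fixed number of characters. */
    static String readAll(Reader in) {
        StringBuilder sb = new StringBuilder();
        char[] buffer = new char[1024];
        try {
            int n;
            while ((n = in.read(buffer)) != -1) {
                sb.append(buffer, 0, n);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return sb.toString();
    }

    /** Returns the next token, or null once every token has been emitted. */
    Token nextToken() {
        return next < tokens.size() ? tokens.get(next++) : null;
    }

    public static void main(String[] args) {
        String text = readAll(new StringReader("new york city"));
        TokenCursorSketch cursor = new TokenCursorSketch(text, Arrays.asList("new", "york", "city"));
        for (Token t = cursor.nextToken(); t != null; t = cursor.nextToken()) {
            System.out.println("[" + t.start + "-" + t.end + "]-->" + t.term);
        }
    }
}
```

Inside a Lucene Tokenizer, the same idea would mean building the list in reset() and copying one Token into the term and offset attributes on each incrementToken() call.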

边栏推荐

- Wechat campus second-hand book trading applet graduation design finished product (1) development outline

- 如何使用订单流分析工具(中)

- 我是如何在一周内拿到4份offer的?

- 局域网SDN硬核技术内幕 21 亢龙有悔——规格与限制(中)

- FastAPI学习(二)——FastAPI+Jinjia2模板渲染网页(跳转返回渲染页面)

- How to use the order flow analysis tool (in)

- Wechat campus second-hand book trading applet graduation design finished product (6) opening defense ppt

- 真人踩过的坑,告诉你避免自动化测试常犯的10个错误

- 一次 MySQL 误操作导致的事故,「高可用」都顶不住了

- Delete the duplicate items in the array (keep the last duplicate elements and ensure the original order of the array)

猜你喜欢

Information system project managers must recite the core examination points (49) contract law

The new idea 2022.2 was officially released, and the new features are really fragrant

7. Learn Mysql to select a database

延伸联接边界,扩展业务范围,全面迈向智能云网2.0时代

6-15漏洞利用-smb-RCE远程命令执行

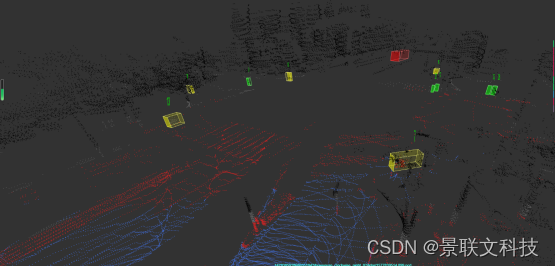

景联文科技提供3D点云-图像标注服务

Could NOT find Doxygen (missing: DOXYGEN_EXECUTABLE)

1.10 API 和字符串

类和对象(1)

93. (leaflet chapter) leaflet situation plotting - modification of attack direction

随机推荐

With 130 new services and functions a year, this storage "family bucket" has grown again

局域网SDN技术硬核内幕 8 从二层交换到三层路由

[ssm] unified result encapsulation

Redis三种集群方案

船舶测试/ IMO A.799 (19)船用结构材料不燃性试验

LAN SDN technology hard core insider 6 distributed anycast gateway

Redis——JedisConnectionException Could not get a resource from the pool

Wechat campus second-hand book trading applet graduation design finished product (1) development outline

如何在 PHP 应用程序中预防SQL 注入

ROS2常用命令行工具整理ROS2CLI

LAN SDN hard core technology insider 19 unite all forces that can be united

Patrick McHardy事件对开源社区的影响

URL的结构解读

用Stanford Parse(智能语言处理)去实现分词器

The new idea 2022.2 was officially released, and the new features are really fragrant

【无标题】

Chapter 2 how to use sourcetree to update code locally

6-13漏洞利用-smtp暴力破解

Mysql的索引为什么用B+树而不是跳表?

Understand the domestic open source Magnolia license series agreement in simple terms