
GAMES104 Lecture 12: Particle and Sound Effect Systems in Game Engines

2022-06-26 09:02:00 【Jason__ Y】

Particle and sound effect systems in game engines

Particle basics

A particle system contains various emitters, and emitters emit particles.

- A particle is a 3D model with a position, velocity, size, color, and lifetime.

- A particle's life cycle consists of spawning (Spawn), interacting with the environment, and dying. An important design point is controlling the number of particles in the scene.

- A particle emitter has three jobs:

  - Specify the rules for spawning particles

  - Specify the logic for simulating particles

  - Describe how the particles are rendered

- A particle system often has more than one type of emitter. For example, a campfire system has three kinds of emitters: flames, sparks, and smoke.

- Particle spawning: by location, particles can spawn from a single point, over a region, or on a mesh; by mode, they can spawn continuously or in bursts.

- Particle simulation:

1. The common forces acting on a particle are accumulated.

2. Particle motion is generally integrated with the explicit Euler method: the forces determine the acceleration, the acceleration updates the velocity, and the velocity updates the position.

3. Besides gravity, the simulation can also include particle rotation, color changes, size changes, and collisions with the environment.

- Particles generally come in three forms:

1. Billboard particle. The particle is a flat quad, so it really has only one face, but it always faces the camera and therefore looks three-dimensional. This is fine for small particles; for large ones, the shape should also change over time, otherwise it looks fake.

2. Mesh particle. To simulate scattered debris, 3D meshes are often used as particles, each given randomized attributes of various kinds, which makes it easier for artists to achieve the desired effect.

3. Ribbon particle. Each particle is a control point on a spline, and connecting the control points yields a continuous strip. It is often used for effects such as weapon trails. The connection between control points must be interpolated, otherwise the strip is not smooth and looks like quads stitched together. Centripetal Catmull-Rom interpolation is the usual choice: extra segments are added between particles, and their number is adjustable, at a higher CPU cost.
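The centripetal Catmull-Rom interpolation mentioned for ribbon particles can be sketched roughly as follows. This is a minimal illustration, not the lecture's implementation: the function names `catmull_rom_point` and `subdivide_ribbon` and the parameter `segments_per_span` are made up for this example, and consecutive control points are assumed to be distinct.

```python
import math

def catmull_rom_point(p0, p1, p2, p3, u, alpha=0.5):
    """Point on the span p1 -> p2 at u in [0, 1]; alpha=0.5 gives the centripetal variant."""
    def next_knot(t, a, b):
        # Knot spacing grows with distance**alpha (assumes a != b).
        return t + math.dist(a, b) ** alpha

    def lerp(a, b, ta, tb, t):
        w = (t - ta) / (tb - ta)
        return tuple(x + (y - x) * w for x, y in zip(a, b))

    t0 = 0.0
    t1 = next_knot(t0, p0, p1)
    t2 = next_knot(t1, p1, p2)
    t3 = next_knot(t2, p2, p3)
    t = t1 + (t2 - t1) * u

    # Barry-Goldman pyramid of linear interpolations.
    a1 = lerp(p0, p1, t0, t1, t)
    a2 = lerp(p1, p2, t1, t2, t)
    a3 = lerp(p2, p3, t2, t3, t)
    b1 = lerp(a1, a2, t0, t2, t)
    b2 = lerp(a2, a3, t1, t3, t)
    return lerp(b1, b2, t1, t2, t)

def subdivide_ribbon(points, segments_per_span=4):
    """Insert extra samples between interior control points (hypothetical helper)."""
    out = []
    for i in range(1, len(points) - 2):
        for s in range(segments_per_span):
            out.append(catmull_rom_point(points[i - 1], points[i],
                                         points[i + 1], points[i + 2],
                                         s / segments_per_span))
    out.append(points[-2])  # close the last span on its end control point
    return out

control_points = [(0, 0, 0), (1, 0, 0), (2, 1, 0), (3, 1, 0)]
curve = subdivide_ribbon(control_points, segments_per_span=4)
```

Raising `segments_per_span` gives a smoother strip but costs more CPU time per ribbon, which is the trade-off noted above.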

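Returning to the simulation step described earlier, one explicit Euler update for a single particle might look like the sketch below. The `Particle` fields, the `step` function, and the gravity constant are assumptions made for illustration; the position is advanced with the velocity from the start of the step, which is what makes the scheme explicit Euler.

```python
from dataclasses import dataclass

GRAVITY = (0.0, -9.8, 0.0)  # assumed constant acceleration, units per second squared

@dataclass
class Particle:
    position: list
    velocity: list
    age: float = 0.0
    lifetime: float = 2.0

def step(p, dt, force=(0.0, 0.0, 0.0), mass=1.0):
    """Advance one particle by dt; returns False once its lifetime is used up."""
    for i in range(3):
        accel = GRAVITY[i] + force[i] / mass   # forces determine acceleration
        p.position[i] += p.velocity[i] * dt    # position uses the old velocity
        p.velocity[i] += accel * dt            # acceleration updates velocity
    p.age += dt
    return p.age < p.lifetime

spark = Particle(position=[0.0, 0.0, 0.0], velocity=[1.0, 0.0, 0.0])
still_alive = step(spark, dt=0.5)
```

Rotation, color, and size changes would be extra per-particle fields updated in the same loop.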
Particle Renderer

Particle rendering has to deal with the usual transparency blending problem, so particles are sorted from far to near.

There are two ways to sort particles. One is global: sort all particles individually, which is accurate but expensive. The other is hierarchical: sort by particle system first, then by emitter, and finally sort the particles within each emitter.

Concretely, individual particles are sorted by their distance to the camera, while systems and emitters are sorted by their bounding boxes.
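The two sort keys can be illustrated with a small sketch. The function names are hypothetical, and using the center of the bounding box as the emitter's sort key is an assumption; the notes only say that emitters are sorted by bounding box.

```python
import math

def sort_particles_back_to_front(camera_pos, positions):
    """Per-particle sort: farthest from the camera first."""
    return sorted(positions, key=lambda p: math.dist(camera_pos, p), reverse=True)

def sort_emitters_back_to_front(camera_pos, emitters):
    """Per-emitter sort; each emitter is (name, aabb_min, aabb_max)."""
    def center_distance(emitter):
        _, lo, hi = emitter
        center = tuple((a + b) / 2 for a, b in zip(lo, hi))  # assumed sort key
        return math.dist(camera_pos, center)
    return sorted(emitters, key=center_distance, reverse=True)

camera = (0.0, 0.0, 0.0)
particles = [(0, 0, 1), (0, 0, 5), (0, 0, 3)]
emitters = [("smoke", (0, 0, 1), (1, 1, 2)), ("fire", (0, 0, 8), (1, 1, 9))]
sorted_particles = sort_particles_back_to_front(camera, particles)
sorted_emitters = sort_emitters_back_to_front(camera, emitters)
```

The per-emitter version sorts far fewer items, which is why it is cheaper, but two overlapping emitters can still blend in the wrong order.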

Particle rendering can also be expensive because of "full-resolution particles". When rendering an ordinary scene, the Z-buffer means only the nearest surface at each pixel actually needs to be shaded (occluded objects are skipped). A particle effect, however, can in the worst case suddenly produce many layers of particles covering the full resolution, none of which fully occludes the others, so the amount of rendering work spikes.

The solution:

Render the particles into a separate, downsampled lower-resolution target, independent of the original scene, to get the particles' color and alpha. Then upsample the result and blend it into the original scene.
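As a rough CPU-side illustration of the final blend, the sketch below upsamples a low-resolution particle buffer and composites it over the full-resolution scene. It assumes premultiplied-alpha particle colors and nearest-neighbor upsampling purely to keep the example short; the function names are hypothetical.

```python
def upsample_nearest(image, factor):
    """Repeat each low-resolution pixel factor x factor times."""
    height, width = len(image) * factor, len(image[0]) * factor
    return [[image[y // factor][x // factor] for x in range(width)]
            for y in range(height)]

def composite(scene, particles_lowres, factor):
    """scene: rows of (r, g, b); particles_lowres: rows of premultiplied (r, g, b, a)."""
    upsampled = upsample_nearest(particles_lowres, factor)
    result = []
    for scene_row, particle_row in zip(scene, upsampled):
        result.append([
            # out = particle_color + (1 - particle_alpha) * scene_color
            tuple(pc + (1.0 - p[3]) * sc for pc, sc in zip(p[:3], s))
            for s, p in zip(scene_row, particle_row)
        ])
    return result

scene = [[(1.0, 1.0, 1.0)] * 2 for _ in range(2)]   # 2x2 white scene
particles = [[(0.5, 0.0, 0.0, 0.5)]]                # 1x1 half-transparent red
blended = composite(scene, particles, factor=2)
```

Shading one low-resolution pixel here covers four scene pixels, which is where the saving comes from.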

GPU particles

As shown above, particle computation is expensive, so moving it to the GPU is one solution, for three reasons:

- High parallelism, well suited to simulating a large number of particles

- It frees up CPU time for the game logic itself

- The depth buffer is readily available for occlusion tests

The difficulty is that particles have a life cycle and are constantly spawned and destroyed, so managing them on the GPU is a hard problem.

Solution:

Initial State

First create a particle pool: design a data structure that fixes the total number of particles the system can hold and stores each particle's position, velocity, and so on. There are also two lists. The dead list records the particles that are currently dead and initially contains every particle index. The alive list records the particles that are currently alive and is initially empty.

For example, when an emitter fires 5 particles, 5 particle indices are taken from the end of the dead list and put into the alive list, and those last 5 entries are removed from the dead list.

Simulate

When time advances to the next tick, a new list, alive list 1, is created and the particles in the alive list are visited in order. If a particle has died, its index is moved to the dead list and it is skipped when rendering; if it is still alive, its index is copied into alive list 1.

Compute shaders made this straightforward, because they support atomic operations.
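The pool with its dead and alive lists can be mimicked on the CPU as in the sketch below. This is a serial Python illustration rather than GPU code, and the class and method names are hypothetical. On the GPU each list append or removal would be an atomic counter operation in a compute shader.

```python
class ParticlePool:
    def __init__(self, capacity):
        self.age = [0.0] * capacity
        self.lifetime = [0.0] * capacity
        self.dead = list(range(capacity))  # initially holds every particle index
        self.alive = []                    # initially empty

    def emit(self, count, lifetime):
        """Move up to `count` indices from the end of the dead list to the alive list."""
        for _ in range(min(count, len(self.dead))):
            index = self.dead.pop()
            self.age[index] = 0.0
            self.lifetime[index] = lifetime
            self.alive.append(index)

    def simulate(self, dt):
        """Build 'alive list 1' for the next tick, recycling dead particles."""
        next_alive = []
        for index in self.alive:
            self.age[index] += dt
            if self.age[index] >= self.lifetime[index]:
                self.dead.append(index)    # died: the slot can be reused
            else:
                next_alive.append(index)   # still alive: copy over
        self.alive = next_alive            # swap the two alive lists

pool = ParticlePool(capacity=8)
pool.emit(5, lifetime=1.0)
first_alive = list(pool.alive)
pool.simulate(0.6)
pool.emit(2, lifetime=5.0)
pool.simulate(0.6)
```

Because the pool has a fixed capacity, `emit` never allocates; it only moves indices between the two lists.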

After the live particles are updated, the GPU can also perform frustum culling on them, compute their distances to the camera, and write those into a distance buffer.

Sort, Render and Swap Alive Lists

Next come sorting, rendering, and swapping the alive lists:

1. Sort. Sort the live particles that survived culling by the values in the distance buffer.

2. Render. Render the sorted particles.

3. Swap. Swap alive list 1 and the alive list to update the set of live particles.

More on sorting: GPU sorting resembles merge sort and has O(n log n) complexity. One thread is assigned to each position of the target (sorted) sequence, and it decides which of the two source lists its element comes from. This works better than assigning one thread to each source position and having it work out where to insert, because the latter makes the writes jump around non-contiguously.
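The "one thread per target position" idea can be sketched as a function that, given an output index, works out which source list supplies that element. The binary-search formulation below is one common way to do this and is an assumption here, as is the function name; the notes do not spell out the exact scheme. It merges in ascending order for simplicity.

```python
def pick_for_output(a, b, k):
    """Value at output index k when merging sorted lists a and b (ties take a first)."""
    lo = max(0, k - len(b))
    hi = min(k, len(a))
    # Binary search for i, the number of elements of a among the first k outputs.
    while lo < hi:
        i = (lo + hi) // 2
        j = k - i
        if a[i] <= b[j - 1]:
            lo = i + 1   # a[i] must come before b[j - 1], so take more from a
        else:
            hi = i
    i, j = lo, k - lo
    if j >= len(b) or (i < len(a) and a[i] <= b[j]):
        return a[i]
    return b[j]

left = [1, 4, 7, 9]
right = [2, 3, 8]
# Each k is independent, so on a GPU every k could run in its own thread
# and write to its own contiguous output slot.
merged = [pick_for_output(left, right, k) for k in range(len(left) + len(right))]
```

Each call only reads the source lists and writes one slot, which is what keeps the writes contiguous.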

Collision detection can also use the depth buffer:

1. Reproject the particle's current position into the previous frame's screen-space texture coordinates (is this equivalent to projecting into the previous frame's camera space?).

2. Read the depth value from the previous frame's depth texture.

3. Compare the depths from steps 1 and 2 to decide whether the particle has hit the surface without passing all the way through it (a thickness value is used).

4. If a collision occurred, compute the surface normal at the collision point and the direction in which the particle bounces.
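Steps 3 and 4 above can be sketched as follows. The example assumes the particle has already been reprojected to integer pixel coordinates with a linear depth, and that the surface normal is supplied by the caller (for instance, reconstructed from neighboring depth samples). The function names and the `thickness` and `restitution` defaults are illustrative assumptions.

```python
def depth_collision(pixel, particle_depth, prev_depth, thickness=0.5):
    """True if the particle is at or behind the stored surface but within `thickness` of it."""
    x, y = pixel
    surface_depth = prev_depth[y][x]
    return surface_depth <= particle_depth <= surface_depth + thickness

def bounce(velocity, normal, restitution=0.6):
    """Reflect velocity about a unit normal and scale by an assumed restitution factor."""
    d = sum(v * n for v, n in zip(velocity, normal))
    return tuple(restitution * (v - 2.0 * d * n) for v, n in zip(velocity, normal))

prev_depth = [[10.0, 10.0],
              [10.0, 5.0]]   # previous frame's depth texture (toy 2x2 example)
hit = depth_collision((1, 1), 5.2, prev_depth)
passed_through = depth_collision((1, 1), 7.0, prev_depth)
new_velocity = bounce((1.0, -2.0, 0.0), (0.0, 1.0, 0.0), restitution=1.0)
```

The thickness test is what separates a particle that has just hit a surface from one that is simply far behind it and hidden from view.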

Particle application

- Particles can directly stand in for objects, such as birds or pedestrians walking around in a large map (because they appear small). They still have skeletons, but each vertex is basically influenced by only one joint (a simplified human body).

In this case, the acceleration and similar behaviors of ordinary particles are replaced with more complex character poses and animations, which amounts to giving each particle a state machine. The particle's states and the corresponding attributes, such as speed, are stored in a texture. When the particle's attributes change, the matching state is looked up in the texture and the particle switches to it.

- Navigation texture. Building on the above, textures can be used to navigate the particles. Concretely, an SDF keeps the characters from walking into buildings, and a direction texture (DT) is set up as well. Once a particle is given a destination and an initial velocity, the direction texture's field drives it toward the destination. Some randomness can be added along the way.
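A direction texture can be thought of as a grid of 2D directions that each particle samples at its current cell. The sketch below follows such a grid for a few steps; the grid contents, the function name, and the parameters are invented for illustration, and the SDF-based avoidance of buildings is left out.

```python
def follow_direction_texture(position, direction_texture, speed, dt, steps):
    """Move a 2D point by repeatedly sampling the direction stored in its cell."""
    x, y = position
    for _ in range(steps):
        # Clamp the lookup so the sample always stays inside the texture.
        row = min(max(int(y), 0), len(direction_texture) - 1)
        col = min(max(int(x), 0), len(direction_texture[0]) - 1)
        dx, dy = direction_texture[row][col]
        x += dx * speed * dt
        y += dy * speed * dt
    return (x, y)

# 2x2 toy field: go right along the top row, then down; the goal cell stores (0, 0).
field = [[(1.0, 0.0), (0.0, 1.0)],
         [(1.0, 0.0), (0.0, 0.0)]]
final_position = follow_direction_texture((0.5, 0.5), field, speed=1.0, dt=0.5, steps=4)
```

Adding a small random offset to the sampled direction would give the crowd variation mentioned above.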

In games, particle systems were first built from "presets": the possible behaviors of a particle are fixed up front and represented and stored as a stack. Later came "graph-based" designs, which reduce the amount of code and increase flexibility. A hybrid of the two works best (the original post shows a figure here).

Sound basics

Not written up here. The material is quite fragmented, so it is better to watch the video.

3D audio rendering

Not written up here. The material is quite fragmented, so it is better to watch the video.

边栏推荐

- 唯品会工作实践 : Json的deserialization应用

- 微信小程序如何转换成百度小程序

- Yolov5进阶之一摄像头实时采集识别

- 1.26 pytorch learning

- [Matlab GUI] key ID lookup table in keyboard callback

- Reverse crawling verification code identification login (OCR character recognition)

- SRv6----IS-IS扩展

- Machine learning (Part 2)

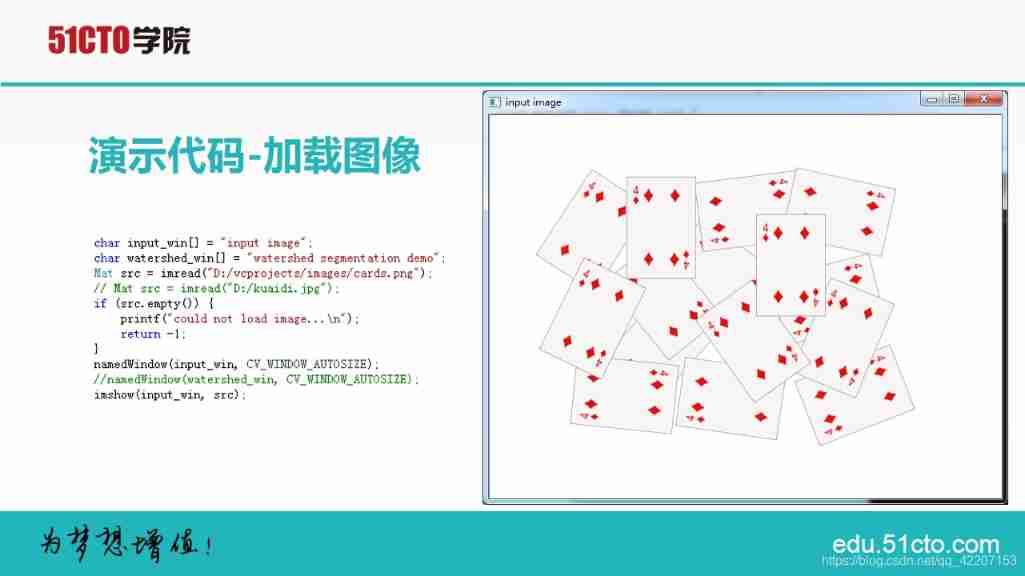

- Playing card image segmentation

- Matlab drawing checkerboard (camera calibration)

猜你喜欢

Exploration of webots and ROS joint simulation (I): software installation

Polka lines code recurrence

Trimming_ nanyangjx

QT_ AI

Principle of playing card image segmentation

Degree of freedom analysis_ nanyangjx

Yolov5 advanced zero environment rapid creation and testing

【程序的编译和预处理】

MySQL在服务里找不到(未卸载)

phpcms小程序插件api接口升级到4.3(新增批量获取接口、搜索接口等)

随机推荐

Ultrasonic image segmentation

pgsql_ UDF01_ jx

Clion installation + MinGW configuration + opencv installation

1.20 study univariate linear regression

ROS learning notes (6) -- function package encapsulated into Library and called

Degree of freedom analysis_ nanyangjx

1.21 study gradient descent and normal equation

Yolov5 advanced level 2 installation of labelimg

phpcms v9商城模块(修复自带支付宝接口bug)

[program compilation and pretreatment]

Principle of playing card image segmentation

Steps for ROS to introduce opencv (for cmakelist)

Sqoop merge usage

Convex optimization of quadruped

phpcms手机站模块实现自定义伪静态设置

攔截器與過濾器的實現代碼

Machine learning (Part 2)

编程训练7-日期转换问题

How to use leetcode

Isinstance() function usage