Convolutional Neural Network Models: VGG-16 Network Structure and Code Implementation

2022-07-25 13:08:00 【1 + 1= Wang】

A brief introduction to VGGNet

Original VGG paper: Very Deep Convolutional Networks for Large-Scale Image Recognition: https://arxiv.org/pdf/1409.1556.pdf

VGG was proposed in 2014 by the Visual Geometry Group at the University of Oxford. In that year's ImageNet competition it took first place in the localization task and second place in the classification task.

Compared with AlexNet, VGG replaces AlexNet's larger convolution kernels with several consecutive 3x3 convolution kernels.

VGG uses three stacked 3x3 convolutions in place of one 7x7 convolution, and two stacked 3x3 convolutions in place of one 5x5 convolution. This keeps the receptive field the same while increasing the depth of the network, which improves the network's performance to some extent.

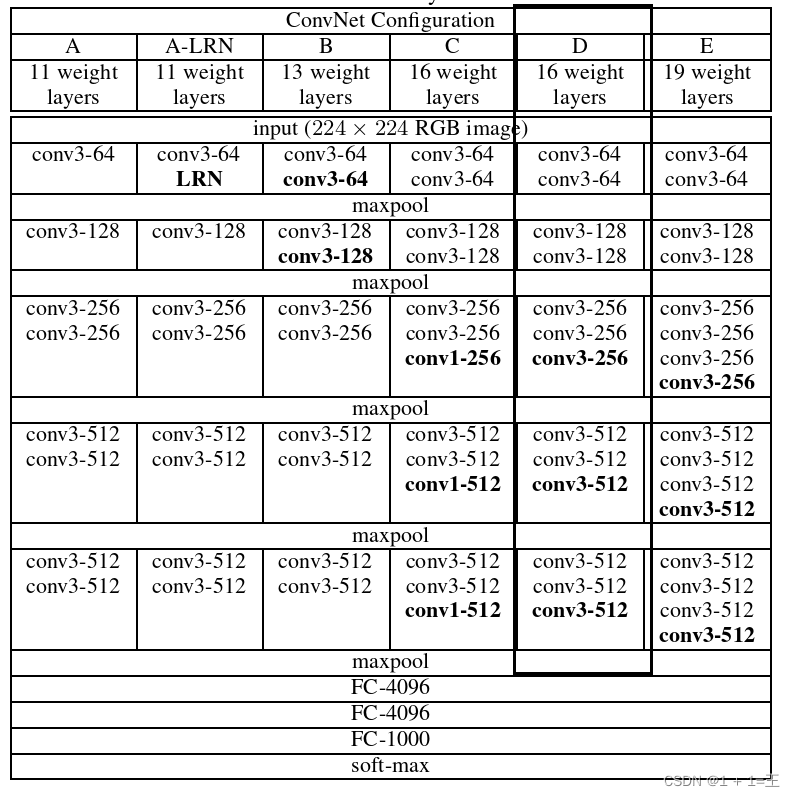
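The equivalence claimed above can be checked with a little arithmetic. The sketch below is only an illustration: the helper names are made up for this example, the convolutions are assumed to have stride 1, and the channel width of 64 is an arbitrary choice. It computes the receptive field of stacked 3x3 convolutions and compares the number of weights with a single large kernel.

```python
def stacked_receptive_field(num_layers: int, kernel_size: int = 3) -> int:
    """Receptive field of `num_layers` stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel_size - 1)


def conv_weights(num_layers: int, kernel_size: int, channels: int) -> int:
    """Weight count (biases ignored) when every layer maps `channels` -> `channels`."""
    return num_layers * kernel_size * kernel_size * channels * channels


channels = 64  # illustrative channel width, chosen only for this example

print(stacked_receptive_field(2))       # 5, the same as one 5x5 kernel
print(stacked_receptive_field(3))       # 7, the same as one 7x7 kernel
print(conv_weights(3, 3, channels))     # 110592 weights for three 3x3 layers
print(conv_weights(1, 7, channels))     # 200704 weights for one 7x7 layer
```

Under these assumptions the stacked 3x3 layers cover the same receptive field with roughly 55% of the weights (27 vs. 49 per channel pair), and they add a non-linearity after every layer.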

In the paper, the authors evaluated six network configurations (A, A-LRN, B, C, D, and E) with 11, 11, 13, 16, 16, and 19 weight layers respectively; their structures are shown below:

The most commonly used variants are VGG16 and VGG19, so we take VGG16 as the example and analyze its network structure.

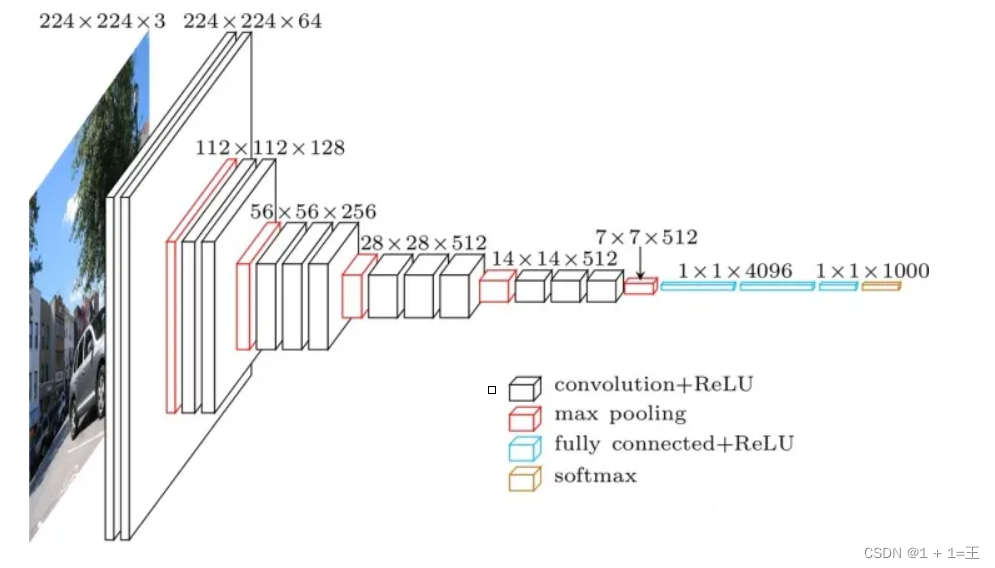

VGG16 network structure

The 16 in VGG16 means that the network has 16 weight layers (13 convolutional layers + 3 fully connected layers; pooling layers are not counted).

The input to VGG is a 224x224x3 three-channel color image, which the network classifies into 1000 categories.

Every convolutional layer uses a kernel size of 3 and a padding of 1; every pooling layer uses a kernel_size of 2 and a stride of 2.

As a result:

- A convolutional layer changes only the number of channels of the feature map, not its spatial size: (W - 3 + 2*1) / 1 + 1 = W

- A pooling layer does not change the number of channels of the feature map; it halves the height and width.
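The two rules above follow from the standard output-size formula, (W - kernel_size + 2 * padding) / stride + 1, rounded down. A minimal sketch, where `out_size` is a helper name introduced only for this example:

```python
def out_size(size: int, kernel_size: int, padding: int = 0, stride: int = 1) -> int:
    """Spatial output size of a convolution or pooling layer (floor division)."""
    return (size - kernel_size + 2 * padding) // stride + 1


# 3x3 convolution with padding 1 and stride 1: the size is unchanged
print(out_size(224, kernel_size=3, padding=1, stride=1))  # 224

# 2x2 max pooling with stride 2: the size is halved
print(out_size(224, kernel_size=2, padding=0, stride=2))  # 112
```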

VGG has an obvious block structure and can be divided into the following six blocks:

- Two convolutions + one pooling layer: conv3-64 + conv3-64 + maxpool

- Two convolutions + one pooling layer: conv3-128 + conv3-128 + maxpool

- Three convolutions + one pooling layer: conv3-256 + conv3-256 + conv3-256 + maxpool

- Three convolutions + one pooling layer: conv3-512 + conv3-512 + conv3-512 + maxpool

- Three convolutions + one pooling layer: conv3-512 + conv3-512 + conv3-512 + maxpool

- Three fully connected layers: fc-4096 + fc-4096 + fc-1000 (corresponding to the 1000 categories)
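To see how these blocks transform a 224x224x3 input, the feature-map shape can be traced by hand. The sketch below uses plain Python only; the `blocks` list is just a compact restatement of the five convolutional blocks above, and shapes are written as (channels, height, width).

```python
# (number of 3x3 convolutions, output channels) for the five convolutional blocks
blocks = [(2, 64), (2, 128), (3, 256), (3, 512), (3, 512)]

channels, size = 3, 224  # input: 3 x 224 x 224
shapes = []
for num_convs, out_channels in blocks:
    channels = out_channels  # the convolutions set the channel count; H and W are unchanged
    size //= 2               # the 2x2 max pool with stride 2 halves H and W
    shapes.append((channels, size, size))

for index, shape in enumerate(shapes, start=1):
    print(f"block {index}: {shape}")

flattened = channels * size * size             # input width of the first fc layer
weight_layers = sum(n for n, _ in blocks) + 3  # 13 conv + 3 fc
print(flattened, weight_layers)                # 25088 16
```

The last block outputs a 512 x 7 x 7 feature map, which is where the `512 * 7 * 7` input size of the first fully connected layer comes from.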

Building VGG16 with PyTorch

To make the model easier to understand, we divide the forward pass into two parts:

- The feature extraction layers (features), which contain the 13 convolutional layers;

- The classification layers (classifier), which contain the 3 fully connected layers.

features

    def make_features(self):
        # Numbers are the output channels of a 3x3 convolution; 'MaxPool' marks a pooling layer
        cfgs = [64, 64, 'MaxPool', 128, 128, 'MaxPool', 256, 256, 256, 'MaxPool',
                512, 512, 512, 'MaxPool', 512, 512, 512, 'MaxPool']
        layers = []
        in_channel = 3  # RGB input
        for cfg in cfgs:
            if cfg == "MaxPool":  # Pooling layer
                layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
            else:  # Convolutional layer followed by ReLU
                layers += [nn.Conv2d(in_channels=in_channel, out_channels=cfg, kernel_size=3, padding=1)]
                layers += [nn.ReLU(True)]
                in_channel = cfg  # the next convolution takes this layer's output
        return nn.Sequential(*layers)
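As a sanity check on the configuration list, the same loop can be walked without PyTorch to count what `make_features` would build. This is only a sketch: it assumes the default bias term of `nn.Conv2d`, and the counter names are introduced for this example.

```python
cfgs = [64, 64, 'MaxPool', 128, 128, 'MaxPool', 256, 256, 256, 'MaxPool',
        512, 512, 512, 'MaxPool', 512, 512, 512, 'MaxPool']

in_channel = 3
num_modules = 0   # entries that would end up in nn.Sequential
num_convs = 0
conv_params = 0
for cfg in cfgs:
    if cfg == 'MaxPool':
        num_modules += 1  # one nn.MaxPool2d, which has no parameters
    else:
        num_modules += 2  # one nn.Conv2d followed by one nn.ReLU
        num_convs += 1
        conv_params += in_channel * cfg * 3 * 3 + cfg  # weights + biases
        in_channel = cfg

print(num_convs)    # 13
print(num_modules)  # 31
print(conv_params)  # 14714688
```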

classifier

Note: before the fully connected layers, the three-dimensional feature map output by the convolutional layers must be flattened into a one-dimensional vector for each sample.

    # In forward(): flatten (N, 512, 7, 7) to (N, 512 * 7 * 7), keeping the batch dimension
    x = torch.flatten(x, start_dim=1)

    # In __init__(): three fully connected layers, with dropout after the first two
    self.classifier = nn.Sequential(
        nn.Linear(512 * 7 * 7, 4096),
        nn.ReLU(True),
        nn.Dropout(p=0.5),
        nn.Linear(4096, 4096),
        nn.ReLU(True),
        nn.Dropout(p=0.5),
        nn.Linear(4096, 1000)
    )
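The size of this classifier is easy to underestimate. The following sketch counts its parameters by hand, assuming the default bias term of `nn.Linear`; the variable names are introduced only for this example.

```python
# (in_features, out_features) of the three fully connected layers
layer_sizes = [(512 * 7 * 7, 4096), (4096, 4096), (4096, 1000)]

# weights + biases per layer
params = [n_in * n_out + n_out for n_in, n_out in layer_sizes]

print(params)       # [102764544, 16781312, 4097000]
print(sum(params))  # 123642856
```

The first fully connected layer alone holds more than 100 million parameters, because it is connected to every one of the 512 * 7 * 7 = 25088 flattened feature values.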

Complete code

    """
    # -*- coding: utf-8 -*-
    # @author: wangyu a beginner programmer, striving to be the strongest.
    # @date: 2022/7/1 15:01
    """
    import torch
    import torch.nn as nn


    class VGG(nn.Module):
        def __init__(self):
            super(VGG, self).__init__()
            # 13 convolutional layers (each followed by ReLU) and 5 max-pooling layers
            self.features = self.make_features()
            # 3 fully connected layers
            self.classifier = nn.Sequential(
                nn.Linear(512 * 7 * 7, 4096),
                nn.ReLU(True),
                nn.Dropout(p=0.5),
                nn.Linear(4096, 4096),
                nn.ReLU(True),
                nn.Dropout(p=0.5),
                nn.Linear(4096, 1000)
            )

        def forward(self, x):
            x = self.features(x)               # (N, 3, 224, 224) -> (N, 512, 7, 7)
            x = torch.flatten(x, start_dim=1)  # (N, 512, 7, 7) -> (N, 512 * 7 * 7)
            x = self.classifier(x)             # (N, 512 * 7 * 7) -> (N, 1000)
            return x

        def make_features(self):
            # Numbers are the output channels of a 3x3 convolution; 'MaxPool' marks a pooling layer
            cfgs = [64, 64, 'MaxPool', 128, 128, 'MaxPool', 256, 256, 256, 'MaxPool',
                    512, 512, 512, 'MaxPool', 512, 512, 512, 'MaxPool']
            layers = []
            in_channel = 3  # RGB input
            for cfg in cfgs:
                if cfg == "MaxPool":  # Pooling layer
                    layers += [nn.MaxPool2d(kernel_size=2, stride=2)]
                else:  # Convolutional layer followed by ReLU
                    layers += [nn.Conv2d(in_channels=in_channel, out_channels=cfg, kernel_size=3, padding=1)]
                    layers += [nn.ReLU(True)]
                    in_channel = cfg  # the next convolution takes this layer's output
            return nn.Sequential(*layers)


    net = VGG()
    print(net)

边栏推荐

- Lu MENGZHENG's "Fu of broken kiln"

- Substance designer 2021 software installation package download and installation tutorial

- Atcoder beginer contest 261e / / bitwise thinking + DP

- Chapter5 : Deep Learning and Computational Chemistry

- [rust] reference and borrowing, string slice type (& STR) - rust language foundation 12

- 公安部:国际社会普遍认为中国是世界上最安全的国家之一

- go : gin 自定义日志输出格式

- The world is exploding, and the Google server has collapsed

- Shell common script: judge whether the file of the remote host exists

- 卷积核越大性能越强?一文解读RepLKNet模型

猜你喜欢

The larger the convolution kernel, the stronger the performance? An interpretation of replknet model

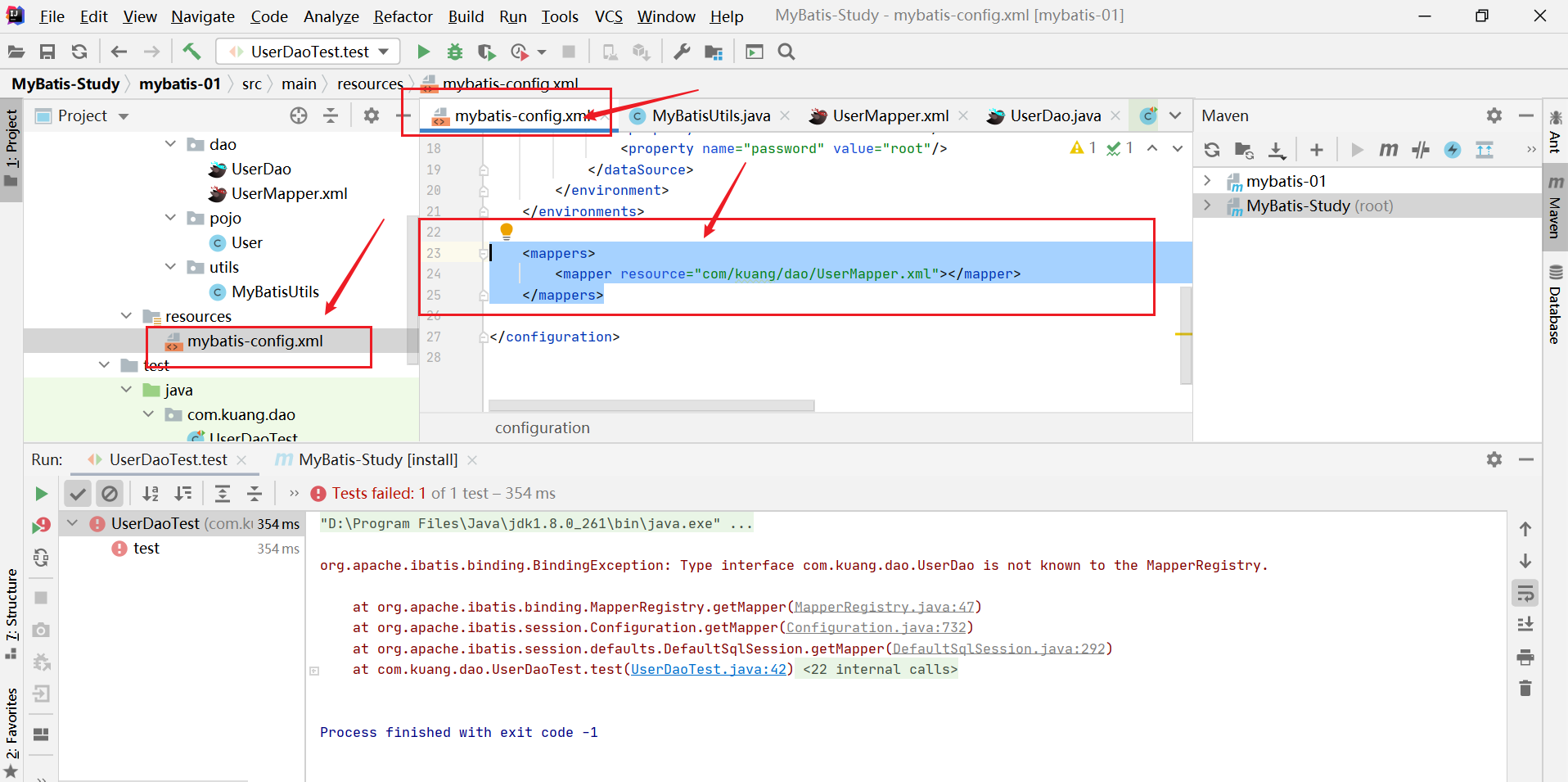

【问题解决】ibatis.binding.BindingException: Type interface xxDao is not known to the MapperRegistry.

A hard journey

【AI4Code】《CodeBERT: A Pre-Trained Model for Programming and Natural Languages》 EMNLP 2020

AtCoder Beginner Contest 261 F // 树状数组

零基础学习CANoe Panel(15)—— 文本输出(CAPL Output View )

VIM tip: always show line numbers

【AI4Code】《CoSQA: 20,000+ Web Queries for Code Search and Question Answering》 ACL 2021

Leetcode 1184. distance between bus stops

2022.07.24 (lc_6124_the first letter that appears twice)

随机推荐

零基础学习CANoe Panel(16)—— Clock Control/Panel Control/Start Stop Control/Tab Control

【AI4Code】《GraphCodeBERT: Pre-Training Code Representations With DataFlow》 ICLR 2021

I want to ask whether DMS has the function of regularly backing up a database?

跌荡的人生

Vim技巧:永远显示行号

卷积神经网络模型之——AlexNet网络结构与代码实现

[machine learning] experimental notes - emotion recognition

If you want to do a good job in software testing, you can first understand ast, SCA and penetration testing

CONDA common commands: install, update, create, activate, close, view, uninstall, delete, clean, rename, change source, problem

公安部:国际社会普遍认为中国是世界上最安全的国家之一

B树和B+树

2022 年中回顾 | 大模型技术最新进展 澜舟科技

[机器学习] 实验笔记 – 表情识别(emotion recognition)

State mode

【问题解决】org.apache.ibatis.exceptions.PersistenceException: Error building SqlSession.1 字节的 UTF-8 序列的字

OAuth,JWT ,OIDC你们搞得我好乱啊

Zero basic learning canoe panel (15) -- CAPL output view

Chapter5 : Deep Learning and Computational Chemistry

如何理解Keras中的指标Metrics

Summary of Niuke forum project deployment