
Combining Rook-Ceph with Rainbond: a cloud native storage solution in practice

2022-07-01 05:16:00 【Haoyuyun sect】

When the foundation is not solid, everything built on it shakes. Whatever the architecture, the choice of underlying storage deserves careful discussion. Storage carries the business data, and its performance directly affects how business applications actually perform. Because storage and business data are so closely tied, its reliability matters just as much: if a storage failure causes business data to be lost, the result is a disaster.

1. Choosing storage in the cloud native era

In recent years, my work has revolved around building Kubernetes clusters for customers. How to choose a stable, reliable, high-performance storage solution for a Kubernetes cluster is a question that has troubled me throughout.

A storage volume must be remountable after a Pod drifts to another node. This basic functional requirement led me to focus on shared file system storage from the start. I first chose NFS, then moved to GlusterFS, and only recently began exploring other, better cloud native storage solutions. Along the way I have built up some understanding of each of them. Each has its own characteristics:

NFS: NFS is a long-established storage solution based on network file sharing. Its strengths are simplicity and efficiency. Its weaknesses are just as obvious: the server is a single point of failure, and there is no data replication mechanism. In scenarios that do not demand high reliability, NFS is still the best choice.

GlusterFS: an open source distributed shared storage solution. Compared with NFS, GlusterFS improves data reliability through multiple replicas, and because capacity is added by adding Bricks, a storage cluster is no longer limited to a single server. GlusterFS was once our department's first choice for production: setting the replication factor to 3 ensures data reliability while also avoiding the split-brain problem of distributed systems. After working with GlusterFS for a long time, we also found its performance weak spot: workloads that read and write many small files. And storage that offers only a shared file system gradually stopped meeting the needs of our use cases.

We never stopped looking for more suitable storage. Cloud native has been a hot concept for the past two years, and a steady stream of cloud native projects has emerged in the community, storage projects among them. At first we set our sights on Ceph; what attracted us most was its high-performance block device storage. However, its complex deployment and high operations threshold put me off for a while. The arrival of Rook, a CNCF graduated project, finally removed the last barrier to getting started with Ceph.

Rook provides a cloud native storage orchestration tool. It offers platform-level and framework-level support for various types of storage and takes over the installation and operation of the storage software. In version 0.9, released in 2018, Rook officially made the Ceph Operator a stable feature, and that was several years ago. Deploying and managing production-grade Ceph clusters with Rook is quite robust.

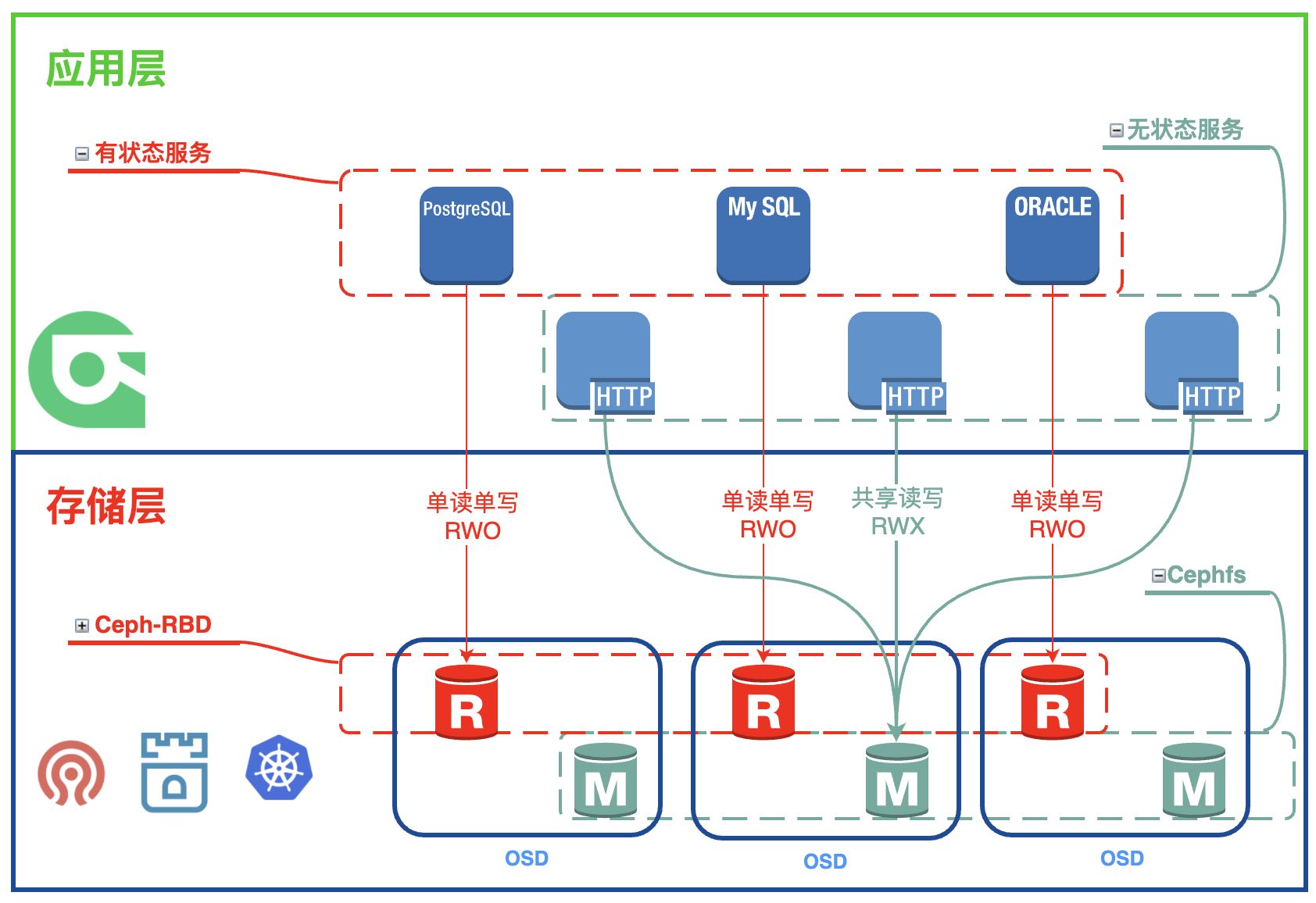

Compared with GlusterFS, Rook-Ceph provides high-performance block device storage, which is like attaching a hard disk to a Pod, so it handles workloads with many small-file reads and writes without difficulty. Besides block devices, Rook-Ceph can also provide distributed shared storage based on CephFS and object storage based on the S3 protocol. These storage types are managed in one place, and a visual management interface is provided, which is very friendly to operations staff.

As a CNCF graduated project, Rook-Ceph's support for cloud native scenarios is beyond doubt. A deployed Rook-Ceph cluster provides CSI plugins and provisions data volumes to Kubernetes in the form of StorageClasses, which also makes it friendly to cloud native PaaS platforms that are compatible with the CSI specification.

2. Integrating Rainbond with Rook

Rainbond v5.7.0-release added support for the Kubernetes Container Storage Interface (CSI).

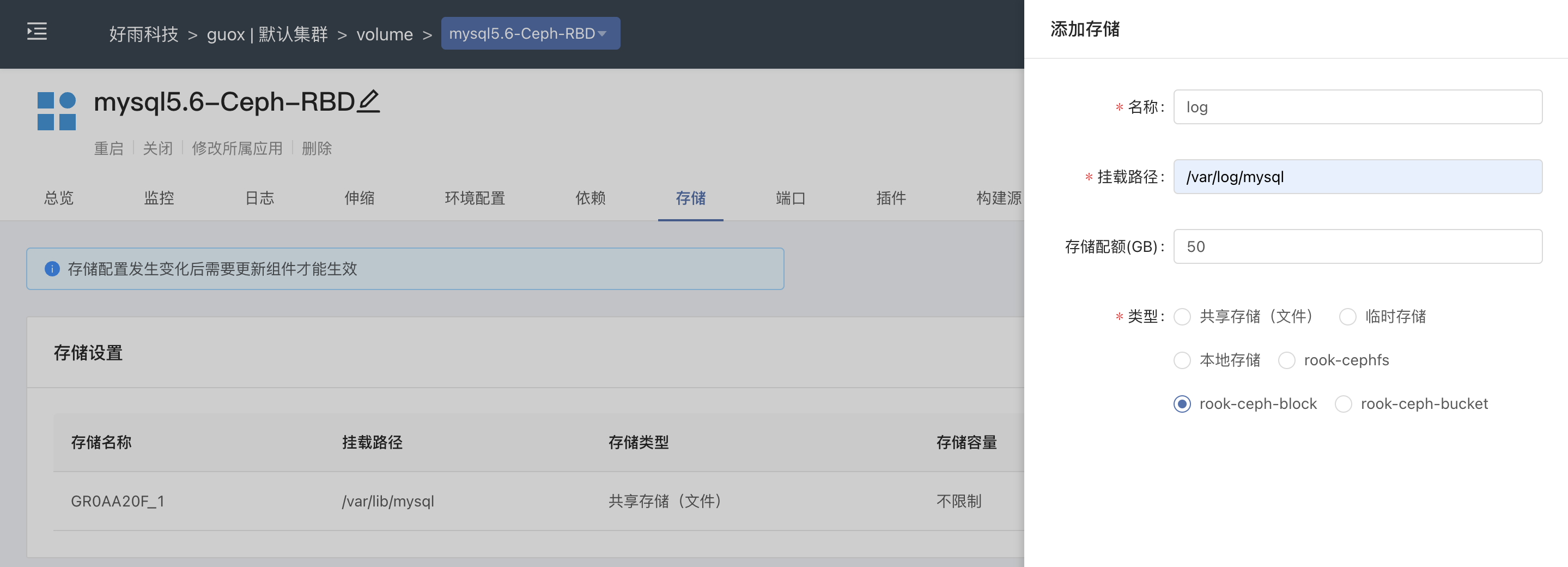

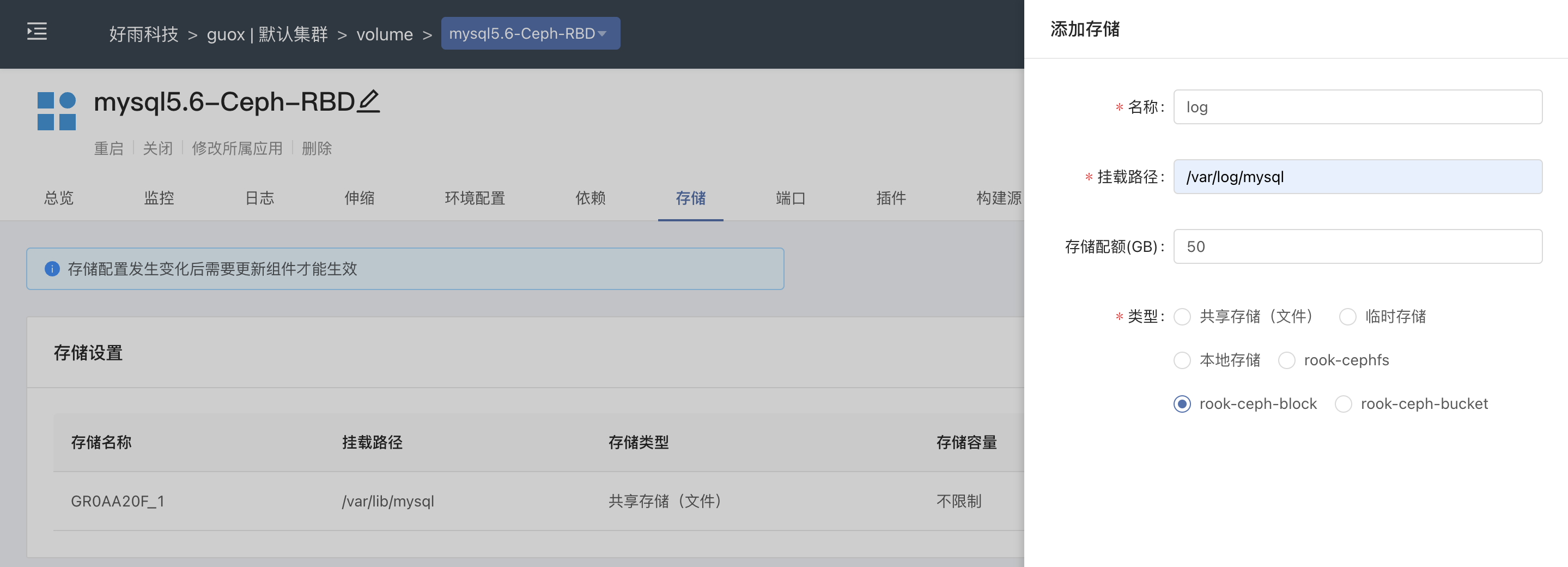

During installation and deployment, Rainbond references CephFS as the default shared storage for all service components. For stateful service components, you can choose any StorageClass available in the current cluster when adding persistent storage. Selecting rook-ceph-block requests a block device to mount. Everything is done through the graphical interface, which is very convenient.

For how to deploy Rook-Ceph and connect it to Rainbond, see the Rook-Ceph integration documentation.

3. Hands-on experience

In this chapter, I describe in an intuitive way what it is like to use Rainbond once it is connected to Rook-Ceph storage.

3.1 Using shared storage

During installation, Rainbond connects to CephFS as the cluster's shared storage through specified parameters. When installing Rainbond with Helm, the key parameters are:

    --set Cluster.RWX.enable=true \
    --set Cluster.RWX.config.storageClassName=rook-cephfs \
    --set Cluster.RWO.enable=true \
    --set Cluster.RWO.storageClassName=rook-ceph-block

For any service component deployed on the Rainbond platform, you only need to mount persistent storage and select the default shared storage. This is equivalent to persisting the data into the CephFS file system.
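The four parameters above can be assembled from two StorageClass names, which makes it easier to swap in differently named classes. The following is a minimal sketch, not part of Rainbond: the helper name `rainbond_storage_flags` is hypothetical, and the release name, chart name and namespace in the commented command are assumptions that should be checked against the Rainbond installation documentation.

```shell
#!/bin/sh
# Print the storage-related Helm flags, one per line.
# $1: StorageClass for shared (RWX) volumes
# $2: StorageClass for block (RWO) volumes
rainbond_storage_flags() {
  printf '%s\n' \
    "--set Cluster.RWX.enable=true" \
    "--set Cluster.RWX.config.storageClassName=$1" \
    "--set Cluster.RWO.enable=true" \
    "--set Cluster.RWO.storageClassName=$2"
}

# Preview the flags without touching a cluster.
rainbond_storage_flags rook-cephfs rook-ceph-block

# Hypothetical full command (release, chart and namespace are assumptions):
# helm install rainbond rainbond/rainbond-cluster -n rbd-system \
#   $(rainbond_storage_flags rook-cephfs rook-ceph-block)
```

The unquoted command substitution in the commented command is deliberate: it lets the shell split the output into separate `--set` arguments.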

PV resources in the cluster can be filtered by the component's English name:

    $ kubectl get pv | grep mysqlcephfs
    pvc-faa3e796-44cd-4aa0-b9c9-62fa0fbc8417   500Gi   RWX   Retain   Bound   guox-system/manual7-volume-mysqlcephfs-0   rainbondsssc   2m7s

3.2 Mounting block devices

Apart from the default shared storage, every other StorageClass in the cluster is available to stateful services. Manually selecting rook-ceph-block creates block device storage and mounts it into the Pod. When a service component has multiple instances, each Pod gets its own block device.
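At the Kubernetes level, selecting rook-ceph-block corresponds to a PersistentVolumeClaim that names that StorageClass. Rainbond creates these claims itself, so the following is only an illustrative sketch of an equivalent hand-written claim. The helper name `render_block_pvc`, the claim name `demo-mysql-data` and the namespace `demo` are hypothetical.

```shell
#!/bin/sh
# Print a PersistentVolumeClaim manifest for an RBD-backed volume.
# $1: claim name (hypothetical), $2: requested size, e.g. 50Gi
render_block_pvc() {
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $1
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: rook-ceph-block
  resources:
    requests:
      storage: $2
EOF
}

# Preview the manifest without touching a cluster.
render_block_pvc demo-mysql-data 50Gi

# Create it in a namespace of your choice (requires kubectl):
# render_block_pvc demo-mysql-data 50Gi | kubectl apply -n demo -f -
```

The claim uses the ReadWriteOnce access mode, which is why every instance of a multi-instance component needs its own volume rather than sharing one.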

Query the generated PV resources:

    $ kubectl get pv | grep mysql6-0
    pvc-5172cb7a-cf5b-4770-afff-153c981ab09b   50Gi   RWO   Delete   Bound   guox-system/manual6-app-a710316d-mysql6-0   rook-ceph-block   5h15m

3.3 Enabling the dashboard

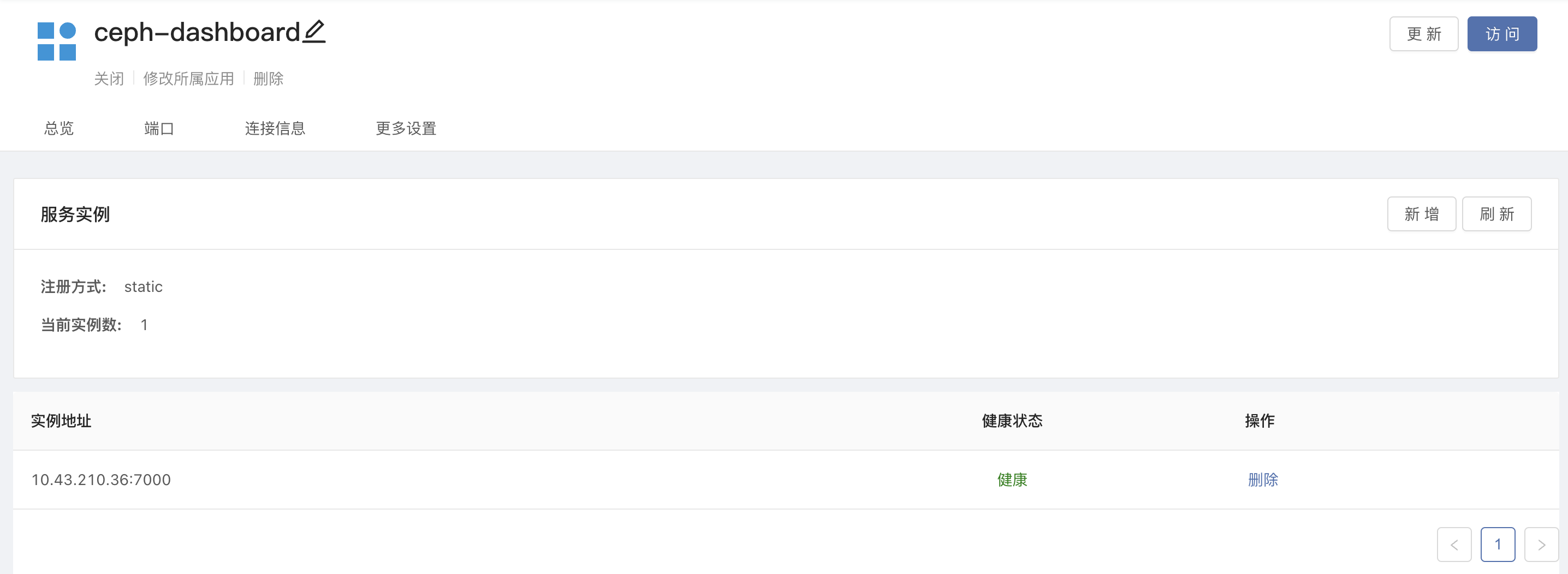

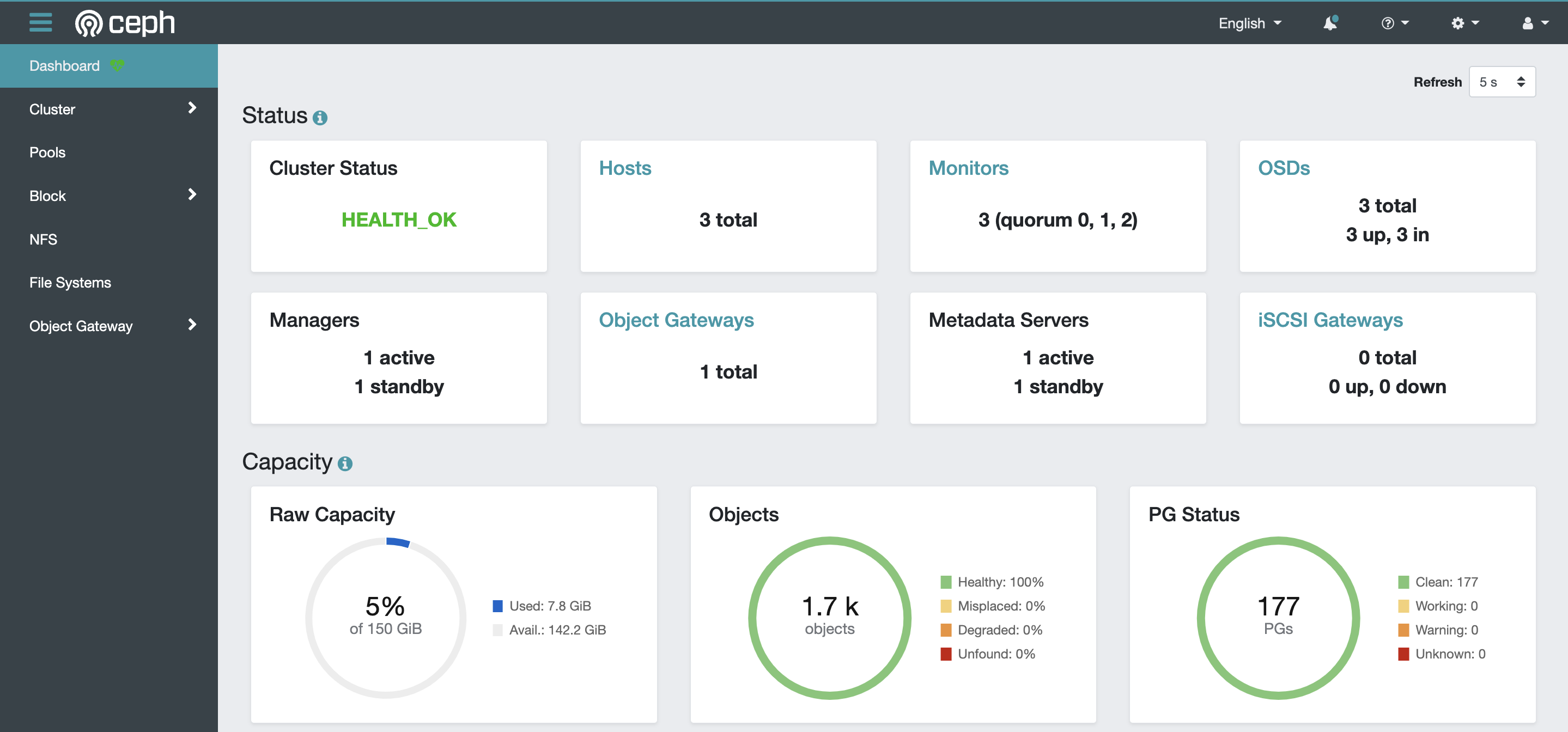

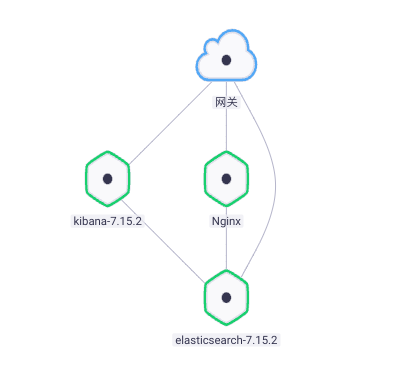

Rook-Ceph installs Ceph-dashboard, its visual management interface, by default. From it you can monitor the entire storage cluster and change the configuration of the various storage types through the graphical interface.

Modify the Ceph cluster configuration to disable the dashboard's built-in SSL:

    $ kubectl -n rook-ceph edit cephcluster rook-ceph
    # set ssl to false
    spec:
      dashboard:
        enabled: true
        ssl: false
    # restart the operator so the configuration takes effect
    $ kubectl delete po -l app=rook-ceph-operator -n rook-ceph
    $ kubectl -n rook-ceph get service rook-ceph-mgr-dashboard
    NAME                      TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
    rook-ceph-mgr-dashboard   ClusterIP   10.43.210.36   <none>        7000/TCP   118m

Once you have the Service, proxy it on the platform as a third-party component. After its external service address is opened, the dashboard can be reached through the gateway.
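For scripted setups, the interactive edit of the CephCluster resource could be replaced by a merge patch. This is a sketch of an alternative, not the procedure the article used: the helper name `dashboard_ssl_patch` is hypothetical, and restarting the operator afterwards is still assumed to be needed, as in the steps above.

```shell
#!/bin/sh
# Build the JSON merge patch that sets spec.dashboard.ssl on the CephCluster.
# $1: "true" or "false"
dashboard_ssl_patch() {
  case "$1" in
    true|false)
      printf '{"spec":{"dashboard":{"ssl":%s}}}\n' "$1"
      ;;
    *)
      echo "usage: dashboard_ssl_patch true|false" >&2
      return 1
      ;;
  esac
}

# Preview the patch without touching a cluster.
dashboard_ssl_patch false

# Apply it (requires kubectl and access to the cluster):
# kubectl -n rook-ceph patch cephcluster rook-ceph --type merge \
#   -p "$(dashboard_ssl_patch false)"
```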

After opening the dashboard, log in as the default user admin. Run the following command on the server to get the password:

    kubectl -n rook-ceph get secret rook-ceph-dashboard-password -o jsonpath="{['data']['password']}" | base64 --decode && echo

3.4 Using object storage

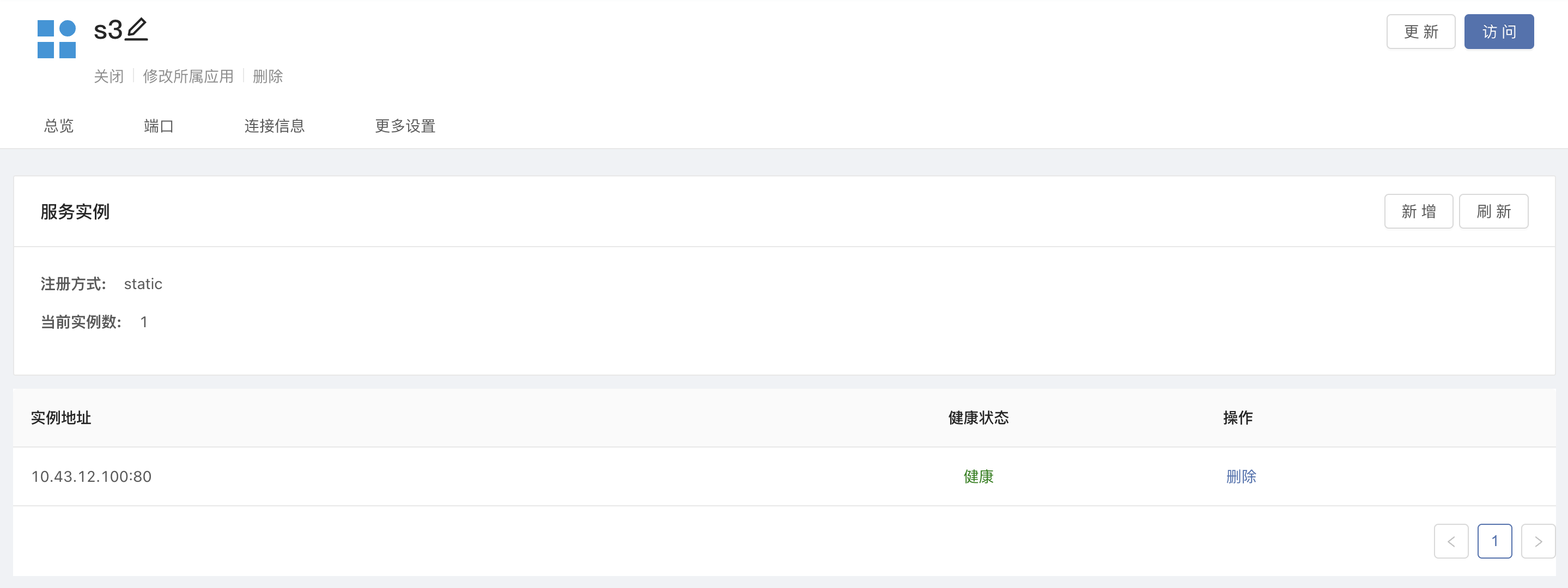

Following the Rook-Ceph deployment and integration documentation, you can deploy an object store in Rook-Ceph. Simply proxy the ClusterIP of the object store Service as a third-party service, and you get an object storage address that all clusters managed by the same console can access at the same time. Rainbond builds on this to implement cloud backup and migration.

Get the Service address of the object store:

    $ kubectl -n rook-ceph get service rook-ceph-rgw-my-store
    NAME                     TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)   AGE
    rook-ceph-rgw-my-store   ClusterIP   10.43.12.100   <none>        80/TCP    3h40m

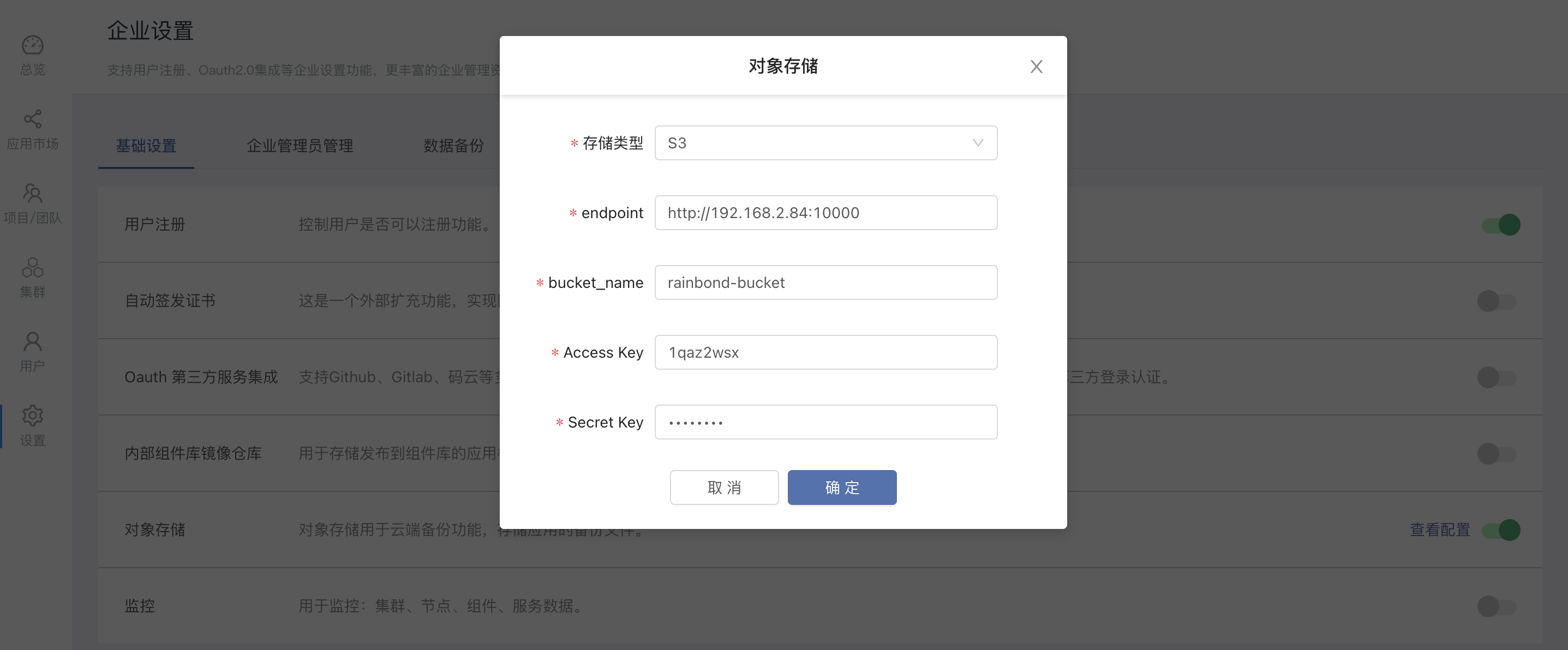

To connect the object storage, fill in the bucket, access-key and secret-key of the object store created in Ceph-dashboard under enterprise settings.

4. Performance comparison test

We used sysbench to test the performance of MySQL instances backed by different types of storage. Apart from the storage type mounted for the data directory, all other experimental conditions were identical. Three storage types were tested: GlusterFS, CephFS and Ceph-RBD.

The data collected are the transactions per second (TPS) and queries per second (QPS) reported by sysbench:

| Storage type | MySQL memory | QPS | TPS |
|---|---|---|---|
| GlusterFS | 1G | 4600.22 | 230.01 |
| CephFS | 1G | 18095.08 | 904.74 |
| Ceph-RBD | 1G | 24852.58 | 1242.62 |

The results are clear: the Ceph block device performs best, at roughly 5.4 times the QPS of GlusterFS, and CephFS also has a clear performance advantage over GlusterFS.
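The relative speedups follow directly from the QPS column of the table. A small sketch of the arithmetic, using only the figures reported above (the helper name `ratio` is ours):

```shell
#!/bin/sh
# Ratio of two measurements, rounded to two decimals.
ratio() {
  awk -v a="$1" -v b="$2" 'BEGIN { printf "%.2f\n", a / b }'
}

# QPS figures from the table.
glusterfs_qps=4600.22
cephfs_qps=18095.08
rbd_qps=24852.58

echo "Ceph-RBD vs GlusterFS: $(ratio "$rbd_qps" "$glusterfs_qps")x"   # 5.40x
echo "CephFS vs GlusterFS:   $(ratio "$cephfs_qps" "$glusterfs_qps")x" # 3.93x
echo "Ceph-RBD vs CephFS:    $(ratio "$rbd_qps" "$cephfs_qps")x"      # 1.37x
```

The same helper shows that QPS divided by TPS is about 20 in every row, so each transaction issued about 20 queries regardless of storage type and the TPS column gives the same speedups.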

5. Closing thoughts

Support for the Kubernetes Container Storage Interface (CSI) is a major feature of Rainbond v5.7.0-release, and it lets us connect easily to Rook-Ceph, an excellent storage solution. Judging from the hands-on experience described above and the final performance comparison, Rook-Ceph will soon become a major direction for our exploration of cloud native storage.
