Share an example of a simple MapReduce method using a virtual machine

2022-06-30 04:07:00 【Xiao Zhu, classmate Wu】

I am also a newcomer to big data, so this write-up is rough and will probably only be useful to people even newer than I am. Please bear with me.

(1) First, a flow chart of the MapReduce method.

This is a method for counting how many times each word occurs in a text, and it reflects the divide-and-conquer idea behind the Hadoop ecosystem. In the flow chart, the splitting and mapping phases can run on different hosts or virtual machines, so in a distributed setup the job can run much faster. The reducing phase groups identical words together and adds up their counts, and the partial results are merged at the end. The implementation on a virtual machine is described below.
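To make the three phases concrete, here is a rough single-machine analogy using ordinary shell tools. It is not how Hadoop runs the job, only a sketch of the same idea; the function name `wordcount_local` is made up for this illustration.

```shell
# Local analogy of the word-count flow (not Hadoop itself).
wordcount_local() {
  tr -s ' \t' '\n' |                     # map: emit one word per line
  grep -v '^$' |                         # drop empty lines
  LC_ALL=C sort |                        # shuffle: bring identical words together (byte order)
  uniq -c |                              # reduce: count each group of identical words
  awk '{ printf "%s\t%s\n", $2, $1 }'    # print as "word<TAB>count"
}

# The three sample lines used later in this post.
printf 'i like python\npython like spark\nSpark link hadoop\n' | wordcount_local
```

The sort step plays the role of the shuffle: only once identical words sit next to each other can they be counted in a single pass.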

(2) Create a .txt file on the virtual machine

If you have no ready-made data set, just use the text shown above and write it into a .txt file. Make sure the Hadoop environment variables are configured on the virtual machine and that the HDFS and YARN configuration files have been edited, so that no errors are reported at run time.

[[email protected] ~]# cd /opt/rh/hadoop-3.2.2/

[[email protected] hadoop-3.2.2]# ls

bin etc hdfs include lib libexec LICENSE.txt logs NOTICE.txt README.txt sbin share tmp wcinput wcoutput

[[email protected] hadoop-3.2.2]# mkdir wordcountfile

[[email protected] hadoop-3.2.2]# ls

bin etc hdfs include lib libexec LICENSE.txt logs NOTICE.txt README.txt sbin share tmp wcinput wcoutput wordcountfile

[[email protected] hadoop-3.2.2]# cd wordcountfile

[[email protected] wordcountfile]# vi wordfile.txt

[[email protected] wordcountfile]# cat wordfile.txt

i like python

python like spark

Spark link hadoop

[[email protected] wordcountfile]#

We have now created the wordfile.txt file under the Hadoop home directory. Next, we enter the command that runs the MapReduce job.
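If you would rather not use vi, the same file can be written non-interactively. The sketch below assumes a variable named `TARGET_DIR`, which is not part of the original steps; it falls back to a temporary directory so the snippet can be tried anywhere.

```shell
# Hypothetical variable: set TARGET_DIR to your own directory,
# e.g. /opt/rh/hadoop-3.2.2/wordcountfile from the transcript above.
TARGET_DIR="${TARGET_DIR:-$(mktemp -d)}"
mkdir -p "$TARGET_DIR"

# Write the three sample lines without opening an editor.
cat > "$TARGET_DIR/wordfile.txt" <<'EOF'
i like python
python like spark
Spark link hadoop
EOF

# Show what was written.
cat "$TARGET_DIR/wordfile.txt"
```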

(3) Start the virtual machine cluster with start-all.sh, call the wordcount program in Hadoop's MapReduce examples jar, and specify the path where the result files will be saved, in order to count the number of times each word appears.

[[email protected] hadoop-3.2.2]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.2.jar wordcount file:///opt/rh/hadoop-3.2.2/wordcountfile/wordfile.txt file:///opt/rh/hadoop-3.2.2/wordoutputfile

The firewall on the virtual machines needs to be turned off (systemctl stop firewalld.service), because it interferes with communication between the hosts in the cluster. If you do not give the full path of the input and output files, as in the file:// paths above, an error is reported saying that the file has not been uploaded to HDFS. Press Enter to run the command.
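The command above reads from and writes to the local file system through file:// paths. The other route is to upload the file to HDFS first and run the job on HDFS paths. A sketch of that route follows, under these assumptions: the function name and the HDFS directories /wordcount/input and /wordcount/output are made up for illustration, the cluster is already running, and you are in the Hadoop home directory.

```shell
# Sketch only: the HDFS paths are hypothetical; the jar path is the one used above.
run_wordcount_on_hdfs() {
  local_file="$1"                 # e.g. wordcountfile/wordfile.txt
  hdfs_in="/wordcount/input"      # hypothetical HDFS input directory
  hdfs_out="/wordcount/output"    # hypothetical; must not exist before the job runs

  # Upload the input, run the job, then print the result file.
  hdfs dfs -mkdir -p "$hdfs_in" &&
  hdfs dfs -put "$local_file" "$hdfs_in/" &&
  bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.2.jar \
    wordcount "$hdfs_in" "$hdfs_out" &&
  hdfs dfs -cat "$hdfs_out/part-r-00000"
}
```

It would be called as `run_wordcount_on_hdfs wordcountfile/wordfile.txt`. The job refuses to start if the output directory already exists, so remove it or pick a new name before rerunning.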

2021-04-03 21:54:23,391 INFO mapreduce.Job: map 0% reduce 0%

2021-04-03 21:54:29,850 INFO mapreduce.Job: map 100% reduce 0%

2021-04-03 21:54:38,973 INFO mapreduce.Job: map 100% reduce 100%

2021-04-03 21:54:40,002 INFO mapreduce.Job: Job job_1617457620069_0002 completed successfully

2021-04-03 21:54:40,169 INFO mapreduce.Job: Counters: 54

Seeing this output means the wordcount program ran successfully. Let's look at the result files.

drwxr-xr-x. 2 root root 26 Apr 3 21:33 wordcountfile

drwxr-xr-x. 2 root root 88 Apr 3 21:58 wordoutputfile

[[email protected] hadoop-3.2.2]# cd wordoutputfile/

[[email protected] wordoutputfile]# ls

part-r-00000 _SUCCESS

We open the part-r-00000 file to see our results.

[[email protected] wordoutputfile]# cat part-r-00000

Spark 1

hadoop 1

i 1

like 2

link 1

python 2

spark 1

[[email protected] wordoutputfile]#
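One quick sanity check, which is my own addition rather than part of the original steps: the counts should add up to the number of words in the input, which is 9 here (three lines of three words). The sketch recreates the output above in a temporary file; the variable name `RESULT` is an assumption, and on the virtual machine you would point it at the real part-r-00000 instead.

```shell
# Recreate the part-r-00000 content shown above in a temporary file
# (on the virtual machine, point RESULT at the real file instead).
RESULT="$(mktemp)"
printf 'Spark\t1\nhadoop\t1\ni\t1\nlike\t2\nlink\t1\npython\t2\nspark\t1\n' > "$RESULT"

# Sum the second (tab-separated) column: the total number of words counted.
total=$(awk -F'\t' '{ sum += $2 } END { print sum }' "$RESULT")
echo "total words counted: $total"   # prints "total words counted: 9"
```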

The result matches the one in the flow chart above. You may run into errors during execution.

But don't give up. If you hit a problem, search online, or ask me, although I may not know the answer either.

边栏推荐

- El upload upload file (manual upload, automatic upload, upload progress)

- [punch in - Blue Bridge Cup] day 3 --- slice in reverse order list[: -1]

- Day 11 script and game AI

- ReSharper 7. Can X be used with vs2013 preview? [off] - can resharper 7 x be used with VS2013 preview? [closed]

- 知识点滴 - 如何用3个简单的技巧在销售中建立融洽的关系

- lego_loam 代码阅读与总结

- el-upload上传文件(手动上传,自动上传,上传进度)

- Radiant energy, irradiance and radiance

- Thingsboard tutorial (II and III): calculating the temperature difference between two devices in a regular chain

- 云原生——Web实时通信技术之Websocket

猜你喜欢

el-upload上傳文件(手動上傳,自動上傳,上傳進度)

lego_ Reading and summary of loam code

第十天 数据的保存与加载

Huawei cloud native - data development and datafactory

An error occurs when sqlyog imports the database. Please help solve it!

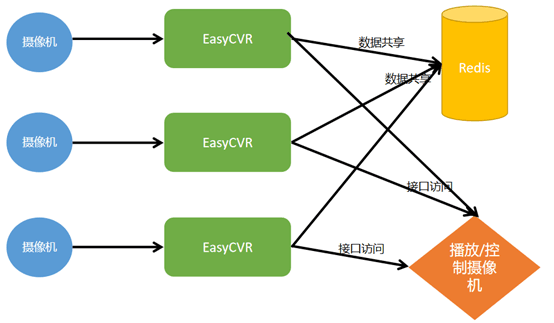

EasyCVR部署服务器集群时,出现一台在线一台不在线是什么原因?

![[note] May 23, 2022 MySQL](/img/a1/dd71610236729e1d25c4c3e903c0e0.png)

[note] May 23, 2022 MySQL

Error encountered in SQL statement, solve

(04).NET MAUI实战 MVVM

Day 9 script and resource management

随机推荐

Node-RED系列(二八):基于OPC UA节点与西门子PLC进行通讯

学校实训要做一个注册页面,要打开数据库把注册页面输入的内容存进数据库但是

Jour 9 Gestion des scripts et des ressources

Selenium environment installation, 8 elements positioning --01

基于海康EhomeDemo工具排查公网部署出现的视频播放异常问题

Postman learning sharing

[note] on May 28, 2022, data is obtained from the web page and written into the database

绿色新动力,算力“零”负担——JASMINER X4系列火爆热销中

《机器人SLAM导航核心技术与实战》第1季:第0章_SLAM发展综述

lego_loam 代码阅读与总结

使用IDEAL连接数据库,运行出来了 结果显示一些警告,这部分怎么处理

Ananagrams(UVA156)

Magical Union

Vscode+anaconda+jupyter reports an error: kernel did with exit code

第九天 脚本與資源管理

How to analyze and solve the problem of easycvr kernel port error through process startup?

Linear interpolation of spectral response function

What does the hyphen mean for a block in Twig like in {% block body -%}?

SQL server2005中SUM函数中条件筛选(IF)语法报错

Troubleshoot abnormal video playback problems in public network deployment based on Haikang ehomedemo tool