
Introduction to BERT and ViT

2022-06-29 04:27:00 【Binary artificial intelligence】


A brief introduction to BERT and ViT

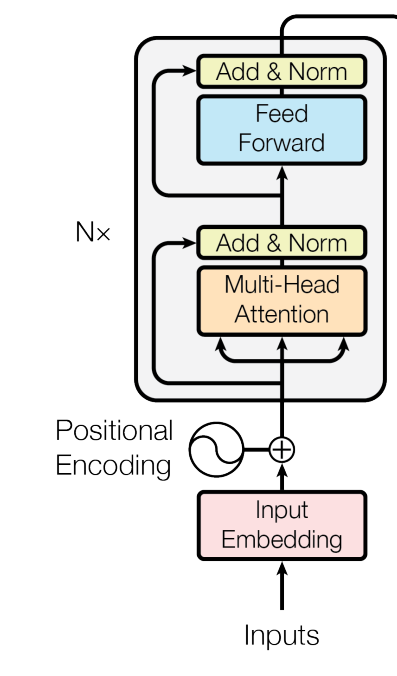

BERT (Bidirectional Encoder Representations from Transformers) is a language model, and ViT (Vision Transformer) is a vision model. Both use the Transformer encoder.

BERT

BERT takes the word vectors of a text as input and outputs a semantic representation of that text. Once pre-trained, BERT can be used for a variety of language processing tasks.

BERT pre-training:

(1) Task 1: Masked Language Model (MLM)

Content: fill in the blanks.

Purpose: train the model to learn deep, bidirectional representations of sentences, so that a word can be inferred from the context on its left as well as from the context on its right.

Method:

Randomly mask words in a sentence by replacing them with [MASK].

Then feed the sentence into BERT to obtain its representation.

Finally, feed the representation corresponding to each [MASK] into a multi-class linear classifier, which predicts the word that fills the blank.
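The masking step can be sketched in a few lines of Python. This is a minimal illustration, not BERT's actual preprocessing: the function name `mask_tokens` and the toy sentence are made up here, the default rate of 0.15 follows the 15% figure in the BERT paper rather than anything stated in this article, and the paper's extra rule of sometimes keeping or randomly replacing a chosen token is left out.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Replace a random subset of tokens with [MASK].

    Returns the masked sequence and a dict {position: original token};
    the dict holds the fill-in-the-blank targets the classifier must predict.
    """
    rng = random.Random(seed)  # seeded so the example is reproducible
    masked, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets[i] = tok
        else:
            masked.append(tok)
    return masked, targets

# Toy sentence (hypothetical); a higher rate is used so a short sentence is likely to get a blank.
tokens = "the cat sat on the mat".split()
masked, targets = mask_tokens(tokens, mask_prob=0.3, seed=1)
print(masked, targets)
```

During pre-training, only the positions stored in `targets` would contribute to the classifier's loss.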

(2) Task 2: Next Sentence Prediction (NSP)

Content: judge whether two sentences are consecutive.

Purpose: train the model to understand the relationship between sentences.

Method:

Two sentences (for example, "Wake up!" and "You don't have a sister") are separated by the [SEP] token and fed into BERT together with [CLS]. The representation corresponding to [CLS] is passed to a binary classifier, which judges whether the two sentences are consecutive. Note that because BERT is built on attention, [CLS] can be placed anywhere in the input and still gather information from all the other inputs.
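As a rough sketch of how such an input could be assembled, the snippet below joins two tokenized sentences with [CLS] and [SEP]. The helper name `build_pair_input` is hypothetical. The trailing [SEP] and the segment ids (0 for the first sentence, 1 for the second) follow the layout described in the BERT paper and are not mentioned in this article.

```python
def build_pair_input(sentence_a, sentence_b):
    """Assemble a sentence pair as: [CLS] A [SEP] B [SEP].

    Also returns segment ids marking which sentence each token belongs to
    (an assumption taken from the BERT paper, not from this article).
    """
    tokens = ["[CLS]"] + sentence_a + ["[SEP]"] + sentence_b + ["[SEP]"]
    segment_ids = [0] * (len(sentence_a) + 2) + [1] * (len(sentence_b) + 1)
    return tokens, segment_ids

pair_tokens, pair_segments = build_pair_input(
    ["wake", "up"], ["you", "do", "not", "have", "a", "sister"]
)
print(pair_tokens)
print(pair_segments)
```

The binary classifier would then read only the encoder output at the position of [CLS], which is index 0 in this layout.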

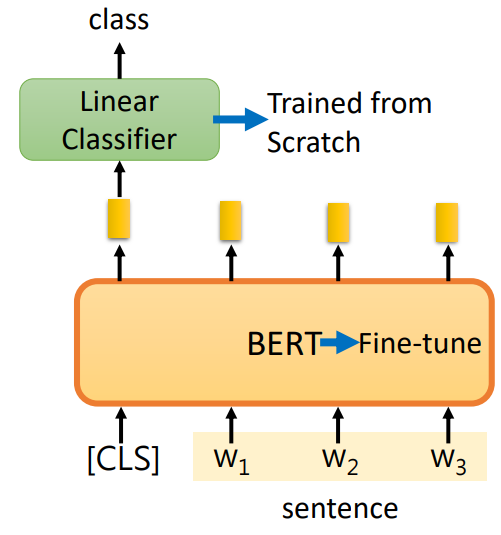

Using pre-trained BERT:

(1) Input a sentence and output a category.

The linear classifier is trained from scratch, while BERT's parameters are fine-tuned.

(2) Input a sentence and classify each word in it (for example, as a verb, noun, or pronoun).

Likewise, the linear classifier is trained from scratch, while BERT's parameters are fine-tuned.

(3) Input two sentences and output a category (for example, judging whether a certain relationship holds between the two sentences).

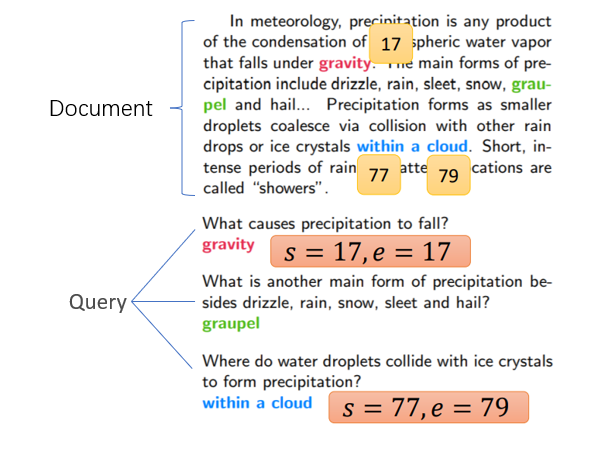

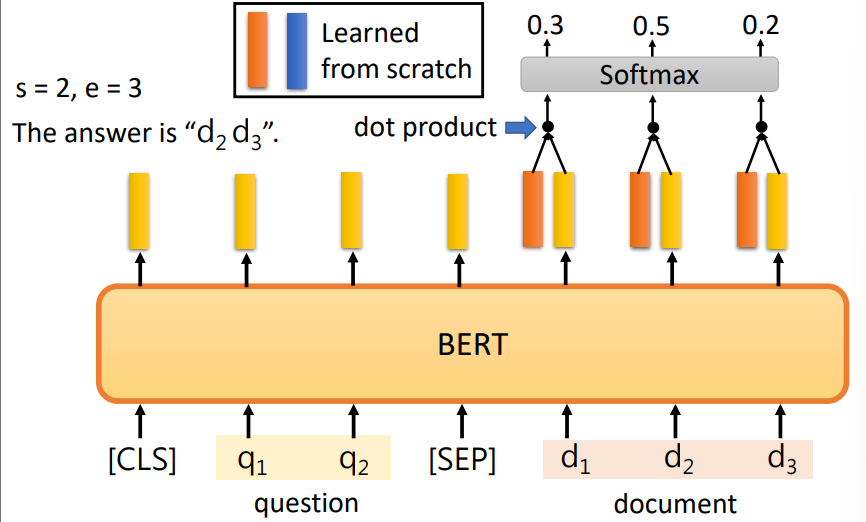
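Cases (1) to (3) share one pattern: a small linear classifier sits on top of the encoder's output vectors. The sketch below illustrates that pattern with made-up numbers. `token_reprs` stands in for BERT's output, and the weights are fixed by hand rather than learned, so every value here is hypothetical.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def linear_classifier(vec, weights, bias):
    """weights has one row per class; returns class probabilities."""
    logits = [sum(w * x for w, x in zip(row, vec)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)

# Hypothetical encoder output: one 3-dimensional vector per input position.
token_reprs = [
    [0.2, 0.1, -0.3],   # [CLS]
    [1.0, 0.0, 0.5],    # first word
    [-0.4, 0.9, 0.1],   # second word
]
weights = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]  # 2 classes, hand-picked
bias = [0.0, 0.0]

# Cases (1) and (3): one category for the whole input, read from [CLS].
sentence_probs = linear_classifier(token_reprs[0], weights, bias)

# Case (2): the same kind of classifier applied to every word position.
token_probs = [linear_classifier(v, weights, bias) for v in token_reprs[1:]]
token_labels = [p.index(max(p)) for p in token_probs]
print(sentence_probs, token_labels)
```

In real fine-tuning, `weights` and `bias` would start from random values and be trained together with the encoder.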

(4) Reading comprehension: input a document and a query, and output the answer to the query.

The output $(s, e)$ indicates that the answer is the content from the $s$-th word to the $e$-th word of the document (inclusive).

Two trainable parameter vectors are each dot-multiplied with the representations corresponding to the document, and Softmax then selects the position with the highest probability. One vector yields the start position $s$, the other the end position $e$.

| Position | Parameter vector |
|---|---|
| Start position | Orange |
| End position | Blue |
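The start and end selection can be sketched as follows. The document representations and both parameter vectors are toy values chosen for illustration, `predict_span` is a hypothetical name, and the returned indices are 0-based.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def predict_span(doc_reprs, start_vec, end_vec):
    """Score every document position with each parameter vector,
    apply softmax, and return the most probable (start, end) indices."""
    start_probs = softmax([dot(start_vec, h) for h in doc_reprs])
    end_probs = softmax([dot(end_vec, h) for h in doc_reprs])
    s = start_probs.index(max(start_probs))
    e = end_probs.index(max(end_probs))
    return s, e

# Hypothetical encoder outputs for a 4-word document (2-dimensional for brevity).
doc_reprs = [[0.1, 0.0], [0.9, 0.2], [0.3, 0.8], [0.0, 0.1]]
start_vec = [1.0, 0.0]  # stands in for the "orange" vector
end_vec = [0.0, 1.0]    # stands in for the "blue" vector

s, e = predict_span(doc_reprs, start_vec, end_vec)
print(s, e)
```

This sketch picks the two positions independently, so nothing prevents `e` from coming before `s`; handling that case is left out here.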

ViT

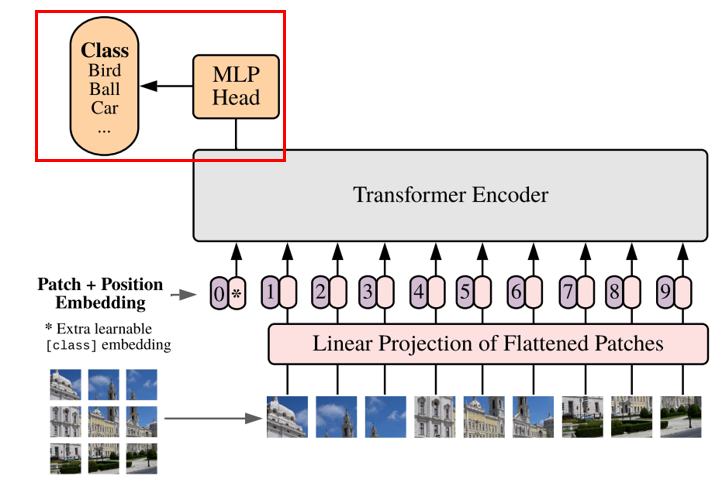

Like BERT, ViT uses the Transformer encoder. Because it processes image data, the input needs some special handling: ViT splits the input image into patches and vectorizes them, so that the same encoder used for word vectors can be applied.

(1) Divide the image into a sequence of small patches; each patch plays the role of a word in a sentence.

(2) Flatten each patch into a vector and map it linearly with a linear transformation matrix.

(3) As with BERT's [CLS], ViT also adds a class vector, marked * in the figure. Position information is then added to each vector.

(4) Feed the sequence into the Transformer encoder.

(5) Finally, classify, in the same way as with BERT.

Note that ViT's pre-training task is also classification.
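Steps (1) to (3) can be sketched in plain Python on a tiny single-channel image. Everything concrete here is an assumption for illustration: the 4x4 image, the 2x2 patch size, and the hand-picked projection matrix, class vector, and position vectors. In ViT these last three are learned parameters. The sketch also assumes the image height and width are divisible by the patch size.

```python
def split_into_patches(image, patch):
    """Cut an H x W image (list of rows) into patch x patch blocks,
    in row-major order, and flatten each block into a vector."""
    h, w = len(image), len(image[0])
    patches = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            flat = [image[r][c]
                    for r in range(top, top + patch)
                    for c in range(left, left + patch)]
            patches.append(flat)
    return patches

def linear_map(vec, matrix):
    """Multiply a matrix (list of rows) by a flattened patch vector."""
    return [sum(m * x for m, x in zip(row, vec)) for row in matrix]

def embed_image(image, patch, proj, cls_vec, pos):
    """Project the patches, prepend the class vector, add position vectors."""
    tokens = [linear_map(p, proj) for p in split_into_patches(image, patch)]
    tokens = [cls_vec] + tokens
    return [[t + q for t, q in zip(tok, pos_vec)]
            for tok, pos_vec in zip(tokens, pos)]

image = [[r * 4 + c for c in range(4)] for r in range(4)]  # toy 4x4 image
proj = [[1, 0, 0, 0], [0, 0, 0, 1]]   # maps 4 pixel values to 2 dimensions
cls_vec = [0, 0]                       # stands in for the class vector (*)
pos = [[i, i] for i in range(5)]       # one position vector per sequence slot

sequence = embed_image(image, 2, proj, cls_vec, pos)
print(sequence)
```

The resulting `sequence` has one entry for the class vector plus one per patch, and is what would be fed to the Transformer encoder in step (4).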

[1] Machine Learning, Hung-yi Lee (Li Hongyi), http://speech.ee.ntu.edu.tw/~tlkagk/courses_ML19.html

[2] BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding, https://arxiv.org/pdf/1810.04805v2.pdf

[3] An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale, https://arxiv.org/abs/2010.11929
