
Transformer variants (routing transformer, linformer, big bird)

2022-07-25 12:02:00, Shangshanxianger

This post continues the two previous articles in this series:

- Transformer variants (Sparse Transformer, Longformer, Switch Transformer)

- Transformer variants (Star-Transformer, Transformer-XL)

Efficient Content-Based Sparse Attention with Routing Transformers

The models in the previous two posts share one goal with Routing Transformer: reducing the time complexity of the standard Transformer. Routing Transformer frames this as a routing problem. The model learns to select sparse clusters of tokens, and the clustering depends on the content of each key and query, not only on their absolute or relative positions. Tokens with similar content end up in the same cluster and attend only to each other, which speeds up the computation.

The figure above compares routing attention with other attention patterns. Each row represents an output and each column an input. In panels (a) and (b), the shaded squares mark the inputs each output row attends to. For routing attention, the colors show which cluster each output token belongs to.

The method works as follows. Queries and keys are projected with a shared random weight matrix $W_R$:

$$R = [Q, K]\,[W_R, W_R]^\top$$

k-means then groups the vectors in $R$ into $k$ clusters, and each query attends only to the keys in its own cluster. For a query $i$ assigned to cluster $C_k$, the output is the attention-weighted sum of the values in that cluster:

$$X'_i = \sum_{j \in C_k} A_{ij} V_j$$
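The sketch below shows routing attention in pure Python, for illustration only. It makes several assumptions. It uses a single head and no causal masking. It runs plain Lloyd's k-means on the fly, whereas the paper learns shared centroids with an online k-means update. The helper names (`matmul`, `kmeans`, `routing_attention`) and the projection width `proj_dim` are hypothetical. The sketch reads $[Q, K][W_R, W_R]^\top$ as projecting the rows of both $Q$ and $K$ with the same $W_R$, so queries and keys are clustered against the same centroids.

```python
import math
import random

def matmul(a, b):
    """Multiply an (m x p) matrix by a (p x q) matrix, both given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def kmeans(points, k, iters=10, seed=0):
    """Plain Lloyd's k-means (an assumption; the paper uses online k-means). Returns a label per point."""
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment step: nearest centroid by squared Euclidean distance.
        labels = [min(range(k), key=lambda c: sum((x - y) ** 2 for x, y in zip(p, centroids[c])))
                  for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels

def routing_attention(Q, K, V, k, proj_dim=2, seed=0):
    """Each query attends only to the keys in its own cluster (single head, no causal mask)."""
    n, d = len(Q), len(Q[0])
    rng = random.Random(seed)
    # Shared random projection W_R (d x proj_dim), applied to both queries and keys.
    W_R = [[rng.gauss(0.0, 1.0) for _ in range(proj_dim)] for _ in range(d)]
    R = matmul(Q + K, W_R)            # rows 0..n-1: projected queries; rows n..2n-1: projected keys
    labels = kmeans(R, k, seed=seed)  # queries and keys share the same centroids
    q_lab, k_lab = labels[:n], labels[n:]
    out = []
    for i in range(n):
        members = [j for j in range(n) if k_lab[j] == q_lab[i]]  # keys in query i's cluster C_k
        if not members:                # no keys in this cluster: nothing to attend to
            out.append([0.0] * len(V[0]))
            continue
        scores = [sum(q * kk for q, kk in zip(Q[i], K[j])) / math.sqrt(d) for j in members]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        z = sum(weights)
        # X'_i = sum over j in C_k of A_ij * V_j, where A_ij are the softmax weights.
        out.append([sum(w / z * V[j][c] for w, j in zip(weights, members))
                    for c in range(len(V[0]))])
    return out, q_lab, k_lab

Q = K = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
V = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
out, q_lab, k_lab = routing_attention(Q, K, V, k=2)
print(q_lab, k_lab)  # cluster id of each query and each key
```

Queries and keys share centroids, so a query and a key that lie close together after projection fall into the same cluster. Each query therefore scores only the roughly $n/k$ keys in its cluster instead of all $n$.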

Finally, the authors use $\sqrt{n}$ clusters, which reduces the time complexity to $O(n\sqrt{n})$. See the original paper and the released code for details:

- paper: https://arxiv.org/abs/2003.05997

- code: https://storage.googleapis.com/routing_transformers_iclr20/
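Why $\sqrt{n}$ clusters? The following back-of-the-envelope argument is a sketch, not the paper's own derivation. It assumes the $n$ tokens split into $k$ equally sized clusters and ignores the hidden dimension. Under those assumptions, assigning every vector to its nearest centroid costs about $nk$. Dense attention inside each cluster of size $n/k$ costs $k\,(n/k)^2 = n^2/k$:

$$
\text{cost}(k) \approx nk + \frac{n^2}{k} \;\ge\; 2\sqrt{nk \cdot \frac{n^2}{k}} = 2n^{3/2}
$$

By the AM-GM inequality, equality holds when $nk = n^2/k$, that is, when $k = \sqrt{n}$. With that choice both terms equal $n\sqrt{n}$, which gives the $O(n\sqrt{n})$ complexity. For example, with $n = 4096$ this gives $k = 64$, and each cluster holds about 64 tokens.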

Linformer: Self-Attention with Linear Complexity

From $O(n^2)$ to $O(n)$! The authors first show, both theoretically and empirically, that the stochastic matrix formed by self-attention can be approximated by a low-rank matrix. They therefore add linear projections that split the original scaled dot-product attention into several smaller attention operations. Combined, these smaller operations form a low-rank factorization of standard attention. As shown in the figure above, two linear projection matrices $E_i, F_i \in \mathbb{R}^{k \times n}$ are applied to the keys $K$ and values $V$. Each head's attention map is then $n \times k$ rather than $n \times n$:

$$\text{head}_i = \text{Attention}(QW_i^Q, E_iKW_i^K, F_iVW_i^V) = \text{softmax}\left(\frac{QW_i^Q (E_iKW_i^K)^\top}{\sqrt{d_k}}\right) \cdot F_iVW_i^V$$

For a fixed projected dimension $k$, the cost is $O(nk)$, which is linear in $n$.
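The sketch below implements the Linformer formula for a single head in plain Python. It assumes $QW_i^Q$, $KW_i^K$ and $VW_i^V$ have already been computed. $E$ and $F$ are random here purely for illustration; in the model they are learned. The function and variable names are hypothetical. The key thing to notice is the shape of the attention map: $n \times k$ instead of $n \times n$.

```python
import math
import random

def matmul(a, b):
    """Multiply an (m x p) matrix by a (p x q) matrix, both given as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a):
    return [list(col) for col in zip(*a)]

def softmax_rows(s):
    """Row-wise softmax, subtracting the row max for numerical stability."""
    out = []
    for row in s:
        m = max(row)
        e = [math.exp(x - m) for x in row]
        z = sum(e)
        out.append([x / z for x in e])
    return out

def linformer_head(QW, KW, VW, E, F):
    """One Linformer attention head.

    QW, KW, VW: n x d_k matrices, i.e. Q W_i^Q, K W_i^K, V W_i^V already computed.
    E, F: k x n matrices that project along the sequence (length) dimension.
    """
    d_k = len(QW[0])
    K_proj = matmul(E, KW)                  # E_i K W_i^K: k x d_k
    V_proj = matmul(F, VW)                  # F_i V W_i^V: k x d_k
    scores = matmul(QW, transpose(K_proj))  # n x k instead of n x n
    P = softmax_rows([[s / math.sqrt(d_k) for s in row] for row in scores])
    return matmul(P, V_proj), P             # output is n x d_k

def rand_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
n, d_k, k = 8, 4, 2
QW, KW, VW = (rand_matrix(n, d_k, rng) for _ in range(3))
E, F = rand_matrix(k, n, rng), rand_matrix(k, n, rng)  # random here; learned in the model
out, P = linformer_head(QW, KW, VW, E, F)
print(len(P), len(P[0]))  # 8 2: the attention map is n x k
```

Setting $k = n$ and $E = F = I$ recovers standard scaled dot-product attention, which makes a handy sanity check.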

Linformer also offers three levels of parameter sharing:

- Headwise: within a layer, all attention heads share the projection matrices, i.e. $E_i = E$, $F_i = F$.

- Key-value: in addition, keys and values share the same projection, i.e. $E_i = F_i = E$ for every head.

- Layerwise: a single projection matrix $E$ is shared across all layers, all heads, and both keys and values.
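To make the three sharing levels concrete, the hypothetical helper below counts how many projection parameters each scheme needs. It assumes every $E$/$F$ matrix is $k \times n$. The `"none"` mode, with separate matrices for every head and layer, is only a baseline for comparison and is not one of the three levels listed above.

```python
def projection_params(n, k, heads, layers, sharing="none"):
    """Count the entries of all Linformer E/F projection matrices (each k x n).

    "none" (a separate E_i and F_i for every head in every layer) is a
    hypothetical baseline, included only for comparison.
    """
    copies = {
        "none": 2 * heads * layers,  # E_i and F_i per head, per layer
        "headwise": 2 * layers,      # E_i = E, F_i = F within each layer
        "key-value": layers,         # E_i = F_i = E within each layer
        "layerwise": 1,              # one E for all heads, layers, keys and values
    }
    if sharing not in copies:
        raise ValueError(f"unknown sharing mode: {sharing!r}")
    return copies[sharing] * k * n

for mode in ("none", "headwise", "key-value", "layerwise"):
    print(mode, projection_params(n=512, k=128, heads=8, layers=12, sharing=mode))
```

The settings here are illustrative and not taken from the paper: $n = 512$, $k = 128$, 8 heads and 12 layers. With them, the loop prints 12582912, 1572864, 786432 and 65536 respectively. Each level of sharing cuts the projection parameters further, down to a single $k \times n$ matrix.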

See the original paper for the full details, including the theoretical proof of the low-rank property and its analysis:

- paper: https://arxiv.org/abs/2006.04768

- code (unofficial PyTorch implementation): https://github.com/tatp22/linformer-pytorch

Big Bird: Transformers for Longer Sequences

BigBird also uses sparse attention and reduces the complexity to linear, i.e. $O(n)$. As shown in the figure above, BigBird's attention consists of three parts:

- Random attention. As in panel (a), each token $i$ attends to $r$ randomly selected tokens.

- Window (local) attention. As in panel (b), each token attends to the tokens in a sliding window around it, which captures local context.

- Global attention. As in panel (c), a few global tokens attend to every token and are attended to by every token, which captures global context. Similar mechanisms also appear in Longformer; refer to the corresponding paper.
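The three patterns can be combined into the neighbor sets $N(i)$ that appear in the attention formula. The sketch below works at the token level and uses hypothetical parameter names (`window`, `num_random`, `global_tokens`). BigBird's actual implementation works on blocks of tokens rather than individual tokens, for hardware efficiency.

```python
import random

def bigbird_neighbors(n, window=3, num_random=2, global_tokens=(0,), seed=0):
    """Return N(i), the sorted key indices that query i attends to.

    Token-level sketch combining the three BigBird patterns: a sliding
    window, a few global tokens, and num_random random keys per query.
    """
    rng = random.Random(seed)
    half = window // 2
    g = set(global_tokens)
    neighbors = []
    for i in range(n):
        if i in g:
            neighbors.append(list(range(n)))  # (c) a global token attends to every token
            continue
        s = set(range(max(0, i - half), min(n, i + half + 1)))  # (b) sliding window
        s |= g                                                 # (c) every token attends to global tokens
        candidates = [j for j in range(n) if j not in s]
        s.update(rng.sample(candidates, min(num_random, len(candidates))))  # (a) random keys
        neighbors.append(sorted(s))
    return neighbors

N = bigbird_neighbors(16)
density = sum(len(row) for row in N) / 16 ** 2
print(N[8])
print(f"fraction of the full 16 x 16 matrix computed: {density:.2f}")
```

For $n = 16$, this computes 104 of the 256 query-key pairs. As $n$ grows with fixed window, random and global sizes, the count grows linearly rather than quadratically.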

Finally, the three kinds of attention are combined into a single attention matrix $A$; panel (d) shows the resulting BigBird pattern. For each token $x_i$, the output is:

$$\text{ATTN}(X)_i = x_i + \sum_{h=1}^{H} \sigma\left(Q_h(x_i)\, K_h(X_{N(i)})^\top\right) \cdot V_h(X_{N(i)})$$

Here $H$ is the number of heads, and $N(i)$ is the set of tokens that token $i$ attends to (the union of the three sparse patterns). $\sigma$ is a scoring function such as softmax. $Q_h$, $K_h$ and $V_h$ are the familiar query, key and value functions of head $h$.
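The sketch below transcribes the BigBird attention formula directly. It takes $\sigma$ to be softmax and omits any $1/\sqrt{d}$ scaling, because the formula as written has none. Each head is a hypothetical triple of weight matrices $(W_Q, W_K, W_V)$ standing in for $Q_h$, $K_h$, $V_h$. $W_V$ must map back to the input width so that the residual $x_i$ can be added. The neighbor sets are passed in, so any sparse pattern can be plugged in, such as the union of the three patterns above.

```python
import math

def softmax(xs):
    m = max(xs)
    e = [math.exp(x - m) for x in xs]
    z = sum(e)
    return [x / z for x in e]

def linear(W, x):
    """Apply a (d_out x d_in) matrix W to a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def sparse_attention(X, neighbors, heads):
    """ATTN(X)_i = x_i + sum_h softmax(Q_h(x_i) K_h(X_N(i))^T) V_h(X_N(i)).

    neighbors[i] is the list N(i); heads is a list of (W_Q, W_K, W_V) triples,
    hypothetical stand-ins for Q_h, K_h, V_h. W_V must map back to len(x_i).
    """
    out = []
    for i, x_i in enumerate(X):
        acc = list(x_i)  # residual term x_i
        for W_Q, W_K, W_V in heads:
            q = linear(W_Q, x_i)
            keys = [linear(W_K, X[j]) for j in neighbors[i]]
            vals = [linear(W_V, X[j]) for j in neighbors[i]]
            # sigma = softmax over the |N(i)| scores only, not over all n tokens
            w = softmax([sum(a * b for a, b in zip(q, kj)) for kj in keys])
            for c in range(len(acc)):
                acc[c] += sum(wj * vj[c] for wj, vj in zip(w, vals))
        out.append(acc)
    return out

X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
neighbors = [[0, 1], [1, 2], [0, 1, 2]]  # hypothetical N(i), for illustration only
print(sparse_attention(X, neighbors, heads=[(identity, identity, identity)]))
```

If every $N(i)$ contained all tokens, this would be ordinary (unscaled) full attention with a residual connection. Restricting $N(i)$ to a bounded size for every non-global token is what brings the cost down to $O(n)$.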

- paper: https://arxiv.org/abs/2007.14062

边栏推荐

- 【图攻防】《Backdoor Attacks to Graph Neural Networks 》(SACMAT‘21)

- JS中的数组

- JS data types and mutual conversion

- Power BI----这几个技能让报表更具“逼格“

- Brpc source code analysis (V) -- detailed explanation of basic resource pool

- 【CTR】《Towards Universal Sequence Representation Learning for Recommender Systems》 (KDD‘22)

- Intelligent information retrieval(智能信息检索综述)

- 奉劝那些刚参加工作的学弟学妹们:要想进大厂,这些并发编程知识是你必须要掌握的!完整学习路线!!(建议收藏)

- Attendance system based on w5500

- What is the global event bus?

猜你喜欢

Hardware connection server TCP communication protocol gateway

![[leetcode brush questions]](/img/86/5f33a48f2164452bc1e14581b92d69.png)

[leetcode brush questions]

Return and finally? Everyone, please look over here,

Application and innovation of low code technology in logistics management

Teach you how to configure S2E to UDP working mode through MCU

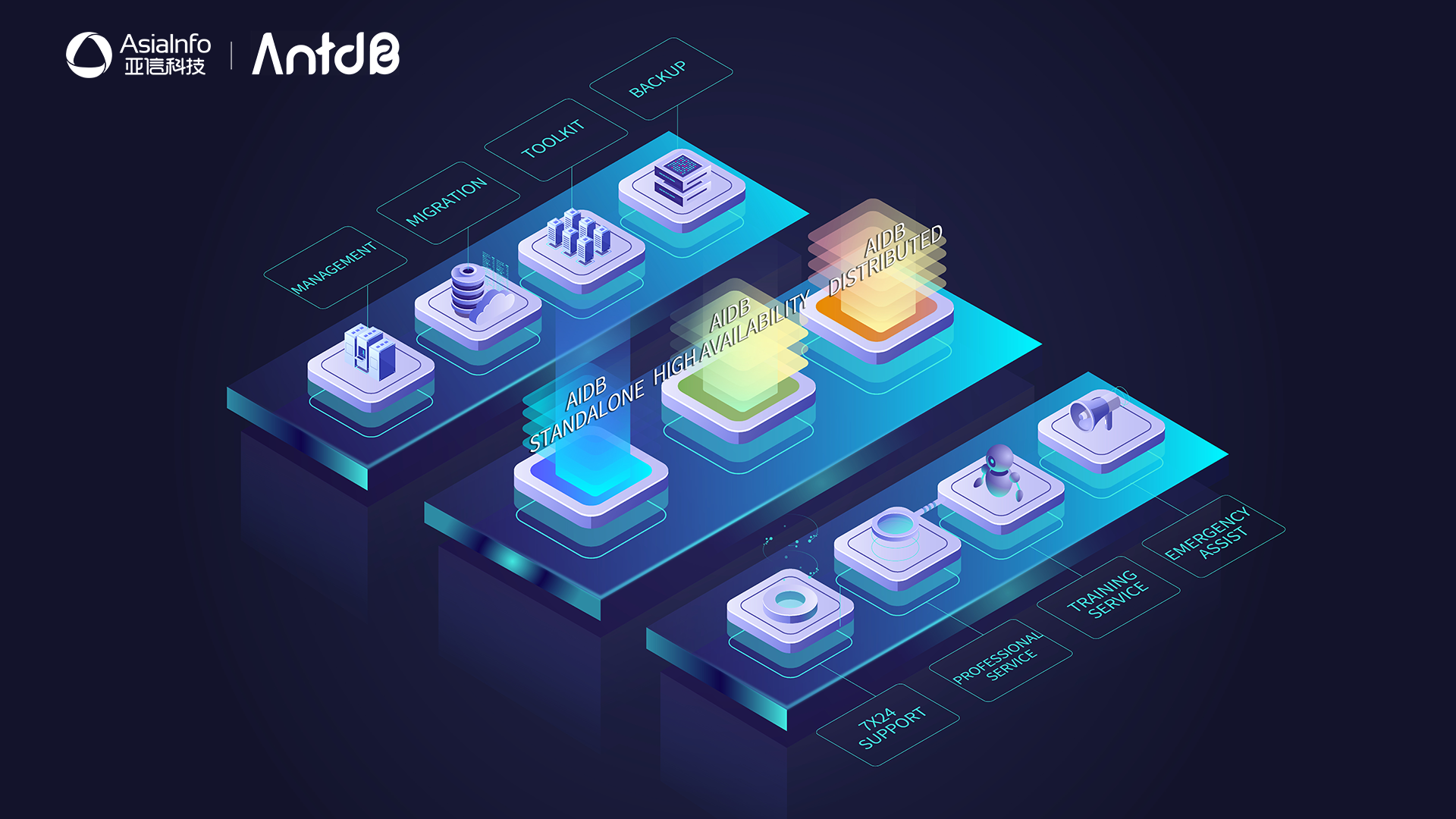

Innovation and breakthrough! AsiaInfo technology helped a province of China Mobile complete the independent and controllable transformation of its core accounting database

Zero-Shot Image Retrieval(零样本跨模态检索)

GPT plus money (OpenAI CLIP,DALL-E)

浅谈低代码技术在物流管理中的应用与创新

【RS采样】A Gain-Tuning Dynamic Negative Sampler for Recommendation (WWW 2022)

随机推荐

Pycharm connects to the remote server SSH -u reports an error: no such file or directory

[high concurrency] Why is the simpledateformat class thread safe? (six solutions are attached, which are recommended for collection)

已解决The JSP specification requires that an attribute name is preceded by whitespace

PHP curl post x-www-form-urlencoded

MySQL historical data supplement new data

Attendance system based on w5500

Hardware connection server TCP communication protocol gateway

【无标题】

【AI4Code】《IntelliCode Compose: Code Generation using Transformer》 ESEC/FSE 2020

JS中的对象

JS 面试题:手写节流(throttle)函数

pycharm连接远程服务器ssh -u 报错:No such file or directory

Make a reliable delay queue with redis

Classification parameter stack of JS common built-in object data types

【CTR】《Towards Universal Sequence Representation Learning for Recommender Systems》 (KDD‘22)

[MySQL 17] installation exception: could not open file '/var/log/mysql/mysqld log‘ for error logging: Permission denied

brpc源码解析(二)—— brpc收到请求的处理过程

一文入门Redis

Web APIs(获取元素 事件基础 操作元素)

Brpc source code analysis (VII) -- worker bthread scheduling based on parkinglot