
Learning Scrapy

2022-06-26 07:05:00 【Rorschach379】

douban.py

scrapy genspider douban movie.douban.com

scrapy genspider taobao www.taobao.com

import scrapy
from scrapy import Selector, Request
from scrapy.http import HtmlResponse

from spider2107.items import MovieItem


class DoubanSpider(scrapy.Spider):
    name = 'douban'
    allowed_domains = ['movie.douban.com']

    def start_requests(self):
        # Request 10 list pages; `start` is the offset of the first movie on each page.
        for page in range(10):
            yield Request(url=f'https://movie.douban.com/top250?start={page * 25}&filter=')

    def parse(self, response: HtmlResponse):
        sel = Selector(response)
        # Each <li> in the ordered list holds one movie.
        lis = sel.css('#content > div > div.article > ol > li')
        for li in lis:  # type: Selector
            movie_item = MovieItem()
            movie_item['title'] = li.css('span.title::text').extract_first()
            movie_item['rank'] = li.css('span.rating_num::text').extract_first()
            movie_item['subject'] = li.css('span.inq::text').extract_first()
            yield movie_item
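The `start_requests` method pages through the list by offset. As a minimal sketch in plain Python, the ten URLs it requests can be listed as follows. The helper name `top250_urls` and its parameters are hypothetical; the page count and the step of 25 come from `range(10)` and `page * 25` in the spider.

```python
def top250_urls(pages: int = 10, page_size: int = 25) -> list[str]:
    """Build the paginated list URLs the spider requests (hypothetical helper)."""
    base = 'https://movie.douban.com/top250'
    # The `start` query parameter is the offset of the first entry on the page.
    return [f'{base}?start={page * page_size}&filter=' for page in range(pages)]


if __name__ == '__main__':
    for url in top250_urls():
        print(url)
```

Keeping the URL construction in one place makes it easy to check the offsets before sending any requests.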

items.py

# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class MovieItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    title = scrapy.Field()
    rank = scrapy.Field()
    subject = scrapy.Field()

settings.py

# Scrapy settings for spider2107 project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://docs.scrapy.org/en/latest/topics/settings.html
#     https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://docs.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'spider2107'

SPIDER_MODULES = ['spider2107.spiders']
NEWSPIDER_MODULE = 'spider2107.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
USER_AGENT = 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.110 Safari/537.36'

# Obey robots.txt rules
ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)
CONCURRENT_REQUESTS = 2

# Configure a delay for requests for the same website (default: 0)
# See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
DOWNLOAD_DELAY = 2
# The download delay setting will honor only one of:
# CONCURRENT_REQUESTS_PER_DOMAIN = 16
# CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
# COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
# TELNETCONSOLE_ENABLED = False

# Override the default request headers:
# DEFAULT_REQUEST_HEADERS = {
#     'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#     'Accept-Language': 'en',
# }

# Enable or disable spider middlewares
# See https://docs.scrapy.org/en/latest/topics/spider-middleware.html
# SPIDER_MIDDLEWARES = {
#     'spider2107.middlewares.Spider2107SpiderMiddleware': 543,
# }

# Enable or disable downloader middlewares
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html
# DOWNLOADER_MIDDLEWARES = {
#     'spider2107.middlewares.Spider2107DownloaderMiddleware': 543,
# }

# Enable or disable extensions
# See https://docs.scrapy.org/en/latest/topics/extensions.html
# EXTENSIONS = {
#     'scrapy.extensions.telnet.TelnetConsole': None,
# }

# Configure item pipelines
# See https://docs.scrapy.org/en/latest/topics/item-pipeline.html
ITEM_PIPELINES = {
    'spider2107.pipelines.DbPipeline': 200,
    'spider2107.pipelines.Spider2107Pipeline': 300,
}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/autothrottle.html
# AUTOTHROTTLE_ENABLED = True
# The initial download delay
# AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
# AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
# AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
# AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
# HTTPCACHE_ENABLED = True
# HTTPCACHE_EXPIRATION_SECS = 0
# HTTPCACHE_DIR = 'httpcache'
# HTTPCACHE_IGNORE_HTTP_CODES = []
# HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
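The numbers in `ITEM_PIPELINES` set the order in which each item passes through the pipelines: lower values run first, so `DbPipeline` (200) sees an item before `Spider2107Pipeline` (300). A minimal sketch of that ordering in plain Python, where `pipeline_order` is a hypothetical helper and not part of Scrapy:

```python
ITEM_PIPELINES = {
    'spider2107.pipelines.DbPipeline': 200,
    'spider2107.pipelines.Spider2107Pipeline': 300,
}


def pipeline_order(pipelines: dict[str, int]) -> list[str]:
    """Return pipeline paths sorted by priority, lowest value first (hypothetical helper)."""
    return [path for path, _ in sorted(pipelines.items(), key=lambda kv: kv[1])]


if __name__ == '__main__':
    for path in pipeline_order(ITEM_PIPELINES):
        print(path)
```

Because each `process_item` returns the item, the second pipeline receives whatever the first one returned.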

Save method

scrapy crawl douban -o douban.json

scrapy crawl douban -o douban.csv

scrapy crawl douban -o douban.xml
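The `-o` option writes the scraped items to a feed file. Below is a minimal sketch of reading the JSON export back, assuming `douban.json` holds a list of objects with the `title`, `rank` and `subject` fields defined in `MovieItem`. The function name `load_movies` is hypothetical.

```python
import json


def load_movies(path: str) -> list[dict]:
    """Load exported items and sort them by rating, highest first (hypothetical helper)."""
    with open(path, encoding='utf-8') as f:
        movies = json.load(f)
    # The spider stores the rating as text, so convert it before comparing.
    return sorted(movies, key=lambda m: float(m.get('rank') or 0), reverse=True)
```

For example, `load_movies('douban.json')[:10]` would give the ten highest-rated entries from a finished crawl.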

pip freeze (lists the installed dependencies)

pipelines.py

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

import openpyxl
import pymysql


class DbPipeline:
    """Save each item to the MySQL table tb_movie."""

    def __init__(self):
        self.conn = pymysql.connect(host='localhost', port=3306,
                                    user='root', password='Abc123!!',
                                    database='hrs', charset='utf8mb4')
        self.cursor = self.conn.cursor()

    def close_spider(self, spider):
        # Commit all inserts once, when the crawl finishes.
        self.conn.commit()
        self.conn.close()

    def process_item(self, item, spider):
        title = item.get('title', '')
        rank = item.get('rank', 0)
        subject = item.get('subject', '')
        self.cursor.execute(
            'insert into tb_movie (title, rating, subject) '
            'values (%s, %s, %s)',
            (title, rank, subject)
        )
        return item


class Spider2107Pipeline:
    """Save each item as a row in an Excel workbook."""

    def __init__(self):
        self.wb = openpyxl.Workbook()
        self.ws = self.wb.active
        self.ws.title = 'Top250'
        # Header row
        self.ws.append(('Title', 'Rating', 'Subject'))

    def close_spider(self, spider):
        self.wb.save('Movie data.xlsx')

    def process_item(self, item, spider):
        title = item.get('title', '')
        rank = item.get('rank', '')
        subject = item.get('subject', '')
        self.ws.append((title, rank, subject))
        return item
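Trying `DbPipeline` requires a running MySQL server. The sketch below swaps in the standard-library `sqlite3` module so the same create/insert/commit flow can be run locally; it is an illustration, not the pipeline used in this project. The class name `SqlitePipeline`, the `db_path` argument and the column types of `tb_movie` are assumptions, since only the column names appear in the insert statement.

```python
import sqlite3


class SqlitePipeline:
    """SQLite stand-in for a database pipeline (illustrative, not the original)."""

    def __init__(self, db_path: str = 'movies.db'):
        self.db_path = db_path  # hypothetical argument; ':memory:' also works
        self.conn = None

    def open_spider(self, spider):
        self.conn = sqlite3.connect(self.db_path)
        # Assumed schema: only the column names are known from the insert statement.
        self.conn.execute(
            'create table if not exists tb_movie '
            '(title text, rating real, subject text)'
        )

    def close_spider(self, spider):
        # Commit once at the end of the crawl, then release the connection.
        self.conn.commit()
        self.conn.close()

    def process_item(self, item, spider):
        # sqlite3 uses ? placeholders where pymysql uses %s.
        self.conn.execute(
            'insert into tb_movie (title, rating, subject) values (?, ?, ?)',
            (item.get('title', ''), item.get('rank', 0), item.get('subject', '')),
        )
        return item
```

Opening the connection in `open_spider` rather than `__init__` keeps construction free of side effects, which makes the class easier to exercise outside a crawl.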

Save method (through the pipelines)

scrapy crawl douban

边栏推荐

- Development trends and prospects of acrylamide crystallization market in the world and China 2022-2027

- Procedure macros in rust

- [feature extraction] feature selection of target recognition information based on sparse PCA with Matlab source code

- Ppbpi-h-cr, ppbpimn Cr, ppbpi Fe Cr alkynyl crosslinked porphyrin based polyimide material Qiyue porphyrin reagent

- 【图像检测】基于形态学实现图像目标尺寸测量系统附matlab代码

- Excel中Unicode如何转换为汉字

- Porphyrin based polyimide (ppbpis); Synthesis of crosslinked porphyrin based polyimides (ppbpi CRS) porphyrin products supplied by Qiyue biology

- [image fusion] multimodal medical image fusion based on coupled feature learning with matlab code

- ES cluster_block_exception read_only_allow_delete问题

- Bugku exercise ---misc--- prosperity, strength and democracy

猜你喜欢

一芯实现喷雾|WS2812驱动|按键触摸|LED显示|语音播报芯片等功能,简化加湿器产品设计

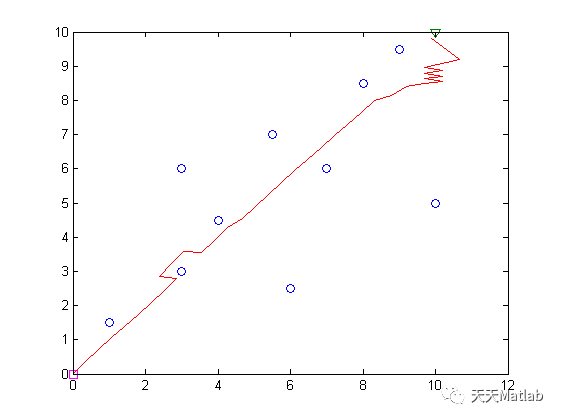

【路径规划】基于改进人工势场实现机器人路径规划附matlab代码

Pytorch builds CNN LSTM hybrid model to realize multivariable and multi step time series forecasting (load forecasting)

![[image fusion] MRI-CT image fusion based on gradient energy, local energy and PCA fusion rules with matlab code](/img/fc/fd81dedaa6b7c7542f9d74b04f247c.png)

[image fusion] MRI-CT image fusion based on gradient energy, local energy and PCA fusion rules with matlab code

![[feature extraction] feature selection of target recognition information based on sparse PCA with Matlab source code](/img/79/053e185f96aab293fde54578c75276.png)

[feature extraction] feature selection of target recognition information based on sparse PCA with Matlab source code

. Net 20th anniversary! Microsoft sends a document to celebrate

Easyar use of unity

I caught a 10-year-old Alibaba test developer in the company. After chatting with him, I realized everything

快速找到优质对象的5种渠道,赶紧收藏少走弯路

![5,10,15,20-tetra (4-methoxycarbonylphenyl) porphyrin tcmpp purple crystal; Meso-5,10,15,20-tetra (4-methoxyphenyl) porphyrin tmopp|zn[t (4-mop) p] and co[t (4-mop) p] complexes](/img/51/136eda75986fc01282558e626b2faf.jpg)

5,10,15,20-tetra (4-methoxycarbonylphenyl) porphyrin tcmpp purple crystal; Meso-5,10,15,20-tetra (4-methoxyphenyl) porphyrin tmopp|zn[t (4-mop) p] and co[t (4-mop) p] complexes

随机推荐

MySQL 'replace into' 的坑 自增id,备机会有问题

SQL

Service interface test guide

一芯实现喷雾|WS2812驱动|按键触摸|LED显示|语音播报芯片等功能,简化加湿器产品设计

China polyphenylene oxide Market Development Prospect and Investment Strategy Research Report 2022-2027

QPS

MySQL operation database

Typescript: use polymorphism instead of switch and other conditional statements

China's audio industry competition trend outlook and future development trend forecast report 2022 Edition

What is data mining?

【图像检测】基于形态学实现图像目标尺寸测量系统附matlab代码

Meso tetra (4-bromophenyl) porphyrin (tbpp); 5,10,15,20-tetra (4-methoxy-3-sulfonylphenyl) porphyrin [t (4-mop) ps4] supplied by Qiyue

Golang源码包集合

NumPy学习挑战第四关-NumPy数组属性

Numpy learning challenge level 5 - create array

I caught a 10-year-old Alibaba test developer in the company. After chatting with him, I realized everything

异地北京办理居住证详细材料

数据挖掘是什么?

一文深入底层分析Redis对象结构

[image detection] image saliency detection based on ITTI model with matlab code