
YARN: Required executor memory is above the max threshold (8192 MB) of this cluster!

2022-07-25 15:14:00 【The south wind knows what I mean】

Problem description:

When running Spark on YARN, setting the memory of a single executor above 8 GB produces the following error. The cause is the YARN configuration: by default a single NodeManager offers only 8 GB (8192 MB) of memory, which is too small for this workload, so I adjusted the settings on the cluster.

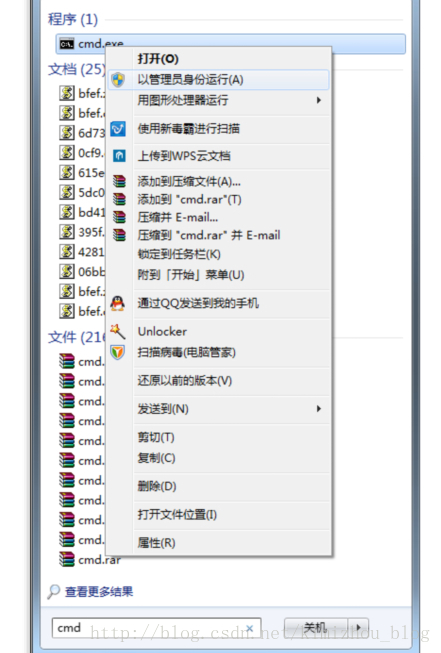
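As a rough illustration of why an 8 GB executor already crosses the 8192 MB limit: Spark asks YARN for the executor memory plus a memory overhead. The sketch below assumes Spark's default overhead rule (the larger of 384 MB and 10% of the executor memory) and ignores any PySpark or off-heap memory. The function names `required_container_mb` and `fits` are made up for this example and are not part of Spark.

```python
# Sketch: estimate the container size Spark requests from YARN per executor.
# Assumption: default overhead rule, max(384 MB, 10% of executor memory),
# with no PySpark or off-heap memory added on top.

MIN_OVERHEAD_MB = 384    # Spark's default minimum memory overhead
OVERHEAD_FACTOR = 0.10   # Spark's default overhead fraction for JVM jobs


def required_container_mb(executor_memory_mb: int) -> int:
    """Return executor memory plus the default memory overhead, in MB."""
    overhead_mb = max(MIN_OVERHEAD_MB, int(executor_memory_mb * OVERHEAD_FACTOR))
    return executor_memory_mb + overhead_mb


def fits(executor_memory_mb: int, max_allocation_mb: int) -> bool:
    """True if the request stays within the given maximum allocation."""
    return required_container_mb(executor_memory_mb) <= max_allocation_mb


if __name__ == "__main__":
    for gb in (4, 7, 8, 12):
        need = required_container_mb(gb * 1024)
        print(f"{gb}g executor needs {need} MB, fits in 8192 MB: {fits(gb * 1024, 8192)}")
```

Under these assumptions, `--executor-memory 8g` needs about 9011 MB per container, so it is rejected even though the executor memory itself equals the 8192 MB threshold.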

Key log output:

Solution:

Modify yarn-site.xml:

<property>
  <description>The minimum allocation for every container request at the RM,
  in MBs. Memory requests lower than this won't take effect,
  and the specified value will get allocated at minimum.</description>
  <name>yarn.scheduler.minimum-allocation-mb</name>
  <value>2048</value>
</property>

<property>
  <description>The maximum allocation for every container request at the RM,
  in MBs. Memory requests higher than this won't take effect,
  and will get capped to this value.</description>
  <name>yarn.scheduler.maximum-allocation-mb</name>
  <value>16384</value>
</property>

<property>
  <description>The maximum allocation for every container request at the RM,
  in terms of virtual CPU cores.</description>
  <name>yarn.scheduler.maximum-allocation-vcores</name>
  <value>16</value>
</property>
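Raising the scheduler maximum only helps if each NodeManager also offers that much memory. The error message Spark prints names both `yarn.scheduler.maximum-allocation-mb` and `yarn.nodemanager.resource.memory-mb`. A minimal sketch of a sanity check follows. It assumes both properties fall back to 8192 MB when unset and that the usable limit is roughly the smaller of the two. The sample XML and the helper names `read_properties` and `effective_container_limit_mb` are hypothetical and only for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical yarn-site.xml content, for illustration only.
SAMPLE_YARN_SITE = """
<configuration>
  <property>
    <name>yarn.scheduler.maximum-allocation-mb</name>
    <value>16384</value>
  </property>
  <property>
    <name>yarn.nodemanager.resource.memory-mb</name>
    <value>8192</value>
  </property>
</configuration>
"""


def read_properties(xml_text: str) -> dict:
    """Map each <name> to its <value> in a Hadoop-style configuration document."""
    root = ET.fromstring(xml_text.strip())
    props = {}
    for prop in root.iter("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None and value is not None:
            props[name.strip()] = value.strip()
    return props


def effective_container_limit_mb(props: dict, default_mb: int = 8192) -> int:
    """Approximate the largest container one node can host, in MB.

    Assumption: the limit is the smaller of the scheduler maximum and the
    memory a single NodeManager offers, both defaulting to default_mb.
    """
    scheduler_max = int(props.get("yarn.scheduler.maximum-allocation-mb", default_mb))
    node_memory = int(props.get("yarn.nodemanager.resource.memory-mb", default_mb))
    return min(scheduler_max, node_memory)


if __name__ == "__main__":
    props = read_properties(SAMPLE_YARN_SITE)
    print("effective container limit (MB):", effective_container_limit_mb(props))
```

With the sample values the effective limit stays at 8192 MB, which suggests `yarn.nodemanager.resource.memory-mb` may need to be raised along with the scheduler maximum, within the physical memory each node really has.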

Result after the adjustment:

边栏推荐

猜你喜欢

String type time comparison method with error string.compareto

Debounce and throttle

System. Accessviolationexception: an attempt was made to read or write to protected memory. This usually indicates that other memory is corrupted

6月产品升级观察站

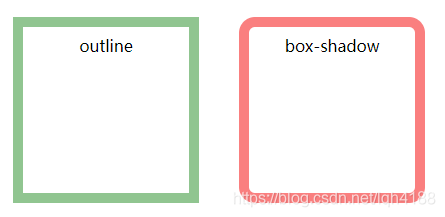

outline和box-shadow实现外轮廓圆角高光效果

【微信小程序】小程序宿主环境详解

How much memory can a program use at most?

Use the command to check the WiFi connection password under win10 system

![[Nacos] what does nacosclient do during service registration](/img/76/3c2e8f9ba19e36d9581f34fda65923.png)

[Nacos] what does nacosclient do during service registration

Process control (Part 1)

随机推荐

一个程序最多可以使用多少内存?

[C topic] Li Kou 206. reverse the linked list

CMake指定OpenCV版本

API health status self inspection

LeetCode_ Factorization_ Simple_ 263. Ugly number

sql server强行断开连接

"How to use" observer mode

redis淘汰策列

Maxcompute SQL 的查询结果条数受限1W

Hbck 修复问题

流程控制(上)

outline和box-shadow实现外轮廓圆角高光效果

TypeScript学习2——接口

System. Accessviolationexception: an attempt was made to read or write to protected memory. This usually indicates that other memory is corrupted

Automatically set the template for VS2010 and add header comments

Spark AQE

"How to use" agent mode

LeetCode_ String_ Medium_ 151. Reverse the words in the string

Spark提交参数--files的使用

mysql heap表_MySQL内存表heap使用总结-九五小庞