CUDA Explained - Why Deep Learning Uses GPUs

2022-07-25 09:45:00 【Frost Creek】

Graphics processing unit (GPU)

To understand CUDA, we need a working knowledge of graphics processing units (GPUs). A GPU is a processor that is good at handling specialized computations.

This is in contrast to a central processing unit (CPU), which is a processor that is good at handling general computations. CPUs are the processors that power most of the typical computations on our electronic devices.

A GPU can be much faster at computing than a CPU. However, this is not always the case. The speed of a GPU relative to a CPU depends on the type of computation being performed. The type of computation best suited to a GPU is one that can be done in parallel.

Parallel computing

Parallel computing is a type of computation in which a particular computation is broken into independent, smaller computations that can be carried out simultaneously. The results are then recombined, or synchronized, to form the result of the original, larger computation.
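The decompose, compute, recombine pattern can be sketched in a few lines of Python. This is only an illustration of the structure using CPU worker threads, not GPU code. The function names `partial_sum` and `parallel_sum_of_squares` and the choice of four chunks are assumptions made for the example.

```python
from concurrent.futures import ThreadPoolExecutor


def partial_sum(chunk):
    """One smaller, independent computation: sum the squares of a chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, num_chunks=4):
    """Split the work into chunks, compute each independently, then recombine."""
    size = max(1, (len(values) + num_chunks - 1) // num_chunks)
    chunks = [values[i:i + size] for i in range(0, len(values), size)]
    # Each chunk goes to a worker; no chunk needs another chunk's result.
    with ThreadPoolExecutor(max_workers=num_chunks) as pool:
        partial_results = list(pool.map(partial_sum, chunks))
    # Recombine the partial results into the result of the larger computation.
    return sum(partial_results)


values = list(range(1000))
total = parallel_sum_of_squares(values)
```

Because of Python's global interpreter lock, threads like these do not actually speed up CPU-bound arithmetic. The point is the shape of the computation: independent pieces followed by a recombination step.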

The number of tasks that a larger task can be broken into depends on the number of cores on a particular piece of hardware. Cores are the units that actually perform the computation within a given processor. CPUs typically have four, eight, or sixteen cores, while GPUs can have thousands.

Other technical specifications matter too, but this description is meant to convey the general idea.

With this working knowledge, we can conclude that GPUs are built for parallel computing, and that the tasks best suited to a GPU are those that can be done in parallel. If a computation can be done in parallel, we can accelerate it using parallel programming approaches and GPUs.

Now let's turn our attention to neural networks and see why GPUs are used so heavily in deep learning. We have just seen that GPUs are well suited to parallel computing, and this fact is why deep learning uses them.

In parallel computing, an embarrassingly parallel task is one where little or no effort is needed to split the overall task into a set of smaller tasks that can be computed in parallel.

Embarrassingly parallel tasks are ones where it is easy to see that the smaller tasks are independent of each other.

Neural networks are embarrassingly parallel for this reason. Many of the computations we do with neural networks can easily be broken into smaller computations that do not depend on one another. One such example is a convolution.

Convolution example

Let's look at an example, the convolution operation:

This animation shows the convolution process without numbers. There is a blue input channel at the bottom, a shaded convolutional filter sliding across the input channel, and a green output channel at the top:

Blue (bottom) - input channel

Shaded (on top of blue) - 3 x 3 convolutional filter

Green (top) - output channel

For each position on the blue input channel, the 3 x 3 filter performs a computation that maps the shaded part of the blue input channel to the corresponding shaded part of the green output channel.

In the animation, these computations happen sequentially, one after another. However, each computation is independent of the others, meaning that none of them depends on the result of any other.

As a result, all of these independent computations can happen in parallel on a GPU to produce the whole output channel.

This shows that the convolution operation can be accelerated by using a parallel programming approach and GPUs.
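The independence of each output position can be made concrete with a small sketch. The code below is plain Python, not GPU code, and the names `conv_at` and `conv2d_valid` are made up for this illustration. It computes each output value from the input and the 3 x 3 filter alone, then checks that visiting the positions in a shuffled order produces the same output channel. That order-independence is the property that lets a GPU compute all positions at once.

```python
import random


def conv_at(image, kernel, row, col):
    """Compute one output value; it reads only the input and the kernel."""
    k = len(kernel)
    return sum(
        image[row + i][col + j] * kernel[i][j]
        for i in range(k)
        for j in range(k)
    )


def conv2d_valid(image, kernel, order=None):
    """Slide the kernel over the image (no padding, stride 1, kernel not flipped).

    `order` optionally lists the output positions in the order to visit them.
    """
    k = len(kernel)
    out_rows = len(image) - k + 1
    out_cols = len(image[0]) - k + 1
    positions = [(r, c) for r in range(out_rows) for c in range(out_cols)]
    if order is not None:
        positions = order
    output = [[0] * out_cols for _ in range(out_rows)]
    for r, c in positions:
        # No output value depends on any other output value.
        output[r][c] = conv_at(image, kernel, r, c)
    return output


image = [[r * 5 + c for c in range(5)] for r in range(5)]  # 5 x 5 input channel
kernel = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]              # 3 x 3 filter

sequential = conv2d_valid(image, kernel)

shuffled = [(r, c) for r in range(3) for c in range(3)]
random.shuffle(shuffled)
reordered = conv2d_valid(image, kernel, order=shuffled)

print(sequential == reordered)
```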

Nvidia hardware (GPU) and software (CUDA)

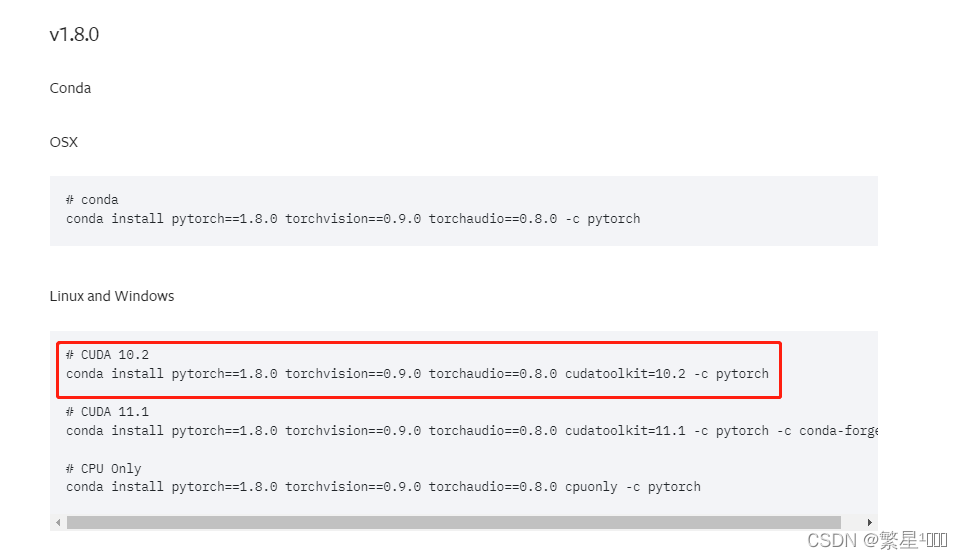

Nvidia is a technology company that designs GPUs. It created CUDA as a software platform that pairs with its GPU hardware, making it easier for developers to build software that accelerates computations using the parallel processing power of Nvidia GPUs.

An Nvidia GPU is the hardware that enables parallel computation, while CUDA is a software layer that provides an API for developers.

As you may have guessed, an Nvidia GPU is required to use CUDA. CUDA itself can be downloaded from Nvidia and installed for free.

Developers use CUDA by downloading the CUDA Toolkit. Alongside the toolkit, Nvidia provides specialized libraries such as cuDNN, the CUDA Deep Neural Network library.

PyTorch comes with CUDA

One of the benefits of using PyTorch, or any other neural network API, is that parallelism comes built into the API. This means that, as neural network programmers, we can focus more on building neural networks and less on performance.

With PyTorch, CUDA support is built in from the start. No additional downloads are required. All we need is a supported Nvidia GPU, and we can take advantage of CUDA through PyTorch. We don't need to know how to use the CUDA API directly.

That said, if we wanted to write PyTorch extensions, knowing how to use CUDA directly would probably be useful.

After all, PyTorch is written in all of these:

Python

C++

CUDA

Using CUDA with PyTorch

Taking advantage of CUDA in PyTorch is very easy. If we want a particular computation to be performed on the GPU, we can instruct PyTorch to do so by calling cuda() on our data structures (tensors).

Suppose we have the following code:

> import torch

> t = torch.tensor([1,2,3])

> t

tensor([1, 2, 3])

By default, a tensor object created this way lives on the CPU. As a result, any operation we perform with this tensor will be carried out on the CPU.

Now, to move the tensor onto the GPU, we just write:

> t = t.cuda()

> t

tensor([1, 2, 3], device='cuda:0')

This ability makes PyTorch very versatile, because computations can be carried out selectively on either the CPU or the GPU.
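Since calling cuda() fails on a machine without a usable Nvidia GPU, code is often written to pick a device at run time instead. The following is a minimal sketch of that pattern, assuming PyTorch is installed; the variable names are illustrative. It relies on `torch.cuda.is_available()` and `Tensor.to()`, and falls back to the CPU when no GPU is found.

```python
import torch

# Pick the GPU if PyTorch can see one, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

t = torch.tensor([1, 2, 3])   # created on the CPU by default
t = t.to(device)              # moved to the chosen device (no change if it is the CPU)

# Operations on a tensor run on the device where the tensor lives.
doubled = t * 2

# Bring the result back to the CPU before converting it to a Python list.
result = doubled.cpu().tolist()
print(device, result)
```

Written this way, the same script runs unchanged on machines with and without a GPU.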

A GPU can be slower than a CPU

We said that we can selectively run our computations on the GPU or the CPU, but why not just run every computation on the GPU?

Isn't a GPU faster than a CPU?

The answer is that a GPU is only faster for particular tasks. One issue we can run into is bottlenecks that degrade performance. For example, moving data from the CPU to the GPU is costly, so if the computation is a simple one, the overall performance may be worse.

Moving relatively small computational tasks to the GPU won't speed us up much and may actually slow us down. Remember, the GPU works well for tasks that can be broken into many smaller tasks. If a task is already small, we have little to gain by moving it to the GPU.
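One way to observe this effect is to time the same operation on both devices, including the transfer to the GPU. The sketch below assumes PyTorch is installed. The helper name `time_matmul` and the matrix sizes are arbitrary choices for illustration, and the measured numbers depend entirely on the hardware, so no timings are shown here. On a machine without a supported GPU it measures the CPU only.

```python
import time

import torch


def time_matmul(size, device):
    """Return the seconds taken to move two size x size matrices to `device` and multiply them."""
    a = torch.rand(size, size)
    b = torch.rand(size, size)
    start = time.perf_counter()
    a, b = a.to(device), b.to(device)   # on a GPU this includes the transfer cost
    product = a @ b
    if device.type == "cuda":
        torch.cuda.synchronize()        # GPU work is asynchronous; wait for it to finish
    return time.perf_counter() - start


devices = [torch.device("cpu")]
if torch.cuda.is_available():
    devices.append(torch.device("cuda"))

timings = {}
for size in (8, 1024):                  # a tiny task and a larger one
    for device in devices:
        timings[(size, device.type)] = time_matmul(size, device)
        print(f"{size} x {size} on {device.type}: {timings[(size, device.type)]:.6f} s")
```

The first GPU measurement also includes one-time CUDA initialization, so a careful benchmark would do a warm-up run before timing anything.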

For this reason, it is often acceptable to simply use a CPU when starting out, and to begin using the GPU more heavily as we tackle larger, more complex problems.

GPGPU computing

Initially, the main tasks accelerated with GPUs were computer graphics, hence the name graphics processing unit. In recent years, however, many more kinds of parallel tasks have emerged. One such task, as we have seen, is deep learning.

Deep learning, along with many other scientific computing tasks that use parallel programming techniques, is leading to a new programming model called GPGPU, or general-purpose GPU computing.

GPGPU computing is now more commonly called GPU computing or accelerated computing, since performing a wide variety of tasks on a GPU is becoming more and more common.

Nvidia has been a pioneer in this space, and it refers to general-purpose GPU computing simply as GPU computing. Nvidia's CEO, Jensen Huang, envisioned GPU computing early on, which is why CUDA was first released back in 2007.

Even though CUDA has been around for a long time, it is only now really beginning to take off, and Nvidia's work on CUDA up to this point is why Nvidia leads in GPU computing for deep learning.

When we hear Jensen talk about the GPU computing stack, he is referring to the GPU as the hardware at the bottom, CUDA as the software architecture on top of the GPU, and finally libraries like cuDNN on top of CUDA.

This GPU computing stack is what supports general-purpose computing on a chip that is otherwise very specialized. We often see stacks like this in computer science, because technology is built in layers, just like neural networks.

Sitting on top of CUDA and cuDNN is PyTorch, the framework we will be working with, which ultimately supports the applications at the top.

GPU computing and CUDA can be explored in far more depth, but that goes well beyond what we need. We will be working near the top of the stack, with PyTorch.

Note: The above content is translated from https://deeplizard.com/learn/video/6stDhEA0wFQ

边栏推荐

- About C and OC

- [code source] daily one question three paragraph

- STM32+HC05串口蓝牙设计简易的蓝牙音箱

- [code source] daily problem segmentation (nlogn & n solution)

- How to deploy the jar package to the server? Note: whether the startup command has nohup or not has a lot to do with it

- OC--Foundation--数组

- matlab绘图|坐标轴axis的一些常用设置

- Indexes, views and transactions of MySQL

- 初识Opencv4.X----图像卷积

- Redis installation (Ubuntu)

猜你喜欢

随机推荐

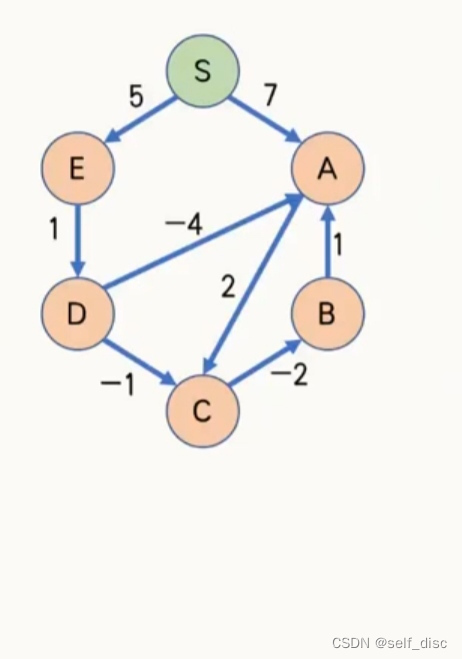

The shortest path problem Bellman Ford (single source shortest path) (illustration)

Redis installation (Ubuntu)

关于学生管理系统(注册,登录,学生端)

Redis set structure command

1094--谷歌的招聘

Save pdf2image PDF file as JPG nodejs implementation

Dream set sail (the first blog)

~1 CCF 2022-06-2 treasure hunt! Big adventure!

Database operation language (DML)

Object initialization

OC--对象复制

打造个人极限写作流程 -转载

Voice chat app source code - produced by NASS network source code

Android 如何使用adb命令查看应用本地数据库

Sort out personal technology selection in 2022

初识Opencv4.X----图像模板匹配

Flex layout syntax and use cases

自定义 view 实现兑奖券背景[初级]

Kotlin basic knowledge points

学习新技术语言流程