
From Fisheye to Surround View to the Multi-Task Ace: A Review of Valeo's Classic Papers on Visual Depth Estimation (from FisheyeDistanceNet to OmniDet) (Part I)

2022-07-25 14:03:00 【Apple sister】

Several Valeo teams (Valeo DAR Kronach, Valeo Vision Systems and others) have produced a steady stream of results in visual perception for autonomous driving in recent years. Many of the classic papers come from Varun Ravi Kumar, and Valeo has also released WoodScape, a multi-task surround-view dataset for autonomous driving. In this post I briefly summarize the series of Valeo papers I have read recently, and also briefly introduce some of the foundational papers they cite; I hope to exchange ideas with readers. The post starts with fisheye depth estimation models. For the basic theory of pinhole-camera depth estimation, please refer to my earlier article:

Apple sister: Basic theory of monocular depth estimation and a summary of key papers

I. The cornerstone of fisheye depth estimation: FisheyeDistanceNet (2020)

This paper is the first attempt to carry self-supervised depth estimation over from pinhole cameras to fisheye cameras. Its basic theoretical framework follows the classic pinhole depth estimation work SfM-Learner:

[2] Unsupervised Learning of Depth and Ego-Motion from Video (CVPR 2017)

and Monodepth2:

[3] Digging Into Self-Supervised Monocular Depth Estimation (ICCV 2019)

It replaces the pinhole projection model with a fisheye projection model and makes a series of further improvements, detailed below:

(1) Monodepth2's pinhole projection model is replaced with a fisheye model that works directly on distorted, unrectified images:
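As a minimal sketch of such a model, assume the 4th-order polynomial fisheye model commonly used with WoodScape, where the image radius is a polynomial in the incident angle θ (the angle between a ray and the optical axis): r(θ) = k1·θ + k2·θ² + k3·θ³ + k4·θ⁴. The coefficients and principal point below are made-up illustrative values, and aspect ratio and skew are ignored. Because FisheyeDistanceNet predicts Euclidean distance rather than z-depth, back-projection scales a unit viewing ray by the distance; the polynomial is inverted numerically with Newton's method here.

```python
import numpy as np

# Illustrative (made-up) intrinsics: polynomial coefficients k1..k4 and principal point.
K_POLY = np.array([330.0, -5.0, 10.0, -2.0])
CX, CY = 640.0, 480.0

def radius(theta, k=K_POLY):
    """Image radius r(theta) = k1*theta + k2*theta^2 + k3*theta^3 + k4*theta^4."""
    return k[0] * theta + k[1] * theta**2 + k[2] * theta**3 + k[3] * theta**4

def project(points, k=K_POLY, cx=CX, cy=CY):
    """Project N x 3 camera-frame points to N x 2 pixel coordinates."""
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    theta = np.arctan2(np.hypot(x, y), z)   # angle between ray and optical axis
    phi = np.arctan2(y, x)                  # azimuth around the optical axis
    r = radius(theta, k)
    return np.stack([cx + r * np.cos(phi), cy + r * np.sin(phi)], axis=1)

def unproject(pixels, distance, k=K_POLY, cx=CX, cy=CY, iters=20):
    """Back-project pixels with per-pixel Euclidean distance to N x 3 points."""
    du, dv = pixels[:, 0] - cx, pixels[:, 1] - cy
    r, phi = np.hypot(du, dv), np.arctan2(dv, du)
    theta = r / k[0]                        # initial guess from the linear term
    for _ in range(iters):                  # Newton's method on r(theta) - r = 0
        f = radius(theta, k) - r
        df = k[0] + 2 * k[1] * theta + 3 * k[2] * theta**2 + 4 * k[3] * theta**3
        theta = theta - f / df
    rays = np.stack([np.sin(theta) * np.cos(phi),
                     np.sin(theta) * np.sin(phi),
                     np.cos(theta)], axis=1)  # unit-length viewing rays
    return distance[:, None] * rays
```

Because the model is written in terms of θ rather than X/Z, points more than 90° off-axis (z < 0) still project to valid pixels, which a pinhole model cannot do.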

(2) An additional supervision signal is used: the ego vehicle's real-time speed constrains the pose predicted by PoseNet. This resolves the scale ambiguity of Monodepth2, whose output is only defined up to a relative scale, and yields distances at absolute (metric) scale, which is far more useful for real-world deployment.
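A minimal sketch of such a constraint, assuming an L1 penalty between the magnitude of PoseNet's predicted translation and the distance implied by the measured speed and the frame interval (speed × Δt). The function name and its inputs are hypothetical, not taken from the paper's code.

```python
import numpy as np

def velocity_supervision_loss(translations, speeds, dt):
    """Mean |‖t‖ - v * dt| over a batch (hypothetical helper).

    translations: (N, 3) predicted camera translations between two frames (metres).
    speeds: (N,) measured vehicle speed (m/s); dt: time between the frames (s).
    """
    predicted = np.linalg.norm(translations, axis=1)   # distance PoseNet says we moved
    measured = np.asarray(speeds) * dt                 # distance odometry says we moved
    return float(np.mean(np.abs(predicted - measured)))
```

Since depth and pose are trained jointly through the photometric loss, anchoring the translation magnitude in metres also pushes the predicted distances toward metric scale.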

(3) The network architecture combines super-resolution networks with deformable convolution, so that a high-resolution depth map with sharp edges can be produced from a low-resolution input image. This replaces transposed convolution and nearest-neighbor or bilinear upsampling.

1. The super-resolution network comes from the sub-pixel convolution paper (ESPCN, Shi et al., CVPR 2016).

Its main idea is the sub-pixel convolution layer: the convolution outputs r² times as many feature channels, where r is the upscaling factor, and these channels are then periodically rearranged into a higher-resolution image (an operation called periodic shuffling, shown by the different colors at the end of the figure below).
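A minimal NumPy sketch of periodic shuffling for a single feature map, assuming the channel ordering used by PyTorch's `nn.PixelShuffle`: output pixel (h·r + i, w·r + j) of channel c takes input channel c·r² + i·r + j at position (h, w). The convolution that produces the r²·C channels is omitted.

```python
import numpy as np

def pixel_shuffle(x, r):
    """Rearrange a (C*r*r, H, W) array into (C, H*r, W*r) by periodic shuffling."""
    c_r2, h, w = x.shape
    if c_r2 % (r * r):
        raise ValueError("channel count must be divisible by r*r")
    c = c_r2 // (r * r)
    x = x.reshape(c, r, r, h, w)        # split channels into (C, row offset, col offset)
    x = x.transpose(0, 3, 1, 4, 2)      # -> (C, H, row offset, W, col offset)
    return x.reshape(c, h * r, w * r)   # interleave offsets into the spatial grid

# Four 1x1 channels become one 2x2 image with rows [0, 1] and [2, 3].
print(pixel_shuffle(np.arange(4).reshape(4, 1, 1), 2)[0])
```

All the learning happens in the preceding convolution at low resolution; the shuffle itself is a fixed, parameter-free reshape, which avoids the checkerboard artifacts typical of transposed convolution.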

2. Deformable convolution comes from the following paper:

[5] Deformable ConvNets v2: More Deformable, Better Results (CVPR 2019)

The concept of deformable convolution itself originates from:

[6] Deformable Convolutional Networks (ICCV 2017). The basic idea is shown in the figure below:

That is, the convolution kernel samples at positions shifted by learnable offsets, so it can adapt to deformed objects. Deformable ConvNets v2, the version used in this paper, makes two further improvements: first, more deformable convolution layers are used in the feature extraction network; second, each sampling position gets a learnable weight (modulation), which helps the deformable convolution focus on regions of interest. This is very helpful for recognizing the strongly distorted objects captured by the fisheye cameras in this paper.
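To make both ingredients concrete, here is a deliberately naive, single-channel sketch of a DCNv2-style modulated deformable convolution: each kernel tap samples the input at its regular grid position plus an offset (Δy, Δx) via bilinear interpolation, and the sample is scaled by a modulation scalar m in [0, 1]. Offsets and masks are passed in directly; in the real layers they are predicted by an extra convolution (with a sigmoid on the masks in DCNv2). Real implementations are vectorized GPU kernels; this loop version is only for illustration.

```python
import numpy as np

def bilinear(img, y, x):
    """Bilinearly sample a 2-D image at real-valued (y, x); zero outside the image."""
    h, w = img.shape
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    val = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                val += (1 - abs(y - yy)) * (1 - abs(x - xx)) * img[yy, xx]
    return val

def modulated_deform_conv2d(img, weight, offsets, masks):
    """Naive single-channel modulated deformable conv, stride 1, same-size output.

    weight: (k, k) kernel; offsets: (H, W, k*k, 2) per-tap (dy, dx);
    masks: (H, W, k*k) per-tap modulation scalars.
    """
    h, w = img.shape
    k = weight.shape[0]
    grid = [(i - k // 2, j - k // 2) for i in range(k) for j in range(k)]  # regular taps
    out = np.zeros((h, w))
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for n, (py, px) in enumerate(grid):
                dy, dx = offsets[y, x, n]                      # learned shift for this tap
                sample = bilinear(img, y + py + dy, x + px + dx)
                acc += weight.flat[n] * masks[y, x, n] * sample  # modulated contribution
            out[y, x] = acc
    return out
```

With all offsets at zero and all masks at one, this reduces to an ordinary zero-padded 3×3 cross-correlation, which is a handy sanity check.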

(4) Besides forward warping (frame t0 as the target, frames t−1 and t+1 as sources), backward warping is also used (frame t0 as the source, frames t−1 and t+1 as targets). This increases computation and lengthens training, but gives better results.

(5) A cross-consistency loss is used: the depth map predicted for frame t and the depth map of frame t reconstructed from frame t' should agree, and vice versa. In formula form:
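A hedged sketch of the idea only: assume the frame-t distance map has already been reprojected into frame t' and vice versa (the warping is the same fisheye reprojection used for the photometric loss), and penalize the L1 difference in both directions over pixels that remain valid after warping. The function and argument names are hypothetical, and the paper's exact weighting and masking may differ.

```python
import numpy as np

def distance_consistency_loss(d_t, d_s_to_t, d_s, d_t_to_s, valid_t, valid_s):
    """Symmetric L1 consistency between predicted and cross-warped distance maps.

    d_t, d_s: distance maps predicted for target frame t and source frame t'.
    d_s_to_t: frame t' distances reprojected into frame t (d_t_to_s vice versa).
    valid_t, valid_s: boolean masks of pixels that land inside the image after warping.
    """
    forward = np.abs(d_t - d_s_to_t)[valid_t].mean()    # t  vs. t' -> t
    backward = np.abs(d_s - d_t_to_s)[valid_s].mean()   # t' vs. t  -> t'
    return float(forward + backward)
```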

Other components, such as masking of dynamic objects, edge-aware smoothness and the multi-scale loss, are similar to Monodepth2. The total loss is expressed as follows (the first two terms correspond to forward and backward warping):
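Of these shared components, the edge-aware smoothness term is easy to show. A minimal sketch following Monodepth2's formulation: the mean-normalized disparity d* = d / mean(d) should have small gradients except where the image itself has strong gradients, L_s = |∂x d*|·e^(−|∂x I|) + |∂y d*|·e^(−|∂y I|). A single-channel image is assumed for simplicity; Monodepth2 averages the image gradient over color channels.

```python
import numpy as np

def edge_aware_smoothness(disp, img):
    """Monodepth2-style smoothness on an (H, W) disparity map guided by an (H, W) image."""
    d = disp / (disp.mean() + 1e-7)                  # mean-normalised disparity
    grad_d_x = np.abs(d[:, :-1] - d[:, 1:])
    grad_d_y = np.abs(d[:-1, :] - d[1:, :])
    grad_i_x = np.abs(img[:, :-1] - img[:, 1:])
    grad_i_y = np.abs(img[:-1, :] - img[1:, :])
    # Down-weight disparity gradients where the image has edges.
    return float((grad_d_x * np.exp(-grad_i_x)).mean() + (grad_d_y * np.exp(-grad_i_y)).mean())
```

Normalizing by the mean keeps the network from trivially lowering this term by shrinking all disparities.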

II. Extending to distorted wide-angle cameras: UnRectDepthNet (2020)

This paper extends the results of FisheyeDistanceNet to general camera models. The author points out that in practical autonomous driving most cameras are distorted, including front-facing wide-angle cameras with a FOV of roughly 100° and fisheye cameras with a FOV of roughly 190°, whose distortion is severe. A common practice is to rectify and crop the distorted images, but rectification brings its own problems. First, it loses resolution and reduces the FOV, which defeats the purpose of using large-FOV cameras. Second, the interpolation used during warping introduces errors. Third, rectification itself costs time and compute, and its result depends heavily on the calibration parameters, which drift easily with environmental conditions such as temperature, making large-scale deployment difficult. The author therefore estimates depth directly on the unrectified raw images to obtain more practical results. Because different cameras follow different models, a network that generalizes across camera models is needed.

The author notes that the closest related work is CAM-Convs (2019), from the following paper:

[8] CAM-Convs: Camera-Aware Multi-Scale Convolutions for Single-View Depth (CVPR 2019)

Although UnRectDepthNet does not use it, CAM-Convs is an important foundation for a later paper in this series, so it is introduced here. The basic idea is to use convolution layers that depend on the camera parameters: this information acts as the camera's "metadata", and it is encoded as pseudo-images in the same format as the input so that its features can be learned. Combined with multi-scale concatenation, this lets one depth estimation network generalize across different cameras. CAM-Convs builds on CoordConv (Liu et al., NeurIPS 2018):

Its main idea is to add two extra input channels holding the horizontal and vertical coordinates (other positional information can be added as needed), as shown in the figure below:

A basic property of ordinary convolution is translation invariance: the kernel cannot perceive its own position. With coordinate channels added, the network can still preserve translation invariance (when the learned weights on the coordinate channels are 0) or learn translation dependence (when those weights are non-zero). This benefits downstream tasks that depend on position and also fixes the failure of ordinary convolution on coordinate-mapping tasks.
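A minimal sketch of the CoordConv input augmentation: two channels holding each pixel's column (x) and row (y) coordinate are concatenated to a (C, H, W) feature map before an ordinary convolution. Scaling the coordinates to [−1, 1] follows the common CoordConv convention; the function name is illustrative.

```python
import numpy as np

def add_coord_channels(x):
    """Append x- and y-coordinate channels, scaled to [-1, 1], to a (C, H, W) array."""
    _, h, w = x.shape
    ys, xs = np.meshgrid(np.linspace(-1.0, 1.0, h), np.linspace(-1.0, 1.0, w), indexing="ij")
    return np.concatenate([x, xs[None], ys[None]], axis=0)   # -> (C + 2, H, W)
```

The following convolution is unchanged; only its input gains two channels, so the extra cost is negligible.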

CAM-Convs adds six channels:

Centered x and y coordinate channels (with the principal point, where the camera's optical axis meets the image, as the origin):

FOV maps (field-of-view maps, split into x and y channels; the azimuth and elevation of each pixel are computed from the camera's focal length):

Normalized x and y coordinate channels. Together these channels effectively let the network learn the main camera intrinsics: focal length and principal-point offset. The network is then trained with multi-scale concatenation and supervised losses (including a depth loss, a surface-normal loss, a gradient loss and a confidence loss). This approach is extended further in Section V of this series (SVDistNet).
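A minimal sketch of the six maps, following the CAM-Convs definitions as I understand them: centered coordinates cc = pixel coordinate − principal point, FOV maps fov = arctan(cc / f), and normalized coordinates spanning [−1, 1]. The intrinsics fx, fy, cx, cy are illustrative pinhole parameters; in the network these maps would be resized and concatenated to the feature maps at each scale.

```python
import numpy as np

def cam_conv_maps(h, w, fx, fy, cx, cy):
    """Return six CAM-Convs-style maps as a (6, H, W) array (pinhole intrinsics)."""
    v, u = np.meshgrid(np.arange(h, dtype=float), np.arange(w, dtype=float), indexing="ij")
    cc_x, cc_y = u - cx, v - cy                                 # centred coordinates
    fov_x, fov_y = np.arctan(cc_x / fx), np.arctan(cc_y / fy)   # per-pixel azimuth / elevation
    nc_y, nc_x = np.meshgrid(np.linspace(-1, 1, h), np.linspace(-1, 1, w), indexing="ij")
    return np.stack([cc_x, cc_y, fov_x, fov_y, nc_x, nc_y])
```

The centered and FOV maps change when the intrinsics change, while the normalized maps do not; that contrast is what lets the network disentangle focal length and principal point.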

Back to UnRectDepthNet: the author points out that CAM-Convs cannot handle nonlinear distortion and is moreover a supervised method. The author therefore still relies on classical projection models to handle different cameras.

For general wide-angle cameras with a moderate FOV (FOV < 120°), the Brown–Conrady model is generally used, because it models both radial and tangential distortion:

Brown–Conrady Projection model
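A sketch of the standard Brown–Conrady form (the same one OpenCV uses, with radial coefficients k1, k2, k3 and tangential coefficients p1, p2), applied to normalized image coordinates (x, y) = (X/Z, Y/Z) before the pinhole intrinsics. Parameter values in any call are illustrative.

```python
import numpy as np

def brown_conrady_project(points, fx, fy, cx, cy, k1=0.0, k2=0.0, k3=0.0, p1=0.0, p2=0.0):
    """Project N x 3 camera-frame points (Z > 0) with radial + tangential distortion."""
    x = points[:, 0] / points[:, 2]          # normalised pinhole coordinates
    y = points[:, 1] / points[:, 2]
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2**2 + k3 * r2**3
    x_d = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)   # tangential terms
    y_d = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return np.stack([fx * x_d + cx, fy * y_d + cy], axis=1)
```

Because the model divides by Z, it cannot represent rays at or beyond 90° from the optical axis, one reason fisheye lenses need angle-based r(θ) models instead.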

Fisheye lens models (FOV ≥ 180°) instead need a radial component r(θ), a function of the angle θ between the incoming ray and the optical axis. The main models are as follows:
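For orientation, a sketch of the classical textbook radial mappings r(θ) for a focal length f, plus a generic polynomial. This is a standard reference list, not necessarily the exact set of models covered in UnRectDepthNet.

```python
import numpy as np

# Classical fisheye radial mappings: image radius as a function of incident angle theta.
# (For comparison, the pinhole model r = f * tan(theta) diverges as theta -> 90 degrees.)
RADIAL_MODELS = {
    "equidistant": lambda theta, f: f * theta,
    "equisolid": lambda theta, f: 2 * f * np.sin(theta / 2),
    "orthographic": lambda theta, f: f * np.sin(theta),      # only valid up to 90 degrees
    "stereographic": lambda theta, f: 2 * f * np.tan(theta / 2),
}

def polynomial_radius(theta, coeffs):
    """Generic polynomial model r(theta) = sum_i coeffs[i] * theta**(i + 1)."""
    return sum(c * theta ** (i + 1) for i, c in enumerate(coeffs))

theta = np.deg2rad(95.0)  # a ray 95 degrees off-axis, i.e. inside a 190-degree FOV
for name, model in RADIAL_MODELS.items():
    print(f"{name:>13}: r = {model(theta, 300.0):.1f} px")
```

All four agree with r ≈ f·θ near the optical axis and differ mainly toward the image periphery.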

The image reconstruction, loss functions and scale recovery are otherwise similar to FisheyeDistanceNet, but the framework supports multiple camera models and achieves good results on KITTI and WoodScape. However, a single training run can currently take input from only one dataset. The author names a future direction: extending the training framework to accept multiple cameras simultaneously and output one general inference model.

III. Enhanced FisheyeDistanceNet: FisheyeDistanceNet++ (2021)

As its name suggests, FisheyeDistanceNet++ is an enhancement of FisheyeDistanceNet, and it accepts input from all four surround-view fisheye cameras to train a single model. The improvements fall into four areas:

(1) Introducing a robust loss function

The robust loss comes from the following paper:

[11] A General and Adaptive Robust Loss Function (CVPR 2019)

The paper proposes a single generalized loss function that covers L1, L2 and many other common losses as special cases:

Here α controls the robustness of the loss, and c is a scale parameter that controls the size of the quadratic bowl near x = 0. The loss for different values of α is plotted below:

The probability density derived from the generalized loss:

Using the negative log-likelihood (NLL) of this density as the loss allows α to be treated as a parameter that the network learns. The loss is also monotonic and smooth, with bounded first and second derivatives. Experiments show that using the NLL improves accuracy.
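Barron's general loss is ρ(x, α, c) = (|α − 2| / α) · (((x/c)² / |α − 2| + 1)^(α/2) − 1), with the limits α = 2 (L2), α = 0 (Cauchy/log) and α → −∞ (Welsch) handled separately; α = 1 gives a smoothed L1 (Charbonnier). The density is p(x | α, c) = exp(−ρ(x, α, c)) / (c · Z(α)), so the NLL is ρ + log c + log Z(α). A minimal NumPy sketch, assuming plain numerical integration for Z(α), which is only for illustration (a real implementation would precompute or approximate it); the density is only normalizable for α ≥ 0.

```python
import numpy as np

def rho(x, alpha, c):
    """Barron's general robust loss for scalar alpha and scale c > 0."""
    z = (np.asarray(x, dtype=float) / c) ** 2
    if alpha == 2:
        return 0.5 * z                                   # L2 limit
    if alpha == 0:
        return np.log1p(0.5 * z)                         # Cauchy / log limit
    if np.isneginf(alpha):
        return 1.0 - np.exp(-0.5 * z)                    # Welsch limit
    b = abs(alpha - 2)
    return b / alpha * ((z / b + 1) ** (alpha / 2) - 1)

def log_partition(alpha, half_width=200.0, n=400_001):
    """log Z(alpha) by trapezoidal integration of exp(-rho(x, alpha, 1)); needs alpha >= 0."""
    xs = np.linspace(-half_width, half_width, n)
    ys = np.exp(-rho(xs, alpha, 1.0))
    return float(np.log(np.sum((ys[1:] + ys[:-1]) * 0.5) * (xs[1] - xs[0])))

def robust_nll(x, alpha, c):
    """Negative log-likelihood; in training, alpha (and c) can be learnable parameters."""
    return rho(x, alpha, c) + np.log(c) + log_partition(alpha)
```

The log Z(α) term is what stops the network from cheating: lowering α makes ρ smaller for outliers, but the normalizer grows to compensate.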

(2) A stand-alone self-attention module is used as the encoder

Stand-alone self-attention comes from the following paper:

[12] Stand-Alone Self-Attention in Vision Models (NeurIPS 2019)

From the original attention paper, Attention Is All You Need, we know that attention can exploit global information better than convolutional neural networks, enlarging the receptive field. Its general form is:
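The general form from Attention Is All You Need is Attention(Q, K, V) = softmax(QKᵀ / √d_k)·V. A minimal single-head NumPy sketch:

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v):
    """Scaled dot-product attention: q (N, d_k), k (M, d_k), v (M, d_v) -> (N, d_v)."""
    scores = q @ k.T / np.sqrt(q.shape[-1])   # similarity of every query to every key
    return softmax(scores, axis=-1) @ v       # each output mixes all M values
```

Every output row depends on all M positions, which is the sense in which attention sees global context; the cost grows as N·M, which is why vision models often restrict attention to a local window.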

Stand-alone self-attention further shows that attention can completely replace convolution in vision models and still achieve strong results. The paper also uses embedding vectors (r) to encode relative position, which preserves the relative positional information between pixels, in the following form:
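In the stand-alone self-attention paper, output pixel (i, j) attends over a k×k neighbourhood N_k(i, j): y_ij = Σ_{(a,b)∈N_k(i,j)} softmax_ab(q_ijᵀ k_ab + q_ijᵀ r_{a−i, b−j}) v_ab, with q = W_Q x, k = W_K x, v = W_V x. A minimal single-head sketch, assuming one embedding per relative offset (the paper concatenates separate row and column offset embeddings) and simply skipping neighbours outside the image; all weights are placeholders.

```python
import numpy as np

def local_self_attention(x, w_q, w_k, w_v, rel, k=3):
    """Single-head local self-attention with relative position embeddings.

    x: (H, W, C) input; w_q, w_k: (C, D); w_v: (C, Dv); rel: (k, k, D) embeddings
    indexed by the offset (a - i, b - j) shifted to be non-negative.
    """
    h, w, _ = x.shape
    q, kk, v = x @ w_q, x @ w_k, x @ w_v
    half = k // 2
    out = np.zeros((h, w, w_v.shape[1]))
    for i in range(h):
        for j in range(w):
            logits, values = [], []
            for da in range(-half, half + 1):
                for db in range(-half, half + 1):
                    a, b = i + da, j + db
                    if 0 <= a < h and 0 <= b < w:                       # skip out-of-image neighbours
                        content = q[i, j] @ kk[a, b]                    # q_ij . k_ab
                        position = q[i, j] @ rel[da + half, db + half]  # q_ij . r_{a-i,b-j}
                        logits.append(content + position)
                        values.append(v[a, b])
            logits = np.array(logits)
            weights = np.exp(logits - logits.max())
            weights /= weights.sum()                                    # softmax over the neighbourhood
            out[i, j] = weights @ np.array(values)
    return out
```

Unlike a convolution, the mixing weights here are computed from the content at each location, while the relative embeddings keep the operation aware of spatial layout.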

Experiments show that introducing this mechanism also improves the results significantly.

(3) Instance normalization is used in the encoder head, while batch normalization is kept in the decoder, which further improves accuracy.

The commonly used batch normalization normalizes each channel jointly over all images in a batch, whereas instance normalization normalizes each channel of each image independently. Comparative experiments verify the effectiveness of the instance normalization layers.
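The difference is just which axes the statistics are computed over. A minimal NumPy sketch for an (N, C, H, W) tensor, without the learnable affine parameters and without the running averages batch normalization uses at inference:

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    """Normalise each channel using statistics pooled over the batch and space."""
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def instance_norm(x, eps=1e-5):
    """Normalise each channel of each image independently (statistics over H, W only)."""
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)
```

A practical consequence: instance normalization's output for one image does not change when other images in the batch change, whereas batch normalization's does.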

(4) A single general model is trained with data from multiple cameras. However, the paper does not discuss the relationships between the cameras.

Please continue with the next article (Part II):

边栏推荐

- OKA通证权益解析,参与Okaleido生态建设的不二之选

- 伯克利博士『机器学习工程』大实话;AI副总裁『2022 ML就业市场』分析;半导体创业公司大列表;大规模视频人脸属性数据集;前沿论文 | ShowMeAI资讯日报

- @Wrap decorator

- Experiment the Arduino code of NTP network timing alarm clock with esp32+tm1638

- 【力扣】645.错误的集合

- Comprehensive sorting and summary of maskrcnn code structure process of target detection and segmentation

- 【学习记录】plt.show()闪退解决方法

- Multidimensional pivoting analysis of CDA level1 knowledge points summary

- leetcode1 --两数之和

- Word set paste to retain only text

猜你喜欢

leetcode--四数相加II

移动端网站,独立APP,网站排名策略有哪些?

Amd epyc 9664 flagship specification exposure: 96 core 192 threads 480MB cache 3.8ghz frequency

![[原创]九点标定工具之机械手头部相机标定](/img/de/5ea86a01f1a714462b52496e2869d6.png)

[原创]九点标定工具之机械手头部相机标定

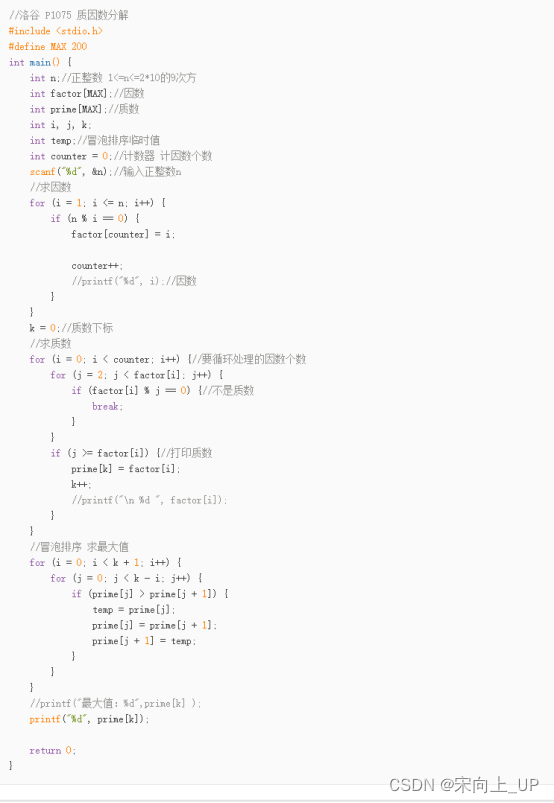

Brush questions - Luogu -p1075 prime factor decomposition

【目录爆破工具】信息收集阶段:robots.txt、御剑、dirsearch、Dirb、Gobuster

Construction and practice of yolov7 in hands-on teaching

Mysql表的操作

Applet H5 get mobile number scheme

Practice of online problem feedback module (13): realize multi parameter paging query list

随机推荐

[platform IO compile hifive1 revb] * * * [.pio\build\hifive1 revb\src\setupgpio.o] solution to error 1

Okaleido launched the fusion mining mode, which is the only way for Oka to verify the current output

Mxnet implementation of densenet (dense connection network)

Goldfish rhca memoirs: cl210 managing storage -- managing shared file systems

Interpretation of featdepth self-monitoring model for monocular depth estimation (Part I) -- paper understanding and core source code analysis

Applet starts wechat payment

AI model risk assessment Part 1: motivation

苹果手机端同步不成功,退出登录,结果再也登录不了

Applet H5 get mobile number scheme

DNS resolution error during windows unbutu20 lts apt, WGet installation

Goldfish rhca memoirs: cl210 management storage -- object storage

Lesson of C function without brackets

Construction and practice of yolov7 in hands-on teaching

Internal error of LabVIEW

Leetcode -- addition of four numbers II

Brush questions - Luogu -p1152 happy jump

sieve of eratosthenes

Experiment the Arduino code of NTP network timing alarm clock with esp32+tm1638

leetcode202---快乐数

LabVIEW的内部错误