Upgrading a data platform's scheduling system | A practical account of a smooth transition from Azkaban to Apache DolphinScheduler

2022-06-27 21:15:00 【Ink Sky Wheel】

Fordeal's data platform scheduling system was originally a customized build on top of Azkaban, but it had pain points at both the user level and the technical level that were hard to resolve. At the user level, it lacked essentials such as a visual task editor and backfill, which made it hard to learn and unpleasant to use. At the technical level, the architecture was outdated and hard to keep iterating on. After comparing and evaluating the alternatives, Fordeal decided to build the new version of its data platform scheduling system on Apache DolphinScheduler. How did the developers move users smoothly to the new system during the migration, and what did that take?

At the Apache DolphinScheduler online Meetup in May, Lu Dong, a big data development engineer at Fordeal, shared his practical experience with the platform migration.

About the speaker

Lu Dong is a big data development engineer at Fordeal with 5 years of experience in data development. His main technical interests include lakehouse architecture, MPP databases, and data visualization.

This talk has four parts:

Requirements analysis for Fordeal's data platform scheduling system

Adaptations made while migrating to Apache DolphinScheduler

Feature enhancements after the adaptation

Future plans

01

Requirements analysis

Fordeal's data platform scheduling system was first built as a customization of Azkaban. Once support for machine grouping, dynamic SHELL parameters, and dependency detection had been added, it barely met our requirements, but three kinds of problems remained in daily use: at the user level, the technical level, and the operations level.

First, at the user level, it lacked essential functions such as visual editing and backfill. Only technical colleagues could use the scheduling platform. Colleagues without that background made mistakes easily, and Azkaban's error reporting meant that developers had to step in to fix things.

Second, at the technical level, the architecture of Fordeal's scheduling system was very old and the front end and back end were not separated, so adding a feature through further customization was very difficult.

Third, and the biggest problem, was the operations level. From time to time a flow execution would get stuck. Fixing it meant logging in to the database, deleting the ID of the executing flow, and then restarting the Worker and API services, which was a cumbersome process.

So when Apache DolphinScheduler was open-sourced in 2019, we followed it closely and began to evaluate whether we could migrate. We assessed three products at the time, Apache DolphinScheduler, Azkaban, and Airflow, against five requirements.

A JVM language is preferred, because JVM languages have mature threading support and development documentation.

Airflow is based on Python, which would make it no different from our current system: non-technical colleagues could not use it.

Distributed architecture with HA support. Azkaban's workers are not distributed, and its web and master services are coupled together, so it is effectively a single node.

Workflows must support both a DSL and visual editing. Technical colleagues can write workflows in the DSL, while visual editing serves ordinary users and broadens the user base.

Front-end/back-end separation, following mainstream architecture. The front end and back end can be developed separately, and coupling drops once they are split.

Community activity. How active the community is also matters a great deal for development. If long-standing bugs all have to be fixed in-house, development efficiency drops sharply.

Our current data architecture is shown in the figure above. Apache DolphinScheduler covers the whole life cycle, from collection on HDFS and S3 to computation on K8S, with development based on Spark and Flink. DolphinScheduler and Zookeeper, on either side, serve as the base infrastructure. Our deployment is: Master x2, Worker x6, API x1 (serving the interfaces and so on). We currently average 3.5k workflow instances and 15k+ task instances per day. (The figure below is the architecture diagram for version 1.2.0.)

02

Adaptation and migration

Before Fordeal's internal system could go live for users, it had to be connected to several internal services, both to lower the barrier for users and to reduce operations work. There were three main systems.

Single sign-on system:

An SSO system based on JWT: log in once and be authenticated everywhere.

Work order system:

DS project authorization goes through work orders, avoiding manual operations work.

(All authorization actions are connected and automated.)

Alarm platform:

DS alerting is extended to send all alarm information to the internal alarm platform, where users can configure alerts by phone, enterprise WeChat, and other channels.

The three figures below show the login system, work order permissions, and enterprise WeChat alerts.

Azkaban flows are managed through a custom DSL. The number of nodes in a single flow configuration ranges from more than 800 down to just 1, and there are three main ways to update them.

The figure above shows the flow configuration files of some data warehouse projects. To migrate from Azkaban to Apache DolphinScheduler, we listed ten requirements in total.

The DS upload interface must support parsing Flow configuration files and generating workflows (including nested flows). A Flow configuration file is the equivalent of Azkaban's DAG file. Without built-in support, we have to write our own code to parse the configuration file and convert the Flow into JSON.

The DS resource center must support folders (to host all resources under an Azkaban project). Our version 1.2.0 had no folder feature at the time, and our data warehouse has many folders, so we had to add support.

DS provides a client package with basic data structure classes and utility classes, making it convenient to call the API and generate workflow configurations.

DS supports workflow concurrency control (run in parallel or skip).

DS time parameters need to support a configurable time zone (for example: dt=$[ZID_CTT yyyy-MM=dd=1]). Although most of the time zones we configure are overseas, users prefer to see Beijing time.

The DS run and deployment interfaces support overriding global variables. Because our version is old and features such as backfill are unavailable, we want users to be able to set the variables a workflow uses themselves.

The DS DAG view supports operations on multiple tasks.

DS task logs output the final executed content, making it easy for users to check and debug.

DS supports manually retrying failed tasks while the workflow is running. A data warehouse run usually takes several hours, and some tasks may fail because of code problems. We want to be able to retry manually without interrupting the flow, fixing the failed nodes one by one and retrying them, so that the final state is success.

Data warehouse projects need one-click migration that preserves users' working habits (Jenkins integrated with DS).

After continuous communication and rework with five or six teams, these ten requirements were finally met.
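The first requirement, turning a Flow configuration into a JSON workflow, can be sketched roughly as follows. This is only an illustration under stated assumptions: the talk does not show Fordeal's DSL, so the sketch assumes simple `key=value` node files with a comma-separated `dependencies` key, and the output shape (`tasks`, `edges`) is hypothetical rather than DolphinScheduler's actual workflow JSON. Nested flows are not handled.

```python
import json

def parse_job_properties(text):
    """Parse 'key=value' lines into a dict, skipping blanks and '#' comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props

def flow_to_workflow(name, job_files):
    """Convert {node_name: properties_text} into a JSON-ready workflow dict.

    The 'tasks'/'edges' layout is a hypothetical target format.
    """
    tasks, edges = [], []
    for node, text in job_files.items():
        props = parse_job_properties(text)
        raw_deps = props.get("dependencies", "")
        deps = [d.strip() for d in raw_deps.split(",") if d.strip()]
        for dep in deps:
            if dep not in job_files:
                raise ValueError(f"{node} depends on unknown node {dep}")
            edges.append({"from": dep, "to": node})
        tasks.append({
            "name": node,
            "type": props.get("type", "command"),
            "command": props.get("command", ""),
        })
    return {"name": name, "tasks": tasks, "edges": edges}

example = {
    "extract": "type=command\ncommand=sh extract.sh",
    "load": "type=command\ncommand=sh load.sh\ndependencies=extract",
}
workflow = flow_to_workflow("demo_flow", example)
print(json.dumps(workflow, indent=2))
```

Rejecting unknown dependencies at conversion time is a design choice in this sketch: with flows of 800+ nodes, a dangling reference is much easier to diagnose at upload than at run time.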

Moving completely from Azkaban to Apache DolphinScheduler took about a year, because it involved API users, git users, and users of many other features, and each project team raised its own requirements. While helping other teams migrate, we submitted 140+ optimization commits in total based on user feedback. Below is a word cloud of the commit categories.

03

Feature enhancement

Why did we refactor, and what were our pain points? We listed six.

First, the operation steps in Azkaban are too cumbersome. To find a workflow definition, a user has to open the project, find the workflow list on the project's first page, and then find the definition. There is no way to spot the wanted definition at a glance.

Second, workflow definitions and instances cannot be searched by name, group, or other conditions.

Third, workflow definitions and instance details cannot be shared by URL.

Fourth, the database tables and API are poorly designed: queries are sluggish, and long-transaction alarms occur often.

Fifth, layouts are hard-coded in many parts of the interface, for example with fixed widths, so added columns do not adapt well to computers and mobile phones.

Sixth, workflow definitions and instances lack batch operations. Every program fails sometimes, and retrying in batches became a headache for users.

Implementation

Develop a new front-end interface based on the AntDesign library.

De-emphasize the project concept. We do not want users to pay too much attention to projects, which now serve only as labels on workflows or instances.

The desktop version currently has only four entry points: home page, workflow list, execution list, and content center list. The mobile version has only two: workflow list and execution list.

Simplify the operation steps by putting the workflow list and execution list at the top-level entry.

Optimize query conditions and indexes, and add batch operation interfaces.

Add composite indexes.

Fully support both computers and mobile phones (apart from DAG editing, all functions are the same).

What is dependency scheduling? After a workflow instance or Task instance succeeds, it actively triggers the downstream workflow or Task to run (with the execution status "dependent execution"). Consider what happens without it. A downstream workflow has to set its own schedule time based on the schedule time of the upstream workflow. If an upstream run fails, the downstream scheduled run will also go wrong. When upstream data is backfilled, every downstream business owner has to be notified to backfill as well. It is hard to tune the time gap between upstream and downstream in the data warehouse, and compute cluster (K8S) resource utilization is not maximized, because jobs are not submitted continuously.

Concept diagram (workflows triggered layer by layer)

Dependency scheduling rules

Workflows support time-based scheduling, dependency-based scheduling, and a combination of the two (AND or OR).

Tasks within a workflow support dependency scheduling (not subject to timing restrictions).

Dependency scheduling requires a dependency cycle to be set. It triggers only when all dependencies are satisfied within that cycle.

The smallest unit for dependency scheduling is the Task. Dependencies on multiple workflows or Tasks are supported (AND relationship only).

A workflow is only a grouping concept in the execution tree. That is, it does not restrict Tasks.
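The cycle rule above (trigger only when every dependency has succeeded within the same cycle, AND only) can be sketched as follows. This is a minimal illustration, not Fordeal's implementation: the class name `DependencyTrigger`, the upstream names, and the choice to align cycles to the Unix epoch are all assumptions made for the example.

```python
from datetime import datetime, timedelta

class DependencyTrigger:
    """Fire a downstream task once every upstream succeeded in the same cycle."""

    def __init__(self, upstreams, cycle):
        self.upstreams = set(upstreams)
        self.cycle = cycle       # length of one dependency cycle
        self.succeeded = {}      # cycle start -> upstream names seen so far
        self.fired = set()       # cycle starts that already triggered

    def _cycle_start(self, when):
        # Align the timestamp to the start of its cycle (assumption: epoch-based).
        epoch = datetime(1970, 1, 1)
        periods = (when - epoch) // self.cycle
        return epoch + periods * self.cycle

    def on_success(self, upstream, when):
        """Record an upstream success; return True if downstream should run now."""
        if upstream not in self.upstreams:
            return False
        start = self._cycle_start(when)
        seen = self.succeeded.setdefault(start, set())
        seen.add(upstream)
        if seen == self.upstreams and start not in self.fired:
            self.fired.add(start)
            return True
        return False

trigger = DependencyTrigger({"ods_orders", "ods_users"}, timedelta(days=1))
first = trigger.on_success("ods_orders", datetime(2022, 6, 1, 2, 0))
second = trigger.on_success("ods_users", datetime(2022, 6, 1, 3, 30))
repeat = trigger.on_success("ods_users", datetime(2022, 6, 1, 4, 0))
next_day = trigger.on_success("ods_users", datetime(2022, 6, 2, 1, 0))
print(first, second, repeat, next_day)
```

In the usage lines, the downstream fires only on the second call, when both upstreams have succeeded on the same day. A repeated success in a cycle that already fired, or a success in a new cycle where the other upstream has not yet finished, does not trigger again.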

Workflow dependency details on mobile

We extended DS with more Task types, abstracting common functions and providing an editing interface to reduce the cost of use. The main additions are as follows.

Data open platform (DOP):

Mainly provides data import and export (supports Hive, Hbase, Mysql, ES, Postgre, Redis, S3).

Data quality: data validation developed on Deequ.

The validation is abstracted for users to use.

SQL-Presto data source: the SQL module supports the Presto data source.

Lineage data collection: built into every Task. Each Task exposes all the data needed for lineage.

For service monitoring under a Java+Spring architecture, the platform has a common set of Grafana dashboards, with monitoring data stored in Prometheus. Our principle is not to build monitoring inside the service but simply to expose the data, without reinventing the wheel. The changes are:

The API, Master, and Worker services integrate micrometer-registry-prometheus, collect general metrics, and expose a Prometheus scrape endpoint.

Collect state data from the Master and Worker execution thread pools, such as the workflow instances running on Master and Worker, database metrics, and so on, for later monitoring, optimization, and alerting (see the lower-right figure).

Configure service-status alerts on the Prometheus side, for example when the number of running workflow instances over a period is less than n (blocked), plus service memory and CPU alerts.
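To make "just expose the data" concrete, here is a small sketch of what a scrape endpoint returns and of the "fewer than n running instances" check. It is illustrative only: the services described above are Java and use micrometer-registry-prometheus, whereas this sketch renders the Prometheus text format by hand in Python. The metric names (`ds_master_running_workflows`, `ds_worker_exec_threads_active`) are hypothetical, and in practice the blocked check would be an alert rule evaluated by Prometheus rather than application code.

```python
def render_metrics(gauges):
    """Render {(name, ((label, value), ...)): number} as Prometheus text lines."""
    lines = []
    seen = set()
    for (name, labels), value in sorted(gauges.items()):
        if name not in seen:
            lines.append(f"# TYPE {name} gauge")
            seen.add(name)
        label_text = ",".join(f'{k}="{v}"' for k, v in labels)
        lines.append(f"{name}{{{label_text}}} {value}")
    return "\n".join(lines) + "\n"

def looks_blocked(samples, threshold):
    """True when every sample in the window is below the threshold."""
    return bool(samples) and max(samples) < threshold

# Hypothetical metric names, for illustration only.
gauges = {
    ("ds_master_running_workflows", (("host", "master-1"),)): 12,
    ("ds_worker_exec_threads_active", (("host", "worker-1"),)): 7,
}
text = render_metrics(gauges)
print(text)
print(looks_blocked([0, 1, 0], threshold=2))
```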

04

Future plans

Fordeal's current production version is a customization of the community's first Apache release (1.2.0). Through monitoring we also found several problems.

Database pressure and network IO cost are high.

Zookeeper acts as a queue, which from time to time causes disk IOPS to spike and is a hidden risk.

The Command consumption and Task distribution models are simple, leading to uneven machine load.

The scheduling model uses a lot of polling logic (Thread.sleep), so scheduling consumption, distribution, and detection are inefficient.

The community is developing rapidly, and the current architecture is more reasonable and easier to use, with many problems solved. What we have been watching recently: the Master dispatching tasks directly to Workers, which reduces the pressure on Zookeeper; Task types as plugins, which makes later extension easy; configurable or customizable distribution logic on the Master, which makes machine load more balanced; and a more complete fault-tolerance mechanism and operations tooling (graceful online and offline). Workers currently have no graceful online/offline function. To update a Worker now, we cut off its traffic and let its thread pool drain to zero before taking it online or offline, which is comparatively safe.
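As an illustration of what load-aware distribution logic might look like, the following sketch assigns each task to the worker with the fewest running tasks. It is a generic least-loaded strategy chosen for the example, not DolphinScheduler's actual dispatch algorithm, and the worker names and load counts are made up.

```python
import heapq

def dispatch(tasks, workers):
    """Assign each task to the worker that currently has the fewest tasks.

    workers: {worker_name: number of tasks already running}.
    Returns {task: worker_name}.
    """
    heap = [(load, name) for name, load in workers.items()]
    heapq.heapify(heap)
    assignment = {}
    for task in tasks:
        load, name = heapq.heappop(heap)       # least-loaded worker
        assignment[task] = name
        heapq.heappush(heap, (load + 1, name))  # account for the new task
    return assignment

# Hypothetical current load per worker.
workers = {"worker-1": 3, "worker-2": 0, "worker-3": 1}
assignment = dispatch(["a", "b", "c", "d"], workers)
print(assignment)
```

A real dispatcher would more likely weigh CPU, memory, or thread pool state than a plain task count, which is why configurable or custom logic on the Master is the attractive part.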

Currently only execution statistics for workflow instances are provided, which is fairly coarse-grained. Later we want more detailed statistics, such as statistics filtered by Task, statistics by execution tree, and analysis of the most time-consuming execution path (so that it can be optimized).

Next, add more data comparison functions, such as year-over-year and period-over-period threshold alerts on execution statistics. These are workflow-based alerts.

Once scheduling has stabilized through iteration, it will gradually serve as a base component, providing more convenient interfaces and embeddable windows (iframe) so that more upper-layer data applications (such as BI systems and early warning systems) can integrate with it for basic scheduling functions.
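The "most time-consuming path" analysis amounts to finding the longest chain of dependent tasks in the execution DAG. The sketch below shows one way to compute it. The function name, task names, and durations are invented for the example, and it assumes the graph is acyclic and small enough for simple recursion.

```python
def slowest_path(durations, upstream):
    """Return (total_seconds, path) for the most time-consuming chain in a DAG.

    durations: {task: seconds}; upstream: {task: [tasks it depends on]}.
    """
    best = {}  # task -> (total time of slowest chain ending here, previous task)

    def visit(task):
        if task not in best:
            prev_total, prev = 0, None
            for dep in upstream.get(task, []):
                total, _ = visit(dep)
                if total > prev_total:
                    prev_total, prev = total, dep
            best[task] = (prev_total + durations[task], prev)
        return best[task]

    end = max(durations, key=lambda t: visit(t)[0])
    path, node = [], end
    while node is not None:
        path.append(node)
        node = best[node][1]
    return best[end][0], path[::-1]

# Hypothetical task durations in seconds.
durations = {"extract": 300, "clean": 120, "join": 900, "report": 60}
upstream = {"clean": ["extract"], "join": ["extract"], "report": ["clean", "join"]}
total, path = slowest_path(durations, upstream)
print(total, path)
```

Here the chain through `join` dominates, so shortening `clean` would not reduce the end-to-end time. That is the kind of conclusion such an analysis is meant to support.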

That is all for my talk. Thank you for reading!

Participation and contribution

With the rapid rise of open source in China, the Apache DolphinScheduler community is thriving. To make scheduling better and easier to use, we sincerely welcome everyone who loves open source to join the community, contribute to the rise of open source in China, and help local open source go global.

There are many ways to participate in and contribute to the DolphinScheduler community, including:

We hope your first PR (documentation or code) is a simple one. The first PR is for getting familiar with the submission process and community collaboration, and for experiencing the friendliness of the community.

The community has compiled the following list of issues for newcomers: https://github.com/apache/dolphinscheduler/issues/5689

List of issues for non-newcomers: https://github.com/apache/dolphinscheduler/issues?q=is%3Aopen+is%3Aissue+label%3A%22volunteer+wanted%22

How to contribute: https://dolphinscheduler.apache.org/zh-cn/docs/development/contribute.html

The DolphinScheduler open source community needs your participation. Contribute to the rise of open source in China. Even one small tile adds up to enormous strength when combined with others.

By participating in open source you can exchange ideas with experts from all walks of life and quickly improve your skills. If you want to contribute, we have a contributor incubation group. Add the community assistant on WeChat (Leonard-ds) for hands-on guidance (contributors of every level are welcome and every question gets an answer; what matters is the willingness to contribute).

When adding the assistant on WeChat, please mention that you want to contribute.

The open source community is looking forward to your participation.

Recommended event

On June 18, 2022, the Apache DolphinScheduler community and the TiDB community will jointly hold a Meetup. We are honored to have invited senior big data engineers and developers from Alibaba Cloud, the cross-border e-commerce giant SHEIN, the TiDB community, and other companies to discuss the development practice of the two open source projects, covering databases, data scheduling, application development, and technology extensions.

Because of the epidemic, the event is again held as an online live stream. Registration is free and now open.

边栏推荐

- Zhongang Mining: the largest application field of new energy or fluorite

- A distribution fission activity is more than just a circle of friends

- 【STL编程】【竞赛常用】【part 3】

- 爱数课实验 | 第七期-基于随机森林的金融危机分析

- Navicat Premium连接问题--- Host ‘xxxxxxxx‘ is not allowed to connect to this MySQL server

- Installing services for NFS

- 基于微信小程序的高校党员之家服务管理系统系统小程序#毕业设计,党员,积极分子,学习,打卡,论坛

- 灵活的IP网络测试工具——— X-Launch

- 一场分销裂变活动,不止是发发朋友圈这么简单

- #夏日挑战赛# OpenHarmony HiSysEvent打点调用实践(L2)

猜你喜欢

Animal breeding production virtual simulation teaching system | Sinovel interactive

Practice of combining rook CEPH and rainbow, a cloud native storage solution

UOS prompts for password to unlock your login key ring solution

OpenSSL client programming: SSL session failure caused by an obscure function

低代码开发平台是什么?为什么现在那么火?

分享|智慧环保-生态文明信息化解决方案(附PDF)

Unity3D Button根据文本内容自适应大小

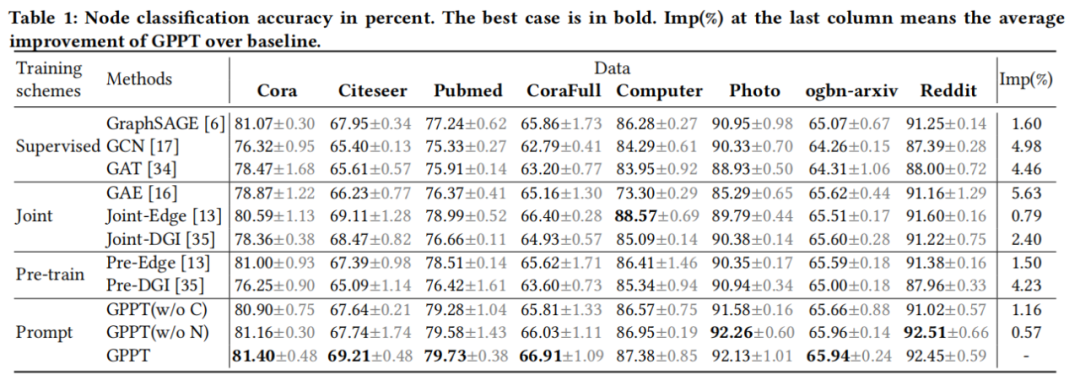

KDD 2022 | 图“预训练、提示、微调”范式下的图神经网络泛化框架

CocosCreator播放音频并同步进度

一套系统,减轻人流集中地10倍的通行压力

随机推荐

[STL programming] [common competition] [Part 3]

2021全球独角兽榜发布:中国301家独角兽企业全名单来了!

展现强劲产品综合实力 ,2022 款林肯飞行家Aviator西南首秀

关于企业数字化的展望(38/100)

爱数课实验 | 第八期-新加坡房价预测模型构建

Love number experiment | Issue 7 - Financial Crisis Analysis Based on random forest

Openharmony hisysevent dotting and calling practice of # Summer Challenge (L2)

覆盖接入2w+交通监测设备,EMQ 为深圳市打造交通全要素数字化新引擎

教程|fNIRS数据处理工具包Homer2下载与安装

Installing services for NFS

【STL编程】【竞赛常用】【part 3】

动物养殖生产虚拟仿真教学系统|华锐互动

划重点!国产电脑上安装字体小技巧

麒麟V10安装字体

1030 Travel Plan

VMware vSphere ESXi 7.0安装教程

Oracle architecture summary

Love math experiment | phase 9 - intelligent health diagnosis using machine learning method

The meta universe virtual digital human is closer to us | Sinovel interaction

通过CE修改器修改大型网络游戏