当前位置:网站首页>Spam filtering challenges

Spam filtering challenges

2022-07-01 11:05:00 【I'm not zzy1231a】

Spam filtering challenges

With the gradual development of network applications , E-mail has become an integral part of people's daily work and life . meanwhile , The problem of spam plagues many e-mail users , They not only bring reading burden to e-mail users , It also takes up limited mailbox space . Therefore, this study proposes a mail classification method based on multi-layer perceptron , Used to classify unknown messages , Detect and identify spam .

Goal question : Solve the two classification problem of spam filtering

The dataset includes 3017 mail , The training set includes 2082 Normal sealing ,935 Seal garbage , The data set is at the end of this article

TF-IDF modular

TF-IDF It is a statistical method of word frequency , Suitable for solving classification problems , It is mainly used to evaluate the importance of a word to a document set or one of the documents in a corpus . If the frequency of a word in an article TF high , And it's rarely seen in other articles , It is believed that this word or phrase has a good ability of classification . In the process of transforming text data into feature vectors , The commonly used text feature representation is word bag method , That is, we don't consider the order of words , Each of the words that appear as a separate set of features , These unique words are called thesaurus , Every text can count a lot of columns of eigenvectors on a long vocabulary , If every text has words , Usually marked as “ Stop words ” Not included in the eigenvector . This article USES the SKlearn Machine learning library TfidfVectorizer Method , Compare with CountVectorizer,TfidfVectorizer In addition to considering the frequency of a word in the text , Also look at the number of texts that contain the word . It can reduce the impact of high-frequency meaningless words , Mining more meaningful features .

MLP Multilayer perceptron module

MLP It is the most common feedforward artificial neural network model , In addition to the I / O layer , It can have multiple hidden layers in the middle , The simplest MLP Only one hidden layer , That is, the three-tier structure . The layers of multi-layer perceptron are fully connected . The bottom layer of multi-layer perceptron is the input layer , In the middle is the hidden layer , Finally, the output layer . Suppose you input a n Dimension vector , There is n Neurons , The regression function is softmax. The neurons in the hidden layer are fully connected with the input layer , Suppose the input layer uses a vector X Express , The output of the hidden layer is f(W1X+b1),W1 Weight. ( Also called connection coefficient ),b1 It's bias , function f Can be common sigmoid Function or tanh function . The output of the output layer is softmax(W2X1+b2),X1 Represents the output of the hidden layer f(W1X+b1). therefore ,MLP All the parameters are the connection weights and offsets between the layers , The parameters of multilayer perceptron set in this project are as follows : The activation function is the default relu function ; Optimizer selection lbfgs(quasi-Newton Method optimizer ) Used to optimize weights ;alpha Set to 1e-5; The number of hidden layers is 2, Each floor has its own 50 Neurons ; The maximum number of iterations is 100.

Source code

import os

import re

from html import unescape

from re import T

import numpy as np

from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

from sklearn.model_selection import train_test_split

from sklearn.naive_bayes import MultinomialNB

from sklearn.neural_network import MLPClassifier

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score, classification_report

def html_div(html):

text = re.sub('<head.*?>.*?</head>', '', html, flags=re.M | re.S | re.I)

text = re.sub('<a\s.*?>', ' HYPERLINK ', text, flags=re.M | re.S | re.I)

text = re.sub('<.*?>', '', text, flags=re.M | re.S)

text = re.sub(r'(\s*\n)+', '\n', text, flags=re.M | re.S)

return unescape(text)

def main(cv=1):

path_spam = 'C:/cmail/trainspam/'

path_ham = 'C:/cmail/trainham/'

path_test = 'C:/cmail/test/'

dataset_x_train = []

dataset_y_train = []

dataset_file_train = []

for filename in list(os.listdir(path_spam)):

f = open(path_spam + filename, encoding='ISO-8859-1')

cont = f.read()

content = html_div(cont)

dataset_x_train.append(content)

dataset_y_train.append(1)

dataset_file_train.append(filename)

for filename in list(os.listdir(path_ham)):

f = open(path_ham + filename, encoding='ISO-8859-1')

cont = f.read()

content = html_div(cont)

dataset_x_train.append(content)

dataset_y_train.append(0)

dataset_file_train.append(filename)

dataset_x = np.array(dataset_x_train)

dataset_y = np.array(dataset_y_train)

dataset_file = np.array(dataset_file_train)

x_train_data, y_train_data, file_train_data = dataset_x, dataset_y, dataset_file

dataset_x_test = []

dataset_file_test = []

for filename in list(os.listdir(path_test)):

f = open(path_test + filename, encoding='ISO-8859-1')

cont = f.read()

content = html_div(cont)

dataset_x_test.append(content)

dataset_file_test.append(filename)

dataset_x_test = np.array(dataset_x)

dataset_file_test = np.array(dataset_file)

x_test_data, file_test_data = dataset_x_test, dataset_file_test

vectorizer = TfidfVectorizer(min_df=2, max_df=0.6, ngram_range=(1, 2), stop_words='english',

strip_accents='unicode', norm='l2')

# max_df: It can be set in the range of [0.0 1.0] Of float, There is no limit to the scope of int, The default is 1.0. This parameter is used as a threshold , When constructing a corpus keyword set , If there's a word document frequence Greater than max_df, This word will not be used as a keyword . If this parameter is float, The percentage between the number of words and the number of corpus documents , If it is int, The number of times a word appears . If the parameter has been given vocabulary, This parameter is invalid .

# min_df: Be similar to max_df, The difference is that if a word is document frequence Less than min_df, Then the word will not be used as a key word .

# ngram_range: for example ngram_range(min,max), It means to be text Divide into min,min+1,min+2,.........max Two different phrases . such as ' I Love China ' in ngram_range(1,3) And then you get ' I ' ' Love ' ' China ' ' I Love ' ' Love China ' and ' I Love China ', If it is ngram_range (1,1) You can only get a single word ' I ' ' Love ' and ' China '.

# stop_words: Set stop words , Set to english Built in English stop words will be used

#

train_x_vec = vectorizer.fit_transform(x_train_data)

test_x_vec = vectorizer.transform(x_test_data)

acc = 0.0

# Split the test set to test the accuracy of the model

train_x, test_x, train_y, test_y = train_test_split(train_x_vec, y_train_data, test_size=0.2)

mlp = MLPClassifier(solver='lbfgs', alpha=1e-5, hidden_layer_sizes=(50, 50), random_state=0, max_iter=100)

mlp.fit(train_x_vec, y_train_data)

prediction_mlp = mlp.predict(test_x_vec)

# mlp.fit(train_x, train_y)

# prediction_mlp_test = mlp.predict(test_x)

acc = format(accuracy_score(test_y, prediction_mlp))

print('acc: ', format(accuracy_score(test_y, prediction_mlp)))

# File is written to

for i in range(len(x_test_data)):

with open('C:/cmail//re.txt', 'a', encoding='ISO-8859-1') as f:

if int(prediction_mlp[i]) == 0:

text = 'ham ' + file_test_data[i]

else:

text = 'spam ' + file_test_data[i]

f.write(text + '\n')

return acc

if __name__ == '__main__':

# Ten fold cross validation The training set opens on April 1

# n = 1

# avg_acc = 0.0

# for i in range(1, n + 1):

# print('cv {}:'.format(i))

# b_acc = main(i)

# avg_acc = avg_acc + float(b_acc)

# avg_acc /= n

# print('avg acc:'.avg_acc)

Dataset Links

Mail dataset

link :https://pan.baidu.com/s/1ncsf4SiqMc0bfGpgKvzOvw

Extraction code :spwd

边栏推荐

- Win平台下influxDB导出、导入

- Oracle和JSON的结合

- Submission lottery - light application server essay solicitation activity (may) award announcement

- Is it safe to open a stock account online in 2022? Is there any danger?

- Addition, deletion, modification and query of database

- Suggest collecting | what to do when encountering slow SQL on opengauss?

- 中国探月工程独家藏品限量发售!

- The idea runs with an error command line is too long Shorten command line for...

- JS基础--数据类型

- Dotnet console uses microsoft Maui. Getting started with graphics and skia

猜你喜欢

Huawei HMS core joins hands with hypergraph to inject new momentum into 3D GIS

Yoda unified data application -- Exploration and practice of fusion computing in ant risk scenarios

使用强大的DBPack处理分布式事务(PHP使用教程)

kubernetes之ingress探索实践

12款大家都在用的產品管理平臺

Neurips 2022 | cell image segmentation competition officially launched!

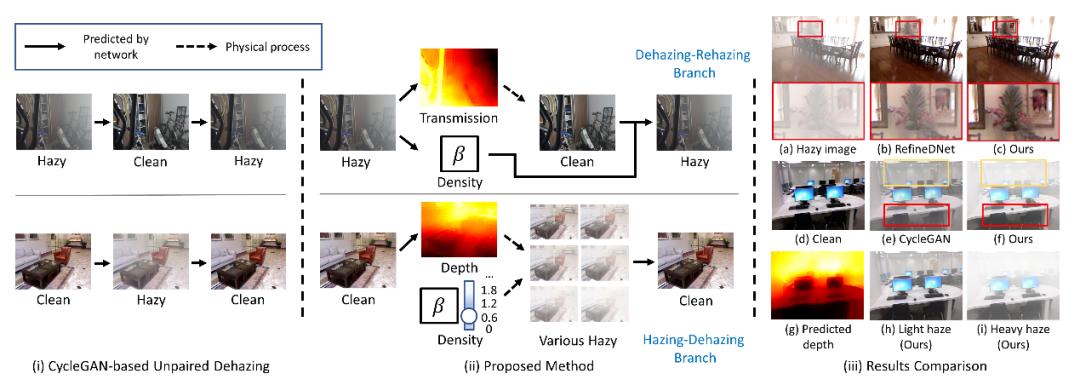

CVPR 2022 | self enhanced unpaired image defogging based on density and depth decomposition

【MPC】②quadprog求解正定、半正定、负定二次规划

关于Keil编译程序出现“File has been changed outside the editor,reload?”的解决方法

Suggest collecting | what to do when encountering slow SQL on opengauss?

随机推荐

[AI information monthly] 350 + resources! All the information and trends that can't be missed in June are here! < Download attached >

Uncover the secrets of new products! Yadi Guanneng 3 multi product matrix to meet the travel needs of global users

LeetCode. 515. Find the maximum value in each tree row___ BFS + DFS + BFS by layer

2022年6月编程语言排行,第一名居然是它?!

Win平台下influxDB导出、导入

Addition, deletion, modification and query of database

JS foundation -- data type

BAIC bluevale: performance under pressure, extremely difficult period

使用强大的DBPack处理分布式事务(PHP使用教程)

华为HMS Core携手超图为三维GIS注入新动能

毕业季·进击的技术er

Huawei Equipment configure les services de base du réseau WLAN à grande échelle

NC | intestinal cells and lactic acid bacteria work together to prevent Candida infection

Ask everyone in the group about the fact that the logminer scheme of flick Oracle CDC has been used to run stably in production

LeetCode.每日一题 剑指 Offer II 091. 粉刷房子 (DP问题)

[.NET6]使用ML.NET+ONNX预训练模型整活B站经典《华强买瓜》

CVPR 2022 | 基于密度与深度分解的自增强非成对图像去雾

442. duplicate data in array

关于Keil编译程序出现“File has been changed outside the editor,reload?”的解决方法

华为设备配置大型网络WLAN基本业务