
How to use data pipeline to realize test modernization

2022-07-26 11:39:00 【51CTO】

Enterprises need to understand how data synthesis and data pipelines can provide a scalable way to create consistent data that meets the real needs of their test systems.

Many enterprises are now drowning in data. They collect it from many sources and look for ways to use it to advance business goals. One way to tackle this is to use data pipelines to connect to data sources and transform the data into a form that endpoints can use.

While that is part of the ongoing struggle to put enterprise data to work, there is also a constant need to provide good data sets for testing. Enterprises need these data sets to test applications and systems across their entire architecture. They also need data sets tailored to specific areas of testing, such as security and quality assurance.

Creating synthetic data is a very practical need. In short, it means finding a way to create fictitious, or fake, data that is consistent and resembles what the systems under test actually require. The rest of this article looks at data pipelines and explores how enterprises can use them to start creating their own synthetic data for testing.

PART 01 Data pipelines and testing

A very simple definition of a data pipeline is "a series of data processing elements, where the output of one element is the input of the next." Put even more simply, pipelines are the basic connections that pull data from a source so it can be analyzed, transformed, and then used by the enterprise.
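The definition above can be sketched in a few lines of Python. This is only an illustration of chaining stages so that each one consumes the previous one's output; the stage names (`drop_incomplete`, `normalize_email`) and the record fields are hypothetical and not taken from any particular pipeline product.

```python
from functools import reduce
from typing import Callable, Iterable

Record = dict
Stage = Callable[[Iterable[Record]], Iterable[Record]]


def run_pipeline(source: Iterable[Record], stages: list[Stage]) -> list[Record]:
    """Feed the output of each stage into the next one, in order."""
    return list(reduce(lambda data, stage: stage(data), stages, source))


def drop_incomplete(records: Iterable[Record]) -> Iterable[Record]:
    # Cleaning stage (hypothetical): keep only records that have an email.
    return (r for r in records if r.get("email"))


def normalize_email(records: Iterable[Record]) -> Iterable[Record]:
    # Transformation stage (hypothetical): trim and lower-case the email.
    return ({**r, "email": r["email"].strip().lower()} for r in records)


raw = [
    {"name": "Ada", "email": " Ada@Example.COM "},
    {"name": "Bob", "email": None},
]
result = run_pipeline(raw, [drop_incomplete, normalize_email])
```

Because every stage has the same shape (records in, records out), stages can be added, removed, or reordered without touching the others.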

A data pipeline starts by retrieving data. It can use programmable interfaces such as application programming interfaces (APIs), or data streaming and event processing interfaces, to extract the required data from SQL databases and other platforms.
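As a rough sketch of the retrieval step against a SQL source, the example below uses Python's built-in `sqlite3` module. The in-memory database, the `customers` table, and its columns are assumptions made up for illustration; a real pipeline would connect to the enterprise's own source system.

```python
import sqlite3


def extract_customers(conn: sqlite3.Connection) -> list[dict]:
    """Pull rows from a SQL source and return them as plain dictionaries."""
    conn.row_factory = sqlite3.Row  # rows become addressable by column name
    rows = conn.execute("SELECT id, name, phone FROM customers ORDER BY id")
    return [dict(row) for row in rows]


# In-memory database standing in for a real source system (assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, phone TEXT)"
)
conn.executemany(
    "INSERT INTO customers (name, phone) VALUES (?, ?)",
    [("Ada Lovelace", "555-0100"), ("Bob Stone", "555-0101")],
)
customers = extract_customers(conn)
```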

Once the data is retrieved, it can be transformed to meet the needs of end users. This can mean generating data through an API, or building data by cleaning up or restructuring what was retrieved. Finally, for security reasons, the data can be anonymized before it is presented to end users.
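One possible way to handle the last step is to replace identifying values with stable tokens and mask the rest. The sketch below assumes records with `name` and `phone` fields and a hypothetical `SALT` value. Strictly speaking, salted hashing is pseudonymization rather than full anonymization, so treat it as a starting point only.

```python
import hashlib

SALT = b"test-env-salt"  # hypothetical value; a real setup would keep it secret


def pseudonymize(value: str) -> str:
    """Replace a value with a stable token derived from a salted SHA-256 hash."""
    digest = hashlib.sha256(SALT + value.encode("utf-8")).hexdigest()
    return digest[:12]


def mask_phone(phone: str) -> str:
    """Keep only the last four digits of a phone number."""
    digits = [c for c in phone if c.isdigit()]
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


def anonymize(record: dict) -> dict:
    # Return a copy so the original record is left untouched.
    return {
        **record,
        "name": pseudonymize(record["name"]),
        "phone": mask_phone(record["phone"]),
    }


safe = anonymize({"id": 1, "name": "Ada Lovelace", "phone": "555-0100"})
```

The same input always yields the same token, so relationships between records survive the transformation even though the original names do not.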

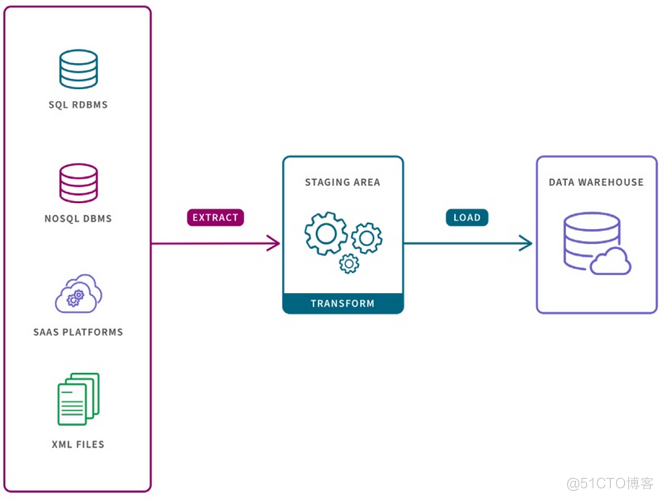

These are just a few examples of how data pipelines can be used as part of the testing process. Figure 1 shows a simple example of a data pipeline running from the sources to a final data warehouse, where the data is stored for further use.

Figure 1

Testing requires enterprises to supply data sets to the system, application, or code snippet under test. These data sets can be created manually, copied from existing data sets, or generated for the test team to use.

Creating test data manually can work for very small data sets, but it becomes cumbersome when large data sets are needed. Copying data sets from an existing environment (production to test) raises security and privacy issues if the data contains sensitive elements. Generating data based on existing data can give good results.

But what if an enterprise wants to generate data at scale, keep it secure by delivering anonymized results, and stay flexible about what is generated? This is where data synthesis comes in: it lets enterprises generate data with the flexibility they need.

PART 02 Data synthesis for beginners

Generating synthetic data makes it possible to provide large volumes of data while taking care of sensitive data elements. Synthetic data can be based on key data dimensions such as names, addresses, phone numbers, account numbers, Social Security numbers, credit card numbers, identifiers, and driver's license numbers.

Synthetic data is defined as fake or created data, but it is usually based on real data that is extended to create larger, more realistic data sets for testing. The generated test data is then made available to business users and developers across the enterprise in a secure and scalable way.
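A minimal sketch of this idea, assuming small seed lists stand in for values derived from real data: a seeded random generator expands them into as many fictitious records as a test needs. The field names and seed lists are hypothetical.

```python
import random

# Hypothetical seed values; in practice these could be derived from real data.
FIRST_NAMES = ["Ada", "Grace", "Alan", "Linus"]
LAST_NAMES = ["Stone", "Rivers", "Field", "Brook"]


def synthetic_person(rng: random.Random) -> dict:
    """Build one fictitious person record from the seed lists."""
    return {
        "name": f"{rng.choice(FIRST_NAMES)} {rng.choice(LAST_NAMES)}",
        # 555-0100 through 555-0199 are reserved for fictional use.
        "phone": f"555-01{rng.randint(0, 99):02d}",
        "account_number": f"{rng.randrange(10**9):09d}",
    }


def generate(count: int, seed: int = 42) -> list[dict]:
    # A fixed seed makes the generated data set reproducible across test runs.
    rng = random.Random(seed)
    return [synthetic_person(rng) for _ in range(count)]


people = generate(1000)
```

Seeding the generator is a deliberate choice here: a failing test can be rerun against exactly the same data.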

Synthetic data has a wide range of uses in any enterprise, whether in healthcare, finance, manufacturing, or any other area that adopts new technology to meet business needs. Its most direct uses are in continuous testing, security, and quality assurance practices that support implementation, application development, integration, and data science work.

With data synthesis, enterprises can not only provide data sets at scale but also keep data consistent across multiple domains, while delivering usable data in real-world formats. It gives developers, architects, and data architects a consistent way to test with data across the enterprise.

PART 03 Introduction to data synthesis

The best way to discover what data synthesis can offer is to explore the most common usage patterns and then dive into an open source project to gain hands-on experience. There are two simple patterns for getting started with data synthesis, in a cloud native environment and through a cloud native API, as shown in Figure 2.

Figure 2

The first pattern runs the data synthesis platform in a single container on the enterprise's cloud platform of choice and uses an API to extract data from sources inside that container, such as applications or databases. The second deploys the data synthesis platform on the chosen cloud platform and uses a cloud native API to extract data from any source, such as an external standalone data source.

Data synthesis shines in the following use cases:

- Retrieving the data needed within the platform (SQL)

- Data retrieval (API)

- Data generation (API)

- Building more fictitious data on demand or on a schedule

- Data building

- Building more structured data, on demand or on a schedule, from fictitious data

- Creating structured or unstructured data that meets your needs

- Streaming industry-centric data

- Processing various industry-standard data with data pipelines

- De-identification and anonymization, providing real-world attributes by parsing and populating from real-time systems

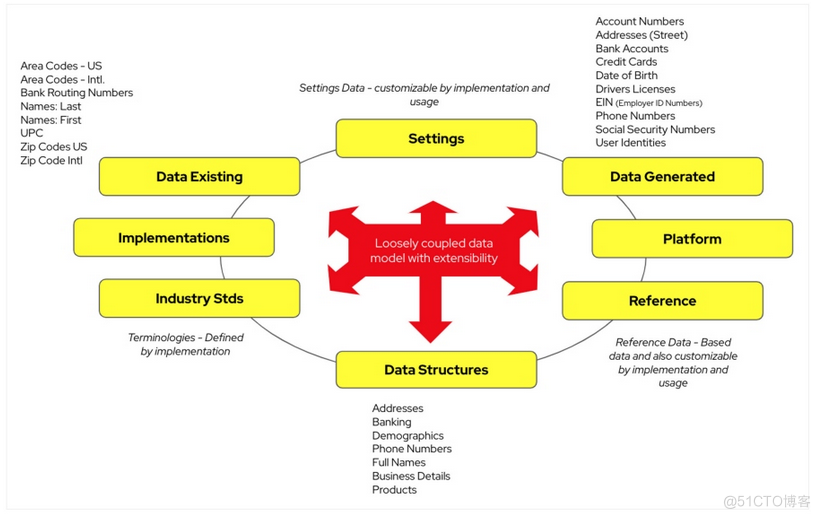

Covering each of these use cases is beyond the scope of this article, but the list gives a good idea of where data synthesis applies to testing. An overview of the data layer for data synthesis is shown in Figure 3.

This overview shows the data layer and how the platform ties its parts together, using data fields from across the United States (ZIP codes and area codes) as an example. At the center of Figure 3 are loosely coupled data models that can be extended as needed. They form the core foundation for accessing existing data, implementation data, and industry-standard data. This foundation can be populated with data and adjusted to fit the data structures that already exist in the enterprise. The output consists of generated data, reference data, and platform-specific data.
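To make the ZIP code and area code example more concrete, here is a small sketch of loosely coupled reference data feeding generated records. The `ZipCode` and `AreaCode` classes, the join on state, and the handful of sample rows are assumptions for illustration only; they are not the actual schema of any data synthesis platform.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class ZipCode:
    code: str
    city: str
    state: str


@dataclass(frozen=True)
class AreaCode:
    code: str
    state: str


# Illustrative reference rows only; a real platform would load full data sets.
ZIP_CODES = [ZipCode("10001", "New York", "NY"), ZipCode("60601", "Chicago", "IL")]
AREA_CODES = [AreaCode("212", "NY"), AreaCode("312", "IL")]


def generate_contact(rng: random.Random) -> dict:
    """Generate a contact whose phone area code matches the address state."""
    zip_row = rng.choice(ZIP_CODES)
    # The two reference sets are linked only through the shared state value.
    candidates = [a for a in AREA_CODES if a.state == zip_row.state]
    area = rng.choice(candidates)
    return {
        "city": zip_row.city,
        "state": zip_row.state,
        "zip": zip_row.code,
        "phone": f"({area.code}) 555-01{rng.randint(0, 99):02d}",
    }


rng = random.Random(7)
contacts = [generate_contact(rng) for _ in range(20)]
```

Keeping the reference sets separate and joining them on a shared field means either one can be extended or replaced without changing the other.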

After this short tour of data synthesis, the next step is to explore the open source project called Project Herophilus, where enterprises can start working with a data synthesis platform.

There, enterprises will find the key starting points for data synthesis:

- Data layer: designed to be extensible and to support all the needs of the platform.

- Data layer APIs: these APIs serve user requests; the set is all about generating data and persisting it to the data layer.

- Web UI(s): intended as a minimum viable product for viewing the data layer that the enterprise has implemented for data synthesis.
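The "generate and persist" role of the data layer APIs can be pictured with a short sketch. This is not Project Herophilus code or its API; the function name `generate_and_persist`, the `generated_accounts` table, and the use of an in-memory SQLite database are all assumptions chosen to keep the example self-contained.

```python
import random
import sqlite3


def generate_and_persist(conn: sqlite3.Connection, count: int, seed: int = 0) -> int:
    """Generate fictitious account numbers and store them; return the row total."""
    rng = random.Random(seed)
    rows = [(f"{rng.randrange(10**9):09d}",) for _ in range(count)]
    with conn:  # commits on success, rolls back if an error is raised
        conn.execute(
            "CREATE TABLE IF NOT EXISTS generated_accounts ("
            "id INTEGER PRIMARY KEY, account_number TEXT NOT NULL)"
        )
        conn.executemany(
            "INSERT INTO generated_accounts (account_number) VALUES (?)", rows
        )
    return conn.execute("SELECT COUNT(*) FROM generated_accounts").fetchone()[0]


# In-memory database standing in for a real data layer (assumption).
conn = sqlite3.connect(":memory:")
total = generate_and_persist(conn, 250)
```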

The three modules in the data synthesis project should help enterprises quickly start developing test data sets .

边栏推荐

- ESP8266-Arduino编程实例-开发环境搭建(基于PlatformIO)

- 贝尔曼期望方程状严谨证明

- 【万字长文】使用 LSM-Tree 思想基于.Net 6.0 C# 实现 KV 数据库(案例版)

- 【万字长文】使用 LSM-Tree 思想基于.Net 6.0 C# 实现 KV 数据库(案例版)

- Which is faster to open a file with an absolute path than to query a database?

- PostgreSQL在Linux和Windows安装和入门基础教程

- Back to the top of several options (JS)

- Meiker Studio - Huawei 14 day Hongmeng equipment development practical notes 8

- [error reported]exception: found duplicate column (s) in the data schema: `value`;

- 大咖观点+500强案例,软件团队应该这样提升研发效能!

猜你喜欢

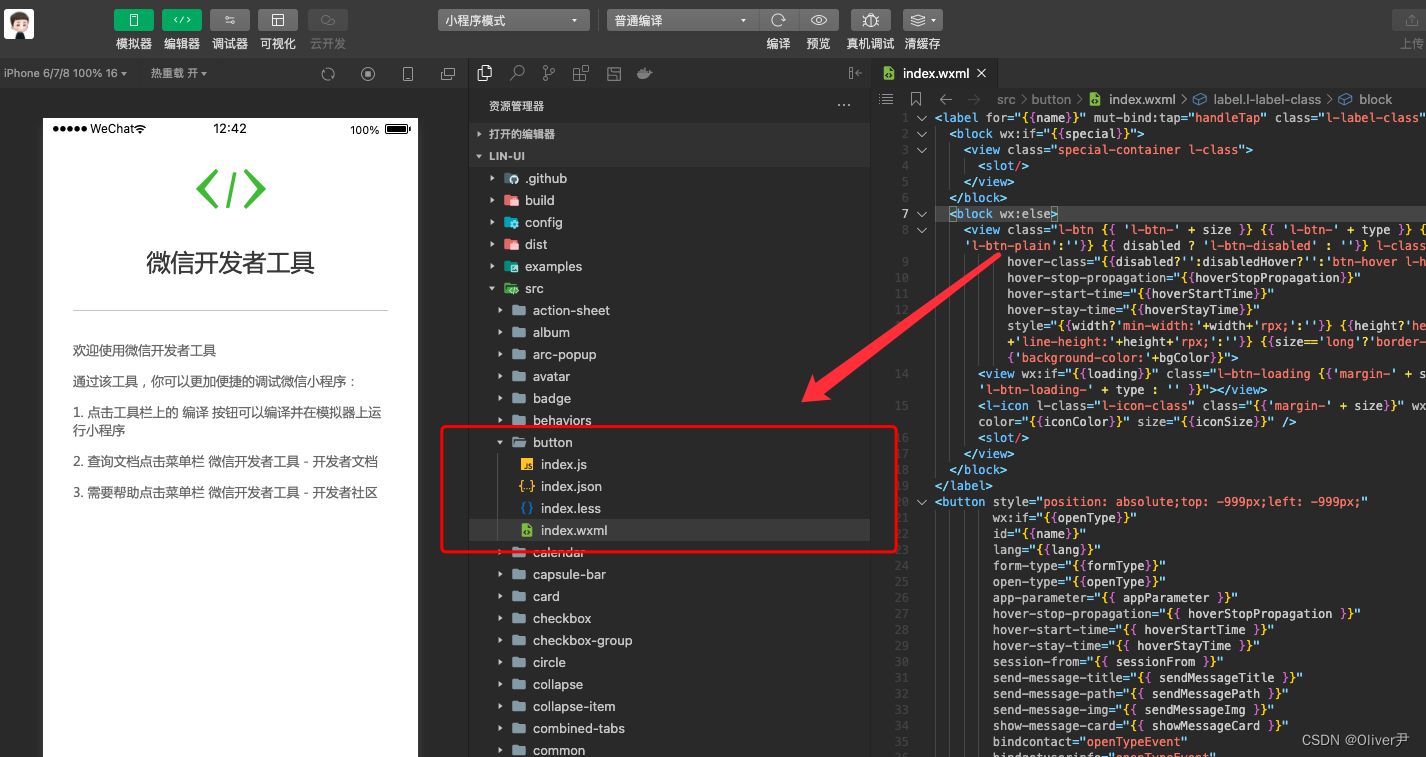

《微信小程序-进阶篇》Lin-ui组件库源码分析-Button组件(一)

如何使用数据管道实现测试现代化

MySQL deadlock analysis

36.【const函数放在函数前后的区别】

Practice of microservice in solving Library Download business problems

社区点赞业务缓存设计优化探索

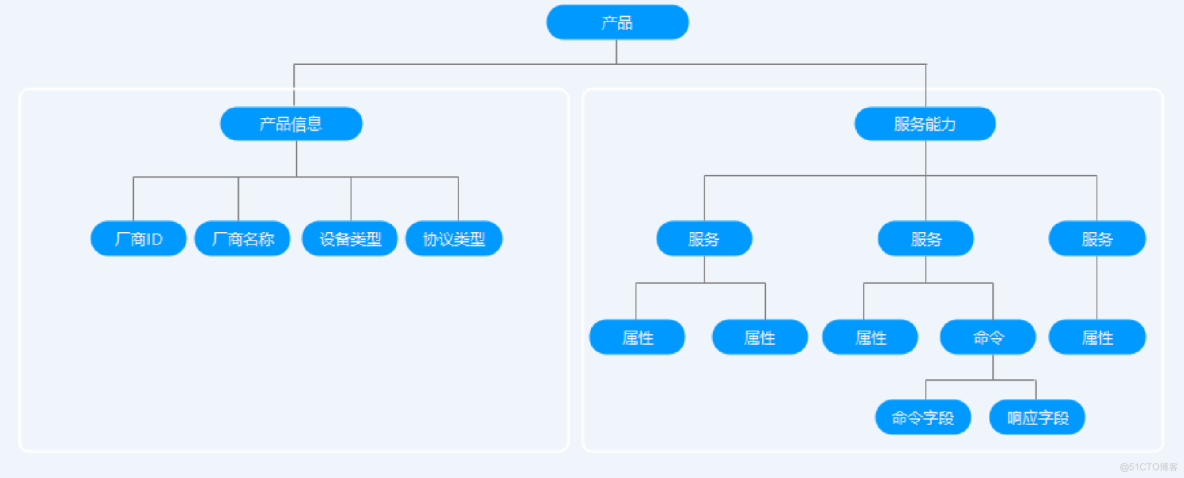

Data center construction (II): brief introduction to data center

梅科尔工作室-华为14天鸿蒙设备开发实战笔记八

![[vscode]如何远程连接服务器](/img/b4/9a80ad995bd589596d8b064215b55a.png)

[vscode]如何远程连接服务器

ESP8266-Arduino编程实例-开发环境搭建(基于PlatformIO)

随机推荐

Hal library IIC simulation in punctual atom STM32 `define SDA_ IN() {GPIOB->MODER&=~(3<<(9*2));GPIOB->MODER|=0<<9*2;}` // PB9 input mode

"Mongodb" mongodb high availability deployment architecture - replica set

Pyqt5 rapid development and practice Chapter 1 understanding pyqt5

Pytorch——基于mmseg/mmdet训练报错:RuntimeError: Expected to have finished reduction in the prior iteration

梅科尔工作室-华为14天鸿蒙设备开发实战笔记八

[reprint] the multivariate normal distribution

Why give up NPM and turn to yarn

查询进阶 别名

X 2 earn must rely on Ponzi startup? Where is the way out for gamefi? (top)

ORBSLAM2 CmakeLists文件结构解析

Data center construction (II): brief introduction to data center

Can SAIC mingjue get out of the haze if its products are unable to sell and decline

【通信原理】第一章 -- 绪论

FINEOS宣布2022年GroupTech Connect活动开放注册

安科瑞余压监控系统在住宅小区的应用方案

元宇宙GameFi链游系统开发NFT技术

Exploration on cache design optimization of community like business

Ten year structure five year life-06 impulse to leave

Real time streaming protocol --rtsp

Rigorous proof of Behrman's expectation equation