
TensorFlow custom training function

2022-07-30 15:32:00 【u012804784】


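This article records a template for a custom training function in TensorFlow and briefly describes the advantages and disadvantages of using one.

Note first that the template recorded here is based on the answers to https://stackoverflow.com/questions/59438904/applying-callbacks-in-a-custom-training-loop-in-tensorflow-2-0 and on Section 12.3.9 of Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow. Corrections of any errors or omissions are welcome.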

Why and when you need a custom training function

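Unless you really need the extra flexibility, you should prefer the fit() method to implementing your own loop, especially when working in a team.

If you are still wondering why you would need a custom training function, you do not need one yet. Usually it is only when building a model with an unusual structure that model.fit() turns out not to fully meet our needs. Even then, the first thing to try is reading the relevant TensorFlow source code for parameters or methods you were not aware of; only after that should you consider a custom training function. Without question, a custom training function makes the code longer, harder to maintain, and harder to understand.

However, a custom training function offers flexibility that fit() cannot match. For example, in a custom function you can implement a training loop that uses several different optimizers, or a validation loop computed over several datasets.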
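As a minimal sketch of the multi-optimizer case, the step below updates two parts of a model with two different optimizers in a single backward pass. The `body`/`head` split, the layer sizes, and the learning rates are assumptions chosen for illustration; they do not come from the original template.

```python
import tensorflow as tf
from tensorflow import keras

# Hypothetical two-part model: a "body" and a "head" trained at different rates.
body = keras.layers.Dense(4, activation="tanh")
head = keras.layers.Dense(1)
body_optimizer = keras.optimizers.SGD(learning_rate=0.01)
head_optimizer = keras.optimizers.Adam(learning_rate=0.001)
loss_fn = keras.losses.MeanSquaredError()


def train_step(x, y):
    with tf.GradientTape() as tape:
        loss = loss_fn(y, head(body(x)))
    # The layers are built by the forward pass above, so their variables exist now.
    body_vars = body.trainable_variables
    head_vars = head.trainable_variables
    # One backward pass; gradients come back in the order of the variable list.
    grads = tape.gradient(loss, body_vars + head_vars)
    body_optimizer.apply_gradients(zip(grads[:len(body_vars)], body_vars))
    head_optimizer.apply_gradients(zip(grads[len(body_vars):], head_vars))
    return loss


# Random data, only to exercise the step.
x = tf.random.normal((8, 3))
y = tf.random.normal((8, 1))
losses = [float(train_step(x, y)) for _ in range(3)]
```

Splitting one gradient list by position avoids a second backward pass; a persistent tape with two `tape.gradient` calls would be an alternative.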

Custom training function template

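The template is designed so that a custom training function can be completed quickly by reusing code blocks and filling in the key parts. This lets us focus on the structure of the training function itself rather than on minor details (such as handling a training set of unknown length), while still supporting some of the features that fit() provides (such as the use of Callback classes).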
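The handling of a training set of unknown length can be sketched without TensorFlow. The helper name `run_epoch` and the string "batches" are hypothetical; the idea is that when `steps_per_epoch` is not given, the epoch ends when the iterator is exhausted and the observed step count is recorded, and otherwise the iterator is rewound whenever it runs out.

```python
import itertools


def run_epoch(batches, batch_iter, steps_per_epoch=None):
    """Consume one epoch; return (batches seen, iterator, steps per epoch)."""
    unknown_length = steps_per_epoch is None
    step_iter = itertools.count() if unknown_length else range(steps_per_epoch)
    seen = []
    for i_step in step_iter:
        try:
            batch = next(batch_iter)
        except StopIteration:
            batch_iter = iter(batches)  # rewind for the next pass
            if unknown_length:
                steps_per_epoch = i_step  # length is now known
                break
            batch = next(batch_iter)
        seen.append(batch)
    return seen, batch_iter, steps_per_epoch


batches = ["b0", "b1", "b2"]
it = iter(batches)
first, it, steps = run_epoch(batches, it)            # length discovered here
second, it, steps = run_epoch(batches, it, steps)    # full pass with known length
short, it, _ = run_epoch(batches, it, steps_per_epoch=2)
wrapped, it, _ = run_epoch(batches, it, steps_per_epoch=2)  # wraps around the end
```

With a fixed `steps_per_epoch` smaller than the dataset, consecutive epochs continue where the previous one stopped instead of restarting from the first batch.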

```python
import tensorflow as tf
from tensorflow import keras


def train(model: keras.Model, train_batchs, epochs=1, initial_epoch=0,
          callbacks=None, steps_per_epoch=None, val_batchs=None):
    # Wrap the user callbacks; add_history=True also attaches a History callback.
    callbacks = tf.keras.callbacks.CallbackList(
        callbacks, add_history=True, model=model)
    logs_dict = {}

    # init optimizer, loss function and metrics
    optimizer = keras.optimizers.Nadam(learning_rate=0.0005)
    loss_fn = keras.losses.MeanSquaredError()  # instantiate the loss class
    train_loss_tracker = keras.metrics.Mean(name="train_loss")
    val_loss_tracker = keras.metrics.Mean(name="val_loss")
    # train_acc_metric = tf.keras.metrics.BinaryAccuracy(name="train_acc")
    # val_acc_metric = tf.keras.metrics.BinaryAccuracy(name="val_acc")

    def count():  # infinite step counter for a training set of unknown length
        x = 0
        while True:
            yield x
            x += 1

    def print_status_bar(iteration, total, metrics=None):
        metrics = " - ".join(["{}:{:.4f}".format(m.name, float(m.result()))
                              for m in (metrics or [])])
        # total is None while the epoch length is still unknown
        end = "" if total is None or iteration < total else "\n"
        total = "?" if total is None else total
        print("\r{}/{} - ".format(iteration, total) + metrics, end=end)

    def train_step(x, y, loss_tracker: keras.metrics.Metric):
        with tf.GradientTape() as tape:
            outputs = model(x, training=True)
            main_loss = tf.reduce_mean(loss_fn(y, outputs))
            loss = tf.add_n([main_loss] + model.losses)
        gradients = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(gradients, model.trainable_variables))
        loss_tracker.update_state(loss)
        return {loss_tracker.name: loss_tracker.result()}

    def val_step(x, y, loss_tracker: keras.metrics.Metric):
        # Call the model directly; predict() is meant for large batch inference.
        outputs = model(x, training=False)
        main_loss = tf.reduce_mean(loss_fn(y, outputs))
        loss = tf.add_n([main_loss] + model.losses)
        loss_tracker.update_state(loss)
        return {loss_tracker.name: loss_tracker.result()}

    # init train_batchs
    train_iter = iter(train_batchs)

    callbacks.on_train_begin(logs=logs_dict)
    for i_epoch in range(initial_epoch, epochs):
        callbacks.on_epoch_begin(i_epoch, logs=logs_dict)
        # init steps
        infinite_flag = steps_per_epoch is None
        step_iter = count() if infinite_flag else range(steps_per_epoch)

        # train loop
        for i_step in step_iter:
            # Fetch the batch first so that every begin hook gets a matching end hook.
            try:
                X_batch, y_batch = next(train_iter)
            except StopIteration:
                train_iter = iter(train_batchs)  # rewind for the next pass
                if infinite_flag:
                    break  # unknown length: exhaustion ends the epoch
                X_batch, y_batch = next(train_iter)
            callbacks.on_train_batch_begin(i_step, logs=logs_dict)
            train_logs_dict = train_step(x=X_batch, y=y_batch, loss_tracker=train_loss_tracker)
            logs_dict.update(train_logs_dict)
            print_status_bar(i_step, steps_per_epoch, [train_loss_tracker])
            callbacks.on_train_batch_end(i_step, logs=logs_dict)

        if steps_per_epoch is None:
            print()
            steps_per_epoch = i_step  # number of batches seen in the first pass

        epoch_metrics = [train_loss_tracker]
        if val_batchs is not None:
            # val loop
            for i_step, (X_batch, y_batch) in enumerate(val_batchs):
                callbacks.on_test_batch_begin(i_step, logs=logs_dict)
                val_logs_dict = val_step(x=X_batch, y=y_batch, loss_tracker=val_loss_tracker)
                logs_dict.update(val_logs_dict)
                callbacks.on_test_batch_end(i_step, logs=logs_dict)
            epoch_metrics.append(val_loss_tracker)

        print_status_bar(steps_per_epoch, steps_per_epoch, epoch_metrics)
        callbacks.on_epoch_end(i_epoch, logs=logs_dict)
        for metric in [train_loss_tracker, val_loss_tracker]:
            metric.reset_state()  # reset_states() in older TensorFlow releases
        if getattr(model, "stop_training", False):
            break  # honor callbacks such as EarlyStopping

    callbacks.on_train_end(logs=logs_dict)

    # Fetch the History object we normally get from model.fit()
    history_object = None
    for cb in callbacks.callbacks:
        if isinstance(cb, tf.keras.callbacks.History):
            history_object = cb
    return history_object
```
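Callbacks such as EarlyStopping request a stop by setting `model.stop_training = True`, and fit() checks that flag after each epoch; a hand-written loop has to do the same. The sketch below shows that interaction in isolation, assuming a hypothetical `StopAfter` callback and placeholder loss values instead of real training.

```python
from tensorflow import keras


class StopAfter(keras.callbacks.Callback):
    """Hypothetical callback: request a stop after a fixed number of epochs."""

    def __init__(self, n_epochs):
        super().__init__()
        self.n_epochs = n_epochs

    def on_epoch_end(self, epoch, logs=None):
        if epoch + 1 >= self.n_epochs:
            self.model.stop_training = True


model = keras.Sequential([keras.layers.Dense(1)])
callbacks = keras.callbacks.CallbackList(
    [StopAfter(3)], add_history=True, model=model)

model.stop_training = False  # fit() resets this flag before training
callbacks.on_train_begin()
for epoch in range(10):
    callbacks.on_epoch_begin(epoch)
    logs = {"train_loss": 1.0 / (epoch + 1)}  # placeholder for real training
    callbacks.on_epoch_end(epoch, logs=logs)
    if model.stop_training:  # a custom loop must check the flag itself
        break
callbacks.on_train_end()

# The History callback added by add_history=True recorded the epochs that ran.
history = next(cb for cb in callbacks.callbacks
               if isinstance(cb, keras.callbacks.History))
```

Here `history.epoch` lists the epochs that actually ran and `history.history` holds the logged values per key, which is the same object layout that fit() returns.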

折叠

边栏推荐

猜你喜欢

Flink real-time data warehouse completed

这个编辑器居然号称快如闪电!

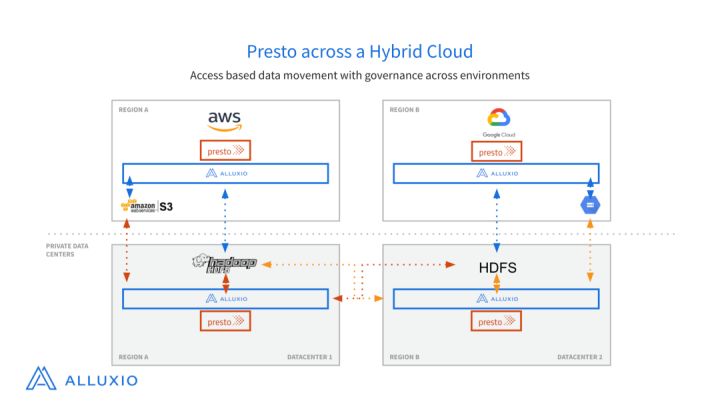

Alluxio for Presto fu can across the cloud self-service ability

![[Cloud native] Alibaba Cloud ARMS business real-time monitoring](/img/e7/55f560196521d22f830b2caf110e34.png)

[Cloud native] Alibaba Cloud ARMS business real-time monitoring

Mac 中 MySQL 的安装与卸载

三电系统集成技术杂谈

Flink实时数仓完结

基于FPGA的DDS任意波形输出

(Crypto essential dry goods) Detailed analysis of the current NFT trading markets

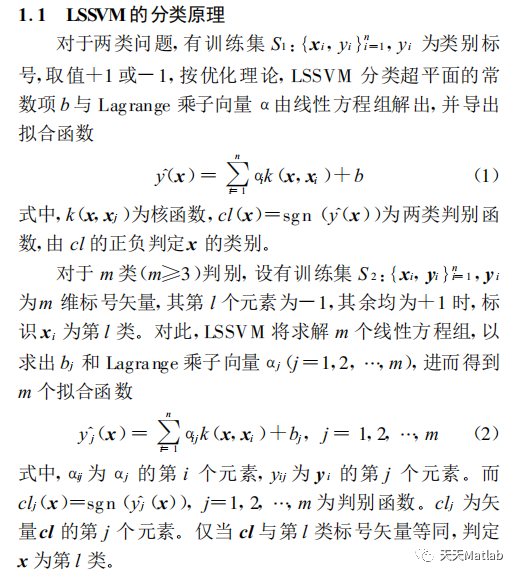

【回归预测-lssvm分类】基于最小二乘支持向量机lssvm实现数据分类代码

随机推荐

阿里CTO程立:阿里巴巴的开源历程、理念和实践

golang modules initialization project

JVM performance tuning

952. 按公因数计算最大组件大小 : 枚举质因数 + 并查集运用题

JUC common thread pool source learning 02 ( ThreadPoolExecutor thread pool )

MongoDB启动报错 Process: 29784 ExecStart=/usr/bin/mongod $OPTIONS (code=exited, status=14)

我们公司用了 6 年的网关服务,动态路由、鉴权、限流等都有,稳的一批!

JVM性能调优

Go to Tencent for an interview and let people turn left directly: I don't know idempotency!

微服务架构下的核心话题 (二):微服务架构的设计原则和核心话题

Redis 缓存穿透、击穿、雪崩以及一致性问题

三电系统集成技术杂谈

剑指 Offer II 037. 小行星碰撞

MaxWell抓取数据

GeoServer

分布式限流 redission RRateLimiter 的使用及原理

QIIME2得到PICRUSt2结果后如何分析

golang图片处理库image简介

Flink实时数仓完结

B+树索引页大小是如何确定的?