
MapReduce project case 3 - temperature statistics

2022-06-28 12:02:00 【A vegetable chicken that is working hard】

Task: for each year and month, find the two days with the highest temperatures.

1. Data

Each line holds a timestamp and a temperature ending in `c`, separated by a tab (the mapper splits on `\t`):

```
2020-01-02 10:22:22	1c
2020-01-03 10:22:22	2c
2020-01-04 10:22:22	4c
2020-02-01 10:22:22	7c
2020-02-02 10:22:22	9c
2020-02-03 10:22:22	11c
2020-02-04 10:22:22	1c
2019-01-02 10:22:22	1c
2019-01-03 10:22:22	2c
2019-01-04 10:22:22	4c
2019-02-01 10:22:22	7c
2019-02-02 10:22:22	9c
2018-02-03 10:22:22	11c
2018-02-04 10:22:22	1c
```

2. Requirement analysis

- Group the records by year and month.

- Within each group, sort by temperature in descending order and keep the first two records.
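Before writing any Hadoop classes, the requirement can be checked without a cluster. The following is a minimal in-memory sketch in plain Java, assuming each line is a timestamp, a tab, and a temperature ending in `c`. `TopTwoSketch`, `Reading` and `topTwoPerMonth` are hypothetical names; the sketch only mimics the grouping, sorting and top-two selection, while the real job additionally spreads the results over three output files by year.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class TopTwoSketch {

    // One parsed input line (hypothetical helper type, not part of the Hadoop job).
    static final class Reading {
        final int year, month, day, degree;

        Reading(int year, int month, int day, int degree) {
            this.year = year;
            this.month = month;
            this.day = day;
            this.degree = degree;
        }
    }

    // Groups lines by year and month, sorts each group by temperature (highest first),
    // keeps the first two and formats them like WeatherReduce: "year-month-day<TAB>degree".
    static List<String> topTwoPerMonth(List<String> lines) {
        // TreeMap keyed by year * 100 + month iterates groups in year, then month order.
        Map<Integer, List<Reading>> groups = new TreeMap<>();
        for (String line : lines) {
            String[] fields = line.trim().split("\t");         // timestamp, temperature
            String[] ymd = fields[0].split(" ")[0].split("-"); // date part only
            int degree = Integer.parseInt(fields[1].substring(0, fields[1].lastIndexOf('c')));
            Reading r = new Reading(Integer.parseInt(ymd[0]), Integer.parseInt(ymd[1]),
                    Integer.parseInt(ymd[2]), degree);
            groups.computeIfAbsent(r.year * 100 + r.month, k -> new ArrayList<>()).add(r);
        }
        List<String> out = new ArrayList<>();
        for (List<Reading> group : groups.values()) {
            group.sort(Comparator.comparingInt((Reading r) -> r.degree).reversed());
            for (Reading r : group.subList(0, Math.min(2, group.size()))) {
                out.add(r.year + "-" + r.month + "-" + r.day + "\t" + r.degree);
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> sample = Arrays.asList(
                "2020-01-02 10:22:22\t1c", "2020-01-03 10:22:22\t2c", "2020-01-04 10:22:22\t4c",
                "2020-02-01 10:22:22\t7c", "2020-02-02 10:22:22\t9c", "2020-02-03 10:22:22\t11c",
                "2020-02-04 10:22:22\t1c", "2019-01-02 10:22:22\t1c", "2019-01-03 10:22:22\t2c",
                "2019-01-04 10:22:22\t4c", "2019-02-01 10:22:22\t7c", "2019-02-02 10:22:22\t9c",
                "2018-02-03 10:22:22\t11c", "2018-02-04 10:22:22\t1c");
        topTwoPerMonth(sample).forEach(System.out::println);
    }
}
```

On the fourteen sample lines it prints two lines per month; for February 2020, for example, `2020-2-3` with 11 followed by `2020-2-2` with 9.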

3. Weather

```java
import org.apache.hadoop.io.WritableComparable;

import java.io.DataInput;
import java.io.DataOutput;
import java.io.IOException;

/**
 * Bean used as the map output key: one temperature reading with its date.
 */
public class Weather implements WritableComparable<Weather> {

    private int year;
    private int month;
    private int day;
    private int degree; // temperature

    @Override
    public void readFields(DataInput dataInput) throws IOException {
        // Read the fields in exactly the order write() wrote them
        this.year = dataInput.readInt();
        this.month = dataInput.readInt();
        this.day = dataInput.readInt();
        this.degree = dataInput.readInt();
    }

    @Override
    public void write(DataOutput dataOutput) throws IOException {
        dataOutput.writeInt(year);
        dataOutput.writeInt(month);
        dataOutput.writeInt(day);
        dataOutput.writeInt(degree);
        // java.lang.RuntimeException: java.io.EOFException is typically caused by write() and
        // readFields() disagreeing on the order or number of fields
    }

    /** Sort order: year ascending, then month ascending, then temperature descending. */
    @Override
    public int compareTo(Weather o) {
        int t1 = Integer.compare(this.year, o.getYear());
        if (t1 == 0) {
            int t2 = Integer.compare(this.month, o.getMonth());
            if (t2 == 0) {
                return -Integer.compare(this.degree, o.getDegree()); // higher temperature first
            }
            return t2;
        }
        return t1;
    }

    public void setYear(int year) {
        this.year = year;
    }

    public void setMonth(int month) {
        this.month = month;
    }

    public void setDay(int day) {
        this.day = day;
    }

    public void setDegree(int degree) {
        this.degree = degree;
    }

    public int getYear() {
        return year;
    }

    public int getMonth() {
        return month;
    }

    public int getDay() {
        return day;
    }

    public int getDegree() {
        return degree;
    }

    @Override
    public String toString() {
        return "Weather{" +
                "year=" + year +
                ", month=" + month +
                ", day=" + day +
                ", degree=" + degree +
                '}';
    }
}
```
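The `write`/`readFields` pair only works if both sides agree on field order and count. A minimal sketch of that contract using plain `java.io`, runnable without Hadoop: `SerializationSketch` is a hypothetical class that mirrors the four `int` fields of `Weather`. Reading the four ints back in the same order round-trips; asking for a fifth int from the same bytes throws `java.io.EOFException`.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.EOFException;
import java.io.IOException;
import java.util.Arrays;

// Hypothetical stand-in for Weather's serialization, using only java.io.
class SerializationSketch {

    // Serializes year, month, day, degree in a fixed order, like Weather.write.
    static byte[] write(int year, int month, int day, int degree) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        DataOutputStream out = new DataOutputStream(bytes);
        out.writeInt(year);
        out.writeInt(month);
        out.writeInt(day);
        out.writeInt(degree);
        out.flush();
        return bytes.toByteArray();
    }

    // Reads `count` ints back in the same order, like Weather.readFields.
    static int[] read(byte[] data, int count) throws IOException {
        DataInputStream in = new DataInputStream(new ByteArrayInputStream(data));
        int[] fields = new int[count];
        for (int i = 0; i < count; i++) {
            fields[i] = in.readInt(); // throws EOFException once the 16 written bytes are used up
        }
        return fields;
    }

    public static void main(String[] args) throws IOException {
        byte[] data = write(2020, 2, 3, 11);
        System.out.println(Arrays.toString(read(data, 4))); // [2020, 2, 3, 11]
        try {
            read(data, 5); // one field more than was written
        } catch (EOFException e) {
            System.out.println("EOFException: read and write are out of sync");
        }
    }
}
```

In the real job the same mismatch surfaces as `java.lang.RuntimeException: java.io.EOFException`, the error noted in the comment inside `write`.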

4. WeatherMapper

```java
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Mapper;

import java.io.IOException;
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Calendar;
import java.util.Date;

/**
 * 1. Map: turn each input line into a (Weather, temperature) pair; the pairs then go to the partitioner.
 */
public class WeatherMapper extends Mapper<LongWritable, Text, Weather, IntWritable> {

    // Created once per mapper instead of once per record. SimpleDateFormat is not thread-safe,
    // which is fine here because a map task calls map() from a single thread.
    private final SimpleDateFormat format = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss");
    private final Calendar calendar = Calendar.getInstance();

    @Override
    protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
        // Fields are tab-separated: "yyyy-MM-dd HH:mm:ss<TAB>NNc"
        String[] split = value.toString().trim().split("\t");
        if (split.length >= 2) {
            try {
                Date date = format.parse(split[0]);
                calendar.setTime(date);
                Weather weather = new Weather();
                weather.setYear(calendar.get(Calendar.YEAR));
                weather.setMonth(calendar.get(Calendar.MONTH) + 1); // Calendar.MONTH starts at 0
                weather.setDay(calendar.get(Calendar.DAY_OF_MONTH));
                int degree = Integer.parseInt(split[1].substring(0, split[1].lastIndexOf("c")));
                weather.setDegree(degree);
                context.write(weather, new IntWritable(degree));
            } catch (ParseException e) {
                e.printStackTrace(); // malformed timestamp: log it and skip the line
            }
        }
    }
}
```
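The parsing step of the mapper can be tried outside Hadoop. This sketch swaps in `java.time` for the `SimpleDateFormat`/`Calendar` pair (a substitution, not what the mapper uses): `LocalDateTime.getMonthValue()` is already 1-based, so no `+ 1` is needed. `LineParser.parse` is a hypothetical helper that assumes the same tab-separated layout and trailing `c` as the sample data; the last lines show why the mapper adds 1 to `Calendar.MONTH`.

```java
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.Calendar;
import java.util.GregorianCalendar;

// Hypothetical helper mirroring WeatherMapper's parsing with java.time instead of Calendar.
class LineParser {

    private static final DateTimeFormatter FORMAT = DateTimeFormatter.ofPattern("yyyy-MM-dd HH:mm:ss");

    // Returns {year, month, day, degree}, or null if the line lacks two tab-separated fields.
    static int[] parse(String line) {
        String[] split = line.trim().split("\t");
        if (split.length < 2) {
            return null;
        }
        LocalDateTime time = LocalDateTime.parse(split[0], FORMAT);
        int degree = Integer.parseInt(split[1].substring(0, split[1].lastIndexOf('c')));
        return new int[]{time.getYear(), time.getMonthValue(), time.getDayOfMonth(), degree};
    }

    public static void main(String[] args) {
        int[] r = parse("2020-02-03 10:22:22\t11c");
        System.out.println(r[0] + " " + r[1] + " " + r[2] + " " + r[3]); // 2020 2 3 11
        // Calendar.MONTH is zero-based, which is why the mapper adds 1:
        Calendar c = new GregorianCalendar(2020, Calendar.FEBRUARY, 3);
        System.out.println(c.get(Calendar.MONTH)); // 1
    }
}
```

A line whose fields are separated by a space instead of a tab yields a single field and is skipped, just as the mapper's `split.length >= 2` check skips it.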

5. WeatherPartition

```java
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.mapreduce.Partitioner;

/**
 * 2. Partition: route each record by year. With 3 reduce tasks, three consecutive years get
 * different partitions, so each year is handled by its own reduce task and written to its own
 * output file (there is one output file per partition).
 */
public class WeatherPartition extends Partitioner<Weather, IntWritable> {

    @Override
    public int getPartition(Weather weather, IntWritable intWritable, int numPartitions) {
        // numPartitions is the value passed to job.setNumReduceTasks(3).
        // The formula must fit the business requirement, and because this method runs once per
        // map output record it should stay cheap.
        // The result must lie in [0, numPartitions); years before 1929 would make it negative.
        return (weather.getYear() - 1929) % numPartitions;
    }
}
```
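A quick plain-Java check of the partition formula for the sample years. It also shows the pitfall of `%`: for years before 1929 the result is negative, and Hadoop rejects partition numbers outside `[0, numPartitions)` when the map output is collected. `Math.floorMod` (standard since Java 8) always stays in range; `PartitionSketch` and its method names are hypothetical.

```java
// Hypothetical check of WeatherPartition's formula, without Hadoop.
class PartitionSketch {

    // Same formula as WeatherPartition.getPartition.
    static int remainderPartition(int year, int numPartitions) {
        return (year - 1929) % numPartitions;
    }

    // Variant that never goes negative, even for years before 1929.
    static int floorModPartition(int year, int numPartitions) {
        return Math.floorMod(year - 1929, numPartitions);
    }

    public static void main(String[] args) {
        for (int year : new int[]{2018, 2019, 2020, 1928}) {
            System.out.println(year + " -> " + remainderPartition(year, 3)
                    + " / " + floorModPartition(year, 3));
        }
    }
}
```

With three reduce tasks, 2019 maps to partition 0, 2020 to 1 and 2018 to 2, so their results land in `part-r-00000`, `part-r-00001` and `part-r-00002` respectively; 1928 gives -1 with `%` but 2 with `floorMod`.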

6. WeatherGroup

```java
import org.apache.hadoop.io.WritableComparable;
import org.apache.hadoop.io.WritableComparator;

/**
 * 3. Grouping (inside each partition): keys with the same year and month form one group,
 * i.e. one reduce() call.
 * <p>
 * By default, keys are grouped only when they compare equal under the sort order
 * (Weather.compareTo, which also compares the temperature), so each distinct
 * (year, month, temperature) key would arrive in its own reduce() call and the reducer
 * could not pick the top two of a month. This comparator looks at year and month only,
 * so all readings of one month arrive together, already sorted by temperature, highest first.
 */
public class WeatherGroup extends WritableComparator {

    public WeatherGroup() {
        super(Weather.class, true); // true: create Weather instances so compare() gets deserialized keys
    }

    @Override
    @SuppressWarnings("rawtypes")
    public int compare(WritableComparable a, WritableComparable b) {
        Weather weather1 = (Weather) a;
        Weather weather2 = (Weather) b;
        int c1 = Integer.compare(weather1.getYear(), weather2.getYear());
        if (c1 == 0) {
            return Integer.compare(weather1.getMonth(), weather2.getMonth());
        }
        return c1;
    }
}
```
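Sorting and grouping use different comparators: `Weather.compareTo` orders keys by year, month and temperature (descending), while `WeatherGroup` only looks at year and month, so consecutive sorted keys that tie on year and month end up in one `reduce` call. A plain-Java sketch of that behavior; `Key`, `SORT` and `GROUP` are hypothetical stand-ins for `Weather`, its `compareTo` and `WeatherGroup`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Hypothetical simulation of sort-then-group on the reduce side, without Hadoop.
class GroupingSketch {

    static final class Key {
        final int year, month, day, degree;

        Key(int year, int month, int day, int degree) {
            this.year = year;
            this.month = month;
            this.day = day;
            this.degree = degree;
        }

        @Override
        public String toString() {
            return year + "-" + month + "-" + day + ":" + degree;
        }
    }

    // Sort order: year asc, month asc, degree desc (mirrors Weather.compareTo).
    static final Comparator<Key> SORT = Comparator.<Key>comparingInt(k -> k.year)
            .thenComparingInt(k -> k.month)
            .thenComparing(Comparator.<Key>comparingInt(k -> k.degree).reversed());

    // Grouping: year and month only (mirrors WeatherGroup.compare).
    static final Comparator<Key> GROUP = Comparator.<Key>comparingInt(k -> k.year)
            .thenComparingInt(k -> k.month);

    static List<List<Key>> group(List<Key> keys) {
        List<Key> sorted = new ArrayList<>(keys);
        sorted.sort(SORT);
        List<List<Key>> groups = new ArrayList<>();
        List<Key> current = null;
        for (Key k : sorted) {
            // Compare each key with the previous one; a difference starts a new group.
            if (current == null || GROUP.compare(current.get(current.size() - 1), k) != 0) {
                current = new ArrayList<>();
                groups.add(current);
            }
            current.add(k);
        }
        return groups;
    }

    public static void main(String[] args) {
        List<Key> keys = Arrays.asList(
                new Key(2020, 2, 1, 7), new Key(2020, 1, 2, 1), new Key(2020, 2, 3, 11),
                new Key(2020, 1, 4, 4), new Key(2020, 2, 2, 9));
        group(keys).forEach(System.out::println);
    }
}
```

The five keys form two groups, January 2020 (`[2020-1-4:4, 2020-1-2:1]`) and February 2020 (`[2020-2-3:11, 2020-2-2:9, 2020-2-1:7]`), each already ordered highest temperature first.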

7. WeatherReduce

```java
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Reducer;

import java.io.IOException;

/**
 * 4. Reduce: one call per (year, month) group; values arrive highest temperature first.
 */
public class WeatherReduce extends Reducer<Weather, IntWritable, Text, NullWritable> {

    @Override
    protected void reduce(Weather key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
        int count = 0;
        for (IntWritable i : values) {
            count++;
            if (count >= 3) {
                break; // only the two highest temperatures are needed
            }
            // Hadoop reuses the key object and refreshes it as the iterator advances,
            // so key.getDay() is the day that belongs to the current value.
            String res = key.getYear() + "-" + key.getMonth() + "-" + key.getDay() + "\t" + i.get();
            context.write(new Text(res), NullWritable.get());
        }
    }
}
```
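The reducer prints `key.getDay()` for each value even though it receives a single `key` per group. This works because Hadoop's reduce-side iterator deserializes each record into the same key instance as it advances. A plain-Java simulation of that reuse, with hypothetical names (`KeyReuseSketch`, `MutableKey`, `values`); the `reduce` method keeps the same loop shape as `WeatherReduce`.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

// Hypothetical simulation of Hadoop's key reuse during reduce, without Hadoop.
class KeyReuseSketch {

    // Mutable key, reused for every record like the Weather instance passed to reduce().
    static final class MutableKey {
        int year, month, day, degree;
    }

    // Stand-in for the reduce-side value iterator: before returning each value it overwrites
    // the shared key with that record's fields (each record is {year, month, day, degree}).
    static Iterable<Integer> values(MutableKey key, List<int[]> records) {
        return () -> new Iterator<Integer>() {
            private int i = 0;

            @Override
            public boolean hasNext() {
                return i < records.size();
            }

            @Override
            public Integer next() {
                int[] r = records.get(i++);
                key.year = r[0];
                key.month = r[1];
                key.day = r[2];
                key.degree = r[3];
                return r[3];
            }
        };
    }

    // Same loop shape as WeatherReduce.reduce: emit the first two values with the key's date.
    static List<String> reduce(MutableKey key, Iterable<Integer> values) {
        List<String> out = new ArrayList<>();
        int count = 0;
        for (Integer v : values) {
            count++;
            if (count >= 3) {
                break;
            }
            out.add(key.year + "-" + key.month + "-" + key.day + "\t" + v);
        }
        return out;
    }

    public static void main(String[] args) {
        MutableKey key = new MutableKey();
        // The 2020-02 group, already sorted by temperature, highest first.
        List<int[]> group = Arrays.asList(new int[]{2020, 2, 3, 11}, new int[]{2020, 2, 2, 9},
                new int[]{2020, 2, 1, 7}, new int[]{2020, 2, 4, 1});
        reduce(key, values(key, group)).forEach(System.out::println);
    }
}
```

For the February 2020 group it emits the days 3 and 2 with temperatures 11 and 9. Copying the key's fields once before the loop would instead attach the first day to both values.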

8. WeatherMain

```java
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IntWritable;
import org.apache.hadoop.io.NullWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;

/**
 * @program: Hadoop_MR
 * @description:
 * @author: author
 * @create: 2022-06-21 16:45
 */
public class WeatherMain {

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();
        Job job = Job.getInstance(conf); // create the job from the configuration
        job.setJarByClass(WeatherMain.class);

        // 1. Map
        job.setMapperClass(WeatherMapper.class);
        // The map output types (Weather, IntWritable) differ from the final output types
        // (Text, NullWritable), so they must be set explicitly
        job.setMapOutputKeyClass(Weather.class);
        job.setMapOutputValueClass(IntWritable.class);

        // 2. Partition
        job.setPartitionerClass(WeatherPartition.class);
        // Three reduce tasks -> three output files (part-r-00000 to part-r-00002) in the output directory
        job.setNumReduceTasks(3);

        // 3. Sort: Weather.compareTo already defines the order, so no separate sort comparator is needed
        // job.setSortComparatorClass(WeatherSort.class);

        // 4. Grouping inside each reduce task
        job.setGroupingComparatorClass(WeatherGroup.class);

        // 5. Reduce
        job.setReducerClass(WeatherReduce.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(NullWritable.class);

        FileInputFormat.addInputPath(job, new Path("E:\\HadoopMRData\\input")); // input directory
        Path outPath = new Path("E:\\HadoopMRData\\output");
        // The output directory must not exist yet; to delete it before each run, uncomment this
        // (it also needs import org.apache.hadoop.fs.FileSystem):
        /* FileSystem fs = FileSystem.get(conf);
        if (fs.exists(outPath)) {
            fs.delete(outPath, true);
        } */
        FileOutputFormat.setOutputPath(job, outPath);

        // When running from the command line, pass the paths as arguments instead:
        /* FileInputFormat.addInputPath(job, new Path(args[0]));
        FileOutputFormat.setOutputPath(job, new Path(args[1])); */

        System.exit(job.waitForCompletion(true) ? 0 : 1); // start the job; exit code 0 means success
    }
}
```

边栏推荐

- 赛尔号抽奖模拟求期望

- Setinterval, setTimeout and requestanimationframe

- For example, the visual appeal of the live broadcast of NBA Finals can be seen like this?

- day34 js笔记 正则表达式 2021.09.29

- Pre parsing, recursive functions and events in day25 JS 2021.09.16

- Day39 prototype chain and page fireworks effect 2021.10.13

- Apache2 configuration denies access to the directory, but can access the settings of the files inside

- Contract quantitative trading system development | contract quantitative app development (ready-made cases)

- Unity屏幕截图功能

- Oracle date format exception: invalid number

猜你喜欢

QML control type: tabbar

Fancy features and cheap prices! What is the true strength of Changan's new SUV?

day36 js笔记 ECMA6语法 2021.10.09

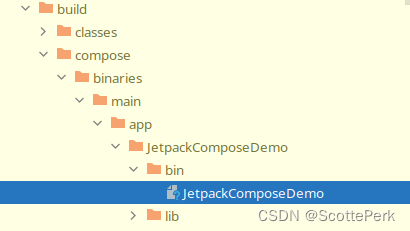

Jetpack Compose Desktop 桌面版本的打包和发布应用

Swin, three degrees! Eth open source VRT: a transformer that refreshes multi domain indicators of video restoration

水果FL Studio/Cubase/Studio one音乐宿主软件对比

Web page tips this site is unsafe solution

Using soapUI to obtain freemaker's FTL file template

Everyone can participate in open source! Here comes the most important developer activity in dragon lizard community

How to deploy the software testing environment?

随机推荐

Django -- MySQL database reflects the mapping data model to models

Deployment and optimization of vsftpd service

Redis 原理 - List

AGCO AI frontier promotion (2.16)

买股票在中金证券经理的开户二维码上开户安全吗?求大神赐教

Difference (one dimension)

MySql5.7添加新用户

Software test interview classic + 1000 high-frequency real questions, and the hit rate of big companies is 80%

day25 js中的预解析、递归函数、事件 2021.09.16

[Beijing University of Aeronautics and Astronautics] information sharing for the first and second examinations of postgraduate entrance examination

Setinterval, setTimeout and requestanimationframe

For example, the visual appeal of the live broadcast of NBA Finals can be seen like this?

QML control type: tabbar

Contract quantitative trading system development | contract quantitative app development (ready-made cases)

来吧元宇宙,果然这热度一时半会儿过不去了

Recommended practice sharing of Zhilian recruitment based on Nebula graph

Web3安全连载(3) | 深入揭秘NFT钓鱼流程及防范技巧

Graduated

【sciter】: sciter-fs模块扫描文件API的使用及其注意细节

Database Series: is there any way to seamlessly upgrade the business tables of the database