Citus 11.0 is here! Citus is a PostgreSQL extension that adds distributed database superpowers to PostgreSQL. With Citus, you can create tables that are transparently distributed or replicated across a cluster of PostgreSQL nodes. Citus 11.0 is a new major version, which means it comes with some very exciting new features that enable a higher level of scalability.

The biggest enhancement in Citus 11.0 is that you can now always run distributed queries from any node in the cluster, because the schema and metadata are automatically synchronized. We already shared some of the details in the Citus 11.0 beta blog post, but we also have a big surprise for those of you who use Citus open source, one that was not part of the initial beta.

- https://mp.weixin.qq.com/s/AAUG6FxVnVUNPekx70bXog

- https://www.citusdata.com/blog/2022/03/26/test-drive-citus-11-beta-for-postgres/

When we release a new Citus version, we usually publish two editions: the open source edition and an enterprise edition with some additional features. However, there will be only one edition of Citus 11.0, because everything in the Citus extension is now fully open source!

This means that you can now rebalance shards, manage roles across the entire cluster, isolate tenants to their own shards, and more. All of this comes on top of the large scalability enhancement in Citus 11.0: you can query your Citus cluster from any node, creating a truly distributed PostgreSQL experience.

- https://docs.citusdata.com/en/latest/admin_guide/cluster_management.html?highlight=rebalance#rebalance-shards-without-downtime

- https://www.citusdata.com/updates/v11-0/#multi-user

- https://www.citusdata.com/updates/v11-0/#tenant-isolation

In this post, we will focus on:

- Citus Enterprise features that are now open source

- Querying distributed Postgres tables from any node

- A hidden preview feature: triggers!

If you want to know about all the new features, see the Citus 11.0 updates page, which contains a detailed breakdown of all new features and other improvements.

The remaining Citus Enterprise features are now open source

Long ago, Citus Data was an enterprise software company. Over time, our team's focus shifted toward open source, then to becoming a cloud provider, and then to becoming an integral part of Azure. With that new focus, our team has developed all new features as part of the Citus open source project on GitHub. Making Citus open source lets you interact directly with the developers and the community, know the code you are running, and avoid lock-in, and it creates a better developer experience for everyone.

- https://www.citusdata.com/blog/2016/03/24/citus-unforks-goes-open-source/

- https://docs.microsoft.com/azure/postgresql/hyperscale/

- https://github.com/citusdata/citus

Last year, as part of the Citus 10 release, we open sourced the shard rebalancer, an important component of Citus that lets you easily scale out your cluster by moving data to new nodes. The shard rebalancer is also useful for performance reasons, because it can balance data across all nodes in the cluster.

Now, as part of Citus 11.0, the remaining enterprise features are open source as well:

- Rebalancing shards using logical replication to avoid blocking writes

- Multi-user support (role and grant propagation, row-level security)

- Tenant isolation for multi-tenant applications

- Fine-grained control over authentication between nodes

- Routing internal connections through a connection pooler

- Performance optimizations for data loading
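To make the tenant isolation item concrete, here is a minimal sketch. The `events` table and the tenant id `42` are hypothetical and only serve as an illustration; `isolate_tenant_to_new_shard` is the Citus function that the feature is assumed to be exposed through, so check its exact behavior against the documentation for your version.

```sql
-- Hypothetical multi-tenant table, distributed by tenant_id.
CREATE TABLE events (
    tenant_id bigint,
    event_id  bigint,
    payload   jsonb,
    PRIMARY KEY (tenant_id, event_id)
);

SELECT create_distributed_table('events', 'tenant_id');

-- Give tenant 42 a shard of its own. 'CASCADE' asks Citus to do the
-- same for any tables co-located with events.
SELECT isolate_tenant_to_new_shard('events', 42, 'CASCADE');
```

The function returns the id of the newly created shard, which can then be moved to a node of its own if that tenant needs dedicated resources.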

My favorite newly open-sourced feature: the non-blocking shard rebalancer

Perhaps the most exciting of the newly open-sourced features is non-blocking shard moves. Although we open sourced the shard rebalancer in Citus 10, writes to the shard being moved were blocked during a shard move in the open source version. Now, in Citus 11, Citus moves shards using logical replication. That way, your application only experiences a brief blip in write latency when you scale out the cluster by moving existing data to new nodes. One prerequisite is that all your Postgres tables have primary keys.

Now that the non-blocking aspect of the shard rebalancer is open source, you get exactly the same shard rebalancing functionality whether you run Citus locally, on-premises, in your CI environment, or in the managed service on Azure.
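As a rough sketch of what scaling out could look like in practice, the statements below first check the primary key prerequisite and then add a node and rebalance. The host name and port are hypothetical. The sketch assumes that the default `shard_transfer_mode` of `rebalance_table_shards` selects logical replication when every table has a primary key (or another replica identity), which is worth confirming for your version.

```sql
-- 1. Run on the coordinator: list tables that have no primary key.
--    This also lists plain local tables, which the rebalancer ignores.
SELECT c.oid::regclass AS table_without_pk
FROM pg_class c
JOIN pg_namespace n ON n.oid = c.relnamespace
WHERE c.relkind IN ('r', 'p')
  AND n.nspname NOT IN ('pg_catalog', 'information_schema')
  AND NOT EXISTS (
        SELECT 1
        FROM pg_index i
        WHERE i.indrelid = c.oid
          AND i.indisprimary
      );

-- 2. Register the new worker node (hypothetical host and port).
SELECT citus_add_node('worker-3.example.com', 5432);

-- 3. Move shards onto the new node.
SELECT rebalance_table_shards();
```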

Querying distributed Postgres tables from any node

Citus 11 also comes with an important new feature: automatic schema and metadata syncing.

In a typical Citus deployment, your application runs distributed queries via the coordinator. From the application's perspective, connecting via the coordinator makes Citus largely indistinguishable from single-node PostgreSQL.

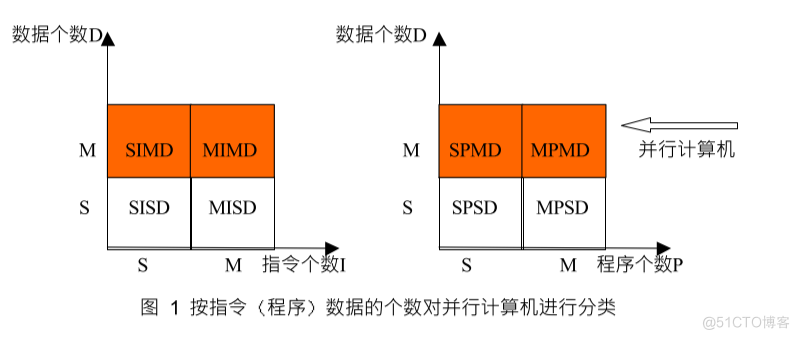

Figure 1: A Citus cluster in Citus 10.2 or earlier, where users and items are distributed tables and their metadata exists only on the coordinator.

The coordinator can handle high distributed query throughput (100k per second), but some applications still need higher throughput, or have queries that require a relatively large amount of processing on the coordinator (for example, searches with large result sets). Fortunately, distributed queries in Citus 11 can be handled by any node, because the schema and metadata of distributed tables are synchronized from the coordinator to all nodes. You still run DDL commands and cluster administration through the coordinator, but you can choose to load balance heavy distributed query workloads across the worker nodes.

Figure 2: A Citus 11.0 cluster, where users and items are distributed tables. With the new automatic metadata syncing feature, their metadata is synchronized to all nodes.

Metadata syncing already existed before Citus 11 as a special mode with some limitations (we sometimes called it "Citus MX"), but it is now universal and automatic. Any Citus cluster will always have distributed table metadata on all nodes, along with all your views, functions, and so on, which means that any node can run distributed queries.
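One way to see this for yourself is to look at the node metadata and then run an ordinary query while connected to a worker. This is only a sketch: it assumes the `users` table from the figures exists in your cluster and that the `pg_dist_node` catalog exposes the `hasmetadata` and `metadatasynced` columns in your version.

```sql
-- Which nodes have received the distributed table metadata?
SELECT nodename, nodeport, hasmetadata, metadatasynced
FROM pg_dist_node
ORDER BY nodename, nodeport;

-- Connected to a worker node rather than the coordinator:
-- the distributed table can be queried by its normal name.
SELECT count(*) FROM users;
```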

The Citus 11 beta blog post describes in detail how to operate your cluster when querying from any node. It explains how to view activity across all nodes and how to use global process identifiers (GPIDs) to associate internal queries with distributed queries. It also covers how to load balance connections from your applications across the Citus nodes.
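For orientation, a monitoring query along those lines might look like the sketch below. It assumes the `citus_stat_activity` view and the `citus_backend_gpid()` function are available under these names in your Citus 11 installation; the beta blog post remains the authoritative walkthrough.

```sql
-- Global process identifier (GPID) of the current session.
SELECT citus_backend_gpid();

-- Activity on all nodes. Internal queries on workers share the
-- global_pid of the distributed query that started them.
SELECT global_pid, nodeid, is_worker_query, state, query
FROM citus_stat_activity
WHERE state <> 'idle'
ORDER BY global_pid, nodeid;
```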

Most importantly, what does this new metadata syncing and query-from-any-node capability mean for you and your application?

- No application changes are required: your application can keep routing your Postgres queries to the Citus coordinator, as you always have, and let Citus figure out how to distribute the queries.

- The most demanding data-intensive applications can now opt to query from any node: if you want to and need to, you can load balance your Postgres queries across the Citus worker nodes. Be sure to follow the instructions on how to configure your cluster with respect to the maximum number of connections and load balancing.

Upgrading to Citus 11

If you are currently running a Citus cluster, upgrading to Citus 11 is simple. After installing the new package and restarting PostgreSQL, the first step is to run the following command on all nodes:

    ALTER EXTENSION citus UPDATE;

Then, once all nodes are upgraded, the second step is to connect to the coordinator and run:

    CALL citus_finish_citus_upgrade();

The second step is new in Citus 11. The citus_finish_citus_upgrade procedure makes sure that all nodes have metadata, so that your existing cluster behaves the same as a brand new Citus 11 cluster. We recommend also calling citus_finish_citus_upgrade after any future Citus upgrade, because we may add additional steps.
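Before running the second step, it can be useful to confirm that every node really is on the new extension version. The following is a small sketch of such a check, run from the coordinator; it is not part of the official upgrade procedure.

```sql
-- Extension version on the node you are connected to.
SELECT extversion FROM pg_extension WHERE extname = 'citus';

-- Full Citus version string.
SELECT citus_version();

-- Extension version reported by each worker node.
SELECT nodename, nodeport, success, result
FROM run_command_on_workers(
       $$ SELECT extversion FROM pg_extension WHERE extname = 'citus' $$
     );
```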

Switching to Citus 11 requires no application changes. You can continue to run all queries through the coordinator, which is still the simplest approach for most applications. After upgrading, you have the option of running some or all of your queries through the worker nodes, and of course you can use all the new features, such as the non-blocking rebalancer.

One thing to consider when upgrading to Citus 11 is that some seldom-used features have been deprecated:

- Shard placement invalidation was used to handle write failures for shards replicated using statement-based shard replication. When a write to a shard placement failed, that placement was marked invalid so that the system could continue with the remaining replicas. Although this behavior has some availability benefits, it also has many drawbacks. Citus still supports statement-based shard replication for scaling reads, so existing distributed tables that use shard replication can be upgraded, but after the upgrade shard placements will no longer be invalidated on failure.

- Append-distributed tables are distributed tables that need new shards to be created frequently as new data is loaded. The drawback of this approach is that the table ends up with too many shards and, because there is no well-defined distribution column, many relational features are unavailable. Starting with Citus 11.0, existing append-distributed tables will be read-only. We recommend switching to hash-distributed tables.

- Distributed cstore_fdw tables are distributed tables whose shards are foreign tables that use the cstore_fdw extension. Because Citus has a built-in columnar access method, the combination of distributed tables and cstore_fdw is now deprecated. We recommend converting to the columnar access method before upgrading to Citus 11.0.
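The two recommended conversions can be sketched as a copy into a new table. The table names (`events_append`, `measurements_cstore`) and the distribution column `device_id` are hypothetical, and this is one possible approach rather than a prescribed migration path; for large tables you would likely copy in batches and verify row counts before swapping names.

```sql
-- Append-distributed table -> hash-distributed table.
CREATE TABLE events_hash (LIKE events_append INCLUDING ALL);
SELECT create_distributed_table('events_hash', 'device_id');
INSERT INTO events_hash SELECT * FROM events_append;

-- Distributed cstore_fdw table -> columnar access method.
-- Do this before upgrading to Citus 11.0.
CREATE TABLE measurements_columnar (LIKE measurements_cstore) USING columnar;
SELECT create_distributed_table('measurements_columnar', 'device_id');
INSERT INTO measurements_columnar SELECT * FROM measurements_cstore;
```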

Wait, where are my shards?

If you have used Citus before, you may have occasionally connected to your worker nodes to look at the shards that store the data of distributed tables and reference tables. Each worker node has a different set of shards, for example:

    \d
                List of relations
    ┌────────┬──────────────┬───────┬───────┐
    │ Schema │ Name         │ Type  │ Owner │
    ├────────┼──────────────┼───────┼───────┤
    │ public │ citus_tables │ view  │ marco │
    │ public │ ref_102040   │ table │ marco │
    │ public │ test_102105  │ table │ marco │
    │ public │ test_102107  │ table │ marco │
    └────────┴──────────────┴───────┴───────┘

In Citus 11, when you connect to any worker node, you will see the distributed tables and reference tables, but not the shards:

    \d
                List of relations
    ┌────────┬──────────────┬───────┬───────┐
    │ Schema │ Name         │ Type  │ Owner │
    ├────────┼──────────────┼───────┼───────┤
    │ public │ citus_tables │ view  │ marco │
    │ public │ ref          │ table │ marco │
    │ public │ test         │ table │ marco │
    └────────┴──────────────┴───────┴───────┘
    (3 rows)

What is cool is that every node in the cluster now looks the same, but where are the shards?

We found that users and various tools were confused by seeing a mix of distributed tables and shards. For example, pg_dump would try to dump both the shards and the distributed tables. We therefore hide the shards from catalog queries, but they are still there, and you can query them directly if needed.
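As a sketch of what querying them directly can look like, the `citus_shards` view reports which shards exist and where they are placed, and a shard can then be read by name on the node that stores it. The shard name below is taken from the earlier listing and will differ in your cluster.

```sql
-- Which shards belong to the distributed table "test", and where are they?
SELECT shard_name, nodename, nodeport, shard_size
FROM citus_shards
WHERE table_name = 'test'::regclass
ORDER BY shard_name;

-- Connected to the worker that stores the shard: read it directly,
-- even though it no longer shows up in \d.
SELECT count(*) FROM test_102105;
```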

For cases where you need to see the shards in a specific application, we introduced a new setting:

    -- show shards only to pgAdmin and psql (based on their application_name):
    set citus.show_shards_for_app_name_prefixes to 'pgAdmin,psql';

    -- show shards to all applications:
    set citus.show_shards_for_app_name_prefixes to '*';

    \d
                List of relations
    ┌────────┬──────────────┬───────┬───────┐
    │ Schema │ Name         │ Type  │ Owner │
    ├────────┼──────────────┼───────┼───────┤
    │ public │ citus_tables │ view  │ marco │
    │ public │ ref          │ table │ marco │
    │ public │ ref_102040   │ table │ marco │
    │ public │ test         │ table │ marco │
    │ public │ test_102105  │ table │ marco │
    │ public │ test_102107  │ table │ marco │
    └────────┴──────────────┴───────┴───────┘
    (6 rows)

A hidden preview feature in Citus 11: triggers on distributed tables

Triggers are an important Postgres feature for maintaining complex data models, and an important feature of relational databases more broadly. When a row is inserted, updated, or deleted, a trigger function can perform additional actions on the database. Because all Citus nodes now have metadata, a trigger on a shard of a distributed table can perform actions on other distributed tables from the worker node that stores the shard.

The Citus approach to triggers scales well, because the Postgres trigger calls are pushed down to each shard. However, Citus currently has no way of knowing what a trigger function will do, which means it can do things that cause transactional issues. For example, a trigger function may not see some uncommitted writes if it tries to access other shards. The way to avoid this is to access only co-located shard keys from the trigger function. For now, we ask users to enable triggers explicitly using the citus.enable_unsafe_triggers setting:

    CREATE TABLE data (key text primary key, value jsonb);
    SELECT create_distributed_table('data','key');

    CREATE TABLE data_audit (operation text, key text, new_value jsonb, change_time timestamptz default now());
    SELECT create_distributed_table('data_audit','key', colocate_with := 'data');

    -- we know this function only writes to a co-located table using the same key
    CREATE OR REPLACE FUNCTION audit_trigger()
    RETURNS trigger
    AS $$
    DECLARE
    BEGIN
        INSERT INTO data_audit VALUES (TG_OP, Coalesce(OLD.key, NEW.key), NEW.value);
        RETURN NULL;
    END;
    $$ LANGUAGE plpgsql;

    -- so, it is safe to enable triggers on distributed tables
    SET citus.enable_unsafe_triggers TO on;

    CREATE TRIGGER data_audit_trigger
    AFTER INSERT OR UPDATE OR DELETE ON data
    FOR EACH ROW EXECUTE FUNCTION audit_trigger();

As long as you are careful to access only co-located keys, triggers in Citus give you a great way to take advantage of automatic schema and metadata syncing without necessarily having to load balance queries across nodes. By pushing more work into trigger functions, fewer distributed queries and network round trips are needed, which improves overall scalability.
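A quick way to exercise the trigger defined above is to run a few writes against `data` and then read back `data_audit`. The key and JSON values are arbitrary sample data chosen for this sketch.

```sql
-- Each statement fires audit_trigger() on the shard that stores the key.
INSERT INTO data VALUES ('hello', '{"greeting": "world"}');
UPDATE data SET value = '{"greeting": "citus"}' WHERE key = 'hello';
DELETE FROM data WHERE key = 'hello';

-- One audit row per operation; new_value is NULL for the DELETE,
-- because NEW is not set in a delete trigger.
SELECT operation, key, new_value, change_time
FROM data_audit
WHERE key = 'hello'
ORDER BY change_time;
```

Because `data_audit` is co-located with `data` on the same key, each audit insert lands on the same node as the row that triggered it.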
