
Key points! The most complete collection on large model training!

2022-06-26 23:55:00 【Shengsi MindSpore】

01

Overall structure of large model training

How can a computing center's cluster of hundreds or thousands of AI accelerator chips be used to train a large model with more than 10 billion parameters? Parallel computing is an effective approach. Beyond the techniques of distributed parallel computing, training large models also draws on other technologies, such as new model architectures and memory/compute optimizations.

This article sorts out the technical points we use in large model training and reviews, in three areas, the mainstream methods for training large models on multiple AI accelerator chips.

Distributed parallel acceleration: parallel training falls mainly into four approaches: data parallelism, model parallelism, pipeline parallelism, and tensor parallelism. These four distributed strategies are the main parallel strategies for large model training.

Algorithm and model architecture: large model training is inseparable from the Transformer network structure. The Mixture-of-Experts (MoE) models that often appear in trillion-parameter sparse scenarios are likewise new model structures closely tied to large models.

Memory and compute optimization: memory optimization mainly consists of activation recomputation, memory-efficient optimizers, and model compression; compute optimization mainly shows up in mixed precision training, operator fusion, gradient accumulation, and similar techniques.

02

The objective formula of large model training

The overall goal of very large model training is to raise overall training speed and cut training time. After all, training a large model typically takes one to two months from the moment you press Enter, which is painful. Let's look at the formula for total training speed in large model training:
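The formula itself is not reproduced in this copy of the article. Judging from the three factors discussed right after it, it can plausibly be written as the proportionality below (a reconstruction from the surrounding text; the exact form of the original is an assumption):

```latex
\text{total training speed} \;\propto\; \text{single-card speed} \times \text{number of accelerator chips} \times \text{multi-card speedup ratio}
```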

In the formula above, single-card speed is determined mainly by the compute speed of a single AI accelerator chip and by data I/O. The number of accelerator chips is straightforward: the more chips, the faster the training. The multi-card speedup ratio is determined by computation and communication efficiency.

Let's map the techniques in use onto this formula:

Single-card speed: since single-card speed is determined by compute speed and data I/O, single-card training has to be optimized. The main techniques for speeding up single-card training are mixed precision training, operator fusion, and gradient accumulation.

Number of accelerator chips: in theory, the more AI chips, the faster the model trains. However, as the training dataset keeps growing, the gain in speedup becomes less and less obvious. Data parallelism, for example, has its limits: once training resources scale past a certain point, communication bottlenecks mean the marginal benefit of adding compute is small, and adding resources may not speed training up at all. At that point the communication topology needs to be optimized, for example by training with ring all-reduce.

Multi-card speedup ratio: since the multi-card speedup ratio is determined by computation and communication efficiency, the algorithm has to be optimized together with the cluster's network topology. Hence the multi-dimensional hybrid parallel strategy that combines data parallelism (DP), model parallelism (MP), and pipeline parallelism (PP) to raise the efficiency of multi-card training.

In general, the goal of very large model training is to optimize the formula above and raise total training speed. The core idea is to partition the data and the computation graph/operators across different devices while keeping the communication cost between devices as low as possible, making sensible use of the compute resources of multiple devices to achieve efficient concurrent scheduling and maximize training speed.

03

Cluster architecture of large model training

The cluster architecture discussed here addresses the distributed training of machine learning models. Today's large deep learning models can only be trained on clusters, and the cluster architecture has to be laid out sensibly around distributed parallel computing, deep learning, and large model training techniques.

Around 2012, Spark adopted a simple and intuitive data-parallel method to solve the problem of parallel model training. But because Spark's parallel gradient descent is synchronous and blocking, and the model parameters have to be sent to every node by global broadcast, Spark's parallel gradient descent is relatively inefficient.

In 2014, Li Mu proposed the distributed, scalable Parameter Server framework, which solved the distributed training problem for machine learning models well. Parameter Server is not only used directly in the machine learning platforms of major companies, it is also integrated into mainstream deep learning frameworks such as TensorFlow, PyTorch, MindSpore, and PaddlePaddle, as one of the most important solutions for distributed machine learning training.

Two modes are currently the most popular:

Parameter server mode (Parameter Server,PS)

Collective communication mode (Collective Communication,CC)

The parameter server mode has one or more central nodes, called PS nodes, which aggregate gradients and manage the model parameters. Collective communication has no central node managing the model parameters: every node is a worker, and each worker is responsible for model training while also keeping track of the latest global gradient information.

Parameter server mode

The parameter server (Parameter Server, PS) architecture has two parts. The first is to divide the computing resources into two kinds of node, parameter server nodes and worker nodes:

Parameter server nodes store the parameters

Worker nodes run the training algorithm

The second is to divide the machine learning algorithm into two aspects: 1) parameters and 2) training.

As shown in the figure, the PS architecture divides compute nodes into servers and workers. Workers perform the forward and backward computation of the network model, while servers merge the gradients sent back by each worker and update the model parameters. Managing deep learning model parameters centrally in this way makes it easy to store very large sets of model parameters.
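The division of labor between servers and workers can be sketched in a few lines. This is a toy, single-process illustration under stated assumptions, not any framework's API: the class `ParameterServer`, the helper `worker_gradient`, and the one-parameter model `y = w * x` are all hypothetical, and the update is fully synchronous.

```python
# Toy synchronous parameter server. All names here are illustrative assumptions.
# Model: y = w * x, loss = mean((w * x - y)^2).

class ParameterServer:
    """Stores the model parameter and applies the merged gradients."""

    def __init__(self, w, lr):
        self.w = w
        self.lr = lr

    def pull(self):
        # Workers fetch the current parameter value.
        return self.w

    def push(self, grads):
        # Merge (average) the gradients sent back by all workers, then update.
        merged = sum(grads) / len(grads)
        self.w -= self.lr * merged


def worker_gradient(w, shard):
    """Forward and backward pass of one worker on its own data shard."""
    # d/dw mean((w*x - y)^2) = mean(2 * (w*x - y) * x)
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)


def train(shards, steps=200, lr=0.05):
    server = ParameterServer(w=0.0, lr=lr)
    for _ in range(steps):
        w = server.pull()                                # every worker pulls w
        grads = [worker_gradient(w, s) for s in shards]  # local computation
        server.push(grads)                               # server merges, updates
    return server.w


# Two workers, each holding a shard of data generated from y = 3x.
shards = [[(1.0, 3.0), (2.0, 6.0)], [(0.5, 1.5), (1.5, 4.5)]]
w_final = train(shards)  # converges towards 3.0
```

In a real deployment the `pull` and `push` calls would be network requests, which is exactly where the blocking and bandwidth issues discussed next come from.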

But as network models grow more complex and the demand for compute keeps rising, individual GPUs take different amounts of time even on the same amount of data, and network bandwidth is uneven, so some GPUs compute faster and others slower. If the model parameters are then updated asynchronously, the optimizer-related parameter updates become inconsistent. If they are updated synchronously, workers block while waiting for the parameters to synchronize.

The GPU's powerful compute can undoubtedly improve cluster performance, but it brings new problems: not only is the model size limited by the machine's GPU memory and host memory, the communication bandwidth also becomes a bottleneck as the number of network cards in the cluster shrinks.

At this point, Baidu proposed the new Ring-All-Reduce communication architecture on the basis of the PS architecture.

As shown in the figure, an asynchronous pipelined execution mechanism hides the extra performance overhead of I/O. While maintaining training speed, the size of the trained model is no longer bounded by GPU memory and host memory, which greatly increases the feasible model scale. The hybrid RPC and NCCL communication strategy sends some sparse parameters across nodes over the RPC protocol and handles the remaining parameters with NCCL between cards, making full use of the bandwidth.

Collective communication mode

04

Papers related to large model training

Large models and distributed deep learning in 2022: have you read all the AI papers you cannot afford to miss? Following the introduction above, we organize the papers along several dimensions: distributed parallel strategies, distributed frameworks, and communication bandwidth optimization. Each comes with a brief interpretation, and we hope readers will share their own good ideas.

Distributed parallel strategy

Data parallelism (Data Parallel, DP): data-parallel training has the highest speedup ratio, but every device must hold a full copy of the model, which takes up a lot of GPU memory.

Model parallelism (Model Parallel, MP): communication accounts for a large share of the time, so it is best used inside a machine, and the types of model it supports are limited.

Pipeline parallelism (Pipeline Parallel, PP): devices tend to sit idle, so the speedup efficiency is lower than with data parallelism. But it reduces the communication boundary and supports more layers, which makes it suitable for use across machines.

Hybrid parallelism (Hybrid Parallel, HP): the idea is to combine the strengths of the three strategies so that each offsets the others' weaknesses. Concretely, model parallelism combined with grouped parameter slicing is used first inside a single machine, because both involve heavy communication and suit card-to-card communication within a machine. Next, to carry models with hundreds of billions of parameters, pipeline parallelism is layered on top so that several machines share the computation. Finally, for efficient computation and communication, data parallelism is added as the outer layer to increase concurrency and raise overall training speed. This is the parallel strategy now built into our AI framework, and the industry largely uses the same approach.
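The layering described above (model parallelism inside a machine, pipeline stages across machines, data parallelism outermost) can be sketched as a mapping from a global device rank to three coordinates. The function names and the 8 x 4 x 2 cluster shape are assumptions made for illustration; real frameworks choose their own rank layouts.

```python
def rank_to_coords(rank, tp, pp, dp):
    """Map a global device rank to (dp_index, pp_index, tp_index).

    Assumed layout: the model-parallel index varies fastest, so one
    model-parallel group occupies consecutive ranks (the cards of one
    machine); pipeline stages come next; data-parallel replicas form
    the outer layer.
    """
    assert 0 <= rank < tp * pp * dp
    tp_idx = rank % tp
    pp_idx = (rank // tp) % pp
    dp_idx = rank // (tp * pp)
    return dp_idx, pp_idx, tp_idx


def model_parallel_group(rank, tp):
    """Ranks that share one model-parallel group with `rank`."""
    start = (rank // tp) * tp
    return list(range(start, start + tp))


# Hypothetical cluster: 8 cards per machine, 4 pipeline stages, 2 replicas.
coords = rank_to_coords(rank=13, tp=8, pp=4, dp=2)
group = model_parallel_group(rank=13, tp=8)
```

With this layout the heaviest traffic (model parallelism) stays between neighboring ranks, which is the reason the text gives for placing it inside a machine.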

Papers on parallelism

Below are the recommended classic papers on parallelism. First comes Jeff Dean's pioneering 2012 paper, followed by the DDP and FSDP data-parallel strategies used in Facebook's PyTorch. That is not enough, though, because there are several parallel strategies, so NVIDIA published a comparative overview of data, model, and pipeline parallelism on GPUs. In practice pipeline parallelism leaves servers with a lot of idle (buffer) time, so Google and Microsoft proposed GPipe and PipeDream respectively to optimize pipeline parallelism. Last comes NVIDIA's own large model, Megatron, and the model-parallel strategies it proposes.

- Large Scale Distributed Deep Networks.

A 2012 masterpiece. Bear in mind that there were not many neural networks back then. The paper comes from Google's Jeff Dean and is mainly about partitioning a neural network model. Because it appeared so early it is a little naive, but as a pioneering work on distributed parallelism it deserves a recommendation.

- Getting Started with Distributed Data Parallel.

- PyTorch Distributed: Experiences on Accelerating Data Parallel Training.

Facebook built the Distributed Data Parallel (DDP) strategy for PyTorch. Unlike Data Parallel, where a single process controls the GPUs, with the distributed package you write one piece of code and torch automatically assigns it to n processes running on n GPUs. There is no master GPU; every GPU performs the same task. Training on each GPU runs in its own process, and each process loads its own data from disk. A distributed data sampler ensures that the loaded data does not overlap between processes. The forward pass and the loss computation run independently on each GPU, so there is no need to gather the network outputs. During backpropagation, the gradients are all-reduced across all GPUs, so that every GPU ends the backward pass with the same copy of the averaged gradients.
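The two properties that matter here, non-overlapping data per process and identical averaged gradients after the backward pass, can be checked with a small simulation. This is plain Python standing in for the real thing: `shard_indices` and `all_reduce_mean` are hypothetical helpers, not PyTorch functions, and the "processes" are simulated in a loop.

```python
def shard_indices(num_samples, world_size, rank):
    """Non-overlapping sample indices for one process (strided split)."""
    return list(range(rank, num_samples, world_size))


def local_gradient(w, data):
    """Gradient of mean((w*x - y)^2) with respect to w on one process."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)


def all_reduce_mean(values):
    """Stand-in for the collective: every process receives the same average."""
    avg = sum(values) / len(values)
    return [avg] * len(values)


dataset = [(float(i), 2.0 * i) for i in range(8)]
world_size = 4
w = 0.5

index_sets = [shard_indices(len(dataset), world_size, r) for r in range(world_size)]
shards = [[dataset[i] for i in idx] for idx in index_sets]

local = [local_gradient(w, s) for s in shards]   # computed independently
synced = all_reduce_mean(local)                  # identical on every process
full_batch = local_gradient(w, dataset)          # single-process reference
```

Because the shards here have equal size, the averaged gradient matches the gradient a single process would compute on the whole batch, which is why every replica can apply the same update and stay in sync.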

- Fully Sharded Data Parallel: faster AI training with fewer GPUs.

Facebook released FSDP (Fully Sharded Data Parallel) as a counterpart to ZeRO, which Microsoft proposed in DeepSpeed. FSDP can be seen as an optimized version of DDP in PyTorch. It is still data parallelism, but unlike DDP it uses parameter sharding: the model parameters are split across the GPUs, whereas in DDP every GPU keeps a full copy of the parameters. FSDP can therefore achieve better training efficiency (speed and memory usage).

- Efficient Large-Scale Language Model Training on GPU Clusters.

A good survey from NVIDIA. In the paper, NVIDIA describes three parallel techniques needed for distributed training of very large models: data parallelism (Data Parallelism), model parallelism (Tensor Model Parallelism), and pipeline parallelism (Pipeline Model Parallelism).

- Automatic Cross-Replica Sharding of Weight Update in Data-Parallel Training.

In traditional data parallelism, the model parameters are replicated and updated by the optimizer at the end of each training step. However, when the per-core batch size is not large enough, this weight update computation can become a bottleneck. Taking MLPerf BERT training as an example, on 512 third-generation TPU chips the LAMB optimizer's weight update can account for 18% of the step time. In 2020, Xu et al. proposed weight update sharding. It first performs a reduce-scatter so that each accelerator holds part of the combined gradient, and each accelerator then computes the updated parameters for its own shard. In the next step, every updated shard is broadcast to all accelerators, so that each accelerator ends up with the full set of updated parameters. To reach a higher speedup, weight update sharding is also applied when data parallelism and model parallelism are used together. In image segmentation models the parameters are replicated, so weight update sharding there resembles the data-parallel case. When the parameters are distributed across different cores, multiple weight update shardings run concurrently.
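The reduce-scatter, local update, broadcast sequence can be sketched as follows. This is a single-process simulation under simplifying assumptions: plain SGD stands in for the optimizer (the example in the text uses LAMB), the parameter count is divisible by the number of devices, and `reduce_scatter` / `all_gather` are hypothetical helpers rather than a real collective library.

```python
def reduce_scatter(grads_per_device):
    """Each device ends up with the summed gradient of its own shard only."""
    n = len(grads_per_device)
    size = len(grads_per_device[0]) // n
    shards = []
    for d in range(n):
        lo, hi = d * size, (d + 1) * size
        shards.append([sum(g[i] for g in grads_per_device) for i in range(lo, hi)])
    return shards


def all_gather(shards):
    """Every device receives the concatenation of all updated shards."""
    full = [v for shard in shards for v in shard]
    return [list(full) for _ in shards]


def sharded_update(params, grads_per_device, lr):
    n = len(grads_per_device)
    size = len(params) // n
    grad_shards = reduce_scatter(grads_per_device)
    new_shards = []
    for d in range(n):
        local_params = params[d * size:(d + 1) * size]
        # Device d updates only its own slice (SGD on the averaged gradient).
        new_shards.append(
            [p - lr * g / n for p, g in zip(local_params, grad_shards[d])]
        )
    return all_gather(new_shards)


params = [1.0, 2.0, 3.0, 4.0]
grads = [[0.4, 0.8, 1.2, 1.6], [0.0, 0.4, 0.8, 1.2]]  # one list per device
replicas = sharded_update(params, grads, lr=0.5)
```

Each device performs only 1/n of the optimizer work, yet after the final gather every replica holds the same full parameter vector as in the replicated update.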

- PipeDream: Fast and Efficient Pipeline Parallel DNN Training.

Microsoft Research announced the Fiddle project, a collection of research projects aimed at simplifying distributed deep learning. PipeDream is one of the first Fiddle projects to focus on parallel training of deep learning models. It mainly uses pipeline parallelism to scale deep learning training. PipeDream tackles the main challenges of pipeline-parallel training as follows. First, PipeDream must coordinate the bidirectional pipeline work across different input data. Second, PipeDream must manage the weight versions in the backward pass so that gradients are computed correctly: the weight version used in the backward pass must match the one used in the forward pass. Finally, PipeDream needs all stages in the pipeline to take roughly the same computation time, in order to maximize pipeline throughput.

- GPipe: Easy Scaling with Micro-Batch Pipeline Parallelism.

GPipe is a paper from Google that focuses on scaling the training workload of deep learning applications through pipeline parallelism. GPipe cuts an L-layer network into K composite layers, each running on a separate TPU core. The K composite layers can only execute in sequence, but GPipe introduces a pipeline-parallel strategy to ease the performance problem of sequential execution: it subdivides each mini-batch into several smaller micro-batches to improve parallelism. GPipe also uses recomputation, a simple and effective technique, to reduce memory use, which allows even larger models to be trained.
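Why splitting a mini-batch into micro-batches helps can be seen from a small schedule simulation. This is a simplified sketch, assuming a forward-only pipeline in which every stage takes the same time per micro-batch; the function names are made up for illustration.

```python
def pipeline_schedule(num_stages, num_micro_batches):
    """Forward-only schedule: at time t, stage s works on micro-batch t - s."""
    steps = num_stages + num_micro_batches - 1
    schedule = []
    for t in range(steps):
        row = []
        for s in range(num_stages):
            m = t - s
            # None marks a slot in which the stage has nothing to do.
            row.append(m if 0 <= m < num_micro_batches else None)
        schedule.append(row)
    return schedule


def bubble_fraction(num_stages, num_micro_batches):
    """Share of (time step, stage) slots in which a stage sits idle."""
    schedule = pipeline_schedule(num_stages, num_micro_batches)
    slots = sum(len(row) for row in schedule)
    idle = sum(cell is None for row in schedule for cell in row)
    return idle / slots


no_split = bubble_fraction(num_stages=4, num_micro_batches=1)
eight_way = bubble_fraction(num_stages=4, num_micro_batches=8)
```

Under these assumptions the idle share works out to (K - 1) / (M + K - 1) for K stages and M micro-batches, so it shrinks as the mini-batch is cut into more micro-batches.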

- Megatron-LM: Training Multi-Billion Parameter Language Models Using Model Parallelism.

- Efficient Large-Scale Language Model Training on GPU Clusters Using Megatron-LM.

From NVIDIA. Although both papers are nominally about the Megatron network model, they really cover the technical points of multi-dimensional parallelism, model parallelism included. The first paper has two main results. Using distributed training that combines data and model parallelism, it trained a BERT-large model with 3.9B parameters and achieved SOTA results on many GLUE datasets. It also trained a GPT-2 language model with 8.3B parameters and achieved SOTA results on the WikiText103, LAMBADA, and RACE datasets. On the one hand the paper shows how much compute matters; on the other it shows that model parallelism and data parallelism are key techniques. Both optimizations matter greatly for speeding up model training and inference.

05

Large model algorithms

Basic large model structures you must know

The basic large model structures were mostly contributed by Google. The first to read is the 2017 paper that replaced the RNN sequence structure with attention alone, which gave us the fourth kind of deep learning architecture, the Transformer. With the Transformer as the base structure, the BERT pre-trained model followed in 2018, and every large model since has been built on the Transformer structure and the BERT-style pre-training mechanism. The more interesting later developments are ViT, a large vision model that uses the Transformer mechanism, and MoE, which introduces an expert decision mechanism.

- Attention is all you need.

Google's pioneering Transformer is the basic architecture of all of today's large models, and the Transformer has become the fourth major deep learning architecture alongside MLP, CNN, and RNN. Google posted a paper on arXiv titled Attention Is All You Need, proposing an attention-based approach to sequence modeling problems such as machine translation. Traditional neural machine translation mostly uses an RNN or a CNN as the basis of the encoder-decoder model, whereas Google's attention-based Transformer abandons that convention and uses neither CNN nor RNN structures. The model is highly parallelizable, so it trains especially fast while also improving translation quality.
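The core operation of the paper, scaled dot-product attention softmax(QK^T / sqrt(d_k))V, is short enough to write out. The sketch below uses plain Python lists for tiny matrices and leaves out multi-head projections and masking; it is meant to show the computation, not to be an efficient implementation.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot-product attention for small list-of-lists matrices."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Similarity of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value rows.
        out.append(
            [sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))]
        )
    return out


K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
uniform = attention([[0.0, 0.0]], K, V)    # zero query: equal weights
focused = attention([[100.0, 0.0]], K, V)  # strongly matches the first key
```

Every query row is processed independently of the others, which is the source of the parallelism the paragraph above refers to: there is no step-by-step recurrence as in an RNN.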

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.

BERT, the first large pre-trained model released by Google, set off the wave of large pre-trained models. It hardly needs an introduction; you have surely heard of it. BERT stands for Bidirectional Encoder Representations from Transformers and is a pre-trained language representation model. Its key point is that pre-training no longer uses a traditional unidirectional language model or a shallow concatenation of two unidirectional language models, but a new masked language model (MLM), which produces deep bidirectional language representations. When the BERT paper was published, it reported new state-of-the-art results on 11 NLP (natural language processing) tasks, which was stunning.

- An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale.

ViT is the first large vision model from Google to use the Transformer. Nearly all the innovative large-model algorithms come from Google, and you have to hand it to them. ViT uses a vision Transformer, rather than a CNN or a hybrid approach, to perform image tasks. The results are promising but incomplete, because vision tasks other than classification, such as detection and segmentation, are not yet covered. Moreover, unlike in Vaswani et al. (2017), the Transformer's performance gains over CNNs are much more limited. The authors hypothesize that further pre-training could improve performance, since ViT scales relatively well compared with other existing models.

- GShard: Scaling Giant Models with Conditional Computation and Automatic Sharding.

Curiously, it seems as though every model whose name starts with G comes from Google. At ICLR 2021, Google took MoE further by applying it to Transformer-based neural machine translation. GShard replaces the feed-forward network (FFN) layers in the Transformer with MoE layers and combines the MoE layers neatly with data parallelism. In data-parallel training the model is already replicated several times across the training cluster. GShard implements the MoE layer by treating the FFN in each data-parallel replica as one expert of the MoE, and this design realizes MoE by introducing All-to-All communication.

Landmark large models

- GPT-3: Language Models are Few-Shot Learners.

The first 10-billion-scale large model released by OpenAI. It was truly groundbreaking, and today's large models all benchmark themselves against GPT-3. GPT-3 keeps OpenAI's own unidirectional language model training approach, but this time scales the model to 175 billion parameters and trains on 45TB of data. GPT-3 also targets a more general NLP model and addresses two shortcomings of current BERT-style models. Over-reliance on labeled in-domain data: even with the two-stage framework of pre-training plus fine-tuning, a certain amount of labeled in-domain data is still needed to get good results, and labeling data is expensive. Overfitting to the in-domain data distribution: in the fine-tuning stage, because in-domain data is limited, the model can only fit the distribution of the training data. With little data this may cause overfitting, which reduces the model's generalization ability and makes it hard to apply to other domains.

- T5: Text-To-Text Transfer Transformer.

Put simply, Google's T5 converts every NLP task into a Text-to-Text task. T5 is a very Google paper, and reading it leaves one feeling a little helpless: besides money and compute, Google also has ideas, the full package. Back to the paper itself: the point of T5 is not how much money was burned or how many leaderboards were topped, and its ideas are not especially novel. Its most important contribution is a general framework for the whole field of NLP pre-trained models, one that turns all tasks into a single form.

- Swin Transformer: Hierarchical Vision Transformer using Shifted Windows.

Microsoft Research Asia proposed Swin Transformer, a new vision Transformer that can serve as a general-purpose backbone for computer vision. The challenges of adapting the Transformer from language to vision come from the differences between the two domains, such as the large variation in the scale of visual entities and the high resolution of image pixels compared with words in text.

Sparse large models at trillion-parameter scale

- Modeling Task Relationships in Multi-task Learning with Multi-gate Mixture-of-Experts.

Google released the multi-task MoE. The goal of multi-task learning is to use one model to learn several objectives and tasks at the same time, but the prediction quality of common multi-task models is usually sensitive to the relationships between tasks (differing data distributions, which is also the problem ESMM addresses). Google therefore proposed Multi-gate Mixture-of-Experts (MMoE), which aims to learn from data how to trade off task-specific objectives against inter-task relationships. All tasks share a mixture-of-experts (MoE) structure to accommodate multi-task learning, and each task also has a trainable gating network to optimize that task.
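The structure described here, shared experts with one softmax gate per task, can be sketched in a few lines. This is a minimal illustration under stated assumptions: the experts are arbitrary Python functions, each gate is a plain linear layer given as a list of weight rows, and the task-specific towers that normally follow the mixing step are omitted.

```python
import math


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def mmoe_forward(x, experts, gates):
    """Mix shared expert outputs separately for every task.

    experts: list of functions mapping the input vector to an output vector.
    gates:   one weight matrix per task, with one row per expert.
    """
    expert_out = [f(x) for f in experts]  # computed once, shared by all tasks
    task_out = []
    for gate in gates:
        logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate]
        weights = softmax(logits)         # how much this task trusts each expert
        mixed = [
            sum(wt * out[j] for wt, out in zip(weights, expert_out))
            for j in range(len(expert_out[0]))
        ]
        task_out.append(mixed)
    return task_out


experts = [lambda x: [x[0], 0.0], lambda x: [0.0, x[1]]]
gates = [
    [[0.0, 0.0], [0.0, 0.0]],   # task A: zero logits, equal weight per expert
    [[10.0, 0.0], [0.0, 0.0]],  # task B: strongly prefers the first expert
]
outputs = mmoe_forward([2.0, 4.0], experts, gates)
```

The two tasks see the same expert outputs but combine them differently, which is how the gates let related tasks share experts while unrelated tasks drift toward different ones.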

- Switch transformers: Scaling to trillion parameter models with simple and efficient sparsity.

Google launched Switch Transformer, the first very large sparse language model with trillions of parameters. They claim the technique can train language models with more than a trillion parameters. It raises the parameter count directly from GPT-3's 175 billion to 1.6 trillion, and it is 4 times as fast as T5-XXL, the language model Google developed earlier.

06

Memory and compute optimization

Last come the optimizations, mainly parallel optimizers, model compression and quantization, memory reuse, and mixed precision training. Here are some of the most classic papers.

- Accurate, Large Minibatch SGD: Training ImageNet in 1 Hour.

A 2017 paper on optimization. Its key conclusion is simple, the linear scaling rule, but the analysis is good and touches on many fundamentals of deep learning. The paper analyzes the rule in detail from an experimental perspective. Although it studies training with larger batches, the same reasoning also helps those of us on a tight budget: it tells us how to adjust some hyperparameters when we do not have enough GPUs or GPU memory.
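The linear scaling rule says that when the batch size is multiplied by k, the learning rate should be multiplied by k as well. A minimal sketch follows; the reference values (learning rate 0.1 at batch size 256) are the ones used in the paper, while the warmup helper and its step counts are illustrative assumptions.

```python
def scaled_lr(base_lr, base_batch, batch):
    """Linear scaling rule: learning rate grows in proportion to batch size."""
    return base_lr * batch / base_batch


def warmup_lr(step, warmup_steps, start_lr, target_lr):
    """Ramp linearly from start_lr to target_lr, then hold target_lr."""
    if step >= warmup_steps:
        return target_lr
    return start_lr + (target_lr - start_lr) * step / warmup_steps


large = scaled_lr(base_lr=0.1, base_batch=256, batch=8192)  # many GPUs
small = scaled_lr(base_lr=0.1, base_batch=256, batch=64)    # a single small GPU
halfway = warmup_lr(step=50, warmup_steps=100, start_lr=0.1, target_lr=large)
```

The same function covers the budget case mentioned above: with a quarter of the reference batch size, the rule suggests a quarter of the learning rate.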

Memory optimization related papers :

- Training Deep Nets with Sublinear Memory Cost.

Chen Tianqi will be a familiar name to insiders. Proposed in 2016, this paper is mainly about memory reuse in neural networks. It presents a systematic method for reducing memory consumption in deep neural network training. The focus is on reducing the memory cost of storing intermediate results (feature maps) and gradients, because in many common deep architectures the parameters are relatively small compared with the intermediate feature maps. Computation graph analysis is used to perform automatic in-place operations and memory sharing optimizations. More importantly, the paper also proposes a new method that trades computation for memory.
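The trade of computation for memory can be illustrated with a simple counting model. The sketch assumes a chain of equally sized layers split into segments, where only the activation at each segment boundary is kept and one segment at a time is recomputed during the backward pass; the cost model and function names are simplifications for illustration, not the paper's implementation.

```python
import math


def checkpoint_memory(num_layers, num_segments):
    """Activations held at once: one checkpoint per segment plus the
    activations of the single segment currently being recomputed."""
    segment_len = math.ceil(num_layers / num_segments)
    return num_segments + segment_len


def best_segments(num_layers):
    """Segment count that minimizes the simple cost model above."""
    return min(
        range(1, num_layers + 1),
        key=lambda k: checkpoint_memory(num_layers, k),
    )


layers = 100
k = best_segments(layers)
memory_with_checkpoints = checkpoint_memory(layers, k)
memory_without = layers  # keep every layer's activation
```

For 100 layers the minimum falls at about sqrt(100) = 10 segments, which is where the sublinear, roughly O(sqrt(n)), memory cost in the paper's title comes from; the price is extra forward computation during the backward pass.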

- Gist: Efficient data encoding for deep neural network training.

Gist is a paper from ISCA'18, a top conference. It is not new, but it is highly cited among systems papers, and reading it shows that the experiments are truly solid and worth learning from. The main idea is how to reduce GPU memory use in neural network training. Gist takes a data compression approach: it identifies the training patterns and the characteristics of each layer's data, then compresses specific data with different schemes to save space.

- Adafactor: Adaptive learning rates with sublinear memory cost.

AdaFactor is a new optimizer proposed by Google. It has an adaptive learning rate, yet it saves even more GPU memory than RMSProp, and it also fixes some of Adam's shortcomings. To be honest, AdaFactor's analysis of Adam is quite classic and worth careful study. For readers interested in optimization problems, it is a rare case study.
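The memory saving comes from not storing a full second-moment matrix for each weight matrix. Instead, only row sums and column sums are kept, and the full matrix is approximated from them when needed. The sketch below shows just that factoring step on a tiny example; the rest of the optimizer (moving averages, update clipping, relative step sizes) is left out, and the helper names are made up for illustration.

```python
def factor(V):
    """Keep only the row sums and column sums of a non-negative matrix."""
    rows = [sum(r) for r in V]
    cols = [sum(V[i][j] for i in range(len(V))) for j in range(len(V[0]))]
    return rows, cols


def reconstruct(rows, cols):
    """Approximate the matrix as (row sum * column sum) / total sum."""
    total = sum(rows)
    return [[r * c / total for c in cols] for r in rows]


# A rank-1 matrix (outer product of [1, 2] and [3, 4, 5]) is recovered exactly.
V = [[3.0, 4.0, 5.0], [6.0, 8.0, 10.0]]
rows, cols = factor(V)
approx = reconstruct(rows, cols)

stored_full = len(V) * len(V[0])      # n * m numbers
stored_factored = len(rows) + len(cols)  # n + m numbers
```

For an n x m weight matrix the factored state needs n + m numbers instead of n x m, which is the sublinear memory cost in the paper's title.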

- ZeRO: Memory Optimizations Toward Training Trillion Parameter Models.

Microsoft proposed this classic algorithm and built DeepSpeed, a PyTorch-based distributed parallel framework, around it. In deep learning training under the standard data-parallel mode, every machine consumes a fixed, full amount of memory for the model, and this does not shrink as the degree of data parallelism grows, so machine memory usually becomes the training bottleneck. The paper develops the Zero Redundancy Optimizer (ZeRO), mainly to solve the problem of insufficient memory under data parallelism. The model's memory is spread evenly across the GPUs, so the memory consumed on each GPU is inversely proportional to the data-parallel degree, without hurting communication efficiency.

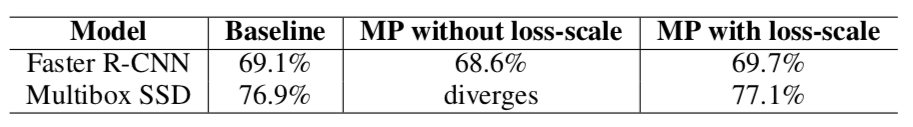
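The per-GPU memory for model states can be estimated with the accounting used in the ZeRO paper for mixed precision Adam: 2 bytes per parameter for fp16 weights, 2 bytes for fp16 gradients, and K = 12 bytes for the optimizer states (fp32 weights, momentum, and variance). The function below is a sketch of that arithmetic only; its name and the stage numbering are assumptions for illustration, and activations and buffers are ignored.

```python
def zero_memory_gb(num_params, num_gpus, stage, k=12):
    """Per-GPU model-state memory in GB (1 GB = 1e9 bytes).

    stage 0: plain data parallelism (everything replicated)
    stage 1: optimizer states sharded across GPUs
    stage 2: optimizer states and gradients sharded
    stage 3: optimizer states, gradients, and parameters sharded
    """
    params = 2 * num_params   # fp16 weights
    grads = 2 * num_params    # fp16 gradients
    opt = k * num_params      # optimizer states
    if stage >= 1:
        opt /= num_gpus
    if stage >= 2:
        grads /= num_gpus
    if stage >= 3:
        params /= num_gpus
    return (params + grads + opt) / 1e9


# The paper's running example: a 7.5B-parameter model on 64 GPUs.
usage = [zero_memory_gb(7.5e9, 64, stage) for stage in range(4)]
```

With everything sharded (stage 3) the per-GPU figure is the replicated figure divided by the number of GPUs, which is the inverse proportionality described above.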

- Mixed precision training.

The paper on mixed precision training. For the details, see ZOMI's article "The most complete mixed precision training principle in the whole network", which covers the content.
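One reason mixed precision training needs care is that small gradients underflow in half precision, and loss scaling is the usual remedy. The effect can be reproduced with the standard library alone, since `struct` supports the IEEE half-precision format `'e'`. The gradient value and the scale factor below are arbitrary choices for illustration.

```python
import struct


def to_fp16(x):
    """Round a Python float to IEEE half precision and convert it back."""
    return struct.unpack('e', struct.pack('e', x))[0]


grad = 1e-8          # a small but meaningful gradient value
loss_scale = 1024.0  # illustrative scale factor

naive = to_fp16(grad)                 # stored directly in fp16: underflows
scaled = to_fp16(grad * loss_scale)   # scaled before the fp16 conversion
recovered = scaled / loss_scale       # unscaled again in higher precision
```

Multiplying the loss (and hence every gradient) by a constant before the backward pass shifts small values into the range half precision can represent; dividing by the same constant before the weight update restores the original magnitude.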

Underlying system architecture

- Parameter Server for Distributed Machine Learning.

A paper published by Li Mu, chief scientist at Amazon, during his studies. Industry needs to train large machine learning models, and some widely used models have two characteristics of scale: 1. the deep learning model has a very large number of parameters, beyond the capacity of a single machine; 2. the training data is huge and needs distributed parallelism to speed things up. Existing MapReduce-like frameworks do not meet these needs well. So Li Mu published papers at OSDI and NIPS: the OSDI version leans toward system design, while the NIPS version leans toward the algorithm level. It is a foundational work on distributed training architecture for deep learning.

- More Effective Distributed ML via a Stale Synchronous Parallel Parameter Server.

- GeePS: Scalable deep learning on distributed GPUs with a GPU-specialized parameter server.

Distributed deep learning can use two modes, BSP and SSP. Paper 1 is about SSP, which lets faster workers use stale parameters in order to balance the time spent on computation against the network communication overhead. With SSP each iteration makes slower convergence progress but takes less time, and on CPU clusters SSP's overall convergence is faster than BSP's. On GPU clusters, however, paper 2 finds that BSP's overall convergence is much faster than SSP's.
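The difference between the two modes comes down to one rule: a worker may start its next iteration only if it is at most s iterations ahead of the slowest worker, and BSP is the special case s = 0. The tick-based simulation below is a toy model with made-up worker speeds, intended only to show how a staleness bound reduces blocking.

```python
def can_proceed(worker_clock, all_clocks, staleness):
    """SSP rule: stay within `staleness` iterations of the slowest worker."""
    return worker_clock - min(all_clocks) <= staleness


def simulate(speeds, staleness, ticks):
    """speeds[i] is the number of ticks worker i needs per iteration.

    Returns (finished iterations per worker, total ticks spent blocked).
    """
    clocks = [0] * len(speeds)
    progress = [0] * len(speeds)
    blocked = 0
    for _ in range(ticks):
        snapshot = list(clocks)  # all workers see the same state this tick
        for i, cost in enumerate(speeds):
            if not can_proceed(snapshot[i], snapshot, staleness):
                blocked += 1     # waiting for slower workers
                continue
            progress[i] += 1
            if progress[i] == cost:
                progress[i] = 0
                clocks[i] += 1   # one iteration finished
    return clocks, blocked


bsp_result = simulate(speeds=[1, 2], staleness=0, ticks=4)
ssp_result = simulate(speeds=[1, 2], staleness=1, ticks=4)
```

In this toy run the fast worker blocks less under SSP and completes more iterations, at the price of working with parameters that may be one iteration out of date.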

- Bandwidth Optimal All-reduce Algorithms for Clusters of Workstations.

- Bringing HPC Techniques to Deep Learning.

In 2017 Baidu, together with NVIDIA, proposed the ring all-reduce communication method, which has since become the standard communication method in the industry and for large models. Over the past few years neural networks have grown in scale, and training can require large amounts of data and compute. To provide the required compute, we scale models to dozens of GPUs using a technique that is common in high-performance computing (HPC) but underused in deep learning. This ring allreduce technique reduces the time spent communicating between GPUs, so that they can spend more time on useful computation. At Baidu's Silicon Valley AI Lab (SVAIL), we have successfully used these techniques to train state-of-the-art speech recognition models. We are happy to release our ring allreduce implementation as a library and as a patch for TensorFlow, and we hope that by releasing them we can help the deep learning community scale its models more effectively.
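The ring algorithm can be simulated in a single process to show how the data moves. Each device splits its buffer into n chunks; n - 1 scatter-reduce steps leave every device with one fully summed chunk, and n - 1 all-gather steps then circulate those chunks around the ring. The sketch assumes the buffer length is divisible by the number of devices and is not the code of any released library.

```python
def ring_all_reduce(buffers):
    """Sum equal-length vectors across n simulated devices on a ring."""
    n = len(buffers)
    size = len(buffers[0]) // n  # chunk length (assumes exact division)
    data = [list(b) for b in buffers]

    def chunk(c):
        return range(c * size, (c + 1) * size)

    # Phase 1, scatter-reduce: in step s, device d sends chunk (d - s) % n
    # to its right neighbor, which adds it to its own copy.
    for s in range(n - 1):
        sends = [
            (d, (d - s) % n, [data[d][i] for i in chunk((d - s) % n)])
            for d in range(n)
        ]
        for d, c, payload in sends:
            dst = (d + 1) % n
            for i, v in zip(chunk(c), payload):
                data[dst][i] += v

    # Device d now holds the fully reduced chunk (d + 1) % n.
    # Phase 2, all-gather: in step s, device d forwards chunk (d + 1 - s) % n,
    # and the receiver overwrites its copy with the finished values.
    for s in range(n - 1):
        sends = [
            (d, (d + 1 - s) % n, [data[d][i] for i in chunk((d + 1 - s) % n)])
            for d in range(n)
        ]
        for d, c, payload in sends:
            dst = (d + 1) % n
            for i, v in zip(chunk(c), payload):
                data[dst][i] = v
    return data


result = ring_all_reduce([[1, 2, 3], [10, 20, 30], [100, 200, 300]])
```

Each device sends 2(n - 1) chunks of 1/n of the buffer, so the traffic per device stays roughly constant as devices are added, and no single node has to receive everything as a central server would.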

MindSpore Official information

GitHub : https://github.com/mindspore-ai/mindspore

Gitee : https://gitee.com/mindspore/mindspore

official QQ Group : 486831414

边栏推荐

- Introduction de l'opérateur

- Is it reliable to open an account on a stock trading mobile phone? Is it safe to open an account online and speculate in stocks

- Cve-2022-30190 follina office rce analysis [attached with customized word template POC]

- Solid and ambient colors

- 低佣金免费开户渠道安全吗?

- The most complete hybrid precision training principle in the whole network

- Is it safe to open an account on the mobile phone to buy stocks? Is it safe to open an account on the Internet to speculate in stocks

- 手机炒股靠谱吗 网上开户炒股安全吗

- 固有色和环境色

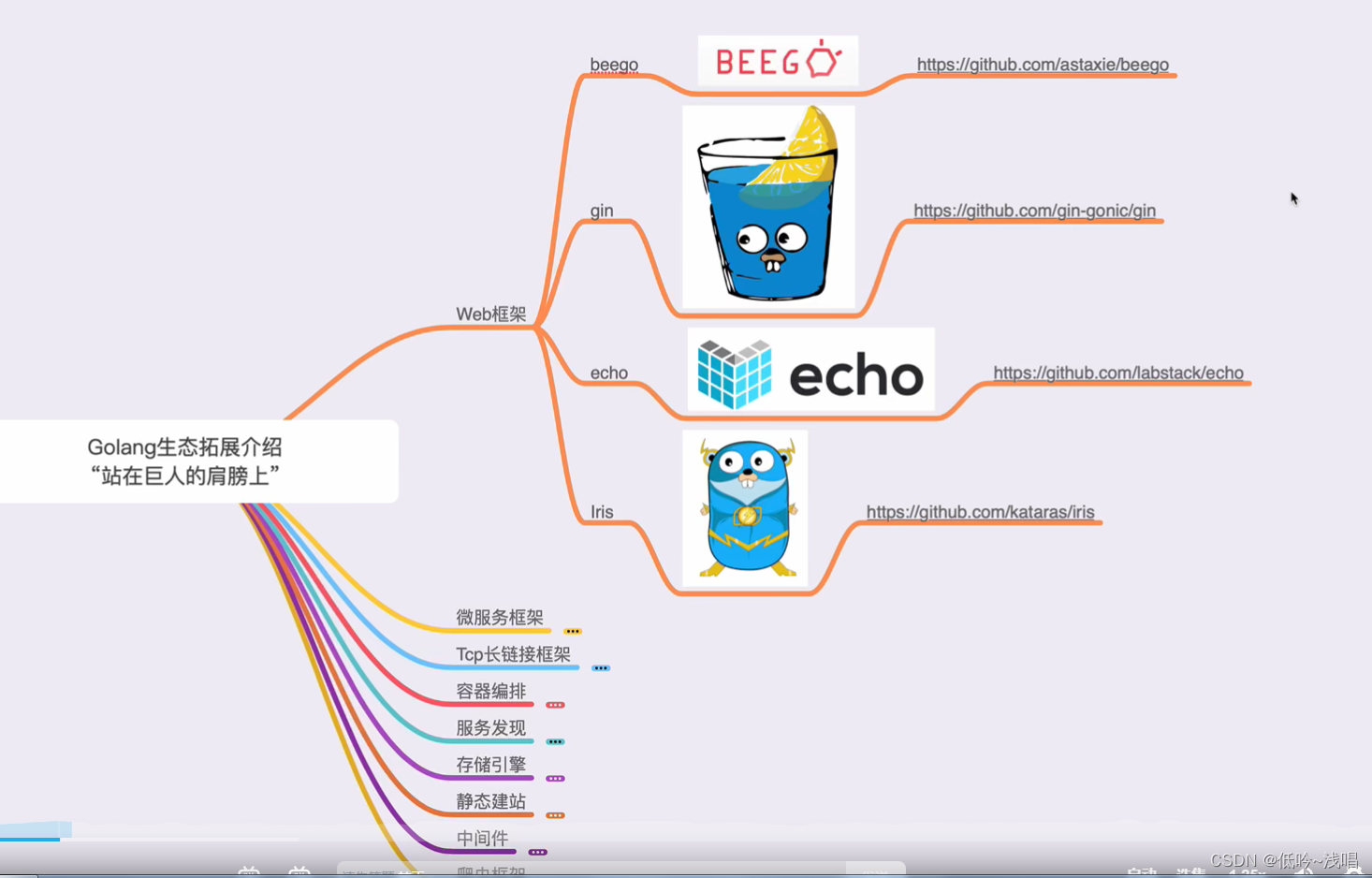

- Microservices and container choreography in go

猜你喜欢

随机推荐

ASP.Net Core创建MVC项目上传文件(缓冲方式)

Thesis study -- Analysis of the influence of rainfall field division method on rainfall control rate

国内外最好的12款项目管理系统优劣势分析

Operator介紹

目标追踪拍摄?目标遮挡拍摄?拥有19亿安装量的花瓣app,究竟有什么别出心裁的功能如此吸引用户?

低佣金免费开户渠道安全吗?

手机网上开户炒股安全吗 网上开户炒股安全吗

Leetcode 718. 最长重复子数组(暴力枚举,待解决)

Is the low commission free account opening channel safe?

Redcap is ready to come out. It is indispensable to build a "meta universe"

Le principe le plus complet de formation à la précision hybride pour l'ensemble du réseau

golang语言的开发学习路线

Crawler and Middleware of go language

Tensorrt notes (VII) sorting out tensorrt use problems

炒股手机上开户可靠吗? 网上开户炒股安全吗

go语言的爬虫和中间件

Different subsequence problems I

go语言中的私聊功能处理

12 color ring three primary colors

入侵痕迹清理