Using Keras to build a basic model: iris flower classification

2022-06-11 04:51:00 【Panbohhhhh】

I have recently been teaching myself Keras and have found it very useful. This post records my learning process and includes the source code.

The code is commented to explain the ideas in detail.

This is a practice exercise with many shortcomings; discussion is welcome.

Step 1: Build a model

Keras has two types of models: the Sequential model and the functional model.

Here I use the Sequential model and build it layer by layer with the .add() method.

# define a network
def baseline_model():
    model = Sequential()  # a sequential (layer-by-layer) model
    model.add(Dense(7, input_dim=4, activation='tanh'))  # hidden layer with 7 nodes; the input has 4 features
    model.add(Dense(3, activation='softmax'))  # output layer, one node per class
    model.compile(loss='mean_squared_error', optimizer='sgd', metrics=['accuracy'])  # mean squared error loss, SGD optimizer, accuracy metric
    return model

Note that the model needs to know the shape of its input data. Only the first layer needs a shape argument (here input_dim=4); the following layers infer their input shapes automatically.
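To make the shape inference concrete, here is a small sketch in plain Python (no Keras needed) that passes the input size through the two Dense layers and counts their trainable parameters. The helper name `dense_param_count` is made up for this illustration; the layer sizes are the ones used in `baseline_model`.

```python
def dense_param_count(n_inputs, n_units):
    """A Dense layer has one weight per (input, unit) pair plus one bias per unit."""
    return n_inputs * n_units + n_units


input_dim = 4         # only the first layer is told its input size
layer_units = [7, 3]  # units of the two Dense layers in baseline_model

n_inputs = input_dim
total = 0
shapes = []
for units in layer_units:
    params = dense_param_count(n_inputs, units)
    shapes.append((n_inputs, units, params))
    total += params
    n_inputs = units  # the output size of this layer is the input size of the next

for n_in, n_out, params in shapes:
    print(f"Dense: {n_in} -> {n_out}, {params} parameters")
print("total parameters:", total)
```

The second layer never receives an explicit input size; it is derived from the 7 units of the layer before it. The network has 35 + 24 = 59 trainable parameters in total.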

Before training, compile() configures the learning process. Its three arguments are the optimizer (optimizer), the loss function (loss) and the evaluation metrics (metrics).
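As a rough illustration of what the loss and the metric measure, the sketch below computes mean squared error and accuracy by hand for two one-hot targets. It is plain Python, not Keras code; the two predictions are invented numbers, and the function names are my own. It mirrors my understanding that the error is averaged over the classes of each sample and then over the batch.

```python
def mean_squared_error(y_true, y_pred):
    """Mean of the squared differences over the classes of one sample."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def is_correct(y_true, y_pred):
    """A sample counts as correct when the largest predicted probability
    sits at the same index as the 1 in the one-hot target."""
    return y_true.index(max(y_true)) == y_pred.index(max(y_pred))


# Hypothetical batch of two samples (values made up for illustration)
targets = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
predictions = [[0.8, 0.1, 0.1], [0.6, 0.3, 0.1]]

losses = [mean_squared_error(t, p) for t, p in zip(targets, predictions)]
batch_loss = sum(losses) / len(losses)
accuracy = sum(is_correct(t, p) for t, p in zip(targets, predictions)) / len(targets)

print("per-sample loss:", losses)
print("batch loss:", batch_loss)
print("accuracy:", accuracy)
```

The first sample is classified correctly and has a small loss; the second puts most of its probability on the wrong class, so its loss is larger and the accuracy of the batch is 0.5.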

Step 2: Training

Note that I use k-fold cross-validation here.

estimator = KerasClassifier(build_fn=baseline_model, epochs=20, batch_size=1, verbose=1)

- batch_size: integer, the number of samples in each batch used for one gradient descent update.

- epochs: integer, the number of epochs to train for.

- verbose: log display; 0 prints nothing, 1 shows a progress bar, 2 prints one line per epoch.
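The sketch below shows, in plain Python, how k-fold splitting and these training parameters interact. It is not scikit-learn's implementation: `kfold_indices` is a made-up helper that shuffles differently from `KFold`, and the dataset size of 150 is an assumption (the usual size of the iris dataset). The fold count of 10 and the seed of 13 are taken from the complete code at the end of this post.

```python
import math
import random


def kfold_indices(n_samples, n_splits, seed):
    """Shuffle the sample indices once, then cut them into n_splits folds.
    Yields (train_indices, validation_indices) pairs."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # The first (n_samples % n_splits) folds get one extra sample
    fold_sizes = [n_samples // n_splits + (1 if i < n_samples % n_splits else 0)
                  for i in range(n_splits)]
    start = 0
    for size in fold_sizes:
        val = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, val
        start += size


n_samples = 150  # assumption: standard iris dataset size
n_splits = 10
epochs = 20
batch_size = 1

folds = list(kfold_indices(n_samples, n_splits, seed=13))
train, val = folds[0]

# One gradient update per batch, so batch_size=1 means one update per sample
updates_per_epoch = math.ceil(len(train) / batch_size)
total_updates = updates_per_epoch * epochs

print("training samples per fold:", len(train))
print("validation samples per fold:", len(val))
print("updates per epoch:", updates_per_epoch)
print("updates per fold:", total_updates)
```

Under these assumptions each fold trains on 135 samples and validates on 15, so batch_size=1 gives 135 weight updates per epoch and 2700 per fold over 20 epochs.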

Step 3: Save the model and output its performance metrics

This step covers the complete process: finish training -> save the model -> load the model -> output the key values.

In short, this gives a basic Keras workflow.

I will expand on it in the future.

Step 4: Output results

Take the first row of the output: [0.8176036 0.1570888 0.02530759]. The data is divided into 3 classes: setosa, versicolor and virginica.

The three values in this row are the predicted probabilities of the three classes. The largest, 0.817, belongs to the first class, so this sample is predicted to be setosa.

That is the final result.
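Reading the predicted class off such a row amounts to taking the index of the largest probability. A minimal sketch in plain Python, using the row quoted above and assuming the classes are ordered as they are named in the text:

```python
class_names = ["setosa", "versicolor", "virginica"]  # order as named in the text
first_row = [0.8176036, 0.1570888, 0.02530759]       # first row of the model output

# Index of the largest probability (what an argmax does)
best_index = max(range(len(first_row)), key=lambda i: first_row[i])
predicted_class = class_names[best_index]

print("predicted class:", predicted_class)
print("probability:", first_row[best_index])
print("probabilities sum to about 1:", abs(sum(first_row) - 1.0) < 1e-6)
```

Because the output layer uses softmax, the three values sum to roughly 1, which is why they can be read as probabilities.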

See the complete code below for details.

Complete code:

# -*- coding: utf-8 -*-
import numpy as np
import pandas as pd
from keras.models import Sequential  # sequential (layer-by-layer) model
from keras.layers import Dense  # fully connected layer
from keras.wrappers.scikit_learn import KerasClassifier
from keras.utils import np_utils
from sklearn.model_selection import cross_val_score  # cross-validation
from sklearn.model_selection import KFold  # split the data into training and validation folds
from sklearn.preprocessing import LabelEncoder  # encode text labels as integers
from keras.models import model_from_json  # rebuild a model saved as JSON so it can predict directly
from keras.optimizers import SGD

# reproducibility
seed = 13
np.random.seed(seed)  # fix the random seed so the results can be repeated

# load data
df = pd.read_csv('iris.csv')  # path to the data file
X = df.values[:, 0:4].astype(float)
Y = df.values[:, 4]

encoder = LabelEncoder()
Y_encoded = encoder.fit_transform(Y)  # convert the 3 class names to the integers 0, 1, 2
Y_onehot = np_utils.to_categorical(Y_encoded)  # used together with LabelEncoder: text labels -> integers -> one-hot matrix

'''
What LabelEncoder and to_categorical do:

from keras.utils.np_utils import *
# class vector definition
b = [0,1,2,3,4,5,6,7,8]
# call to_categorical to convert b into 9 classes
b = to_categorical(b, 9)
print(b)

The results are as follows:
[[1. 0. 0. 0. 0. 0. 0. 0. 0.]
 [0. 1. 0. 0. 0. 0. 0. 0. 0.]
 [0. 0. 1. 0. 0. 0. 0. 0. 0.]
 [0. 0. 0. 1. 0. 0. 0. 0. 0.]
 [0. 0. 0. 0. 1. 0. 0. 0. 0.]
 [0. 0. 0. 0. 0. 1. 0. 0. 0.]
 [0. 0. 0. 0. 0. 0. 1. 0. 0.]
 [0. 0. 0. 0. 0. 0. 0. 1. 0.]
 [0. 0. 0. 0. 0. 0. 0. 0. 1.]]
'''

sgd = SGD(lr=0.01, decay=1e-6, momentum=0.9, nesterov=True)  # note: not used below, because compile() is given the string 'sgd' (default settings)

# define a network
def baseline_model():
    model = Sequential()  # a sequential (layer-by-layer) model
    model.add(Dense(7, input_dim=4, activation='tanh'))  # hidden layer with 7 nodes; the input has 4 features
    model.add(Dense(3, activation='softmax'))  # output layer, one node per class
    model.compile(loss='mean_squared_error', optimizer='sgd', metrics=['accuracy'])  # mean squared error loss, SGD optimizer, accuracy metric
    return model

estimator = KerasClassifier(build_fn=baseline_model, epochs=20, batch_size=1, verbose=1)

# evaluate
kfold = KFold(n_splits=10, shuffle=True, random_state=seed)  # 10 folds, shuffled with a fixed seed
result = cross_val_score(estimator, X, Y_onehot, cv=kfold)
print('Accuracy of cross validation, mean %.2f, std %.2f' % (result.mean(), result.std()))

# save model
estimator.fit(X, Y_onehot)
model_json = estimator.model.to_json()
with open('model.json', 'w') as json_file:
    json_file.write(model_json)
estimator.model.save_weights('model.h5')
print('saved model to disk')

# load model and use it for prediction
with open('model.json', 'r') as json_file:
    loaded_model_json = json_file.read()
loaded_model = model_from_json(loaded_model_json)  # rebuild the network structure
loaded_model.load_weights('model.h5')  # load the trained weights of each layer
print('loaded model from disk')

predicted = loaded_model.predict(X)
print('predicted probability:' + str(predicted))

predicted_label = np.argmax(predicted, axis=1)  # class index with the highest probability in each row
print('predicted label:' + str(predicted_label))
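The label-encoding step in the listing above (text labels -> integers -> one-hot rows) can be sketched without Keras or scikit-learn as follows. The functions `label_encode` and `one_hot` are my own stand-ins for illustration, not the library implementations, and the four sample labels are made up.

```python
def label_encode(labels):
    """Map each distinct label to an integer, with the classes in sorted order."""
    classes = sorted(set(labels))
    lookup = {name: i for i, name in enumerate(classes)}
    return [lookup[name] for name in labels], classes


def one_hot(codes, num_classes):
    """Turn each integer code into a row with a single 1.0 at that index."""
    rows = []
    for code in codes:
        row = [0.0] * num_classes
        row[code] = 1.0
        rows.append(row)
    return rows


labels = ["setosa", "virginica", "versicolor", "setosa"]  # made-up sample labels
codes, classes = label_encode(labels)
onehot = one_hot(codes, len(classes))

print("classes:", classes)
print("codes:", codes)
for row in onehot:
    print(row)
```

Each row has exactly one 1.0, in the column of its class, which is the target format that the 3-node softmax output layer is trained against.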

边栏推荐

- International qihuo: what are the risks of Zhengda master account

- The second discussion class on mathematical basis of information and communication

- [Transformer]Is it Time to Replace CNNs with Transformers for Medical Images?

- Tianchi - student test score forecast

- What is the difference between a wired network card and a wireless network card?

- Bas Bound, Upper Bound, deux points

- PCB ground wire design_ Single point grounding_ Bobbin line bold

- Leetcode question brushing series - mode 2 (datastructure linked list) - 206:reverse linked list

- Leetcode classic guide

- Lianrui electronics made an appointment with you with SIFA to see two network cards in the industry's leading industrial automation field first

猜你喜欢

Redis master-slave replication, sentinel, cluster cluster principle + experiment (wait, it will be later, but it will be better)

PCB地线设计_单点接地_底线加粗

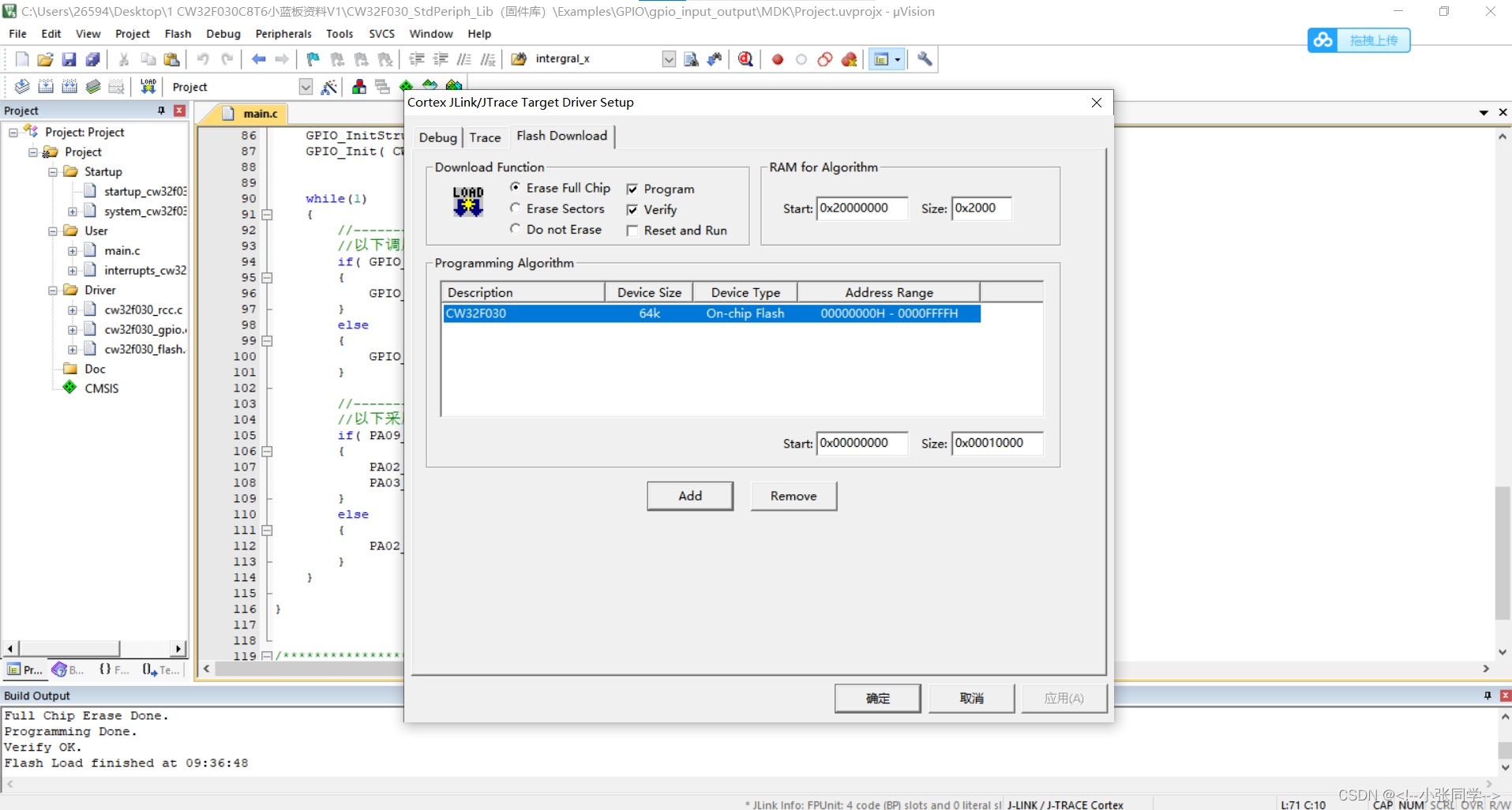

Problems in compiling core source cw32f030c8t6 with keil5

Yolact paper reading and analysis

Win10+manjaro dual system installation

选择数字资产托管人时,要问的 6 个问题

![[Transformer]AutoFormerV2:Searching the Search Space of Vision Transformer](/img/33/32796e4f807db99898d471ac5dfe2c.jpg)

[Transformer]AutoFormerV2:Searching the Search Space of Vision Transformer

Mathematical basis of information and communication -- the first experiment

The third small class discussion on the fundamentals of information and communication

Sealem Finance打造Web3去中心化金融平台基础设施

随机推荐

USB转232 转TTL概述

Possible errors during alphapose installation test

华为设备配置BGP/MPLS IP 虚拟专用网地址空间重叠

Legend has it that setting shader attributes with shader ID can improve efficiency:)

Exness: liquidity series - order block, imbalance (II)

Emlog new navigation source code / with user center

Network adapter purchase guide

Visual (Single) Object Tracking -- SiamRPN

C language test question 3 (program multiple choice question - including detailed explanation of knowledge points)

Relational database system

[Transformer]Is it Time to Replace CNNs with Transformers for Medical Images?

董明珠称“格力手机做得不比苹果差”哪里来的底气?

MySQL regularly deletes expired data.

Yolact paper reading and analysis

华为设备配置通过GRE隧道接入虚拟专用网

[Transformer]MViTv2:Improved Multiscale Vision Transformers for Classification and Detection

Anaconda installation and use process

Comparison of gigabit network card chips: who is better, a rising star or a Jianghu elder?

lower_bound,upper_bound,二分

Leetcode classic guide