当前位置:网站首页>Master the use of auto analyze in data warehouse

Master the use of auto analyze in data warehouse

2022-07-04 17:29:00 【Huawei cloud developer Alliance】

Abstract :analyze Whether the implementation is timely , To some extent, it directly determines SQL Speed of execution .

This article is shared from Huawei cloud community 《 Article to read autoanalyze Use 【 Gauss is not a mathematician this time 】》, author : leapdb.

analyze Whether the implementation is timely , To some extent, it directly determines SQL Speed of execution . therefore ,GaussDB(DWS) Automatic statistical information collection is introduced , It can make users no longer worry about whether the statistical information is expired .

1. Automatically collect scenes

There are usually five scenarios where automatic statistical information collection is required : Batch DML At the end , The incremental DML At the end ,DDL At the end , Query start and background scheduled tasks .

therefore , In order to avoid being against DML,DDL Unnecessary performance overhead and deadlock risk , We chose to trigger before the query started analzye.

2. Automatic collection principle

GaussDB(DWS) stay SQL In the process of execution , It will record the addition, deletion, modification and query of relevant runtime Statistics , And record the shared memory after the transaction is committed or rolled back .

This information can be obtained through “pg_stat_all_tables View ” Inquire about , You can also query through the following functions .

pg_stat_get_tuples_inserted -- Table accumulation insert Number of pieces

pg_stat_get_tuples_updated -- Table accumulation update Number of pieces

pg_stat_get_tuples_deleted -- Table accumulation delete Number of pieces

pg_stat_get_tuples_changed -- Table since last analyze since , Number of changes

pg_stat_get_last_analyze_time -- Query the last analyze Time therefore , Based on shared memory " Table since last analyze Number of entries modified since " Whether a certain threshold is exceeded , You can decide whether you need to do analyze 了 .

3. Automatically collect thresholds

3.1 Global threshold

autovacuum_analyze_threshold # The table triggers analyze Minimum modification of

autovacuum_analyze_scale_factor # The table triggers analyze Percentage of changes when When " Table since last analyze Number of entries modified since " >= autovacuum_analyze_threshold + Table estimated size * autovacuum_analyze_scale_factor when , It needs to be triggered automatically analyze.

3.2 Table level threshold

-- Set table level threshold

ALTER TABLE item SET (autovacuum_analyze_threshold=50);

ALTER TABLE item SET (autovacuum_analyze_scale_factor=0.1);

-- Query threshold

postgres=# select pg_options_to_table(reloptions) from pg_class where relname='item';

pg_options_to_table

---------------------------------------

(autovacuum_analyze_threshold,50)

(autovacuum_analyze_scale_factor,0.1)

(2 rows)

-- Reset threshold

ALTER TABLE item RESET (autovacuum_analyze_threshold);

ALTER TABLE item RESET (autovacuum_analyze_scale_factor);The data characteristics of different tables are different , Need to trigger analyze The threshold may have different requirements . The table level threshold priority is higher than the global threshold .

3.3 Check whether the modification amount of the table exceeds the threshold ( Only the current CN)

postgres=# select pg_stat_get_local_analyze_status('t_analyze'::regclass);

pg_stat_get_local_analyze_status

----------------------------------

Analyze not needed

(1 row)4. Automatic collection method

GaussDB(DWS) Automatic analysis of the following table in three scenarios is provided .

- When there is “ Statistics are completely missing ” or “ The modification amount reaches analyze threshold ” Table of , And the implementation plan does not take FQS (Fast Query Shipping) Execution time , Through autoanalyze Control the automatic collection of statistical information in the following table in this scenario . here , The query statement will wait for the statistics to be collected successfully , Generate a better execution plan , Then execute the original query statement .

- When autovacuum Set to on when , The system will start regularly autovacuum Threads , Yes “ The modification amount reaches analyze threshold ” The table automatically collects statistical information in the background .

5. Freeze Statistics

5.1 Freeze table distinct value

When a watch distinct It's always inaccurate , for example : Data pile up and repeat the scene . If the watch distinct Fixed value , You can freeze the table in the following ways distinct value .

postgres=# alter table lineitem alter l_orderkey set (n_distinct=0.9);

ALTER TABLE

postgres=# select relname,attname,attoptions from pg_attribute a,pg_class c where c.oid=a.attrelid and attname='l_orderkey';

relname | attname | attoptions

----------+------------+------------------

lineitem | l_orderkey | {n_distinct=0.9}

(1 row)

postgres=# alter table lineitem alter l_orderkey reset (n_distinct);

ALTER TABLE

postgres=# select relname,attname,attoptions from pg_attribute a,pg_class c where c.oid=a.attrelid and attname='l_orderkey';

relname | attname | attoptions

----------+------------+------------

lineitem | l_orderkey |

(1 row)5.2. Freeze all statistics of the table

If the data characteristics of the table are basically unchanged , You can also freeze the statistics of the table , To avoid repeating analyze.

alter table table_name set frozen_stats=true;6. Manually check whether the table needs to be done analyze

a. I don't want to trigger the database background task during the business peak , So I don't want to open autovacuum To trigger analyze, What do I do ?

b. The business has modified a number of tables , I want to do these watches right away analyze, I don't know what watches are there , What do I do ?

c. Before the business peak comes, I want to do a test on the tables near the threshold analyze, What do I do ?

We will autovacuum Check the threshold to determine whether analyze Logic , Extraction becomes a function , Help users flexibly and proactively check which tables need to be done analyze.

6.1 Determine whether the table needs analyze( Serial version , Applicable to all historical versions )

-- the function for get all pg_stat_activity information in all CN of current cluster.

CREATE OR REPLACE FUNCTION pg_catalog.pgxc_stat_table_need_analyze(in table_name text)

RETURNS BOOl

AS $$

DECLARE

row_data record;

coor_name record;

fet_active text;

fetch_coor text;

relTuples int4;

changedTuples int4:= 0;

rel_anl_threshold int4;

rel_anl_scale_factor float4;

sys_anl_threshold int4;

sys_anl_scale_factor float4;

anl_threshold int4;

anl_scale_factor float4;

need_analyze bool := false;

BEGIN

--Get all the node names

fetch_coor := 'SELECT node_name FROM pgxc_node WHERE node_type=''C''';

FOR coor_name IN EXECUTE(fetch_coor) LOOP

fet_active := 'EXECUTE DIRECT ON (' || coor_name.node_name || ') ''SELECT pg_stat_get_tuples_changed(oid) from pg_class where relname = ''''|| table_name ||'''';''';

FOR row_data IN EXECUTE(fet_active) LOOP

changedTuples = changedTuples + row_data.pg_stat_get_tuples_changed;

END LOOP;

END LOOP;

EXECUTE 'select pg_stat_get_live_tuples(oid) from pg_class c where c.oid = '''|| table_name ||'''::REGCLASS;' into relTuples;

EXECUTE 'show autovacuum_analyze_threshold;' into sys_anl_threshold;

EXECUTE 'show autovacuum_analyze_scale_factor;' into sys_anl_scale_factor;

EXECUTE 'select (select option_value from pg_options_to_table(c.reloptions) where option_name = ''autovacuum_analyze_threshold'') as value

from pg_class c where c.oid = '''|| table_name ||'''::REGCLASS;' into rel_anl_threshold;

EXECUTE 'select (select option_value from pg_options_to_table(c.reloptions) where option_name = ''autovacuum_analyze_scale_factor'') as value

from pg_class c where c.oid = '''|| table_name ||'''::REGCLASS;' into rel_anl_scale_factor;

--dbms_output.put_line('relTuples='||relTuples||'; sys_anl_threshold='||sys_anl_threshold||'; sys_anl_scale_factor='||sys_anl_scale_factor||'; rel_anl_threshold='||rel_anl_threshold||'; rel_anl_scale_factor='||rel_anl_scale_factor||';');

if rel_anl_threshold IS NOT NULL then

anl_threshold = rel_anl_threshold;

else

anl_threshold = sys_anl_threshold;

end if;

if rel_anl_scale_factor IS NOT NULL then

anl_scale_factor = rel_anl_scale_factor;

else

anl_scale_factor = sys_anl_scale_factor;

end if;

if changedTuples > anl_threshold + anl_scale_factor * relTuples then

need_analyze := true;

end if;

return need_analyze;

END; $$

LANGUAGE 'plpgsql';6.2 Determine whether the table needs analyze( Parallel Edition , For versions that support parallel execution frameworks )

-- the function for get all pg_stat_activity information in all CN of current cluster.

--SELECT sum(a) FROM pg_catalog.pgxc_parallel_query('cn', 'SELECT 1::int FROM pg_class LIMIT 10') AS (a int); Using concurrent execution framework

CREATE OR REPLACE FUNCTION pg_catalog.pgxc_stat_table_need_analyze(in table_name text)

RETURNS BOOl

AS $$

DECLARE

relTuples int4;

changedTuples int4:= 0;

rel_anl_threshold int4;

rel_anl_scale_factor float4;

sys_anl_threshold int4;

sys_anl_scale_factor float4;

anl_threshold int4;

anl_scale_factor float4;

need_analyze bool := false;

BEGIN

--Get all the node names

EXECUTE 'SELECT sum(a) FROM pg_catalog.pgxc_parallel_query(''cn'', ''SELECT pg_stat_get_tuples_changed(oid)::int4 from pg_class where relname = ''''|| table_name ||'''';'') AS (a int4);' into changedTuples;

EXECUTE 'select pg_stat_get_live_tuples(oid) from pg_class c where c.oid = '''|| table_name ||'''::REGCLASS;' into relTuples;

EXECUTE 'show autovacuum_analyze_threshold;' into sys_anl_threshold;

EXECUTE 'show autovacuum_analyze_scale_factor;' into sys_anl_scale_factor;

EXECUTE 'select (select option_value from pg_options_to_table(c.reloptions) where option_name = ''autovacuum_analyze_threshold'') as value

from pg_class c where c.oid = '''|| table_name ||'''::REGCLASS;' into rel_anl_threshold;

EXECUTE 'select (select option_value from pg_options_to_table(c.reloptions) where option_name = ''autovacuum_analyze_scale_factor'') as value

from pg_class c where c.oid = '''|| table_name ||'''::REGCLASS;' into rel_anl_scale_factor;

dbms_output.put_line('relTuples='||relTuples||'; sys_anl_threshold='||sys_anl_threshold||'; sys_anl_scale_factor='||sys_anl_scale_factor||'; rel_anl_threshold='||rel_anl_threshold||'; rel_anl_scale_factor='||rel_anl_scale_factor||';');

if rel_anl_threshold IS NOT NULL then

anl_threshold = rel_anl_threshold;

else

anl_threshold = sys_anl_threshold;

end if;

if rel_anl_scale_factor IS NOT NULL then

anl_scale_factor = rel_anl_scale_factor;

else

anl_scale_factor = sys_anl_scale_factor;

end if;

if changedTuples > anl_threshold + anl_scale_factor * relTuples then

need_analyze := true;

end if;

return need_analyze;

END; $$

LANGUAGE 'plpgsql';6.3 Determine whether the table needs analyze( Custom threshold )

-- the function for get all pg_stat_activity information in all CN of current cluster.

CREATE OR REPLACE FUNCTION pg_catalog.pgxc_stat_table_need_analyze(in table_name text, int anl_threshold, float anl_scale_factor)

RETURNS BOOl

AS $$

DECLARE

relTuples int4;

changedTuples int4:= 0;

need_analyze bool := false;

BEGIN

--Get all the node names

EXECUTE 'SELECT sum(a) FROM pg_catalog.pgxc_parallel_query(''cn'', ''SELECT pg_stat_get_tuples_changed(oid)::int4 from pg_class where relname = ''''|| table_name ||'''';'') AS (a int4);' into changedTuples;

EXECUTE 'select pg_stat_get_live_tuples(oid) from pg_class c where c.oid = '''|| table_name ||'''::REGCLASS;' into relTuples;

if changedTuples > anl_threshold + anl_scale_factor * relTuples then

need_analyze := true;

end if;

return need_analyze;

END; $$

LANGUAGE 'plpgsql';through “ Optimizer triggered real-time analyze” and “ backstage autovacuum Triggered polling analyze”,GaussDB(DWS) It has been possible to make users no longer care about whether the table needs analyze. It is recommended to try in the latest version .

Click to follow , The first time to learn about Huawei's new cloud technology ~

边栏推荐

- Analysis of abnormal frequency of minor GC in container environment

- Overflow: the combination of auto and Felx

- Readis configuration and optimization of NoSQL (final chapter)

- 离线、开源版的 Notion—— 笔记软件Anytype 综合评测

- Two methods of MD5 encryption

- Can you really use MySQL explain?

- How to implicitly pass values when transferring forms

- 一文掌握数仓中auto analyze的使用

- 动态规划股票问题对比

- NFT liquidity market security issues occur frequently - Analysis of the black incident of NFT trading platform quixotic

猜你喜欢

防火墙基础透明模式部署和双机热备

With an annual income of more than 8 million, he has five full-time jobs. He still has time to play games

离线、开源版的 Notion—— 笔记软件Anytype 综合评测

Blood spitting finishing nanny level series tutorial - play Fiddler bag grabbing tutorial (2) - first meet fiddler, let you have a rational understanding

【云原生】服务网格是什么“格”?

第十八届IET交直流輸電國際會議(ACDC2022)於線上成功舉辦

Zhijieyun - meta universe comprehensive solution service provider

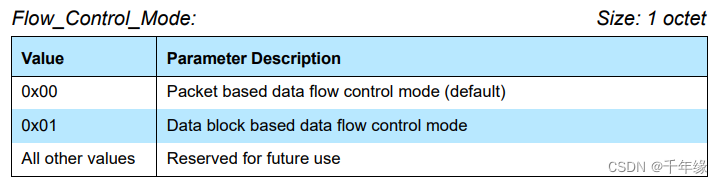

ble HCI 流控机制

矿产行业商业供应链协同系统解决方案:构建数智化供应链平台,保障矿产资源安全供应

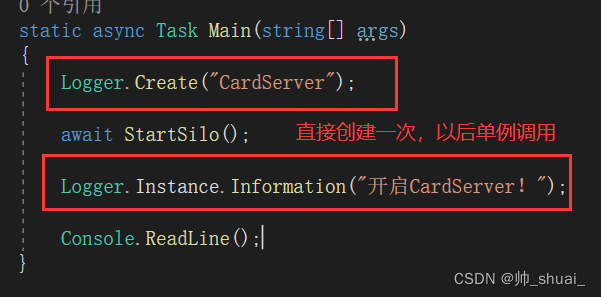

C# 服务器日志模块

随机推荐

2022年国内云管平台厂商哪家好?为什么?

利用win10计划任务程序定时自动运行jar包

ble HCI 流控机制

Leetcode list summary

Why do you say that the maximum single table of MySQL database is 20million? Based on what?

Offline and open source version of notation -- comprehensive evaluation of note taking software anytype

Yanwen logistics plans to be listed on Shenzhen Stock Exchange: it is mainly engaged in international express business, and its gross profit margin is far lower than the industry level

Smart Logistics Park supply chain management system solution: digital intelligent supply chain enables a new supply chain model for the logistics transportation industry

Is it safe for Great Wall Securities to open an account? How to open a securities account

周大福践行「百周年承诺」,真诚服务推动绿色环保

Spark 中的 Rebalance 操作以及与Repartition操作的区别

Linear time sequencing

What grade does Anxin securities belong to? Is it safe to open an account

上网成瘾改变大脑结构:语言功能受影响,让人话都说不利索

[Acwing] 58周赛 4490. 染色

电子宠物小狗-内部结构是什么?

PingCode 性能测试之负载测试实践

[acwing] 58 weeks 4489 Longest subsequence

Is it safe for Bank of China Securities to open an account online?

Zhijieyun - meta universe comprehensive solution service provider