
Q-learning notes

2022-06-30 12:35:00 【Show brother invincible】

Emmm, I've been forced to learn reinforcement learning.

The idea of reinforcement learning is actually easy to understand: by constantly interacting with the environment, the agent corrects its behavior and learns which action it should take next in each state, so as to maximize its return.

I strongly recommend this Zhihu blogger's column:

https://www.zhihu.com/column/c_1215667894253830144

It really explained things to me in plain language. When I searched elsewhere, I only found formulas and theory and was completely confused...... (Once you understand the process, the formulas turn out to be not so hard to understand.)

First, let's look at the algorithm flow of Q-Learning, and then I'll explain it step by step. Here is the flowchart from Mofan Python:
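The flowchart image did not survive in this copy. Assuming it is the standard tabular Q-learning pseudocode (Initialize Q(s, a); for each episode, initialize s; for each step, choose a from s with an ε-greedy policy derived from Q, take a, observe r and s', update Q(s, a), set s ← s'; until s is terminal), the update inside the loop is the following, where α is the learning rate and γ the discount factor:

```latex
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]
```

The bracketed term is the TD error: the gap between the observed reward plus the discounted best next-state value and the current estimate Q(s, a).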

The first thing to say is that you need a basic Q-table; otherwise you have nothing to go on. How else could the agent guide you on what to do in the next state s'? This step corresponds to the first line of the flowchart, Initialize.
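A minimal sketch of this step in Python, assuming a small discrete problem whose states and actions are numbered from 0 (the sizes 6 and 2, and the helper name `init_q_table`, are hypothetical illustration choices). The table is a plain list of rows so the example needs no third-party library; Q-learning allows arbitrary initial values, so both zeros and small random numbers are shown.

```python
import random


def init_q_table(n_states, n_actions, random_init=False, seed=None):
    """Build a Q-table as a list of rows: q[s][a] is the value of action a in state s.

    Zeros are a common starting point; small random values are an alternative.
    """
    rng = random.Random(seed)
    if random_init:
        return [[rng.uniform(0.0, 0.01) for _ in range(n_actions)] for _ in range(n_states)]
    # Build each row separately so rows are independent lists (no shared aliasing).
    return [[0.0] * n_actions for _ in range(n_states)]


q = init_q_table(n_states=6, n_actions=2)
print(len(q), "states x", len(q[0]), "actions")
```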

Next comes the episode. From what I looked up, an episode is a set of steps: every step from the beginning of the game to the end of the game. s is the initial state of the game.
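To make "episode" concrete, here is a sketch with a hypothetical toy environment: a corridor of six cells, starting at cell 0, with the goal at cell 5 (`step` and `play_episode` are illustrative names, not from any library). Actions are random here, because the point is only the episode structure: start from the initial state s and loop over steps until the game ends.

```python
import random

N_STATES = 6        # hypothetical corridor: states 0..5, start at 0, terminal goal at 5
ACTIONS = (0, 1)    # 0 = move left, 1 = move right


def step(s, a):
    """Hypothetical toy environment: return (next_state, reward, done)."""
    s_next = min(s + 1, N_STATES - 1) if a == 1 else max(s - 1, 0)
    done = s_next == N_STATES - 1
    return s_next, (1.0 if done else 0.0), done


def play_episode(rng):
    """One episode = every step from the initial state s until the game ends."""
    s, done, steps = 0, False, []
    while not done:
        a = rng.choice(ACTIONS)          # random actions, only to show the episode structure
        s_next, r, done = step(s, a)
        steps.append((s, a, r, s_next))  # record one (s, a, r, s') transition
        s = s_next
    return steps


episode = play_episode(random.Random(0))
print(f"{len(episode)} steps, last transition: {episode[-1]}")
```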

Next, let's talk about off-policy versus on-policy.

For the definitions of the two, I referred to this article:

The difference between off-policy and on-policy is whether the policy used to generate data is the same as the policy used when updating the Q-table to maximize return. Take Q-Learning as an example: when you actually play the game, you naturally choose the action with the largest value of the trained Q(s, a). This is called the target policy.

Target policy: the policy the agent is trying to learn.

But as mentioned, the initial Q-table is given at random and needs many rounds of training before it converges, so when taking actions we need to try all the possible actions in each state. The policy used for this is called the behavior policy.

Behavior policy: the policy the agent uses to interact with the environment, that is, the policy that generates the behavior.

When the two policies are the same, the algorithm is on-policy; when they differ, it is off-policy.

Now consider training: the agent uses an ε-greedy policy. With probability 1 - ε it chooses the action with the largest value in the current Q-table, and with probability ε it chooses a random action instead. The subsequent states and actions then differ from run to run, which makes it possible to explore different actions.
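The two policies can be sketched in a few lines of Python. The function names and Q-values below are hypothetical: `greedy_action` plays the role of the target policy, and `epsilon_greedy_action` the behavior policy used during training.

```python
import random


def greedy_action(q_row):
    """Target policy: the action with the largest Q(s, a) (ties go to the lowest index)."""
    return max(range(len(q_row)), key=lambda a: q_row[a])


def epsilon_greedy_action(q_row, epsilon, rng):
    """Behavior policy: with probability epsilon pick a uniformly random action
    (which may happen to be the greedy one), otherwise exploit the greedy action."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))
    return greedy_action(q_row)


rng = random.Random(42)
q_row = [0.1, 0.5, 0.2]   # hypothetical Q-values of one state with three actions
picks = [epsilon_greedy_action(q_row, epsilon=0.1, rng=rng) for _ in range(1000)]
print("greedy:", greedy_action(q_row))
print("share of greedy picks under epsilon-greedy:", picks.count(1) / len(picks))
```

With ε = 0.1 and three actions, the greedy action is expected about 1 - ε + ε/3 ≈ 93% of the time, since the random branch can also land on it.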

With continued play, the Q-table gradually converges. When it is time to actually play, the agent follows the target policy based on the Q-table (always taking the highest-valued action) to obtain a greater return.

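Therefore, Q-Learning is an off-policy algorithm: the policy that generates the data during training (ε-greedy) differs from the policy being learned (greedy). The Q-Learning update bootstraps from max_a' Q(s', a'), the greedy choice, regardless of which action the behavior policy actually takes in s'.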
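Putting the pieces together, here is a minimal tabular Q-learning sketch on a hypothetical corridor (six cells, start at 0, reward 1 only for reaching the goal at 5). The environment, the helper names and the hyperparameters (α = 0.1, γ = 0.9, ε = 0.1, 200 episodes) are illustrative assumptions. Training acts with the ε-greedy behavior policy, while the update bootstraps from the max over the next state's actions, i.e. the greedy target policy; that mismatch is what makes it off-policy.

```python
import random

N_STATES = 6        # hypothetical corridor: states 0..5, start at 0, terminal goal at 5
N_ACTIONS = 2       # 0 = move left, 1 = move right


def step(s, a):
    """Hypothetical toy environment: return (next_state, reward, done)."""
    s_next = min(s + 1, N_STATES - 1) if a == 1 else max(s - 1, 0)
    done = s_next == N_STATES - 1
    return s_next, (1.0 if done else 0.0), done


def greedy(q_row):
    """Target policy: argmax_a Q(s, a), ties going to the lowest action index."""
    return max(range(len(q_row)), key=lambda a: q_row[a])


def epsilon_greedy(q_row, epsilon, rng):
    """Behavior policy: random action with probability epsilon, otherwise a greedy
    action; ties are broken at random so an all-zero table still explores."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))
    best = max(q_row)
    return rng.choice([a for a, v in enumerate(q_row) if v == best])


def q_learning(episodes=200, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * N_ACTIONS for _ in range(N_STATES)]   # Initialize Q(s, a)
    for _ in range(episodes):
        s, done = 0, False                              # Initialize s
        while not done:
            a = epsilon_greedy(q[s], epsilon, rng)      # act with the behavior policy
            s_next, r, done = step(s, a)
            # Off-policy target: bootstrap from max_a' Q(s', a') (the greedy target policy),
            # whatever action the behavior policy picks next; a terminal state has no future value.
            target = r if done else r + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
    return q


q = q_learning()
# When actually playing, follow the greedy target policy (terminal state 5 excluded)
print([greedy(row) for row in q[:N_STATES - 1]])  # [1, 1, 1, 1, 1]
```

Ties are broken at random in the behavior policy so that an all-zero table still wanders toward the goal; if ties always went to action 0 (left), the early episodes would take a very long time to reach it.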

边栏推荐

- Layout of pyqt5 interface and loading of resource files

- Clipboardjs - development learning summary 1

- 【一天学awk】运算符

- [cf] 803 div2 B. Rising Sand

- 【一天学awk】数组的使用

- MySQL判断执行条件为NULL时,返回0,出错问题解决 Incorrect parameter count in the call to native function ‘ISNULL‘,

- iServer发布ES服务查询设置最大返回数量

- grep匹配查找

- Getting started with the go language is simple: go handles XML files

- How to use the plug-in mechanism to gracefully encapsulate your request hook

猜你喜欢

![[cloud native | kubernetes] in depth understanding of deployment (VIII)](/img/88/4eddb8e6535a12541867b027b109a1.png)

[cloud native | kubernetes] in depth understanding of deployment (VIII)

Building a database model using power designer tools

Pinda general permission system (day 7~day 8)

Redis - problèmes de cache

NoSQL - redis configuration and optimization

Browser plays RTSP video based on nodejs

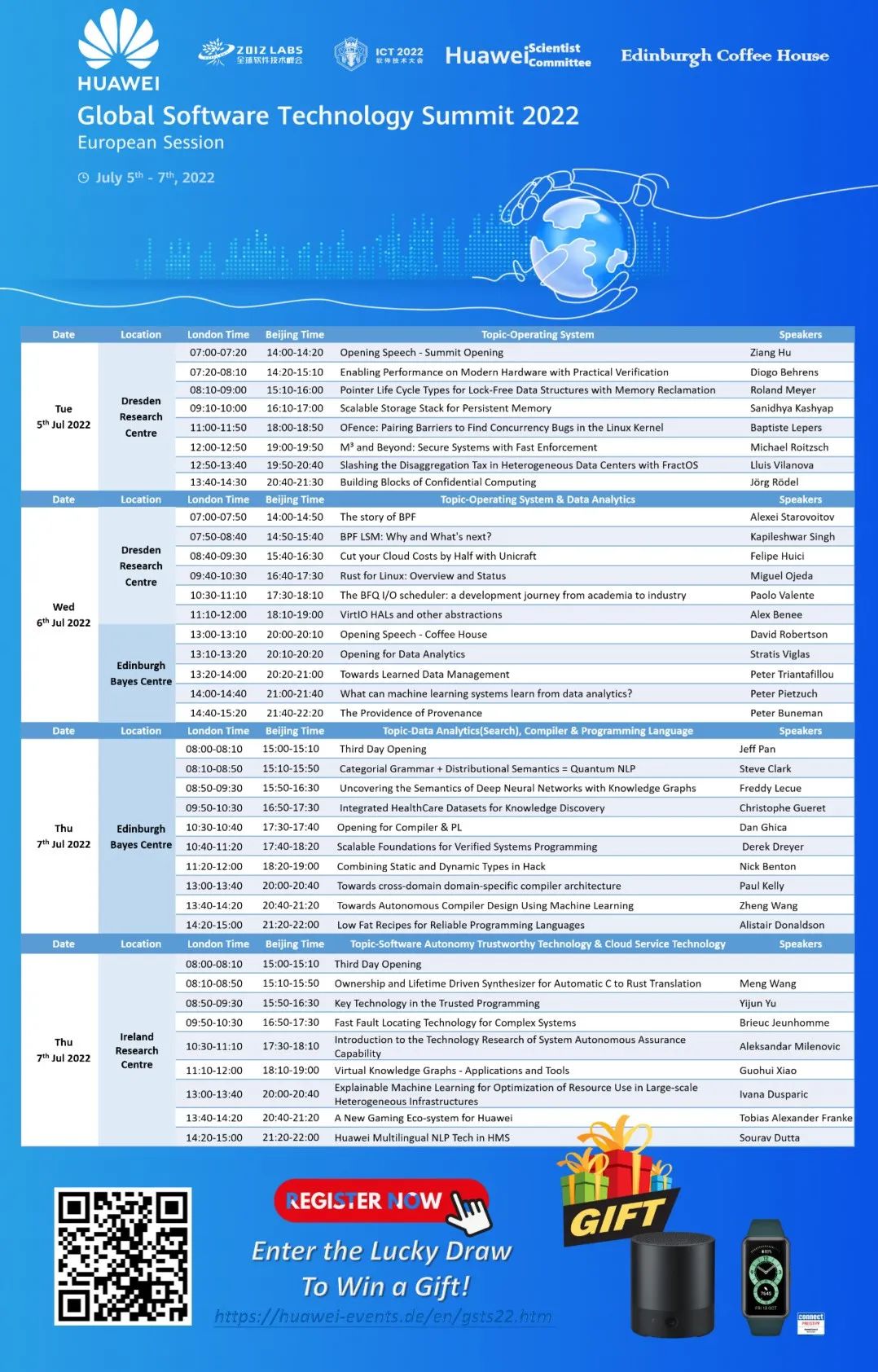

Conference Preview - Huawei 2012 lab global software technology summit - European session

Talk about how to do hardware compatibility testing and quickly migrate to openeuler?

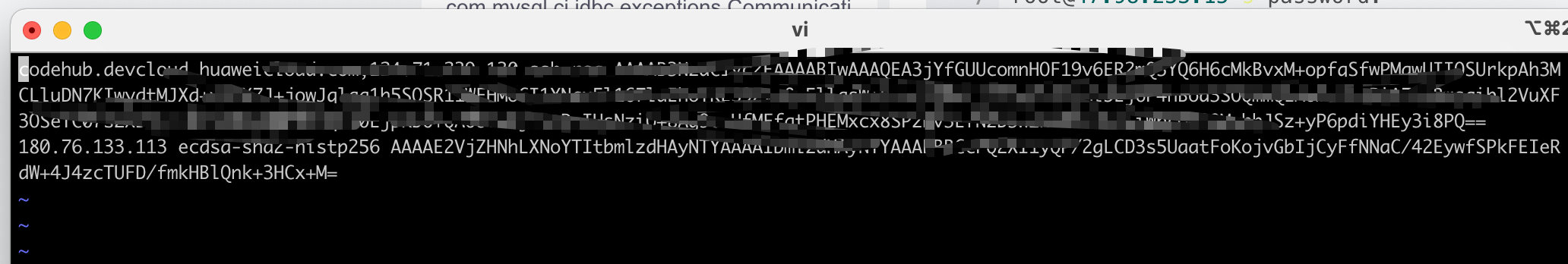

Solve the problem that the server cannot be connected via SSH during reinstallation

Redis configuration files and new data types

随机推荐

Construction de la plate - forme universelle haisi 3559: obtenir le codage après modification du cadre de données

品达通用权限系统(Day 7~Day 8)

NoSQL - redis configuration and optimization

[cf] 803 div2 A. XOR Mixup

The website with id 0 that was requested wasn‘t found. Verify the website and try again

Basic interview questions for Software Test Engineers (required for fresh students and test dishes) the most basic interview questions

Hisilicon 3559 sample parsing: Venc

Introduction to sub source code updating: mid May: uniques NFT module and nomination pool

200. number of islands

"Xiaodeng" user personal data management in operation and maintenance

Pharmacy management system

Commands for redis basic operations

海思3559万能平台搭建:YUV格式简介

A high precision positioning approach for category support components with multiscale difference reading notes

Iserver publishing es service query setting maximum return quantity

[cf] 803 div2 B. Rising Sand

Videos are stored in a folder every 100 frames, and pictures are transferred to videos after processing

JMeter性能测试工作中遇到的问题及剖析,你遇到了几个?

List集合

695. maximum island area