[Out-of-Distribution Detection] Learning Confidence for Out-of-Distribution Detection in Neural Networks (arXiv '18)

2022-06-22 06:55:00 [chad_lee]

This paper is somewhat like the "learning loss" paper: it has a whiff of "an end-to-end DL system solves everything". The idea is that judging whether a sample is OOD requires a confidence score, so the neural network itself is trained to output a confidence value for the current sample.

Although the paper was not published at a conference, it is highly cited.

Motivation

The author motivates the design with an analogy. Suppose students take an exam in which they answer a series of questions to earn points. They may ask for hints, but each hint costs a small penalty. A student should therefore answer the questions they are confident about on their own and ask for hints only on the ones they are unsure of.

At the end of the exam, counting how many hints a student used on each question gives an estimate of their confidence in it. Applying the same strategy to a neural network lets it learn to estimate its own confidence.

Model architecture

Add a "confidence estimation branch" to any ordinary classification model. It sits after the penultimate layer, in parallel with the softmax classification branch, and both branches receive the same input.

$$p, c = f(x, \Theta), \qquad p_i, c \in [0, 1], \qquad \sum_{i=1}^{M} p_i = 1$$

Here $p$ is the vector of class probabilities over $M$ classes, obtained through a softmax, and $c$ is the scalar confidence score, obtained through a sigmoid.
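A minimal sketch of the two-branch head in plain Python, assuming the penultimate-layer features `h` are already computed and that each branch is a single linear layer (the function and weight names below are hypothetical, not from the paper). It only illustrates that both heads read the same features: a softmax over $M$ logits gives $p$, and a sigmoid over one scalar gives $c$.

```python
import math

def softmax(logits):
    # Subtract the max logit for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def two_branch_head(h, W_cls, b_cls, w_conf, b_conf):
    """Both branches read the same penultimate-layer features h.

    W_cls: M rows of weights (one per class), b_cls: M biases -> p via softmax.
    w_conf, b_conf: a single linear unit -> scalar c via sigmoid.
    """
    logits = [sum(w * x for w, x in zip(row, h)) + b for row, b in zip(W_cls, b_cls)]
    p = softmax(logits)  # p_i in [0, 1], sum_i p_i = 1
    c = sigmoid(sum(w * x for w, x in zip(w_conf, h)) + b_conf)  # c in (0, 1)
    return p, c

# Toy usage with made-up weights (3 features, M = 3 classes).
h = [0.5, -1.0, 2.0]
W_cls = [[1.0, 0.0, 0.5], [0.0, 1.0, -0.5], [0.2, 0.2, 0.2]]
b_cls = [0.0, 0.0, 0.0]
p, c = two_branch_head(h, W_cls, b_cls, w_conf=[0.3, -0.2, 0.1], b_conf=0.0)
print(p, c)
```

In a real model both heads would be layers of the same network and trained jointly; the split here is only to make the shared input explicit.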

To give the model "hints", the original softmax prediction is interpolated toward the one-hot ground-truth label $y$, and the amount of interpolation is set by the network's confidence:

$$p_i' = c \cdot p_i + (1 - c)\, y_i$$
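The hint interpolation can be sketched in a few lines of plain Python, assuming `y` is a one-hot list; the helper name `interpolate_with_hint` is hypothetical. It shows the two extremes: $c = 1$ leaves the prediction untouched and $c = 0$ replaces it with the label.

```python
def interpolate_with_hint(p, y, c):
    """p'_i = c * p_i + (1 - c) * y_i, with y a one-hot label list."""
    return [c * p_i + (1.0 - c) * y_i for p_i, y_i in zip(p, y)]

p = [0.2, 0.7, 0.1]  # softmax output
y = [1, 0, 0]        # one-hot ground truth
print(interpolate_with_hint(p, y, 1.0))  # c = 1: no hint, p' equals p
print(interpolate_with_hint(p, y, 0.0))  # c = 0: full hint, p' equals y
print(interpolate_with_hint(p, y, 0.5))  # in between: halfway toward the label
```

Because $p'$ is a convex combination of two probability distributions, it still sums to 1.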

This process is illustrated in the right panel of the figure above. During training, the adjusted probabilities are used to compute the loss exactly as in an ordinary classification task:

$$\mathcal{L}_t = -\sum_{i=1}^{M} \log\left(p_i'\right) y_i$$

However, the model could then always choose $c = 0$ to minimize the loss: with $c = 0$, $p' = y$ and $\mathcal{L}_t = 0$. To prevent this, a second loss term rewards large $c$ (the more confident the model, the better):

$$\mathcal{L}_c = -\log(c)$$

The final loss combines the two terms, with a weight $\lambda$ to balance them:

$$\mathcal{L} = \mathcal{L}_t + \lambda \mathcal{L}_c$$
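Putting the pieces together, a single-sample sketch of $\mathcal{L} = \mathcal{L}_t + \lambda \mathcal{L}_c$ in plain Python might look like the following. The function name `confidence_loss`, the `eps` guard against $\log 0$, and the toy numbers are assumptions for illustration; a real implementation would average over a batch inside a deep-learning framework.

```python
import math

def confidence_loss(p, y, c, lam, eps=1e-12):
    """Single-sample loss L = L_t + lam * L_c; y is a one-hot label list."""
    # Hint interpolation: p'_i = c * p_i + (1 - c) * y_i
    p_adj = [c * p_i + (1.0 - c) * y_i for p_i, y_i in zip(p, y)]
    # Task loss on the adjusted probabilities: L_t = -sum_i log(p'_i) * y_i
    l_t = -sum(math.log(max(q, eps)) * y_i for q, y_i in zip(p_adj, y))
    # Confidence loss: L_c = -log(c); eps guards against log(0).
    l_c = -math.log(max(c, eps))
    return l_t + lam * l_c, l_t, l_c

p, y = [0.2, 0.7, 0.1], [1, 0, 0]  # the classifier favours the wrong class
for c in (0.01, 0.5, 0.99):
    total, l_t, l_c = confidence_loss(p, y, c, lam=0.1)
    print(f"c={c}: L_t={l_t:.3f}  L_c={l_c:.3f}  L={total:.3f}")
```

Setting `lam=0` and comparing small and large `c` shows the degenerate solution described above: asking for more hints always lowers $\mathcal{L}_t$, which is exactly what $\mathcal{L}_c$ is there to counter.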

Training minimizes this loss, which improves the classifier, and the learned confidence $c$ can then be used to decide whether an input is an OOD sample.

Three details

(The idea is nice, but it does not work well out of the box.)

- The author found that the model tends to output the same $c$ for every input. A hyperparameter $\beta$ is introduced: when $\mathcal{L}_c > \beta$, $\lambda$ is increased; when $\mathcal{L}_c < \beta$, $\lambda$ is decreased.

- The author found that the model gets "lazy" on difficult samples. (My understanding: on hard samples the model first lowers $c$ and relies on the hints to reduce the loss, instead of optimizing the first term $\mathcal{L}_t$ to learn the decision boundary.) So within every batch, half of the samples use the original loss function and the other half use the new one.

- Retaining misclassified examples. I did not understand the author's description of this one.
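The first two details can be sketched as follows in plain Python. This is only a reading of the description above: the multiplicative step `factor=1.01` is a hypothetical choice, the names `update_lambda` and `half_batch_loss` are made up, and treating "the original loss" as plain cross-entropy (with no $\mathcal{L}_c$ term) for the un-hinted half is an assumption.

```python
import math
import random

def update_lambda(lam, conf_loss, beta, factor=1.01):
    """Raise lam when L_c > beta, lower it when L_c < beta.

    `factor` is a hypothetical step size; the post does not specify one.
    """
    if conf_loss > beta:
        return lam * factor
    if conf_loss < beta:
        return lam / factor
    return lam

def half_batch_loss(probs, labels, confs, lam, rng, eps=1e-12):
    """Mean loss over a batch in which a random half of the samples gets hints.

    Hinted samples: L_t on p' = c * p + (1 - c) * y, plus lam * L_c.
    Other samples: plain cross-entropy on p (assumed reading of "the original loss").
    Also returns the mean L_c over hinted samples, for update_lambda.
    """
    n = len(probs)
    hinted = set(rng.sample(range(n), n // 2))
    total, conf_sum = 0.0, 0.0
    for i, (p, y, c) in enumerate(zip(probs, labels, confs)):
        if i in hinted:
            p_used = [c * p_k + (1.0 - c) * y_k for p_k, y_k in zip(p, y)]
            l_c = -math.log(max(c, eps))
        else:
            p_used, l_c = p, 0.0
        l_t = -sum(math.log(max(q, eps)) * y_k for q, y_k in zip(p_used, y))
        total += l_t + lam * l_c
        conf_sum += l_c
    return total / n, conf_sum / max(len(hinted), 1)

# One toy training step: compute the loss, then adapt lam against beta.
rng = random.Random(0)
probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.3, 0.3, 0.4], [0.6, 0.3, 0.1]]
labels = [[1, 0, 0], [0, 1, 0], [1, 0, 0], [0, 0, 1]]
confs = [0.9, 0.8, 0.2, 0.5]
lam, beta = 0.1, 0.3
loss, mean_l_c = half_batch_loss(probs, labels, confs, lam, rng)
lam = update_lambda(lam, mean_l_c, beta)
print(loss, mean_l_c, lam)
```

Raising $\lambda$ puts more weight on $\mathcal{L}_c$, which pushes $c$ up and brings $\mathcal{L}_c$ back toward $\beta$; lowering it does the opposite.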

OOD detection

Once the model is trained, it can be used for OOD detection as follows:

$$g(x; \delta) = \begin{cases} 1 & \text{if } c \le \delta \\ 0 & \text{if } c > \delta \end{cases}$$

Here $x$ is the input and $\delta$ is a threshold: $g = 1$ means $x$ is flagged as OOD.
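The decision rule is a single comparison. A minimal sketch with made-up confidence values; how to choose $\delta$ is left open here (tuning it on held-out data is one common option, and an assumption rather than something stated above).

```python
def is_ood(c, delta):
    """g(x; delta) = 1 (flag x as OOD) if c <= delta, else 0 (treat as in-distribution)."""
    return 1 if c <= delta else 0

# Confidences c from the trained confidence branch for four inputs (made-up values).
confidences = [0.95, 0.12, 0.6, 0.3]
flags = [is_ood(c, delta=0.5) for c in confidences]
print(flags)  # [0, 1, 0, 1]
```

Note that a confidence exactly equal to $\delta$ counts as OOD, matching the $c \le \delta$ case.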

Input preprocessing

(An experimental trick)

This is inspired by FGSM. FGSM adds a small perturbation to a sample to lower the softmax score of its correct class, pushing the sample away from that class. Here the direction is reversed: a small perturbation is added to make the sample more "confident" ($c \to 1$). To compute the perturbation, simply backpropagate the gradient of the confidence loss with respect to the input:

$$\bar{x} = x - \epsilon\, \operatorname{sign}\left(\nabla_x \mathcal{L}_c\right)$$
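A minimal sketch of this preprocessing step in plain Python, assuming a toy confidence branch that is a single linear unit on the raw input, $c = \sigma(w \cdot x + b)$, so the input gradient can be written by hand; in a real network the gradient would come from backpropagation. The names and numbers are hypothetical. Since $\partial \mathcal{L}_c / \partial z = -(1 - c)$, stepping against the sign of the gradient raises $c$.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    # Returns 1, 0 or -1 (bools subtract as ints).
    return (v > 0) - (v < 0)

def confidence(x, w, b):
    # Toy confidence branch acting directly on the input: c = sigmoid(w . x + b).
    return sigmoid(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)

def preprocess(x, w, b, epsilon):
    """x_bar = x - epsilon * sign(grad_x L_c), where L_c = -log(c)."""
    c = confidence(x, w, b)
    # Chain rule for c = sigmoid(z), z = w . x + b: dL_c/dz = -(1 - c), dz/dx_i = w_i.
    grad = [-(1.0 - c) * w_i for w_i in w]
    return [x_i - epsilon * sign(g) for x_i, g in zip(x, grad)]

x, w, b = [0.2, -0.4, 1.0], [0.5, -1.0, 0.3], 0.0
x_bar = preprocess(x, w, b, epsilon=0.01)
print(confidence(x, w, b), confidence(x_bar, w, b))  # confidence goes up
```

As in FGSM, only the sign of the gradient is used, so every input coordinate moves by exactly $\epsilon$.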

I think this trick is somewhat unrealistic. It works for in-distribution (ID) samples like those seen in training, but once the model is deployed it must handle new data. Won't new ID data also be "far away" from the training ID data?

边栏推荐

- SQL injection vulnerability (x) secondary injection

- 仙人掌之歌——上线运营(5)

- -Bash: telnet: command not found solution

- leetcode:面试题 08.12. 八皇后【dfs + backtrack】

- What exactly is the open source office of a large factory like?

- [5g NR] NAS connection management - cm status

- College entrance examination is a post station on the journey of life

- MySQL ifnull processing n/a

- Qt development simple Bluetooth debugging assistant (low power Bluetooth)

- Iframe framework, native JS routing

猜你喜欢

golang调用sdl2,播放pcm音频,报错signal arrived during external code execution。

Single cell literature learning (Part3) -- dstg: deconvoluting spatial transcription data through graph based AI

C skill tree evaluation - customer first, making excellent products

vue连接mysql数据库失败

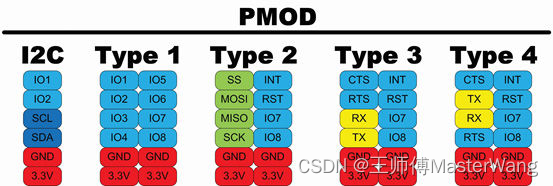

KV260的PMOD接口介绍

OpenGL - Textures

SQL injection vulnerability (x) secondary injection

Introduction to 51 Single Chip Microcomputer -- minimum system of single chip microcomputer

MySQL ifnull processing n/a

Chrome install driver

随机推荐

QT connect to Alibaba cloud using mqtt protocol

迪进面向ConnectCore系统模块推出Digi ConnectCore语音控制软件

Five common SQL interview questions

6. install the SSH connection tool (used to connect the server of our lab)

Armadillo installation

Introduction to 51 Single Chip Microcomputer -- minimum system of single chip microcomputer

自定义实现JS中的bind方法

成功解决raise KeyError(f“None of [{key}] are in the [{axis_name}]“)KeyError: “None of [Index([‘age.in.y

Py之scorecardpy:scorecardpy的简介、安装、使用方法之详细攻略

Dongjiao home development technical service

Implement a timer: timer

Great progress in code

Languo technology helps the ecological prosperity of openharmony

Network layer: IP protocol

流程引擎解决复杂的业务问题

Record of problems caused by WPS document directory update

生成字符串方式

leetcode:面试题 08.12. 八皇后【dfs + backtrack】

Golang appelle sdl2, lit l'audio PCM et signale une erreur lors de l'exécution externe du Code.

仙人掌之歌——进军To C直播(3)