
torchvision.models._utils.IntermediateLayerGetter tutorial

2022-06-27 09:51:00 【jjw_zyfx】

Without further ado, here is the example:

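import torch
import torchvision

# Load resnet18 with ImageNet weights.
# (On torchvision >= 0.13, pretrained=True is deprecated in favour of
#  weights=torchvision.models.ResNet18_Weights.DEFAULT.)
m = torchvision.models.resnet18(pretrained=True)
print(m)

# Wrap the model so that the outputs of layer1 and layer3 are returned
# under the keys 'feat1' and 'feat2'.
new_m = torchvision.models._utils.IntermediateLayerGetter(m, {'layer1': 'feat1', 'layer3': 'feat2'})
out = new_m(torch.rand(1, 3, 224, 224))
print([(k, v.shape) for k, v in out.items()])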

# Output:
# [('feat1', torch.Size([1, 64, 56, 56])), ('feat2', torch.Size([1, 256, 14, 14]))]

# The full printout of the model is long, so it is shown at the end of this post. In short:
# The first argument of IntermediateLayerGetter is the model, here resnet18.
# The second argument (return_layers) is a dict. In 'layer1': 'feat1', 'layer1' is the name of a
# direct child module of resnet18 (its layer1), and 'feat1' is the key under which that layer's
# output appears in the returned dict. Likewise, 'layer3': 'feat2' returns the output of layer3 as 'feat2'.
# The new module keeps resnet18's children from the beginning up to and including layer3 (the last
# requested layer); layer4, avgpool and fc are dropped, so the forward pass stops after layer3.
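To make the truncation and naming rules above concrete, here is a minimal sketch of the same idea. It is a simplified re-creation written for illustration, not torchvision's actual source; the class name `SimpleLayerGetter` and the toy model's child names (`stem`, `block1`, `block2`, `head`) are hypothetical. It assumes, as the real class documents, that the model's direct children run one after another in the order they were registered, and that only direct children (e.g. `layer3`, not `layer3.1`) can be requested.

```python
from collections import OrderedDict
from typing import Dict

import torch
from torch import nn


class SimpleLayerGetter(nn.ModuleDict):
    """Hypothetical, simplified re-creation of the idea behind
    torchvision.models._utils.IntermediateLayerGetter (not its real source)."""

    def __init__(self, model: nn.Module, return_layers: Dict[str, str]) -> None:
        child_names = [name for name, _ in model.named_children()]
        # Only direct children of the model can be requested.
        if not set(return_layers).issubset(child_names):
            raise ValueError("return_layers are not present in model")
        remaining = set(return_layers)
        layers = OrderedDict()
        for name, module in model.named_children():
            layers[name] = module  # same module object: weights are shared, not copied
            remaining.discard(name)
            if not remaining:  # stop after the last requested child; later ones are dropped
                break
        super().__init__(layers)
        self.return_layers = dict(return_layers)

    def forward(self, x: torch.Tensor) -> Dict[str, torch.Tensor]:
        out = OrderedDict()
        for name, module in self.items():
            x = module(x)  # run the kept children in registration order
            if name in self.return_layers:
                out[self.return_layers[name]] = x  # store under the new key
        return out


# Hypothetical toy model with four named children.
toy = nn.Sequential(OrderedDict([
    ("stem", nn.Conv2d(3, 8, kernel_size=3, stride=2, padding=1)),
    ("block1", nn.Conv2d(8, 16, kernel_size=3, stride=2, padding=1)),
    ("block2", nn.Conv2d(16, 32, kernel_size=3, stride=2, padding=1)),
    ("head", nn.Flatten()),
]))

getter = SimpleLayerGetter(toy, {"stem": "feat1", "block1": "feat2"})
print(list(getter.keys()))  # ['stem', 'block1']  (block2 and head are dropped)

out = getter(torch.rand(1, 3, 32, 32))
print([(k, tuple(v.shape)) for k, v in out.items()])
# [('feat1', (1, 8, 16, 16)), ('feat2', (1, 16, 8, 8))]

try:
    SimpleLayerGetter(toy, {"stem.weight": "w"})  # not a direct child module
except ValueError as err:
    print("rejected:", err)
```

Because the kept children are the very same module objects as in the original model (`getter["stem"] is toy.stem`), training the wrapper also changes the original model's weights. Applied to resnet18 with `{'layer1': 'feat1', 'layer3': 'feat2'}`, this sketch would keep `conv1`, `bn1`, `relu`, `maxpool`, `layer1`, `layer2` and `layer3`.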

Complete output:

ResNet(
  (conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)
  (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (relu): ReLU(inplace=True)
  (maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
  (layer1): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (1): BasicBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer2): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer3): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer4): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (avgpool): AdaptiveAvgPool2d(output_size=(1, 1))
  (fc): Linear(in_features=512, out_features=1000, bias=True)
)
[('feat1', torch.Size([1, 64, 56, 56])), ('feat2', torch.Size([1, 256, 14, 14]))]
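The spatial sizes in the last line (56 for layer1, 14 for layer3) follow from the usual output-size formula for convolution and pooling with dilation 1 and `ceil_mode=False`: out = floor((in + 2 * padding - kernel) / stride) + 1. The sketch below only does this arithmetic, without calling PyTorch; the `stages` table is a summary of the printout written for this example, not a torchvision structure.

```python
def conv_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output length along one spatial axis for Conv2d/MaxPool2d (dilation=1, ceil_mode=False)."""
    return (size + 2 * padding - kernel) // stride + 1


# (name, kernel_size, stride, padding, out_channels), read off the printout above.
# For layer1..layer3 only the first 3x3 conv of block (0) matters: the other 3x3 convs
# use stride 1, padding 1 (size unchanged), and the 1x1 stride-2 downsample gives the same size.
stages = [
    ("conv1", 7, 2, 3, 64),
    ("maxpool", 3, 2, 1, 64),   # pooling keeps the 64 channels
    ("layer1", 3, 1, 1, 64),
    ("layer2", 3, 2, 1, 128),
    ("layer3", 3, 2, 1, 256),
]

size = 224  # input is 1 x 3 x 224 x 224
shapes = {}
for name, k, s, p, c in stages:
    size = conv_out(size, k, s, p)
    shapes[name] = (1, c, size, size)

print(shapes["layer1"], shapes["layer3"])  # (1, 64, 56, 56) (1, 256, 14, 14)
```

The chain is 224 → 112 (conv1) → 56 (maxpool) → 56 (layer1) → 28 (layer2) → 14 (layer3), which matches the `feat1` and `feat2` shapes printed by the example.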

边栏推荐

- Hitek power supply maintenance X-ray machine high voltage generator maintenance xr150-603-02

- vector::data() 指针使用细节

- js 所有的网络请求方式

- Scientists develop two new methods to provide stronger security protection for intelligent devices

- MYSQL精通-01 增删改

- 邮件系统(基于SMTP协议和POP3协议-C语言实现)

- Arduino PROGMEM静态存储区的使用介绍

- Semi supervised learning—— Π- Introduction to model, temporary assembling and mean teacher

- 如何获取GC(垃圾回收器)的STW(暂停)时间?

- 我大抵是卷上瘾了,横竖睡不着!竟让一个Bug,搞我两次!

猜你喜欢

隐私计算FATE-离线预测

Hitek power supply maintenance X-ray machine high voltage generator maintenance xr150-603-02

On anchors in object detection

![[vivid understanding] the meanings of various evaluation indicators commonly used in deep learning TP, FP, TN, FN, IOU and accuracy](/img/d6/119f32f73d25ddd97801f536d68752.png)

[vivid understanding] the meanings of various evaluation indicators commonly used in deep learning TP, FP, TN, FN, IOU and accuracy

Quick start CherryPy (1)

![[system design] proximity service](/img/02/57f9ded0435a73f86dce6eb8c16382.png)

[system design] proximity service

详解各种光学仪器成像原理

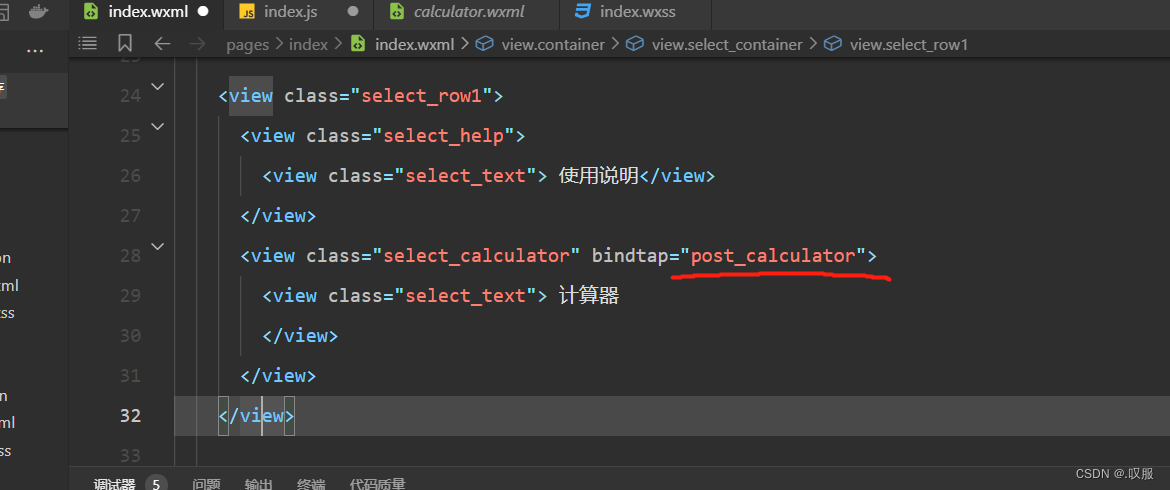

Five page Jump methods for wechat applet learning

基于STM32设计的蓝牙健康管理设备

有关二叉树的一些练习题

随机推荐

巴基斯坦安全部队开展反恐行动 打死7名恐怖分子

How do I get the STW (pause) time of a GC (garbage collector)?

谷歌浏览器 chropath插件

感应电机直接转矩控制系统的设计与仿真(运动控制matlab/simulink)

ucore lab5

SVN版本控制器的安装及使用方法

1098 insertion or heap sort (PAT class a)

别再用 System.currentTimeMillis() 统计耗时了,太 Low,StopWatch 好用到爆!

分布式文件存储系统的优点和缺点

细说物体检测中的Anchors

Understand neural network structure and optimization methods

[200 opencv routines] 211 Draw vertical rectangle

视频文件太大?使用FFmpeg来无损压缩它

如何获取GC(垃圾回收器)的STW(暂停)时间?

TDengine 邀请函:做用技术改变世界的超级英雄,成为 TD Hero

Prometheus alarm process and related time parameter description

通俗易懂理解樸素貝葉斯分類的拉普拉斯平滑

有关二叉树的一些练习题

Freemarker

Quartz (timer)