
Positive and Negative Sample Division and Architecture Understanding in Image Classification and Object Detection

2022-07-03 10:01:00 【Star soul is not a dream】

The key to understanding supervised deep learning is to separate the inference phase from the training phase. You can understand a model by understanding two things: how each deep neural network architecture performs inference, and what operations it performs during training.

In the inference phase, the model is treated as a black-box nonlinear function. For example, various convolution modules are combined into a backbone that outputs a tensor of the desired shape. That tensor is then post-processed.

In the training phase, you first divide the samples into positives and negatives. You then design a loss function suited to the task. An optimization algorithm such as SGD iteratively updates the weights and biases of the neurons, with the goal of minimizing the loss function, so that the trained model fits the training set.
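The training loop can be made concrete with a minimal sketch in plain Python; no deep-learning framework is assumed. In it:

- a one-dimensional linear model stands in for the network;
- the squared error stands in for the task-specific loss;
- SGD updates the weight and bias one sample at a time.

The toy data set, learning rate, and epoch count are hypothetical choices for illustration only.

```python
import random


def sgd_fit(data, lr=0.05, epochs=200, seed=0):
    """Fit y = w * x + b with plain SGD on the loss 0.5 * (pred - y) ** 2."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0                 # weight and bias start at zero
    samples = list(data)
    for _ in range(epochs):
        rng.shuffle(samples)        # visit samples in a random order each epoch
        for x, y in samples:
            pred = w * x + b        # forward pass
            err = pred - y          # dLoss/dpred
            w -= lr * err * x       # dLoss/dw = err * x
            b -= lr * err           # dLoss/db = err
    return w, b


# Toy training set drawn exactly from y = 2x + 1 (no noise).
data = [(k / 10, 2.0 * (k / 10) + 1.0) for k in range(-10, 11)]
w, b = sgd_fit(data)
print(f"w={w:.3f}, b={b:.3f}")
```

Because the toy data is noise-free, the loss can be driven to zero. The learned parameters therefore approach the generating values w = 2 and b = 1.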

We can usually understand any neural network in terms of an encoder-decoder architecture.

Image classification:

- Inference phase: the input is an image, which an encoder (such as a CNN) encodes into a tensor. Usually the width and height shrink x times and the number of channels C grows y times, so the input (W, H, C) becomes a new tensor (W/x, H/x, yC). Then comes the decoder, which adds layers such as FC and softmax. You can also regard everything before softmax as the encoder and softmax alone as the decoder.

- Training phase: the forward pass is the same as in the inference phase. The difference is that the vector output by softmax is compared with the annotated label to compute a loss (commonly cross-entropy). This loss is back-propagated to update all weights and biases before softmax.
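Both points above can be shown in a minimal sketch that uses plain Python lists in place of tensors. It covers:

- the encoder's shape bookkeeping, (W, H, C) to (W/x, H/x, yC);
- a softmax "decoder";
- the cross-entropy loss against the labeled class index.

The sizes and logits are made-up values.

```python
import math


def encoder_output_shape(w, h, c, x, y):
    # (W, H, C) -> (W/x, H/x, yC): spatial size shrinks x times, channels grow y times
    return (w // x, h // x, c * y)


def softmax(logits):
    m = max(logits)                          # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, label):
    # With a one-hot target, cross-entropy reduces to -log(p[label])
    return -math.log(probs[label])


print(encoder_output_shape(224, 224, 64, 32, 32))   # hypothetical sizes
p = softmax([2.0, 1.0, 0.1])                        # decoder output for a 3-class problem
print([round(v, 3) for v in p], round(cross_entropy(p, 0), 3))
```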

Object detection:

- Inference phase: object detection is more complex. Generally speaking, an object detection architecture consists of Backbone + Neck + Detection head. The names are vivid: first the trunk, then the neck, and finally the head that makes the decision.

  The backbone is often a model pre-trained on a large image classification dataset, that is, the encoder of an image classifier. This works because classification annotations are cheaper, and the features a network extracts for the two tasks are largely transferable. It is therefore an application of transfer learning.

  The neck performs feature fusion on some of the backbone's output tensors. This produces better combined features that suit the detection of targets of different sizes.

  The detection head operates on the fused tensors from the neck and outputs a tensor of the desired shape.

  Finally comes post-processing. Vectors whose confidence falls below a threshold are discarded, and NMS (non-maximum suppression) then removes redundant boxes.
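The post-processing step can be sketched as follows. This is a simple single-class, greedy NMS written for illustration. It assumes boxes in (x1, y1, x2, y2) format, and the two thresholds are hypothetical defaults. Real detectors usually run this per class and on tensors.

```python
def iou(a, b):
    # Intersection over union of two (x1, y1, x2, y2) boxes
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def postprocess(boxes, scores, score_thresh=0.5, iou_thresh=0.5):
    """Return indices of the boxes kept after thresholding and greedy NMS."""
    # 1) Drop predictions whose confidence is below the threshold.
    cand = [i for i, s in enumerate(scores) if s >= score_thresh]
    # 2) Repeatedly keep the highest-scoring box and suppress boxes that overlap it.
    cand.sort(key=lambda i: scores[i], reverse=True)
    keep = []
    while cand:
        best = cand.pop(0)
        keep.append(best)
        cand = [i for i in cand if iou(boxes[best], boxes[i]) < iou_thresh]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30), (0, 0, 5, 5)]
scores = [0.9, 0.8, 0.7, 0.3]
print(postprocess(boxes, scores))
```

In this example the last box is removed by the score threshold. The second box overlaps the first with IoU of about 0.68, so NMS suppresses it as redundant.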

We can also regard Backbone + Neck as the encoder and the detection head as the decoder. Note that some architectures, such as YOLOv1, have no neck, which costs them some performance.

The Backbone + Neck + Detection head architecture lets us design each module separately. We can then build different object detection models by swapping modules.
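The swappable design can be illustrated with a toy sketch in which each module is just a Python callable that maps feature-map shapes to new shapes. The strides, channel counts, and head output layout are assumptions loosely modeled on YOLO-style heads, not any specific model's code. The assumed head layout is, per location, anchors × (4 box offsets + 1 objectness + class scores).

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Shape = Tuple[int, int, int]  # (W, H, C) of one feature map


@dataclass
class Detector:
    backbone: Callable[[Shape], List[Shape]]
    neck: Callable[[List[Shape]], List[Shape]]
    head: Callable[[List[Shape]], List[Shape]]

    def __call__(self, image: Shape) -> List[Shape]:
        feats = self.backbone(image)   # multi-scale feature maps
        fused = self.neck(feats)       # feature fusion (identity when there is no neck)
        return self.head(fused)        # raw prediction tensors, before post-processing


def toy_backbone(image: Shape) -> List[Shape]:
    w, h, _ = image
    return [(w // s, h // s, c) for s, c in ((8, 256), (16, 512), (32, 1024))]


def no_neck(feats: List[Shape]) -> List[Shape]:
    return feats


def toy_fpn_neck(feats: List[Shape]) -> List[Shape]:
    # Stand-in for fusion: every scale ends up with the same channel count
    return [(w, h, 256) for w, h, _ in feats]


def make_head(num_anchors: int, num_classes: int) -> Callable[[List[Shape]], List[Shape]]:
    def head(feats: List[Shape]) -> List[Shape]:
        # Per location: num_anchors * (4 box offsets + 1 objectness + num_classes)
        return [(w, h, num_anchors * (5 + num_classes)) for w, h, _ in feats]
    return head


head = make_head(num_anchors=3, num_classes=80)
plain = Detector(toy_backbone, no_neck, head)
with_fpn = Detector(toy_backbone, toy_fpn_neck, head)   # only the neck is swapped
print(plain((416, 416, 3)))
print(with_fpn((416, 416, 3)))
```

Each module depends only on the shared interface, a list of feature maps. Replacing `no_neck` with `toy_fpn_neck` therefore requires no change to the backbone or the head.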

- Training phase:

The core of the training phase is the design of the loss function. The loss is computed between the detection head's output tensor and the label annotations, and it is used to update the network. The post-processing described above is therefore not involved here. The key question is how positive and negative samples are chosen for computing the loss.

In an image classification task, the positive samples of a class are all images labeled with that class, and the negative samples are all images of the other classes.

When the network receives a positive sample image, the loss is computed at the position where the label vector is 1. Training pushes the predicted value at that position up to reduce the loss. Because of the softmax constraint, the other values of the prediction vector become smaller.

When the network receives an image that is a negative sample for the current class, the same thing happens from the other side. The predicted value for the class the image actually belongs to grows, and all other values, including the current class, shrink.

So for image classification we do not need to divide positive and negative samples explicitly: the one-hot labels distinguish them naturally.
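The claim that the one-hot label alone pushes the labeled class up and every other class down can be checked directly. The gradient of softmax cross-entropy with respect to the logits is `softmax(z) - one_hot(label)`. Here is a minimal sketch with made-up logits:

```python
import math


def softmax(z):
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def ce_grad_logits(z, label):
    # Gradient of -log(softmax(z)[label]) w.r.t. z is softmax(z) - one_hot(label)
    p = softmax(z)
    return [pi - (1.0 if i == label else 0.0) for i, pi in enumerate(p)]


z = [0.5, 1.5, -0.2]                                # made-up logits; labeled class is index 0
g = ce_grad_logits(z, label=0)
z_new = [zi - 1.0 * gi for zi, gi in zip(z, g)]     # one gradient-descent step, lr = 1.0
print([round(v, 3) for v in g])
print(round(softmax(z)[0], 3), "->", round(softmax(z_new)[0], 3))
```

The labeled entry of the gradient equals p[label] - 1, which is always negative, so descent raises that logit. Every other entry equals its probability, which is always positive, so descent lowers those logits.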

Object detection differs from image classification here. The unit of a positive or negative sample is no longer a whole image but a region within an image, so one image yields many positive and negative samples. Each region is smaller than a classification image, but there are so many of them that training a detector is much slower than training a classifier.

This raises two important questions:

1. How do we obtain these regions (samples)? A common practice is the anchor-based approach. Prior boxes (anchors) are generated on every small cell of each image, and each anchor is one sample.
2. How do we divide so many regions into positive and negative samples? The usual approach uses each anchor's IoU with the ground-truth boxes, and different algorithms apply different strategies.

If an anchor is assigned as a positive sample, regressing from it gives a prediction box. The prediction box then takes part in the localization term of the loss function, which measures the distance between the prediction box and the ground-truth box.
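Both questions above can be sketched in plain Python:

- Anchors are placed at the center of each grid cell, with a few sizes and aspect ratios.
- Each anchor is then labeled by its best IoU with the ground-truth boxes.

The 0.7/0.3 thresholds and the rule that each ground truth's best anchor is always positive follow the style of the Faster R-CNN RPN. Treat them as one example strategy among many. The function names are hypothetical.

```python
import math


def generate_anchors(img_w, img_h, stride, sizes, ratios):
    """Place len(sizes) * len(ratios) anchors at the center of every stride x stride cell.

    Boxes are (x1, y1, x2, y2); ratio means h / w, and each anchor keeps area size ** 2.
    """
    anchors = []
    for cy in range(stride // 2, img_h, stride):
        for cx in range(stride // 2, img_w, stride):
            for s in sizes:
                for r in ratios:
                    w, h = s / math.sqrt(r), s * math.sqrt(r)
                    anchors.append((cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2))
    return anchors


def iou(a, b):
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def assign_labels(anchors, gts, pos_thresh=0.7, neg_thresh=0.3):
    """Return (labels, matched_gt): 1 = positive, 0 = negative, -1 = ignored."""
    if not gts or not anchors:
        return [0] * len(anchors), [-1] * len(anchors)
    labels, matched = [], []
    for a in anchors:
        ious = [iou(a, g) for g in gts]
        best = max(range(len(gts)), key=lambda j: ious[j])
        matched.append(best)
        if ious[best] >= pos_thresh:
            labels.append(1)
        elif ious[best] < neg_thresh:
            labels.append(0)
        else:
            labels.append(-1)            # in-between anchors are excluded from the loss
    # Guarantee each ground-truth box at least one positive: its best-overlapping anchor.
    for j, g in enumerate(gts):
        i = max(range(len(anchors)), key=lambda k: iou(anchors[k], g))
        labels[i], matched[i] = 1, j
    return labels, matched


anchors = generate_anchors(64, 64, stride=32, sizes=[32], ratios=[1.0])
labels, matched = assign_labels(anchors, gts=[(2, 2, 30, 30)])
print(anchors)
print(labels, matched)
```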

Note that there are three kinds of boxes:

- Ground-truth box

- Prior box (anchor)

- Prediction box

To sum up, the positive samples in object detection are not the ground-truth boxes. The three boxes map onto image classification as follows:

- The ground-truth boxes are the optimization target, just like the one-hot encoded vectors in image classification.
- The positive samples are the selected subset of prior boxes (anchors), just like the images of a class in image classification.
- Passing an anchor through the model yields a prediction box, just like the prediction vector in image classification.

The loss is therefore computed between the prediction box and the ground-truth box. YOLOv1 has no anchors, so it differs somewhat.
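The relation between the three boxes can be sketched with one common parameterization. The sketch assumes the center/log-size offsets used in Faster R-CNN; this is not the only choice. It works in three steps:

1. The ground-truth box is encoded relative to its positive anchor as the regression target.
2. The network's predicted offsets are decoded back into a prediction box.
3. A Smooth L1 loss measures the localization error.

The helper names and the predicted offsets are hypothetical.

```python
import math


def to_cxcywh(box):
    x1, y1, x2, y2 = box
    return (x1 + x2) / 2, (y1 + y2) / 2, x2 - x1, y2 - y1


def encode(gt, anchor):
    # Regression target: the ground-truth box expressed relative to its anchor
    gx, gy, gw, gh = to_cxcywh(gt)
    ax, ay, aw, ah = to_cxcywh(anchor)
    return (gx - ax) / aw, (gy - ay) / ah, math.log(gw / aw), math.log(gh / ah)


def decode(deltas, anchor):
    # Prediction box: the anchor shifted and rescaled by predicted offsets
    tx, ty, tw, th = deltas
    ax, ay, aw, ah = to_cxcywh(anchor)
    cx, cy = ax + tx * aw, ay + ty * ah
    w, h = aw * math.exp(tw), ah * math.exp(th)
    return cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def smooth_l1(pred, target, beta=1.0):
    # Localization loss between predicted offsets and target offsets
    total = 0.0
    for p, t in zip(pred, target):
        d = abs(p - t)
        total += 0.5 * d * d / beta if d < beta else d - 0.5 * beta
    return total


anchor = (0, 0, 32, 32)               # a positive anchor
gt = (2, 2, 30, 30)                   # its matched ground-truth box
target = encode(gt, anchor)
pred = (0.05, -0.02, -0.10, -0.15)    # hypothetical network output for this anchor
print(decode(pred, anchor), smooth_l1(pred, target))
```

Decoding the target offsets recovers the ground-truth box exactly, up to floating-point error. This is why regressing offsets from an anchor is equivalent to regressing the box itself.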

Common Backbone + Neck + Detection head modules:

- Input: Image, Patches, Image Pyramid

- Backbones: VGG16, ResNet-50, SpineNet, EfficientNet-B0/B7, CSPResNeXt50, CSPDarknet53, Swin Transformer

- Neck:
  - Additional blocks: SPP, ASPP, RFB, SAM
  - Path-aggregation blocks: FPN, PAN, NAS-FPN, Fully-connected FPN, BiFPN, ASFF, SFAM

- Heads:
  - Dense Prediction (one-stage):
    - RPN, SSD, YOLO (v2-v5), RetinaNet (anchor based)
    - YOLOv1, CornerNet, CenterNet, MatrixNet, FCOS (anchor free)
  - Sparse Prediction (two-stage):
    - Faster R-CNN, R-FCN, Mask R-CNN (anchor based)
    - RepPoints (anchor free)

Note: this list is based on the YOLOv4 paper.

For some positive and negative sample division strategies, please refer to:

Sorting out anchor and loss computation in object detection | Scavenging records

For how anchors are generated, please refer to:

Understanding and code implementation of anchor boxes - Zhihu

Reference resources :

边栏推荐

- Fundamentals of Electronic Technology (III)__ Chapter 1 resistance of parallel circuit

- (2) New methods in the interface

- Oracle database SQL statement execution plan, statement tracking and optimization instance

- Project cost management__ Cost management technology__ Article 8 performance review

- It is difficult to quantify the extent to which a single-chip computer can find a job

- Stm32-hal library learning, using cubemx to generate program framework

- Interruption system of 51 single chip microcomputer

- 4G module initialization of charge point design

- STM32 general timer output PWM control steering gear

- Qt QComboBox QSS样式设置

猜你喜欢

Seven sorting of ten thousand words by hand (code + dynamic diagram demonstration)

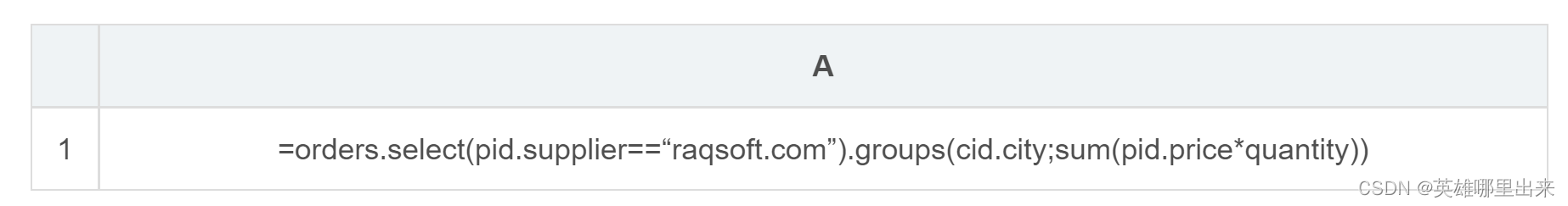

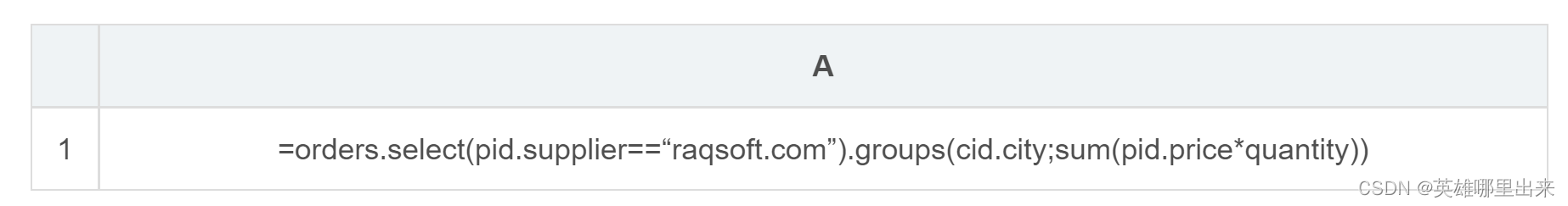

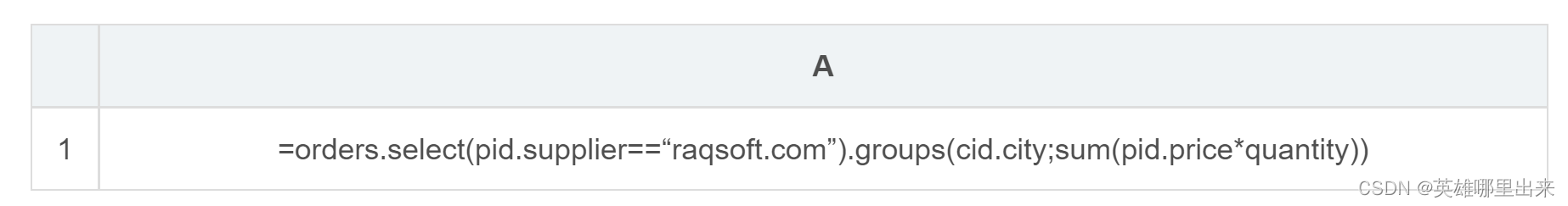

Comment la base de données mémoire joue - t - elle l'avantage de la mémoire?

内存数据库究竟是如何发挥内存优势的?

当你需要使用STM32某些功能,而51实现不了时, 那32自然不需要学

应用最广泛的8位单片机当然也是初学者们最容易上手学习的单片机

嵌入式系统没有特别明确的定义

Pymssql controls SQL for Chinese queries

Fundamentals of Electronic Technology (III)__ Fundamentals of circuit analysis__ Basic amplifier operating principle

內存數據庫究竟是如何發揮內存優勢的?

嵌入式本来就很坑,相对于互联网来说那个坑多得简直是难走

随机推荐

【力扣刷题笔记(二)】特别技巧,模块突破,45道经典题目分类总结,在不断巩固中精进

4G module IMEI of charging pile design

Project cost management__ Cost management technology__ Article 6 prediction

[Li Kou brush question notes (II)] special skills, module breakthroughs, classification and summary of 45 classic questions, and refinement in continuous consolidation

2020-08-23

It is difficult to quantify the extent to which a single-chip computer can find a job

Liquid crystal display

STM32 general timer output PWM control steering gear

自動裝箱與拆箱了解嗎?原理是什麼?

干单片机这一行的时候根本没想过这么多,只想着先挣钱养活自己

Fundamentals of Electronic Technology (III)__ Chapter 1 resistance of parallel circuit

51 MCU tmod and timer configuration

Gpiof6, 7, 8 configuration

Vector processor 9_ Basic multilevel interconnection network

Basic knowledge of communication interface

开学实验里要用到mysql,忘记基本的select语句怎么玩啦?补救来啦~

Runtime. getRuntime(). GC () and runtime getRuntime(). The difference between runfinalization()

JMX、MBean、MXBean、MBeanServer 入门

Introduction to chromium embedded framework (CEF)

getopt_ Typical use of long function