ijkplayer Code Walkthrough: A Comprehensive Overview of ijkplayer's Overall Structure

2022-06-13 06:29:00 【That's right】

The read_thread() thread calls stream_component_open(ffp, st_index[AVMEDIA_TYPE_VIDEO]), and that function starts the video_thread thread through decoder_start(&is->viddec, video_thread, ffp, "ff_video_dec").

Starting from this code and tracing back to the Android application side, let's look at the basic outline of ijkPlayer.

Part 1: source of the video_thread thread

//> Source path: ijkmedia/ijkplayer/ff_ffplay.c
static int video_thread(void *arg)
{
    FFPlayer *ffp = (FFPlayer *)arg;
    int ret = 0;

    if (ffp->node_vdec) {
        ret = ffpipenode_run_sync(ffp->node_vdec);
    }
    return ret;
}

//> Source path: ijkmedia/ijkplayer/ff_ffpipenode.c
int ffpipenode_run_sync(IJKFF_Pipenode *node)
{
    return node->func_run_sync(node);
}
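Because func_run_sync is only a function pointer, video_thread() does not know which decoder it is actually driving. Below is a minimal, self-contained sketch of this dispatch pattern. Pipenode, pipenode_init() and the fake_* functions are hypothetical simplifications for illustration, not ijkplayer's real definitions.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, trimmed-down stand-in for IJKFF_Pipenode. */
typedef struct Pipenode Pipenode;
struct Pipenode {
    int (*func_run_sync)(Pipenode *node); /* filled in by a decoder-specific init */
    int frames_decoded;                   /* illustrative state only */
};

/* Mirrors ffpipenode_run_sync(): the caller never sees which decoder runs. */
static int pipenode_run_sync(Pipenode *node)
{
    assert(node && node->func_run_sync);
    return node->func_run_sync(node);
}

/* Two hypothetical run loops an init function could choose between. */
static int fake_func_run_sync(Pipenode *node)      { node->frames_decoded += 1; return 0; }
static int fake_func_run_sync_loop(Pipenode *node) { node->frames_decoded += 2; return 0; }

/* Mirrors the if/else on ffp->mediacodec_sync in item 5 below. */
static void pipenode_init(Pipenode *node, int use_sync_loop)
{
    node->frames_decoded = 0;
    node->func_run_sync = use_sync_loop ? fake_func_run_sync_loop : fake_func_run_sync;
}
```

The design choice is the usual C substitute for a virtual method: swapping the decoder means changing one assignment in the init function, while video_thread() stays untouched.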

Part 2: func_run_sync() is a function pointer, so what does it actually point to?

To answer that, we need to trace where node->func_run_sync gets assigned. The snippets below are numbered from 7 down to 1; reading them in order from 1 to 7 (bottom to top) follows the call chain from the JNI entry point down to the MediaCodec wrapper and gives a much clearer picture of ijkPlayer.

//> 7. SDL_AMediaCodecJava_init(): initializes the MediaCodec wrapper
static SDL_AMediaCodec* SDL_AMediaCodecJava_init(JNIEnv *env, jobject android_media_codec)
{
    SDLTRACE("%s", __func__);

    jobject global_android_media_codec = (*env)->NewGlobalRef(env, android_media_codec);
    if (J4A_ExceptionCheck__catchAll(env) || !global_android_media_codec) {
        return NULL;
    }

    SDL_AMediaCodec *acodec = SDL_AMediaCodec_CreateInternal(sizeof(SDL_AMediaCodec_Opaque));
    if (!acodec) {
        SDL_JNI_DeleteGlobalRefP(env, &global_android_media_codec);
        return NULL;
    }

    SDL_AMediaCodec_Opaque *opaque = acodec->opaque;
    opaque->android_media_codec = global_android_media_codec;

    acodec->opaque_class = &g_amediacodec_class;
    acodec->func_delete = SDL_AMediaCodecJava_delete;
    acodec->func_configure = NULL;
    acodec->func_configure_surface = SDL_AMediaCodecJava_configure_surface;
    acodec->func_start = SDL_AMediaCodecJava_start; //> Its body is shown below
    acodec->func_stop = SDL_AMediaCodecJava_stop;
    acodec->func_flush = SDL_AMediaCodecJava_flush;
    acodec->func_writeInputData = SDL_AMediaCodecJava_writeInputData;
    acodec->func_dequeueInputBuffer = SDL_AMediaCodecJava_dequeueInputBuffer;
    acodec->func_queueInputBuffer = SDL_AMediaCodecJava_queueInputBuffer;
    acodec->func_dequeueOutputBuffer = SDL_AMediaCodecJava_dequeueOutputBuffer;
    acodec->func_getOutputFormat = SDL_AMediaCodecJava_getOutputFormat;
    acodec->func_releaseOutputBuffer = SDL_AMediaCodecJava_releaseOutputBuffer;
    acodec->func_isInputBuffersValid = SDL_AMediaCodecJava_isInputBuffersValid;

    SDL_AMediaCodec_increaseReference(acodec);
    return acodec;
}

//> Body of SDL_AMediaCodecJava_start()
static sdl_amedia_status_t SDL_AMediaCodecJava_start(SDL_AMediaCodec* acodec)
{
    SDLTRACE("%s", __func__);

    JNIEnv *env = NULL;
    if (JNI_OK != SDL_JNI_SetupThreadEnv(&env)) {
        ALOGE("%s: SetupThreadEnv failed", __func__);
        return SDL_AMEDIA_ERROR_UNKNOWN;
    }

    SDL_AMediaCodec_Opaque *opaque = (SDL_AMediaCodec_Opaque *)acodec->opaque;
    jobject android_media_codec = opaque->android_media_codec;

    J4AC_MediaCodec__start(env, android_media_codec); //> Calls back into the Java MediaCodec.start() through JNI
    if (J4A_ExceptionCheck__catchAll(env)) {
        ALOGE("%s: start failed", __func__);
        return SDL_AMEDIA_ERROR_UNKNOWN;
    }

    return SDL_AMEDIA_OK;
}
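SDL_AMediaCodecJava_init() fills in an ops table and then takes a reference with SDL_AMediaCodec_increaseReference(). The sketch below shows that "ops table plus reference count" pattern in plain C11. Codec, CodecOps and the codec_* helpers are hypothetical; the real SDL_AMediaCodec reference-counting internals are not shown in this article.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdlib.h>

/* Hypothetical codec object loosely modeled on SDL_AMediaCodec. */
typedef struct Codec Codec;
typedef struct CodecOps {
    int (*start)(Codec *c);
    int (*stop)(Codec *c);
} CodecOps;

struct Codec {
    const CodecOps *ops;  /* like the func_* pointers set in SDL_AMediaCodecJava_init() */
    atomic_int ref_count;
    int running;
};

static int fake_start(Codec *c) { c->running = 1; return 0; }
static int fake_stop(Codec *c)  { c->running = 0; return 0; }
static const CodecOps g_fake_ops = { fake_start, fake_stop };

/* Allocate, install the ops, then take the first reference. */
static Codec *codec_create(void)
{
    Codec *c = calloc(1, sizeof(*c));
    if (!c)
        return NULL;
    c->ops = &g_fake_ops;
    atomic_init(&c->ref_count, 0);
    atomic_fetch_add(&c->ref_count, 1);
    return c;
}

static void codec_inc_ref(Codec *c) { atomic_fetch_add(&c->ref_count, 1); }

/* Drops one reference, frees on the last one, returns the remaining count. */
static int codec_dec_ref(Codec *c)
{
    assert(c);
    int left = atomic_fetch_sub(&c->ref_count, 1) - 1;
    if (left == 0)
        free(c);
    return left;
}
```

Callers only ever go through c->ops, so a different backend (for example a native NDK codec instead of the Java one) could be plugged in by installing another ops table.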

//> SDL_AMediaCodec_create_object_serial(), used in item 6
static volatile int g_amediacodec_object_serial;

int SDL_AMediaCodec_create_object_serial()
{
    int object_serial = __sync_add_and_fetch(&g_amediacodec_object_serial, 1);
    if (object_serial == 0)
        object_serial = __sync_add_and_fetch(&g_amediacodec_object_serial, 1);
    return object_serial;
}

//> __sync_add_and_fetch() atomically increments the variable and returns the new value,
//> so concurrent callers each get a distinct serial without taking a lock.
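For comparison, here is the same serial generator written with C11 <stdatomic.h> instead of the GCC __sync builtin. This is a swapped-in technique, not ijkplayer's code. It uses an unsigned counter so that wraparound is well defined, and it keeps the original rule of skipping 0 (presumably reserved to mean "no serial").

```c
#include <assert.h>
#include <limits.h>     /* UINT_MAX: the counter wraps from UINT_MAX back to 0 */
#include <stdatomic.h>

static atomic_uint g_object_serial;

static unsigned create_object_serial(void)
{
    /* atomic_fetch_add() returns the OLD value, so add 1 to get the new one,
       matching __sync_add_and_fetch() semantics. */
    unsigned serial = atomic_fetch_add(&g_object_serial, 1u) + 1u;
    if (serial == 0u) /* wrapped around: skip the reserved value 0 */
        serial = atomic_fetch_add(&g_object_serial, 1u) + 1u;
    return serial;
}
```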

//> 6. Source file: ijksdl_codec_android_mediacodec_java.c
SDL_AMediaCodec* SDL_AMediaCodecJava_createByCodecName(JNIEnv *env, const char *codec_name)
{
    SDLTRACE("%s", __func__);

    jobject android_media_codec = J4AC_MediaCodec__createByCodecName__withCString__catchAll(env, codec_name);
    if (J4A_ExceptionCheck__catchAll(env) || !android_media_codec) {
        return NULL;
    }

    SDL_AMediaCodec* acodec = SDL_AMediaCodecJava_init(env, android_media_codec); //> Initialize the decoder control interface; see item 7
    acodec->object_serial = SDL_AMediaCodec_create_object_serial(); //> See the serial function under item 7
    SDL_JNI_DeleteLocalRefP(env, &android_media_codec);
    return acodec;
}

//> 5. Source file: pipeline/ffpipenode_android_mediacodec_vdec.c
IJKFF_Pipenode *ffpipenode_init_decoder_from_android_mediacodec(FFPlayer *ffp, IJKFF_Pipeline *pipeline, SDL_Vout *vout)
{
    if (SDL_Android_GetApiLevel() < IJK_API_16_JELLY_BEAN)
        return NULL;

    if (!ffp || !ffp->is)
        return NULL;

    IJKFF_Pipenode *node = ffpipenode_alloc(sizeof(IJKFF_Pipenode_Opaque));
    if (!node)
        return node;

    VideoState *is = ffp->is;
    IJKFF_Pipenode_Opaque *opaque = node->opaque;
    JNIEnv *env = NULL;

    node->func_destroy = func_destroy;
    if (ffp->mediacodec_sync) {
        //> Where this flag gets its initial value was not located in this walkthrough
        node->func_run_sync = func_run_sync_loop;
    } else {
        node->func_run_sync = func_run_sync; //> The function the video thread from Part 1 ends up running
    }
    node->func_flush = func_flush;

    opaque->pipeline = pipeline;
    opaque->ffp = ffp;
    opaque->decoder = &is->viddec; //> Points to the video decoder is->viddec
    opaque->weak_vout = vout;

    opaque->acodec_mutex = SDL_CreateMutex();
    opaque->acodec_cond = SDL_CreateCond();
    opaque->acodec_first_dequeue_output_mutex = SDL_CreateMutex();
    opaque->acodec_first_dequeue_output_cond = SDL_CreateCond();
    opaque->any_input_mutex = SDL_CreateMutex();
    opaque->any_input_cond = SDL_CreateCond();

    //> Check every mutex/cond created above
    if (!opaque->acodec_mutex || !opaque->acodec_cond ||
        !opaque->acodec_first_dequeue_output_mutex || !opaque->acodec_first_dequeue_output_cond ||
        !opaque->any_input_mutex || !opaque->any_input_cond) {
        ALOGE("%s:open_video_decoder: SDL_CreateCond() failed\n", __func__);
        goto fail;
    }

    opaque->codecpar = avcodec_parameters_alloc();
    if (!opaque->codecpar)
        goto fail;

    if (JNI_OK != SDL_JNI_SetupThreadEnv(&env)) {
        ALOGE("%s:create: SetupThreadEnv failed\n", __func__);
        goto fail;
    }

    ALOGI("%s:use default mediacodec name: %s\n", __func__, ffp->mediacodec_default_name);
    strcpy(opaque->mcc.codec_name, ffp->mediacodec_default_name);
    opaque->acodec = SDL_AMediaCodecJava_createByCodecName(env, ffp->mediacodec_default_name); //> Create the wrapper around the Java MediaCodec
    if (!opaque->acodec) {
        goto fail;
    }

    return node;
fail:
    ALOGW("%s: init fail\n", __func__);
    ffpipenode_free_p(&node);
    return NULL;
}
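In the ijkplayer source, the error check in this function tests acodec_cond twice and never tests acodec_mutex (nor the any_input pair), so a failed SDL_CreateMutex() can slip through. Below is a minimal sketch of the "allocate, check every handle once, goto fail and unwind" pattern. fake_create() and the g_fail_at counter are hypothetical stand-ins for SDL_CreateMutex()/SDL_CreateCond() that let a test force a particular allocation to fail.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical allocator: the g_fail_at-th call (0-based) returns NULL. */
static int g_fail_at = -1, g_alloc_calls = 0;

static void *fake_create(void)
{
    if (g_alloc_calls++ == g_fail_at)
        return NULL;
    return malloc(1);
}

typedef struct NodeOpaque {
    void *acodec_mutex, *acodec_cond;
    void *first_output_mutex, *first_output_cond;
} NodeOpaque;

static void node_free(NodeOpaque *o)
{
    if (!o)
        return;
    /* free(NULL) is a no-op, so partially initialized nodes are fine */
    free(o->acodec_mutex);
    free(o->acodec_cond);
    free(o->first_output_mutex);
    free(o->first_output_cond);
    free(o);
}

static NodeOpaque *node_init(void)
{
    NodeOpaque *o = calloc(1, sizeof(*o));
    if (!o)
        return NULL;
    o->acodec_mutex       = fake_create();
    o->acodec_cond        = fake_create();
    o->first_output_mutex = fake_create();
    o->first_output_cond  = fake_create();
    /* Test each handle exactly once. */
    if (!o->acodec_mutex || !o->acodec_cond ||
        !o->first_output_mutex || !o->first_output_cond)
        goto fail;
    return o;
fail:
    node_free(o);
    return NULL;
}
```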

//> 4. Caller of ffpipenode_init_decoder_from_android_mediacodec()
static IJKFF_Pipenode *func_init_video_decoder(IJKFF_Pipeline *pipeline, FFPlayer *ffp)
{
    IJKFF_Pipeline_Opaque *opaque = pipeline->opaque;
    IJKFF_Pipenode *node = NULL;

    if (ffp->mediacodec_all_videos || ffp->mediacodec_avc || ffp->mediacodec_hevc || ffp->mediacodec_mpeg2)
        node = ffpipenode_init_decoder_from_android_mediacodec(ffp, pipeline, opaque->weak_vout);

    return node;
}

//> 3. Caller of func_init_video_decoder()
IJKFF_Pipeline *ffpipeline_create_from_android(FFPlayer *ffp)
{
    ALOGD("ffpipeline_create_from_android()\n");
    IJKFF_Pipeline *pipeline = ffpipeline_alloc(&g_pipeline_class, sizeof(IJKFF_Pipeline_Opaque));
    if (!pipeline)
        return pipeline;

    IJKFF_Pipeline_Opaque *opaque = pipeline->opaque;
    opaque->ffp = ffp;
    opaque->surface_mutex = SDL_CreateMutex();
    opaque->left_volume = 1.0f; //> Player volume control
    opaque->right_volume = 1.0f;
    if (!opaque->surface_mutex) {
        ALOGE("ffpipeline-android:create SDL_CreateMutex failed\n");
        goto fail;
    }

    pipeline->func_destroy = func_destroy;
    pipeline->func_open_video_decoder = func_open_video_decoder; //> Open the video decoder
    pipeline->func_open_audio_output = func_open_audio_output; //> Open the audio output
    pipeline->func_init_video_decoder = func_init_video_decoder;
    pipeline->func_config_video_decoder = func_config_video_decoder;

    return pipeline;
fail:
    ffpipeline_free_p(&pipeline);
    return NULL;
}

//> 2. Caller of ffpipeline_create_from_android(); source file: ijkplayer_android.c
IjkMediaPlayer *ijkmp_android_create(int(*msg_loop)(void*))
{
    IjkMediaPlayer *mp = ijkmp_create(msg_loop); //> Create the FFPlayer and initialize ffp->msg_queue, used to exchange messages with the Android app
    if (!mp)
        goto fail;

    mp->ffplayer->vout = SDL_VoutAndroid_CreateForAndroidSurface(); //> Sets vout->create_overlay = func_create_overlay
    if (!mp->ffplayer->vout)
        goto fail;

    mp->ffplayer->pipeline = ffpipeline_create_from_android(mp->ffplayer); //> See item 3
    if (!mp->ffplayer->pipeline)
        goto fail;

    ffpipeline_set_vout(mp->ffplayer->pipeline, mp->ffplayer->vout); //> Attach the vout to the video pipeline

    return mp;

fail:
    ijkmp_dec_ref_p(&mp);
    return NULL;
}

//> 1. Caller of ijkmp_android_create(); source file: ijkplayer_jni.c
static void IjkMediaPlayer_native_setup(JNIEnv *env, jobject thiz, jobject weak_this)
{
    MPTRACE("%s\n", __func__);
    IjkMediaPlayer *mp = ijkmp_android_create(message_loop);
    JNI_CHECK_GOTO(mp, env, "java/lang/OutOfMemoryError", "mpjni: native_setup: ijkmp_create() failed", LABEL_RETURN);

    jni_set_media_player(env, thiz, mp);
    ijkmp_set_weak_thiz(mp, (*env)->NewGlobalRef(env, weak_this));
    ijkmp_set_inject_opaque(mp, ijkmp_get_weak_thiz(mp));
    ijkmp_set_ijkio_inject_opaque(mp, ijkmp_get_weak_thiz(mp));
    ijkmp_android_set_mediacodec_select_callback(mp, mediacodec_select_callback, ijkmp_get_weak_thiz(mp));

LABEL_RETURN:
    ijkmp_dec_ref_p(&mp);
}
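Note that IjkMediaPlayer_native_setup() calls ijkmp_dec_ref_p(&mp) even on success. That only balances if jni_set_media_player() takes its own reference when it stores mp in the Java object; its body is not shown here, so treat that as an assumption. The sketch below models the hand-off with a hypothetical Player type and a global variable standing in for the Java object's native-pointer field.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical player with a plain reference count (single-threaded sketch). */
typedef struct Player { int ref_count; } Player;

static Player *player_create(void)         /* like ijkmp_create(): starts at 1 */
{
    Player *p = calloc(1, sizeof(*p));
    if (p)
        p->ref_count = 1;
    return p;
}

static void player_inc_ref(Player *p) { p->ref_count++; }

static void player_dec_ref_p(Player **pp)  /* like ijkmp_dec_ref_p(): also NULLs the pointer */
{
    if (!pp || !*pp)
        return;
    if (--(*pp)->ref_count == 0)
        free(*pp);
    *pp = NULL;
}

/* Hypothetical stand-in for the Java object's native-pointer field. */
static Player *g_java_field;

static void set_media_player(Player *p)    /* ASSUMED to take its own reference */
{
    if (p)
        player_inc_ref(p);
    g_java_field = p;
}

static void native_setup(void)
{
    Player *mp = player_create();          /* ref = 1, owned by this local */
    if (!mp)
        goto out;
    set_media_player(mp);                  /* ref = 2, one owned by the "Java" side */
out:
    player_dec_ref_p(&mp);                 /* drop the local ref: ref = 1, mp = NULL */
}
```

On the failure path mp is NULL and the final dec-ref is a no-op, which is why a single LABEL_RETURN works for both cases.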

So this whole chain is triggered when the Android app creates a player through the JNI method native_setup().

Next, let's sort out the data structures involved in this part, which brings us to the heart of the matter.

struct IjkMediaPlayer {
    volatile int ref_count;
    pthread_mutex_t mutex;
    FFPlayer *ffplayer;        //> 1. Pointer to the FFPlayer
    int (*msg_loop)(void*);    //> 2. msg_loop: thread function that exchanges messages with the player
    SDL_Thread *msg_thread;
    SDL_Thread _msg_thread;
    int mp_state;
    char *data_source;         //> 3. Playback source URL set by the user
    void *weak_thiz;           //> 4. Associated Android jobject
    int restart;
    int restart_from_beginning;
    int seek_req;
    long seek_msec;
};

typedef struct IJKFF_Pipeline_Opaque {
    FFPlayer *ffp;             //> Points to the player
    SDL_mutex *surface_mutex;
    jobject jsurface;
    volatile bool is_surface_need_reconfigure;
    bool (*mediacodec_select_callback)(void *opaque, ijkmp_mediacodecinfo_context *mcc);
    void *mediacodec_select_callback_opaque; //> Callback interface
    SDL_Vout *weak_vout;
    float left_volume;
    float right_volume;
} IJKFF_Pipeline_Opaque;

Summary:

- The Android app creates the player through the JNI method native_setup();
- The IjkMediaPlayer struct defines the player's basic features: it owns an FFPlayer and has a message-exchange mechanism;
- Control interfaces are attached through the Pipeline;
- SDL_AMediaCodecJava_init() installs the function pointers through which the player drives the Java MediaCodec;
- The user calls IjkMediaPlayer_prepareAsync() through JNI, which starts asynchronous preparation (MP_STATE_ASYNC_PREPARING). Along the way stream_open() is called, which creates the read_thread() thread; read_thread() in turn starts video_thread() as described at the beginning of this article.

Combined with the earlier walkthroughs, this gives us an outline-level picture of ijkPlayer as a whole.

Part 3: how ijkPlayer exchanges messages

The message_loop() function is implemented as follows:

static int message_loop(void *arg)
{
    MPTRACE("%s\n", __func__);

    JNIEnv *env = NULL;
    if (JNI_OK != SDL_JNI_SetupThreadEnv(&env)) {
        ALOGE("%s: SetupThreadEnv failed\n", __func__);
        return -1;
    }

    IjkMediaPlayer *mp = (IjkMediaPlayer*) arg;
    JNI_CHECK_GOTO(mp, env, NULL, "mpjni: native_message_loop: null mp", LABEL_RETURN);

    message_loop_n(env, mp);

LABEL_RETURN:
    ijkmp_dec_ref_p(&mp);

    MPTRACE("message_loop exit");
    return 0;
}

//> The loop body
static void message_loop_n(JNIEnv *env, IjkMediaPlayer *mp)
{
    jobject weak_thiz = (jobject) ijkmp_get_weak_thiz(mp);
    JNI_CHECK_GOTO(weak_thiz, env, NULL, "mpjni: message_loop_n: null weak_thiz", LABEL_RETURN);

    while (1) {
        AVMessage msg;

        int retval = ijkmp_get_msg(mp, &msg, 1); //> Fetch the next message; first-level processing happens inside
        if (retval < 0)
            break;

        // block-get should never return 0
        assert(retval > 0);

        switch (msg.what) {
        //> Second level: forward the message to the Java layer
        case FFP_MSG_FLUSH:
            MPTRACE("FFP_MSG_FLUSH:\n");
            post_event(env, weak_thiz, MEDIA_NOP, 0, 0);
            break;
        case FFP_MSG_ERROR:
            MPTRACE("FFP_MSG_ERROR: %d\n", msg.arg1);
            post_event(env, weak_thiz, MEDIA_ERROR, MEDIA_ERROR_IJK_PLAYER, msg.arg1);
            break;
        case FFP_MSG_PREPARED:
            MPTRACE("FFP_MSG_PREPARED:\n");
            post_event(env, weak_thiz, MEDIA_PREPARED, 0, 0);
            break;
        case FFP_MSG_COMPLETED:
            MPTRACE("FFP_MSG_COMPLETED:\n");
            post_event(env, weak_thiz, MEDIA_PLAYBACK_COMPLETE, 0, 0);
            break;
        case FFP_MSG_VIDEO_SIZE_CHANGED: //> Resolution changed
            MPTRACE("FFP_MSG_VIDEO_SIZE_CHANGED: %d, %d\n", msg.arg1, msg.arg2);
            post_event(env, weak_thiz, MEDIA_SET_VIDEO_SIZE, msg.arg1, msg.arg2);
            break;
        case FFP_MSG_SAR_CHANGED:
            MPTRACE("FFP_MSG_SAR_CHANGED: %d, %d\n", msg.arg1, msg.arg2);
            post_event(env, weak_thiz, MEDIA_SET_VIDEO_SAR, msg.arg1, msg.arg2);
            break;
        case FFP_MSG_VIDEO_RENDERING_START:
            MPTRACE("FFP_MSG_VIDEO_RENDERING_START:\n");
            post_event(env, weak_thiz, MEDIA_INFO, MEDIA_INFO_VIDEO_RENDERING_START, 0);
            break;
        case FFP_MSG_AUDIO_RENDERING_START:
            MPTRACE("FFP_MSG_AUDIO_RENDERING_START:\n");
            post_event(env, weak_thiz, MEDIA_INFO, MEDIA_INFO_AUDIO_RENDERING_START, 0);
            break;
        case FFP_MSG_VIDEO_ROTATION_CHANGED: //> Video rotation changed (portrait/landscape)
            MPTRACE("FFP_MSG_VIDEO_ROTATION_CHANGED: %d\n", msg.arg1);
            post_event(env, weak_thiz, MEDIA_INFO, MEDIA_INFO_VIDEO_ROTATION_CHANGED, msg.arg1);
            break;
        case FFP_MSG_AUDIO_DECODED_START:
            MPTRACE("FFP_MSG_AUDIO_DECODED_START:\n");
            post_event(env, weak_thiz, MEDIA_INFO, MEDIA_INFO_AUDIO_DECODED_START, 0);
            break;
        case FFP_MSG_VIDEO_DECODED_START:
            MPTRACE("FFP_MSG_VIDEO_DECODED_START:\n");
            post_event(env, weak_thiz, MEDIA_INFO, MEDIA_INFO_VIDEO_DECODED_START, 0);
            break;
        case FFP_MSG_OPEN_INPUT:
            MPTRACE("FFP_MSG_OPEN_INPUT:\n"); //> Input opened
            post_event(env, weak_thiz, MEDIA_INFO, MEDIA_INFO_OPEN_INPUT, 0);
            break;
        case FFP_MSG_FIND_STREAM_INFO:
            MPTRACE("FFP_MSG_FIND_STREAM_INFO:\n"); //> Stream information found
            post_event(env, weak_thiz, MEDIA_INFO, MEDIA_INFO_FIND_STREAM_INFO, 0);
            break;
        case FFP_MSG_COMPONENT_OPEN:
            MPTRACE("FFP_MSG_COMPONENT_OPEN:\n"); //> Stream component opened
            post_event(env, weak_thiz, MEDIA_INFO, MEDIA_INFO_COMPONENT_OPEN, 0);
            break;
        case FFP_MSG_BUFFERING_START:
            MPTRACE("FFP_MSG_BUFFERING_START:\n");
            post_event(env, weak_thiz, MEDIA_INFO, MEDIA_INFO_BUFFERING_START, msg.arg1);
            break;
        case FFP_MSG_BUFFERING_END:
            MPTRACE("FFP_MSG_BUFFERING_END:\n");
            post_event(env, weak_thiz, MEDIA_INFO, MEDIA_INFO_BUFFERING_END, msg.arg1);
            break;
        case FFP_MSG_BUFFERING_UPDATE:
            // MPTRACE("FFP_MSG_BUFFERING_UPDATE: %d, %d", msg.arg1, msg.arg2);
            post_event(env, weak_thiz, MEDIA_BUFFERING_UPDATE, msg.arg1, msg.arg2);
            break;
        case FFP_MSG_BUFFERING_BYTES_UPDATE:
            break;
        case FFP_MSG_BUFFERING_TIME_UPDATE:
            break;
        case FFP_MSG_SEEK_COMPLETE:
            MPTRACE("FFP_MSG_SEEK_COMPLETE:\n");
            post_event(env, weak_thiz, MEDIA_SEEK_COMPLETE, 0, 0);
            break;
        case FFP_MSG_ACCURATE_SEEK_COMPLETE:
            MPTRACE("FFP_MSG_ACCURATE_SEEK_COMPLETE:\n");
            post_event(env, weak_thiz, MEDIA_INFO, MEDIA_INFO_MEDIA_ACCURATE_SEEK_COMPLETE, msg.arg1);
            break;
        case FFP_MSG_PLAYBACK_STATE_CHANGED:
            break;
        case FFP_MSG_TIMED_TEXT:
            if (msg.obj) {
                jstring text = (*env)->NewStringUTF(env, (char *)msg.obj);
                post_event2(env, weak_thiz, MEDIA_TIMED_TEXT, 0, 0, text);
                J4A_DeleteLocalRef__p(env, &text);
            }
            else {
                post_event2(env, weak_thiz, MEDIA_TIMED_TEXT, 0, 0, NULL);
            }
            break;
        case FFP_MSG_GET_IMG_STATE:
            if (msg.obj) {
                jstring file_name = (*env)->NewStringUTF(env, (char *)msg.obj);
                post_event2(env, weak_thiz, MEDIA_GET_IMG_STATE, msg.arg1, msg.arg2, file_name);
                J4A_DeleteLocalRef__p(env, &file_name);
            }
            else {
                post_event2(env, weak_thiz, MEDIA_GET_IMG_STATE, msg.arg1, msg.arg2, NULL);
            }
            break;
        case FFP_MSG_VIDEO_SEEK_RENDERING_START:
            MPTRACE("FFP_MSG_VIDEO_SEEK_RENDERING_START:\n");
            post_event(env, weak_thiz, MEDIA_INFO, MEDIA_INFO_VIDEO_SEEK_RENDERING_START, msg.arg1);
            break;
        case FFP_MSG_AUDIO_SEEK_RENDERING_START:
            MPTRACE("FFP_MSG_AUDIO_SEEK_RENDERING_START:\n");
            post_event(env, weak_thiz, MEDIA_INFO, MEDIA_INFO_AUDIO_SEEK_RENDERING_START, msg.arg1);
            break;
        default:
            ALOGE("unknown FFP_MSG_xxx(%d)\n", msg.what);
            break;
        }
        msg_free_res(&msg);
    }

LABEL_RETURN:
    ;
}
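The second level is essentially a translation from internal FFP_MSG_* codes to MEDIA_* events delivered to Java through post_event(). Pulling that translation into a pure function makes it testable without a JVM. The sketch below does so with made-up numeric IDs and hypothetical names (FMSG_*, EV_*, translate()); the real constants are not reproduced here.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical IDs; the real FFP_MSG_* / MEDIA_* values differ. */
enum { FMSG_PREPARED = 200, FMSG_COMPLETED = 300, FMSG_SIZE_CHANGED = 400,
       FMSG_STATE_CHANGED = 700 };
enum { EV_PREPARED = 1, EV_PLAYBACK_COMPLETE = 2, EV_SET_VIDEO_SIZE = 5 };

typedef struct { int what, arg1, arg2; } Msg;
typedef struct { int what, arg1, arg2; } Event;

/* Returns 1 and fills *ev if the message should reach Java, 0 if it is swallowed. */
static int translate(const Msg *m, Event *ev)
{
    assert(m && ev);
    switch (m->what) {
    case FMSG_PREPARED:
        *ev = (Event){ EV_PREPARED, 0, 0 };
        return 1;
    case FMSG_COMPLETED:
        *ev = (Event){ EV_PLAYBACK_COMPLETE, 0, 0 };
        return 1;
    case FMSG_SIZE_CHANGED: /* width/height travel in arg1/arg2 */
        *ev = (Event){ EV_SET_VIDEO_SIZE, m->arg1, m->arg2 };
        return 1;
    case FMSG_STATE_CHANGED: /* like FFP_MSG_PLAYBACK_STATE_CHANGED: no event */
    default:
        return 0;
    }
}
```

In this arrangement message_loop_n() would shrink to "get message, translate, post if non-zero", keeping the JNI calls in one place.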

The first level of message processing:

/* need to call msg_free_res for freeing the resource obtained in msg */
int ijkmp_get_msg(IjkMediaPlayer *mp, AVMessage *msg, int block)
{
    assert(mp);
    while (1) {
        int continue_wait_next_msg = 0;
        int retval = msg_queue_get(&mp->ffplayer->msg_queue, msg, block); //> Get a message from the player's message queue
        if (retval <= 0)
            return retval;

        switch (msg->what) {
        //> Update the player state according to the message
        case FFP_MSG_PREPARED: //> The player has finished preparing
            MPTRACE("ijkmp_get_msg: FFP_MSG_PREPARED\n");
            pthread_mutex_lock(&mp->mutex);
            if (mp->mp_state == MP_STATE_ASYNC_PREPARING) {
                ijkmp_change_state_l(mp, MP_STATE_PREPARED);
            } else {
                // FIXME: 1: onError() ?
                av_log(mp->ffplayer, AV_LOG_DEBUG, "FFP_MSG_PREPARED: expecting mp_state==MP_STATE_ASYNC_PREPARING\n");
            }
            if (!mp->ffplayer->start_on_prepared) {
                ijkmp_change_state_l(mp, MP_STATE_PAUSED);
            }
            pthread_mutex_unlock(&mp->mutex);
            break;

        case FFP_MSG_COMPLETED:
            MPTRACE("ijkmp_get_msg: FFP_MSG_COMPLETED\n");
            pthread_mutex_lock(&mp->mutex);
            mp->restart = 1;
            mp->restart_from_beginning = 1;
            ijkmp_change_state_l(mp, MP_STATE_COMPLETED);
            pthread_mutex_unlock(&mp->mutex);
            break;

        case FFP_MSG_SEEK_COMPLETE:
            MPTRACE("ijkmp_get_msg: FFP_MSG_SEEK_COMPLETE\n");
            pthread_mutex_lock(&mp->mutex);
            mp->seek_req = 0;
            mp->seek_msec = 0;
            pthread_mutex_unlock(&mp->mutex);
            break;

        case FFP_REQ_START: //> Start the player
            MPTRACE("ijkmp_get_msg: FFP_REQ_START\n");
            continue_wait_next_msg = 1;
            pthread_mutex_lock(&mp->mutex);
            if (0 == ikjmp_chkst_start_l(mp->mp_state)) {
                // FIXME: 8 check seekable
                if (mp->restart) {
                    if (mp->restart_from_beginning) {
                        av_log(mp->ffplayer, AV_LOG_DEBUG, "ijkmp_get_msg: FFP_REQ_START: restart from beginning\n");
                        retval = ffp_start_from_l(mp->ffplayer, 0);
                        if (retval == 0)
                            ijkmp_change_state_l(mp, MP_STATE_STARTED);
                    } else {
                        av_log(mp->ffplayer, AV_LOG_DEBUG, "ijkmp_get_msg: FFP_REQ_START: restart from seek pos\n");
                        retval = ffp_start_l(mp->ffplayer);
                        if (retval == 0)
                            ijkmp_change_state_l(mp, MP_STATE_STARTED);
                    }
                    mp->restart = 0;
                    mp->restart_from_beginning = 0;
                } else {
                    av_log(mp->ffplayer, AV_LOG_DEBUG, "ijkmp_get_msg: FFP_REQ_START: start on fly\n");
                    retval = ffp_start_l(mp->ffplayer);
                    if (retval == 0)
                        ijkmp_change_state_l(mp, MP_STATE_STARTED);
                }
            }
            pthread_mutex_unlock(&mp->mutex);
            break;

        case FFP_REQ_PAUSE: //> Pause the player
            MPTRACE("ijkmp_get_msg: FFP_REQ_PAUSE\n");
            continue_wait_next_msg = 1;
            pthread_mutex_lock(&mp->mutex);
            if (0 == ikjmp_chkst_pause_l(mp->mp_state)) {
                int pause_ret = ffp_pause_l(mp->ffplayer);
                if (pause_ret == 0)
                    ijkmp_change_state_l(mp, MP_STATE_PAUSED);
            }
            pthread_mutex_unlock(&mp->mutex);
            break;

        case FFP_REQ_SEEK:
            MPTRACE("ijkmp_get_msg: FFP_REQ_SEEK\n");
            continue_wait_next_msg = 1;
            pthread_mutex_lock(&mp->mutex);
            if (0 == ikjmp_chkst_seek_l(mp->mp_state)) {
                mp->restart_from_beginning = 0;
                if (0 == ffp_seek_to_l(mp->ffplayer, msg->arg1)) {
                    av_log(mp->ffplayer, AV_LOG_DEBUG, "ijkmp_get_msg: FFP_REQ_SEEK: seek to %d\n", (int)msg->arg1);
                }
            }
            pthread_mutex_unlock(&mp->mutex);
            break;
        }

        if (continue_wait_next_msg) {
            msg_free_res(msg);
            continue;
        }

        return retval;
    }

    return -1;
}
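The first level therefore acts as a filter: FFP_REQ_* requests are consumed inside ijkmp_get_msg() (continue_wait_next_msg = 1), while the other messages update the state and are then handed to the second level. The sketch below models only the FFP_MSG_PREPARED, FFP_REQ_START and FFP_REQ_PAUSE transitions. The states, IDs, MiniMp type and the simplified start/pause checks are hypothetical; the real ikjmp_chkst_*_l() checks and the ffp_start_l()/ffp_pause_l() calls are omitted.

```c
#include <assert.h>

/* Hypothetical, trimmed-down states and message IDs. */
enum MpState { ST_ASYNC_PREPARING, ST_PREPARED, ST_STARTED, ST_PAUSED };
enum { M_PREPARED = 1, R_START = 2, R_PAUSE = 3 };

typedef struct { enum MpState state; int start_on_prepared; } MiniMp;

/* Returns 1 if the message must also go to the second level (Java),
   0 if it was a request consumed here. */
static int first_level(MiniMp *mp, int what)
{
    assert(mp);
    switch (what) {
    case M_PREPARED:
        if (mp->state == ST_ASYNC_PREPARING)
            mp->state = ST_PREPARED;
        if (!mp->start_on_prepared)
            mp->state = ST_PAUSED;      /* wait for an explicit start() */
        return 1;
    case R_START:                       /* simplified stand-in for ikjmp_chkst_start_l() */
        if (mp->state == ST_PREPARED || mp->state == ST_PAUSED)
            mp->state = ST_STARTED;
        return 0;
    case R_PAUSE:                       /* simplified stand-in for ikjmp_chkst_pause_l() */
        if (mp->state == ST_STARTED)
            mp->state = ST_PAUSED;
        return 0;
    default:
        return 1;
    }
}
```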

//> Dequeue one message
/* return < 0 if aborted, 0 if no msg and > 0 if msg. */
inline static int msg_queue_get(MessageQueue *q, AVMessage *msg, int block)
{
    AVMessage *msg1;
    int ret;

    SDL_LockMutex(q->mutex);

    for (;;) {
        if (q->abort_request) {
            ret = -1;
            break;
        }

        msg1 = q->first_msg;
        if (msg1) {
            q->first_msg = msg1->next;
            if (!q->first_msg)
                q->last_msg = NULL;
            q->nb_messages--;
            *msg = *msg1;
            msg1->obj = NULL;
#ifdef FFP_MERGE
            av_free(msg1);
#else
            msg1->next = q->recycle_msg;
            q->recycle_msg = msg1;
#endif
            ret = 1;
            break;
        } else if (!block) {
            ret = 0;
            break;
        } else {
            SDL_CondWait(q->cond, q->mutex);
        }
    }
    SDL_UnlockMutex(q->mutex);
    return ret;
}
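msg_queue_get() is a classic mutex plus condition-variable blocking queue, with a free list (recycle_msg) so that message nodes are reused instead of freed. Below is a self-contained POSIX-threads sketch of the same return contract. MsgQueue and the q_* functions are hypothetical names, and the pthread calls stand in for ijkplayer's SDL_LockMutex()/SDL_CondWait() wrappers.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

typedef struct Msg { int what; struct Msg *next; } Msg;

typedef struct {
    Msg *first, *last, *recycle;   /* FIFO list plus a free list for reuse */
    int nb_messages, abort_request;
    pthread_mutex_t mutex;
    pthread_cond_t cond;
} MsgQueue;

static void q_init(MsgQueue *q)
{
    q->first = q->last = q->recycle = NULL;
    q->nb_messages = q->abort_request = 0;
    pthread_mutex_init(&q->mutex, NULL);
    pthread_cond_init(&q->cond, NULL);
}

static int q_put(MsgQueue *q, int what)
{
    pthread_mutex_lock(&q->mutex);
    Msg *m = q->recycle;               /* reuse a node if one is available */
    if (m)
        q->recycle = m->next;
    else
        m = malloc(sizeof(*m));
    if (!m) {
        pthread_mutex_unlock(&q->mutex);
        return -1;
    }
    m->what = what;
    m->next = NULL;
    if (q->last)
        q->last->next = m;
    else
        q->first = m;
    q->last = m;
    q->nb_messages++;
    pthread_cond_signal(&q->cond);     /* wake a blocked getter */
    pthread_mutex_unlock(&q->mutex);
    return 0;
}

/* < 0 if aborted, 0 if empty and non-blocking, > 0 if a message was taken. */
static int q_get(MsgQueue *q, int *what, int block)
{
    int ret;
    pthread_mutex_lock(&q->mutex);
    for (;;) {
        if (q->abort_request) { ret = -1; break; }
        Msg *m = q->first;
        if (m) {
            q->first = m->next;
            if (!q->first)
                q->last = NULL;
            q->nb_messages--;
            *what = m->what;
            m->next = q->recycle;      /* recycle instead of free */
            q->recycle = m;
            ret = 1;
            break;
        } else if (!block) {
            ret = 0;
            break;
        } else {
            pthread_cond_wait(&q->cond, &q->mutex); /* loop re-checks after wakeup */
        }
    }
    pthread_mutex_unlock(&q->mutex);
    return ret;
}

static void q_abort(MsgQueue *q)
{
    pthread_mutex_lock(&q->mutex);
    q->abort_request = 1;
    pthread_cond_broadcast(&q->cond);  /* release every blocked getter */
    pthread_mutex_unlock(&q->mutex);
}
```

The wait sits inside the for loop rather than behind a single if, so spurious wakeups and aborts are both handled by re-checking the state under the lock.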

Summary:

- When ijkPlayer is created, the msg_loop() function (message_loop() on Android) is attached to the player;
- Message processing uses two levels. The first level, in ijkmp_get_msg(), handles events related to the ffplay player itself and updates the player state; the second level, in message_loop_n(), handles message exchange between Android and the player by forwarding events to the Java layer with post_event().

边栏推荐

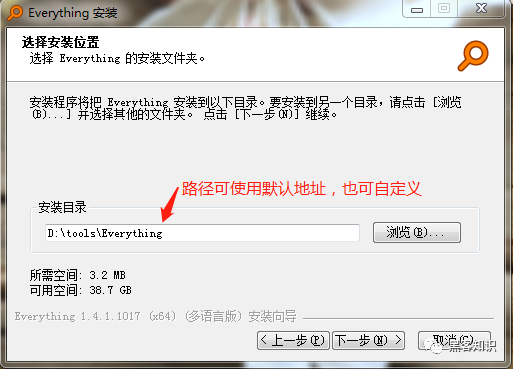

- Fichier local second Search Tool everything

- Recommend a capacity expansion tool to completely solve the problem of insufficient disk space in Disk C and other disks

- Intelligent digital asset management helps enterprises win the post epidemic Era

- Kotlin base generics

- 347. top k high frequency elements heap sort + bucket sort +map

- JetPack - - - DataBinding

- Differences among concurrent, parallel, serial, synchronous and asynchronous

- 【2022高考季】作为一个过来人想说的话

- Uni app dynamic setting title

- 本地文件秒搜工具 Everything

猜你喜欢

The boys x pubgmobile linkage is coming! Check out the latest game posters

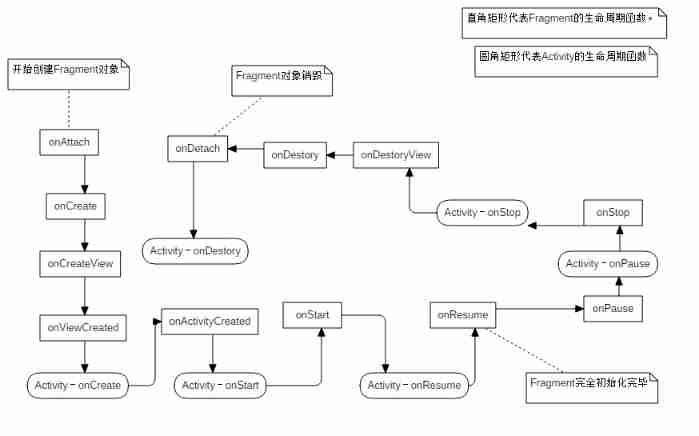

Relationship between fragment lifecycle and activity

Wechat applet (get location)

Solutions to common problems in small program development

c语言对文件相关的处理和应用

JetPack - - - Navigation

Local file search tool everything

‘ipconfig‘ 不是内部或外部命令,也不是可运行的程序 或批处理文件。

JVM基础

Kotlin collaboration channel

随机推荐

Common websites and tools

Uni app upload file

JS convert text to language for playback

Super model logo online design and production tool

Thread pool learning

El form form verification

Uni app disable native navigation bar

推荐扩容工具,彻底解决C盘及其它磁盘空间不够的难题

MFS详解(七)——MFS客户端与web监控安装配置

The processing and application of C language to documents

Detailed explanation of Yanghui triangle

[JS] handwriting call(), apply(), bind()

‘ipconfig‘ 不是内部或外部命令,也不是可运行的程序 或批处理文件。

MFS explanation (V) -- MFS metadata log server installation and configuration

Uniapp secondary encapsulates uview components, and the parent component controls display and hiding

Time complexity and space complexity

【Kernel】驱动编译的两种方式:编译成模块、编译进内核(使用杂项设备驱动模板)

线程相关点

BlockingQueue源码

Free screen recording software captura download and installation