Li Hongyi machine learning team study check-in, day 06 --- convolutional neural networks

2022-07-27 05:27:00 【Charleslc's blog】

Preface

I signed up for a team study check-in, and we are now on task 6. Today's topic is convolutional neural networks. I had heard of them before but never studied them properly, so I am taking this chance to learn them well.

Reference video: https://www.bilibili.com/video/av59538266

Reference notes: https://github.com/datawhalechina/leeml-notes

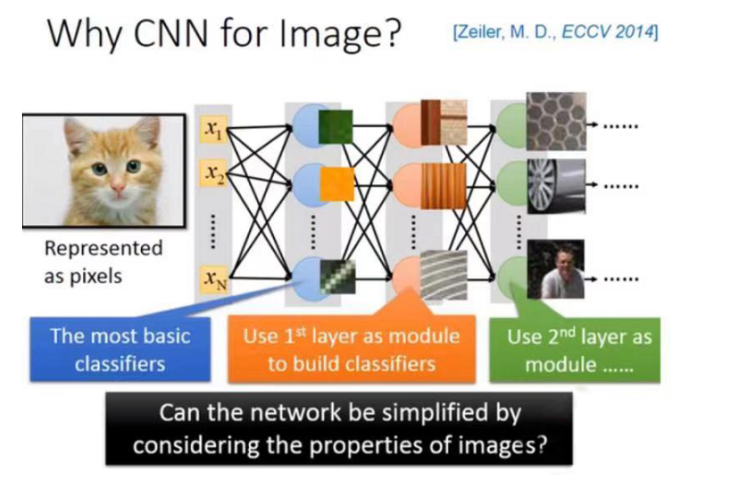

Why CNN?

CNNs are generally used for image processing. Of course, you can also do image processing with a general (fully connected) neural network; you don't have to use a CNN.

But doing it that way gives the hidden layers too many parameters. What a CNN does is simplify the architecture of the neural network.
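
To get a feel for "too many parameters", here is a rough count for a single fully connected hidden layer. The image size and layer width are made-up numbers for illustration only; they do not come from the post.

```python
# Hypothetical sizes, chosen only for illustration (not from the post).
height, width, channels = 100, 100, 3   # assumed color image
hidden_neurons = 1000                   # assumed width of the first hidden layer

input_dim = height * width * channels    # every pixel value is one input
fc_weights = input_dim * hidden_neurons  # one weight per (input, neuron) pair, biases ignored

print(input_dim)   # 30000
print(fc_weights)  # 30000000
```

Even this single layer needs tens of millions of weights, which is the cost a CNN tries to cut.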

CNN architecture

The CNN architecture is as follows. An image is fed in and passes through a convolution layer, followed by max pooling, then another convolution, then another max pooling. This process can be repeated many times. How many times is something you decide in advance: it is part of the network architecture, just like the number of layers in a neural network, so the number of convolution layers and max pooling layers is fixed when you design the architecture. Once the convolution and max pooling steps you decided on are done, there is one more step, called flatten. The output of flatten is then fed into an ordinary fully connected feedforward network, which gives the image recognition result.
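
The pipeline above can be sketched by tracing only the shapes through convolution, max pooling, convolution, max pooling and flatten. This is a minimal sketch, not a real network: the 28*28 grayscale input, the 3*3 filters without padding, the 2*2 pooling and the filter counts (25 and 50) are all assumptions picked for illustration.

```python
def conv_shape(shape, n_filters, k=3, stride=1):
    """Shape after a convolution layer with n_filters k*k filters and no padding."""
    _, h, w = shape
    return (n_filters, (h - k) // stride + 1, (w - k) // stride + 1)

def pool_shape(shape, size=2):
    """Shape after non-overlapping size*size max pooling."""
    c, h, w = shape
    return (c, h // size, w // size)

def flatten_len(shape):
    """Length of the vector produced by flatten."""
    c, h, w = shape
    return c * h * w

shape = (1, 28, 28)            # assumed input: (channels, height, width)
shape = conv_shape(shape, 25)  # (25, 26, 26)
shape = pool_shape(shape)      # (25, 13, 13)
shape = conv_shape(shape, 50)  # (50, 11, 11)
shape = pool_shape(shape)      # (50, 5, 5)
print(shape, flatten_len(shape))  # (50, 5, 5) 1250
```

The 1250 values at the end are what would be handed to the fully connected feedforward network.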

Convolution

Property 1:

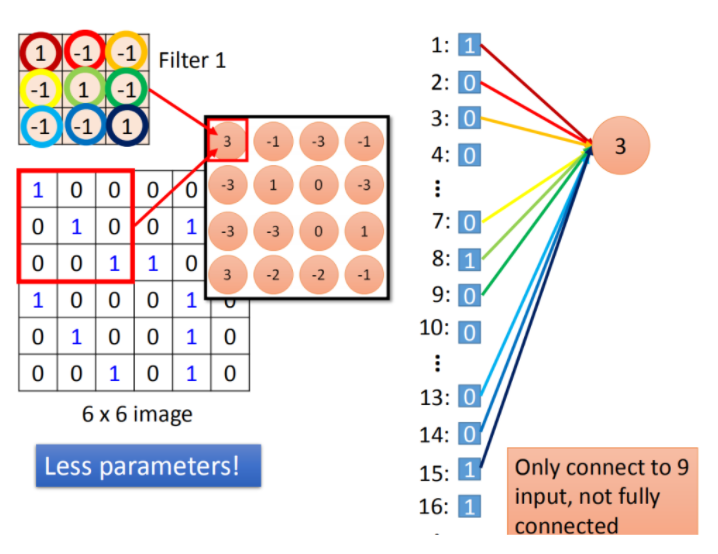

Suppose the input to our network is a 6*6 image, where 1 means there is ink and 0 means no ink. A convolution layer consists of a set of filters (each filter is in effect equivalent to one neuron in a fully connected layer). Each filter is really just a matrix (3*3 here), and the parameters inside each filter (the value of every element of the matrix) are parameters of the network. These parameters are learned; they are not designed by hand.

If a filter is 3*3, it detects a 3*3 pattern: while detecting, it only looks at a 3*3 region to decide whether the pattern is present.

The first filter is a 3*3 matrix. Start at the upper-left corner of the image and compute the inner product of the image's 9 values with the filter's 9 values; the result is 3. Then move the filter by the stride (the stride is something you choose yourself). Let's set the stride to 1 first. The result is shown on the right side of the figure.

After this, the original 6*6 matrix becomes a 4*4 matrix through the convolution process. Look at the filter's values: the diagonal is 1, 1, 1. So its job is to detect whether 1, 1, 1 appears consecutively from upper left to lower right in the image. For example, it appears where the blue lines are drawn in the figure, so this filter tells you that the maximum value appears at the upper-left and lower-left corners of the output.
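
The sliding inner product can be sketched in a few lines of plain Python. The figure's numbers are not reproduced in the text, so the 6*6 image and the filter below are assumed values, chosen to agree with what the text states (first inner product 3, next one -1, diagonal of the filter equal to 1, 1, 1). The helper name `conv2d` is made up for this sketch and assumes a square image with no padding.

```python
def conv2d(image, kernel, stride=1):
    """Slide kernel over a square image (no padding); inner product at each position."""
    k = len(kernel)
    n_out = (len(image) - k) // stride + 1
    out = []
    for i in range(0, n_out * stride, stride):
        row = []
        for j in range(0, n_out * stride, stride):
            row.append(sum(image[i + a][j + b] * kernel[a][b]
                           for a in range(k) for b in range(k)))
        out.append(row)
    return out

# Assumed example values (1 = ink, 0 = no ink).
IMAGE = [
    [1, 0, 0, 0, 0, 1],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 1, 1, 0, 0],
    [1, 0, 0, 0, 1, 0],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 1, 0, 1, 0],
]
FILTER1 = [
    [ 1, -1, -1],
    [-1,  1, -1],
    [-1, -1,  1],
]

feature_map = conv2d(IMAGE, FILTER1, stride=1)
for row in feature_map:
    print(row)
# [3, -1, -3, -1]
# [-3, 1, 0, -3]
# [-3, -3, 0, 1]
# [3, -2, -2, -1]
```

With these values the largest output, 3, sits at the upper-left and lower-left corners, which is where the diagonal pattern appears in the image.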

A convolution layer has many filters (what we just saw is the result of one filter). The other filters have different parameters (filter 2 in the figure). Filter 2 does exactly the same thing as filter 1: placing it at the upper-left corner and taking the inner product gives -1, and so on. Convolving filter 2 with the input image gives another 4*4 matrix. The red 4*4 matrix and the blue 4*4 matrix together are called the feature map. You get as many images as you have filters (with 100 filters you get 100 4*4 images).
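
A small sketch of "one output image per filter". As the figure is not reproduced in the text, the image and both filters are assumed values; filter 2 is picked so that its first inner product is -1, as the text says. `conv2d` is a hypothetical helper written for this example (square image, stride 1, no padding).

```python
def conv2d(image, kernel):
    """Stride-1 convolution without padding on a square image."""
    k = len(kernel)
    n_out = len(image) - k + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(k) for b in range(k))
             for j in range(n_out)]
            for i in range(n_out)]

# Assumed example values (1 = ink, 0 = no ink).
IMAGE = [
    [1, 0, 0, 0, 0, 1],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 1, 1, 0, 0],
    [1, 0, 0, 0, 1, 0],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 1, 0, 1, 0],
]
FILTER1 = [[1, -1, -1], [-1, 1, -1], [-1, -1, 1]]   # diagonal detector
FILTER2 = [[-1, 1, -1], [-1, 1, -1], [-1, 1, -1]]   # vertical-line detector

# One 4*4 output per filter; stacked together they form the feature map.
feature_maps = [conv2d(IMAGE, f) for f in (FILTER1, FILTER2)]

print(len(feature_maps))      # 2
print(feature_maps[0][0][0])  # 3
print(feature_maps[1][0][0])  # -1
```

With 100 filters the same list comprehension would produce 100 matrices of size 4*4.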

For a color image it is the same. A color image is made up of RGB channels, so an image is just several matrices stacked together.
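
For stacked matrices, one common convention (an assumption here, since the post does not spell it out) is that each filter also has one 3*3 slice per channel, and the inner products of all channels are summed into a single output value. The tiny 4*4 image and the filter values below are made up for illustration.

```python
def conv2d_multichannel(image, kernel):
    """image: list of C square matrices; kernel: list of C k*k slices.
    Sums the inner products over all channels (stride 1, no padding)."""
    k = len(kernel[0])
    n_out = len(image[0]) - k + 1
    return [[sum(image[c][i + a][j + b] * kernel[c][a][b]
                 for c in range(len(image))
                 for a in range(k) for b in range(k))
             for j in range(n_out)]
            for i in range(n_out)]

# Hypothetical 4*4 RGB image: R is all 1, G is all 0, B is all 2.
rgb_image = [[[v] * 4 for _ in range(4)] for v in (1, 0, 2)]
# Hypothetical filter: +1 on the R and G slices, -1 on the B slice.
rgb_filter = [[[w] * 3 for _ in range(3)] for w in (1, 1, -1)]

out = conv2d_multichannel(rgb_image, rgb_filter)
print(out)  # [[-9, -9], [-9, -9]]
```

Each output value is 9*1 (R) + 9*0 (G) - 9*2 (B) = -9, so three stacked input matrices still give one output matrix per filter.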

The relationship between convolution and fully connected layers

Convolution is a fully connected layer with some of the weights removed. The output of a convolution is really just the output of the neurons in a hidden layer. If you line the two up, convolution is what you get when you take some weights out of a fully connected layer.

In a fully connected layer, a neuron is connected to all of the inputs (with 36 pixels as input, the neuron is connected to all 36 inputs). Here it is connected to only 9 inputs (to detect a pattern you don't need to look at the whole image; 9 inputs are enough), so fewer parameters are used.

Moving by stride=1 (one cell) and taking the inner product gives another value, -1. Suppose this -1 is the output of another neuron. This neuron is connected to inputs (2,3,4,8,9,10,14,15,16). In the figure, the same color represents the same weight.

In a fully connected layer these two neurons would each have their own weights. When we do convolution, we first reduce the number of weights connected to each neuron, and then force the two neurons to share the same weights. This is called weight sharing (shared weights). As a result, we use even fewer parameters than before.
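
The "fully connected layer with weights removed and shared" view can be checked numerically. In this sketch each output position is treated as one neuron over the 36 flattened pixels: its weight row is zero everywhere except at the 9 connected inputs, where it holds the filter values. The image and filter are assumed example values (the figure is not reproduced in the text), the helper names are made up, and biases are ignored.

```python
# Assumed example values (1 = ink, 0 = no ink).
IMAGE = [
    [1, 0, 0, 0, 0, 1],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 1, 1, 0, 0],
    [1, 0, 0, 0, 1, 0],
    [0, 1, 0, 0, 1, 0],
    [0, 0, 1, 0, 1, 0],
]
FILTER1 = [[1, -1, -1], [-1, 1, -1], [-1, -1, 1]]
N, K = 6, 3
M = N - K + 1  # 4 output positions per side, so 16 neurons

def connected_inputs(i, j):
    """1-based indices of the 9 pixels seen by the neuron at output position (i, j)."""
    return [(i + a) * N + (j + b) + 1 for a in range(K) for b in range(K)]

def dense_row(i, j):
    """Weights of that neuron over all 36 inputs: filter values where connected, 0 elsewhere."""
    row = [0] * (N * N)
    for a in range(K):
        for b in range(K):
            row[(i + a) * N + (j + b)] = FILTER1[a][b]  # the same 9 values for every neuron
    return row

x = [p for r in IMAGE for p in r]                  # the 36 pixels as one input vector
W = [dense_row(i, j) for i in range(M) for j in range(M)]
outputs = [sum(w * v for w, v in zip(row, x)) for row in W]

print(connected_inputs(0, 0))  # [1, 2, 3, 7, 8, 9, 13, 14, 15]
print(connected_inputs(0, 1))  # [2, 3, 4, 8, 9, 10, 14, 15, 16]
print(outputs[:2])             # [3, -1]

fully_connected = M * M * N * N  # 16 neurons * 36 weights each = 576
local_only = M * M * K * K       # 16 neurons * 9 own weights each = 144
shared = K * K                   # 9 weights shared by all 16 neurons
print(fully_connected, local_only, shared)  # 576 144 9
```

Removing connections takes the count from 576 to 144, and sharing the weights takes it from 144 down to 9.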

Max pooling

Compared with convolution, max pooling is relatively simple. Filter 1 gives a 4*4 matrix and filter 2 gives another 4*4 matrix. Next, take the output and group it in fours. Within each group you can take either the average or the maximum, merging four values into one. This makes the image smaller.

After one convolution and one max pooling, the original 6*6 image becomes a 2*2 image. The depth of each of these 2*2 pixels depends on how many filters you have (with 50 filters you get 50 dimensions). The result is a new, smaller image, and each filter corresponds to one channel.
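
Grouping the output in fours and keeping one value per group can be sketched as below. The 4*4 input is an assumed example feature map (the figure's numbers are not in the text), and `pool2d` is a hypothetical helper whose `op` argument chooses between the maximum and the average.

```python
def pool2d(fmap, size=2, op=max):
    """Split fmap into non-overlapping size*size groups and reduce each group with op."""
    out = []
    for i in range(0, len(fmap) - size + 1, size):
        row = []
        for j in range(0, len(fmap[0]) - size + 1, size):
            group = [fmap[i + a][j + b] for a in range(size) for b in range(size)]
            row.append(op(group))
        out.append(row)
    return out

# Assumed 4*4 output of one filter.
FEATURE_MAP = [
    [ 3, -1, -3, -1],
    [-3,  1,  0, -3],
    [-3, -3,  0,  1],
    [ 3, -2, -2, -1],
]

def mean(group):
    return sum(group) / len(group)

print(pool2d(FEATURE_MAP))           # [[3, 0], [3, 1]]
print(pool2d(FEATURE_MAP, op=mean))  # [[0.0, -1.75], [-1.25, -0.5]]
```

Either way the 4*4 matrix shrinks to 2*2; doing this for every filter gives a 2*2 image with one channel per filter.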

This operation can be repeated many times. One round of convolution + max pooling gives a new, smaller image, and you can keep applying convolution + max pooling to get an even smaller one.

Flatten

Flatten means straightening the feature map into a vector. After that, it can be fed into the fully connected feedforward network, and that's it.

The hands-on CNN practice will be added next time.
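
Straightening the feature map is just reading out every value in a fixed order. In this sketch the two 2*2 channels are assumed example values, and the channel-by-channel, row-by-row ordering is one possible choice, not something the post prescribes.

```python
def flatten(feature_maps):
    """Read out all channels row by row into one flat list."""
    return [v for fmap in feature_maps for row in fmap for v in row]

# Assumed pooled output: two channels (one per filter), each 2*2.
pooled = [
    [[3, 0], [3, 1]],
    [[-1, 1], [0, 3]],
]

vector = flatten(pooled)
print(vector)       # [3, 0, 3, 1, -1, 1, 0, 3]
print(len(vector))  # 8
```

The resulting 8 numbers are the input vector of the fully connected feedforward network.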
