
Detailed explanation of the Extended Physics-Informed Neural Networks (XPINN) paper

2022-07-26 02:44:00 【Pinn Shanliwa】

Authors

- Ameya D. Jagtap and George Em Karniadakis

Journal

- Communications in Computational Physics

Date

- 2020

Code

1 Abstract

The paper proposes XPINN, a domain decomposition method that is more flexible than cPINN and can be applied to any type of equation.

2 Background

cPINN uses domain decomposition and trains a small network in each subdomain, so that different subdomains can be computed in parallel. The XPINN proposed in the paper keeps these domain-decomposition advantages of cPINN and adds the following:

- Generalized space-time domain decomposition: the XPINN formulation supports highly irregular, convex or non-convex space-time domain decompositions.

- Simple interface conditions: in XPINN the interface conditions are very simple for interfaces of arbitrary shape, and no normal direction is required. The method can therefore be extended easily to arbitrarily complex geometries, even in higher dimensions.

Solving complex equations accurately, especially high-dimensional ones, has become one of the biggest challenges in scientific computing. Its advantages make XPINN a candidate for such high-dimensional complex simulations, which usually carry a large training cost.

3 XPINN Method

Definitions:

- Subdomains: the subdomains $\Omega_{q}$, $q=1,2,\cdots,N_{sd}$, are non-overlapping subdomains of the whole computational domain $\Omega$, satisfying $\Omega=\bigcup_{q=1}^{N_{sd}} \Omega_{q}$ and $\Omega_{i} \cap \Omega_{j}=\partial \Omega_{ij}$ for $i \neq j$, where $N_{sd}$ is the number of subdomains. Subdomains intersect only on their common boundary $\partial \Omega_{ij}$.

- Interface: the common boundary of two or more subdomains, where the corresponding sub-networks (sub-Nets) communicate with each other.

- sub-Net: the individual PINN used in each subdomain, with its own set of optimized hyperparameters.

- Interface conditions: these conditions stitch the decomposed subdomains together so that a solution of the governing partial differential equation is obtained on the complete domain. Depending on the nature of the governing equation, one or more interface conditions can be applied on a common interface, such as solution continuity or flux continuity.

In the figure above, the X-shaped region is the solution domain, the solid black line marks the domain boundary, and the dashed black line marks the interface. The basic XPINN interface conditions are the strong-form (residual) continuity condition and enforcement of the average solution given by the different sub-nets along the common interface. The cPINN paper notes that enforcing the average solution is not required for stability, although experiments show that it speeds up convergence.

XPINN keeps all the advantages of cPINN, such as parallelization capacity, large representation capacity, and efficient selection of hyperparameters such as the optimization method, the activation function, and the network depth or width. Unlike cPINN, XPINN can be used to solve any type of partial differential equation, not only conservation laws. In XPINN there is no need to find the normal direction in order to apply a normal flux continuity condition. This greatly reduces the complexity of the algorithm, especially for large-scale problems with complex domains and for moving-interface problems.

The neural network output in the $q^{th}$ subdomain is defined as

$$
u_{\tilde{\mathbf{\Theta}}_{q}}(\mathbf{z})=\mathcal{N}^{L}\left(\mathbf{z} ; \tilde{\mathbf{\Theta}}_{q}\right) \in \Omega_{q}, \quad q=1,2, \cdots, N_{sd}
$$

The final solution is defined as

$$
u_{\tilde{\mathbf{\Theta}}}(\mathbf{z})=\sum_{q=1}^{N_{sd}} u_{\tilde{\mathbf{\Theta}}_{q}}(\mathbf{z}) \cdot \mathbb{1}_{\Omega_{q}}(\mathbf{z})
$$

where

$$
\mathbb{1}_{\Omega_{q}}(\mathbf{z}):=\left\{\begin{array}{ll}
0 & \text{if } \mathbf{z} \notin \Omega_{q} \\
1 & \text{if } \mathbf{z} \in \Omega_{q} \backslash \text{ common interface in the } q^{th} \text{ subdomain} \\
\frac{1}{\mathcal{S}} & \text{if } \mathbf{z} \in \text{ common interface in the } q^{th} \text{ subdomain}
\end{array}\right.
$$

and $\mathcal{S}$ denotes the number of subdomains that intersect along the common interface.
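The assembly of the final solution from the sub-net outputs can be sketched numerically. This is a minimal illustration, not the authors' code: the function names `indicator_weights` and `assemble_solution`, the boolean `membership` array, and the toy 1D split at $z=0.5$ are all assumptions made for the example.

```python
import numpy as np

def indicator_weights(membership):
    """Weights of the indicator function for each subdomain and point.

    membership[q, i] is True when point i lies in the closed subdomain q.
    A point owned by S subdomains (an interface point) gets weight 1/S,
    an interior point gets weight 1, and a point outside gets weight 0.
    """
    membership = np.asarray(membership, dtype=float)
    counts = membership.sum(axis=0)  # S for every point
    if np.any(counts == 0):
        raise ValueError("every point must belong to at least one subdomain")
    return membership / counts

def assemble_solution(sub_predictions, membership):
    """Sum the sub-net outputs weighted by the indicator function."""
    weights = indicator_weights(membership)
    return (weights * np.asarray(sub_predictions, dtype=float)).sum(axis=0)

# Hypothetical 1D decomposition: Omega_1 = [0, 0.5], Omega_2 = [0.5, 1].
z = np.array([0.25, 0.5, 0.75])
membership = np.array([z <= 0.5, z >= 0.5])
sub_predictions = np.array([
    [1.0, 2.0, 9.0],  # sub-net 1; its value at z = 0.75 is ignored
    [7.0, 4.0, 5.0],  # sub-net 2; its value at z = 0.25 is ignored
])
u = assemble_solution(sub_predictions, membership)
print(u)  # [1. 3. 5.]
```

At the interface point $z=0.5$ two subdomains meet, so $\mathcal{S}=2$ and the assembled value is the average of the two sub-net outputs.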

3.1 Subdomain loss functions for forward and inverse problems

(1) Forward problem

In the $q^{th}$ subdomain, $\left\{\mathbf{x}_{u_{q}}^{(i)}\right\}_{i=1}^{N_{u_{q}}}$, $\left\{\mathbf{x}_{F_{q}}^{(i)}\right\}_{i=1}^{N_{F_{q}}}$ and $\left\{\mathbf{x}_{I_{q}}^{(i)}\right\}_{i=1}^{N_{I_{q}}}$ denote the training, residual, and common interface points, respectively, and $N_{u_{q}}$, $N_{F_{q}}$ and $N_{I_{q}}$ are the corresponding numbers of points. Each subdomain uses its own PINN, $u_{q}=u_{\tilde{\mathbf{\Theta}}_{q}}$, and the loss function of the $q^{th}$ subdomain is defined as

$$
\begin{aligned}
\mathcal{J}\left(\tilde{\mathbf{\Theta}}_{q}\right)={} & W_{u_{q}} \operatorname{MSE}_{u_{q}}\left(\tilde{\mathbf{\Theta}}_{q} ;\left\{\mathbf{x}_{u_{q}}^{(i)}\right\}_{i=1}^{N_{u_{q}}}\right)+W_{\mathcal{F}_{q}} \operatorname{MSE}_{\mathcal{F}_{q}}\left(\tilde{\mathbf{\Theta}}_{q} ;\left\{\mathbf{x}_{F_{q}}^{(i)}\right\}_{i=1}^{N_{F_{q}}}\right) \\
&+W_{I_{q}} \underbrace{\operatorname{MSE}_{u_{avg}}\left(\tilde{\mathbf{\Theta}}_{q} ;\left\{\mathbf{x}_{I_{q}}^{(i)}\right\}_{i=1}^{N_{I_{q}}}\right)}_{\text{Interface condition}}+W_{I_{\mathcal{F}_{q}}} \underbrace{\operatorname{MSE}_{\mathcal{R}}\left(\tilde{\mathbf{\Theta}}_{q} ;\left\{\mathbf{x}_{I_{q}}^{(i)}\right\}_{i=1}^{N_{I_{q}}}\right)}_{\text{Interface condition}} \\
&+\underbrace{\text{Additional interface conditions}}_{\text{Optional}}
\end{aligned}
$$

where $W_{u_{q}}$, $W_{\mathcal{F}_{q}}$, $W_{I_{\mathcal{F}_{q}}}$ and $W_{I_{q}}$ are the weights of the respective loss terms, and

$$
\begin{array}{l}
\operatorname{MSE}_{u_{q}}\left(\tilde{\mathbf{\Theta}}_{q} ;\left\{\mathbf{x}_{u_{q}}^{(i)}\right\}_{i=1}^{N_{u_{q}}}\right)=\frac{1}{N_{u_{q}}} \sum_{i=1}^{N_{u_{q}}}\left|u^{(i)}-u_{\tilde{\mathbf{\Theta}}_{q}}\left(\mathbf{x}_{u_{q}}^{(i)}\right)\right|^{2} \\
\operatorname{MSE}_{\mathcal{F}_{q}}\left(\tilde{\mathbf{\Theta}}_{q} ;\left\{\mathbf{x}_{F_{q}}^{(i)}\right\}_{i=1}^{N_{F_{q}}}\right)=\frac{1}{N_{F_{q}}} \sum_{i=1}^{N_{F_{q}}}\left|\mathcal{F}_{\tilde{\mathbf{\Theta}}_{q}}\left(\mathbf{x}_{F_{q}}^{(i)}\right)\right|^{2}
\end{array}
$$

$$
\begin{array}{l}
\operatorname{MSE}_{u_{avg}}\left(\tilde{\mathbf{\Theta}}_{q} ;\left\{\mathbf{x}_{I_{q}}^{(i)}\right\}_{i=1}^{N_{I_{q}}}\right)=\sum_{\forall q^{+}}\left(\frac{1}{N_{I_{q}}} \sum_{i=1}^{N_{I_{q}}}\left|u_{\tilde{\mathbf{\Theta}}_{q}}\left(\mathbf{x}_{I_{q}}^{(i)}\right)-\left\{\left\{u_{\tilde{\mathbf{\Theta}}_{q}}\left(\mathbf{x}_{I_{q}}^{(i)}\right)\right\}\right\}\right|^{2}\right) \\
\operatorname{MSE}_{\mathcal{R}}\left(\tilde{\mathbf{\Theta}}_{q} ;\left\{\mathbf{x}_{I_{q}}^{(i)}\right\}_{i=1}^{N_{I_{q}}}\right)=\sum_{\forall q^{+}}\left(\frac{1}{N_{I_{q}}} \sum_{i=1}^{N_{I_{q}}}\left|\mathcal{F}_{\tilde{\mathbf{\Theta}}_{q}}\left(\mathbf{x}_{I_{q}}^{(i)}\right)-\mathcal{F}_{\tilde{\mathbf{\Theta}}_{q^{+}}}\left(\mathbf{x}_{I_{q}}^{(i)}\right)\right|^{2}\right)
\end{array}
$$

The third and fourth terms are the interface-condition losses. The fourth term is the residual continuity condition between the two different networks on subdomains $q$ and $q^{+}$, where $q^{+}$ denotes a neighboring subdomain of $q$. Both $\operatorname{MSE}_{\mathcal{R}}$ and $\operatorname{MSE}_{u_{avg}}$ are defined over all neighboring subdomains. In the formulas above, $\left\{\left\{u_{\tilde{\mathbf{\Theta}}_{q}}\right\}\right\}=u_{avg}:=\frac{u_{\tilde{\mathbf{\Theta}}_{q}}+u_{\tilde{\mathbf{\Theta}}_{q^{+}}}}{2}$ (assuming that only two subdomains intersect on the common interface). Additional interface conditions, for example flux continuity or $C^{k}$ continuity, can also be added to the loss, depending on the type of PDE and the orientation of the interface.
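The forward-problem loss of one subdomain can be sketched with plain NumPy arrays. This is only an illustration under stated assumptions: the function names and arguments are hypothetical, the network outputs and PDE residuals are passed in as precomputed arrays, and each interface is assumed to be shared by exactly two subdomains, so the average is pairwise.

```python
import numpy as np

def mse(values):
    """Mean of squared absolute values."""
    return float(np.mean(np.abs(np.asarray(values, dtype=float)) ** 2))

def forward_subdomain_loss(u_data, u_pred, residual,
                           u_q_int, u_nbrs_int, res_q_int, res_nbrs_int,
                           w_u=1.0, w_f=1.0, w_i=1.0, w_if=1.0):
    """Loss of subdomain q for the forward problem.

    u_data, u_pred : exact and predicted values at the training points
    residual       : PDE residual of sub-net q at the residual points
    u_q_int        : sub-net q output at the interface points
    u_nbrs_int     : list of neighbor sub-net outputs at the same points
    res_q_int      : PDE residual of sub-net q at the interface points
    res_nbrs_int   : list of neighbor residuals at the same points
    """
    u_q_int = np.asarray(u_q_int, dtype=float)
    res_q_int = np.asarray(res_q_int, dtype=float)
    mse_u = mse(np.asarray(u_data, dtype=float) - np.asarray(u_pred, dtype=float))
    mse_f = mse(residual)
    # Average-solution condition: u_q minus the mean of u_q and its neighbor.
    mse_avg = sum(mse(u_q_int - 0.5 * (u_q_int + np.asarray(u_n, dtype=float)))
                  for u_n in u_nbrs_int)
    # Residual continuity condition between sub-net q and each neighbor.
    mse_r = sum(mse(res_q_int - np.asarray(r_n, dtype=float))
                for r_n in res_nbrs_int)
    return w_u * mse_u + w_f * mse_f + w_i * mse_avg + w_if * mse_r

# Toy numbers chosen so each term is easy to check by hand.
loss = forward_subdomain_loss(
    u_data=[1.0, 2.0], u_pred=[1.0, 4.0],            # MSE_u   = 2
    residual=[1.0, -1.0],                            # MSE_F   = 1
    u_q_int=[2.0, 2.0], u_nbrs_int=[[0.0, 4.0]],     # MSE_avg = 1
    res_q_int=[0.0, 0.0], res_nbrs_int=[[2.0, 0.0]]  # MSE_R   = 2
)
print(loss)  # 6.0
```

In an actual training loop these arrays would be differentiable tensors produced by the sub-nets, and the four weights would be tuned per subdomain.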

Remark:

- The type of interface conditions determines the regularity of the solution across the interface and therefore affects the convergence rate. The solution across the interface should be continuous enough to satisfy its governing PDE.

- Enough interface points must be used to stitch the subdomains together. This is very important for the convergence of the algorithm, especially for internal subdomains.

(2) Inverse problem

$$
\begin{aligned}
\mathcal{J}\left(\tilde{\mathbf{\Theta}}_{q}, \lambda\right)={} & W_{u_{q}} \operatorname{MSE}_{u_{q}}\left(\tilde{\mathbf{\Theta}}_{q}, \lambda ;\left\{\mathbf{x}_{u_{q}}^{(i)}\right\}_{i=1}^{N_{u_{q}}}\right)+W_{\mathcal{F}_{q}} \operatorname{MSE}_{\mathcal{F}_{q}}\left(\tilde{\mathbf{\Theta}}_{q}, \lambda ;\left\{\mathbf{x}_{u_{q}}^{(i)}\right\}_{i=1}^{N_{u_{q}}}\right) \\
&+W_{I_{q}} \underbrace{\left\{\operatorname{MSE}_{u_{avg}}\left(\tilde{\mathbf{\Theta}}_{q}, \lambda ;\left\{\mathbf{x}_{I_{q}}^{(i)}\right\}_{i=1}^{N_{I_{q}}}\right)+\operatorname{MSE}_{\lambda}\left(\tilde{\mathbf{\Theta}}_{q}, \lambda ;\left\{\mathbf{x}_{I_{q}}^{(i)}\right\}_{i=1}^{N_{I_{q}}}\right)\right\}}_{\text{Interface conditions}} \\
&+W_{I_{\mathcal{F}_{q}}} \underbrace{\operatorname{MSE}_{\mathcal{R}}\left(\tilde{\mathbf{\Theta}}_{q}, \lambda ;\left\{\mathbf{x}_{I_{q}}^{(i)}\right\}_{i=1}^{N_{I_{q}}}\right)}_{\text{Interface condition}}+\underbrace{\text{Additional interface conditions}}_{\text{Optional}}
\end{aligned}
$$

where

$$
\begin{array}{l}
\operatorname{MSE}_{\mathcal{F}_{q}}\left(\tilde{\mathbf{\Theta}}_{q}, \lambda ;\left\{\mathbf{x}_{u_{q}}^{(i)}\right\}_{i=1}^{N_{u_{q}}}\right)=\frac{1}{N_{u_{q}}} \sum_{i=1}^{N_{u_{q}}}\left|\mathcal{F}_{\tilde{\mathbf{\Theta}}_{q}}\left(\mathbf{x}_{u_{q}}^{(i)}\right)\right|^{2} \\
\operatorname{MSE}_{\lambda}\left(\tilde{\mathbf{\Theta}}_{q}, \lambda ;\left\{\mathbf{x}_{I_{q}}^{(i)}\right\}_{i=1}^{N_{I_{q}}}\right)=\sum_{\forall q^{+}}\left(\frac{1}{N_{I_{q}}} \sum_{i=1}^{N_{I_{q}}}\left|\lambda_{q}\left(\mathbf{x}_{I_{q}}^{(i)}\right)-\lambda_{q^{+}}\left(\mathbf{x}_{I_{q}}^{(i)}\right)\right|^{2}\right)
\end{array}
$$

The other loss terms are the same as in the forward problem.
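The extra interface term of the inverse problem penalizes disagreement between the parameter estimates of neighboring sub-nets at the interface points. A minimal sketch, assuming the estimates are already available as arrays; the function name `mse_lambda` and the sample values are illustrative only:

```python
import numpy as np

def mse_lambda(lam_q_int, lam_nbrs_int):
    """Parameter-continuity penalty for subdomain q.

    lam_q_int    : parameter predicted by sub-net q at the interface points
    lam_nbrs_int : list with the same quantity from each neighbor sub-net
    Returns the sum over neighbors of the mean squared mismatch.
    """
    lam_q_int = np.asarray(lam_q_int, dtype=float)
    return float(sum(
        np.mean(np.abs(lam_q_int - np.asarray(lam_n, dtype=float)) ** 2)
        for lam_n in lam_nbrs_int
    ))

# Two hypothetical neighbors: the first agrees exactly, the second does not.
penalty = mse_lambda(
    lam_q_int=[1.0, 1.0, 1.0],
    lam_nbrs_int=[[1.0, 1.0, 1.0], [1.0, 2.0, 3.0]],
)
print(penalty)  # (0 + 1 + 4) / 3
```

The penalty is zero only when every neighbor predicts the same parameter values as sub-net $q$ along the shared interface.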

**Remark:** Because the XPINN loss function is highly non-convex, locating its global minimum is very difficult. However, for several local minima the values of the loss function are similar, and the corresponding predicted solutions have similar accuracy.

3.2 Optimization method

Automatic differentiation
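To make the idea concrete, here is a toy forward-mode automatic differentiation sketch based on dual numbers. It is not how the paper's code works: deep learning frameworks provide their own autodiff, and PINN residuals often need higher-order derivatives. The `Dual` class, `tiny_net`, and its weights are hypothetical and only show how an exact first derivative of a network output with respect to its input is obtained without finite differences.

```python
import math

class Dual:
    """Number val + der * eps with eps**2 = 0; der carries the derivative."""

    def __init__(self, val, der=0.0):
        self.val = val
        self.der = der

    def __add__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        return Dual(self.val + other.val, self.der + other.der)

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Dual) else Dual(other)
        # Product rule: (a * b)' = a' * b + a * b'
        return Dual(self.val * other.val,
                    self.der * other.val + self.val * other.der)

    __rmul__ = __mul__

def tanh(x):
    """tanh for dual numbers, using d/dx tanh(x) = 1 - tanh(x)**2."""
    t = math.tanh(x.val)
    return Dual(t, (1.0 - t * t) * x.der)

def tiny_net(x, w1=0.7, b1=-0.2, w2=1.5, b2=0.1):
    """One hidden neuron: u(x) = w2 * tanh(w1 * x + b1) + b2."""
    return w2 * tanh(w1 * x + b1) + b2

x0 = 0.3
out = tiny_net(Dual(x0, 1.0))  # seed dx/dx = 1
u, du_dx = out.val, out.der

# Hand-derived derivative for comparison.
analytic = 1.5 * 0.7 * (1.0 - math.tanh(0.7 * x0 - 0.2) ** 2)
print(abs(du_dx - analytic) < 1e-12)  # True
```

The same mechanism, applied to every operation in a network, yields the derivatives that enter the PDE residual term of the loss.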

3.3 Error

$$
\begin{aligned}
\mathcal{E}_{app_{q}} &=\left\|u_{a_{q}}-u_{q}^{ex}\right\| \\
\mathcal{E}_{gen_{q}} &=\left\|u_{g_{q}}-u_{a_{q}}\right\| \\
\mathcal{E}_{opt_{q}} &=\left\|u_{\tau_{q}}-u_{g_{q}}\right\|
\end{aligned}
$$

These are the approximation error, the generalization error, and the optimization error in the $q^{th}$ subdomain, respectively, where

- $u_{a_{q}}=\arg \min _{f \in F_{q}}\left\|f-u_{q}^{ex}\right\|$ is the best approximation of the exact solution $u_{q}^{ex}$

- $u_{g_{q}}=\arg \min _{\tilde{\mathbf{\Theta}}_{q}} \mathcal{J}\left(\tilde{\mathbf{\Theta}}_{q}\right)$ is the solution at the global minimum

- $u_{\tau_{q}}=\arg \min _{\tilde{\mathbf{\Theta}}_{q}} \mathcal{J}\left(\tilde{\mathbf{\Theta}}_{q}\right)$ is the solution actually obtained after training the sub-net

Finally, the XPINN error can be bounded as

$$
\mathcal{E}_{XPINN}:=\left\|u_{\tau}-u^{ex}\right\| \leq\left\|u_{\tau}-u_{g}\right\|+\left\|u_{g}-u_{a}\right\|+\left\|u_{a}-u^{ex}\right\|
$$

where

$$
\left(u^{ex}, u_{\tau}, u_{g}, u_{a}\right)(\mathbf{z})=\sum_{q=1}^{N_{sd}}\left(u_{q}^{ex}, u_{\tau_{q}}, u_{g_{q}}, u_{a_{q}}\right)(\mathbf{z}) \cdot \mathbb{1}_{\Omega_{q}}(\mathbf{z})
$$
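The bound is an application of the triangle inequality. Adding and subtracting $u_{g}$ and $u_{a}$ inside the norm makes the intermediate step explicit:

$$
\begin{aligned}
\left\|u_{\tau}-u^{ex}\right\| &=\left\|\left(u_{\tau}-u_{g}\right)+\left(u_{g}-u_{a}\right)+\left(u_{a}-u^{ex}\right)\right\| \\
& \leq\left\|u_{\tau}-u_{g}\right\|+\left\|u_{g}-u_{a}\right\|+\left\|u_{a}-u^{ex}\right\|
\end{aligned}
$$

The three terms on the right are the optimization, generalization, and approximation errors of the assembled solution, in that order.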

Remark:

- When the estimation error decreases (that is, the data are fitted better), the generalization error increases; this is a bias-variance trade-off. The two main factors affecting the generalization error are the number and the distribution of the residual points.

- The optimization error depends on the complexity of the loss function, and the network architecture has a strong influence on it.

3.4 Comparison of XPINN, cPINN, and PINN

Compared with the PINN and cPINN frameworks, the XPINN framework has many advantages, but it also shares their limitations. The absolute error of the PDE solution does not fall below a certain level. This is due to the inaccuracy involved in solving a high-dimensional non-convex optimization problem, which may end in a bad minimum.

边栏推荐

- 必会面试题:1.浅拷贝和深拷贝_深拷贝

- [introduction to C language] zzulioj 1006-1010

- Mandatory interview questions: 1. shallow copy and deep copy_ Deep copy

- Li Kou 148: sorting linked list

- Information system project managers must recite the core examination site (50). The contract content is not clearly stipulated

- MySQL(4)

- [C]详解语言文件操作

- Wechat applet - get user location (longitude and latitude + city)

- Code dynamically controls textview to move right (not XML)

- DFS Niuke maze problem

猜你喜欢

Li Kou 148: sorting linked list

循环与分支(一)

What does the Red Cross in the SQL editor mean (toad and waterdrop have been encountered...)

![[steering wheel] use the 60 + shortcut keys of idea to share with you, in order to improve efficiency (reconstruction)](/img/b4/62a4c06743fdedacdffd9b156a760f.png)

[steering wheel] use the 60 + shortcut keys of idea to share with you, in order to improve efficiency (reconstruction)

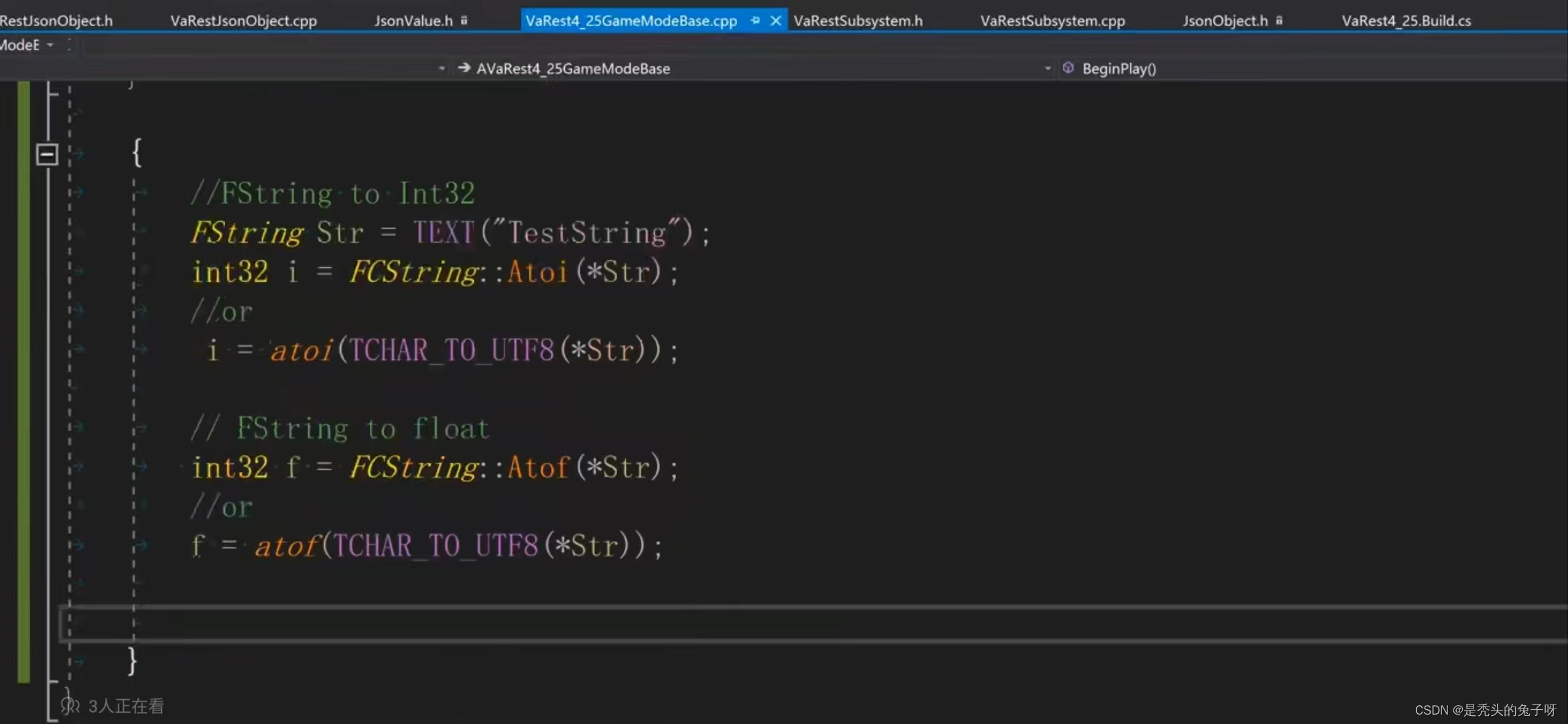

U++ common type conversion and common forms and proxies of lambda

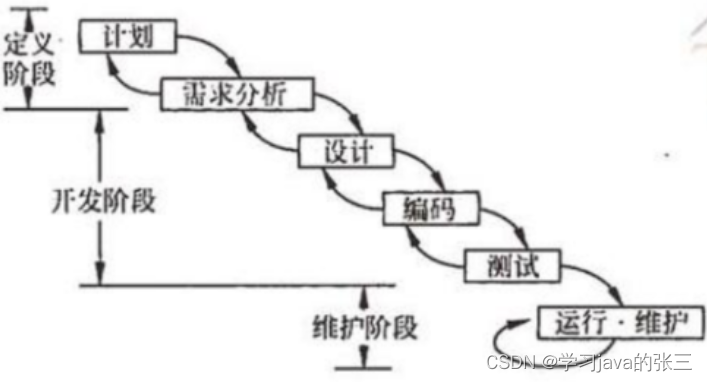

1. Software testing ----- the basic concept of software testing

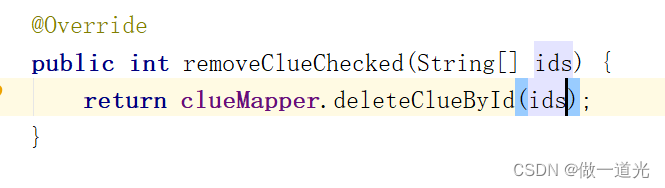

Chapter 3 business function development (delete clues)

![[steering wheel] how to transfer the start command and idea: VM parameters, command line parameters, system parameters, environment variable parameters, main method parameters](/img/97/159d7df5e2d11b129c400d61e3fde6.png)

[steering wheel] how to transfer the start command and idea: VM parameters, command line parameters, system parameters, environment variable parameters, main method parameters

(PC+WAP)织梦模板蔬菜水果类网站

AMD64 (x86_64) architecture ABI document: medium

随机推荐

How to effectively prevent others from wearing the homepage snapshot of the website

(Dynamic Programming Series) sword finger offer 48. the longest substring without repeated characters

Manifold learning

Mandatory interview questions: 1. shallow copy and deep copy_ Deep copy

How to speed up matrix multiplication

关于mysql的问题,希望个位能帮一下忙

Yum install MySQL FAQ

Pinia plugin persist, a data persistence plug-in of Pinia

What if the test / development programmer gets old? Lingering cruel facts

从各大APP年度报告看用户画像——标签,比你更懂你自己

Image recognition (VII) | what is the pooling layer? What's the effect?

numpy.sort

How to design automated test cases?

Information system project managers must recite the core examination site (50). The contract content is not clearly stipulated

Article setting top

Simply use MySQL index

Pinia的数据持久化插件 pinia-plugin-persist

Information System Project Manager - Chapter 10 communication management and stakeholder management examination questions over the years

Self-supervised learning method to solve the inverse problem of Fokker-Planck Equation

Keil's operation before programming with C language