MapReduce Example (II): Computing an Average

2022-07-27 16:15:00 【Laugh at Fengyun Road】

Computing an average with MapReduce

Hello everyone, I'm Fengyun. Welcome to my blog and my WeChat official account 【Laugh at Fengyun Road】. In the days ahead, let's learn big data technologies together, keep working hard, and become better versions of ourselves!

The approach

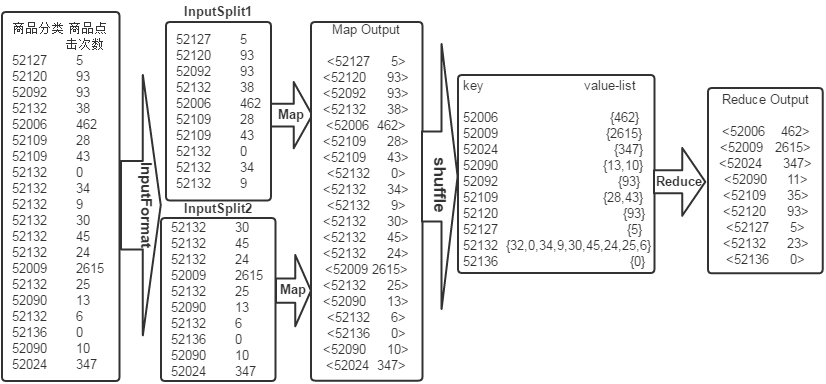

Computing an average is a common MapReduce task, and the algorithm is simple. The map side reads the input and emits key/value pairs. Before the data reaches the reduce side it goes through the shuffle, which gathers all values that share a key in the map output into a single value list. The reduce side then sums each list, counts its records, and divides the sum by the count to get the average. The figure above illustrates the flow.
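The flow described above can be tried without a cluster. The following is a minimal plain-Java sketch, not Hadoop code: the class name `AverageFlowSketch`, its method names, and the sample records are all made up for illustration, and the input is assumed to be `key<TAB>value` lines.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java illustration of the map -> shuffle -> reduce flow; no Hadoop involved.
class AverageFlowSketch {

    // Map + shuffle: parse each line and group the values under their key.
    static Map<String, List<Integer>> mapAndShuffle(List<String> lines) {
        Map<String, List<Integer>> grouped = new TreeMap<>();
        for (String line : lines) {
            String[] fields = line.split("\t");              // key <TAB> value
            int value = Integer.parseInt(fields[1].trim());
            grouped.computeIfAbsent(fields[0], k -> new ArrayList<>()).add(value);
        }
        return grouped;
    }

    // Reduce: sum and count each value list, then divide (integer division).
    static Map<String, Integer> reduce(Map<String, List<Integer>> grouped) {
        Map<String, Integer> averages = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> entry : grouped.entrySet()) {
            long sum = 0;
            for (int v : entry.getValue()) {
                sum += v;
            }
            averages.put(entry.getKey(), (int) (sum / entry.getValue().size()));
        }
        return averages;
    }

    public static void main(String[] args) {
        // Hypothetical sample records, for illustration only.
        List<String> lines = List.of("a\t10", "b\t4", "a\t20", "b\t7");
        System.out.println(reduce(mapAndShuffle(lines))); // prints {a=15, b=5}
    }
}
```

In a real job the grouping is done by the framework between the map and reduce phases; here a `TreeMap` stands in for it so the result is printed in key order.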

Writing the code

Mapper code

    public static class Map extends Mapper<Object, Text, Text, IntWritable> {

        // Reused across calls to avoid allocating a new Text per record
        private final Text newKey = new Text();

        // Implement the map function
        @Override
        public void map(Object key, Text value, Context context) throws IOException, InterruptedException {
            // Convert one line of the plain-text input into a String
            String line = value.toString();
            // Fields are tab-separated: category first, then click count
            String[] arr = line.split("\t");
            if (arr.length < 2) {
                return; // skip blank or malformed lines
            }
            newKey.set(arr[0]);
            int click = Integer.parseInt(arr[1].trim());
            context.write(newKey, new IntWritable(click));
        }
    }

The map side uses Hadoop's default input format, which passes each line of the file to map() as the value. The line is split on the tab character with split(). The product category field becomes the key, the click-count field is converted to an IntWritable and becomes the value, and the key/value pair is written out directly.
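The parsing step can be checked on its own, outside Hadoop. This is a minimal sketch assuming each record has the form `category<TAB>clicks`; the class name `LineParseSketch`, the `parse` helper, and the sample values are hypothetical. Returning an empty `Optional` for bad lines is one possible policy, not something the original job does.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.Map;
import java.util.Optional;

// Plain-Java illustration of turning one input line into a key/value pair.
class LineParseSketch {

    // Parses "category<TAB>clicks"; returns empty for malformed lines.
    static Optional<Map.Entry<String, Integer>> parse(String line) {
        String[] fields = line.split("\t");
        if (fields.length < 2) {
            return Optional.empty();          // no tab, or nothing after it
        }
        try {
            int clicks = Integer.parseInt(fields[1].trim());
            Map.Entry<String, Integer> pair = new SimpleEntry<>(fields[0], clicks);
            return Optional.of(pair);
        } catch (NumberFormatException e) {
            return Optional.empty();          // click field is not an integer
        }
    }

    public static void main(String[] args) {
        // Hypothetical sample lines, for illustration only.
        System.out.println(parse("shoes\t12"));
        System.out.println(parse("no tab here").isPresent());
    }
}
```

Inside a mapper, a malformed record is typically either skipped, as here, or allowed to fail the task; which is right depends on how clean the input is expected to be.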

Reducer code

    public static class Reduce extends Reducer<Text, IntWritable, Text, IntWritable> {

        // Implement the reduce function
        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
            long num = 0;   // running sum; long avoids int overflow on large groups
            int count = 0;  // number of values seen for this key
            for (IntWritable val : values) {
                num += val.get();
                count++;
            }
            // Integer division: any fractional part of the average is discarded
            int avg = (int) (num / count);
            context.write(key, new IntWritable(avg));
        }
    }

After the shuffle, the <key, value> pairs emitted by map are merged into <key, values> pairs, which are handed to reduce. The reduce side copies the input key straight to the output key, loops over values to accumulate the sum num and the element count count, divides num by count to get the average avg, sets avg as the value, and writes out the <key, value> pair.
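One detail worth seeing in isolation: because the reducer divides two integers, the result is truncated. The sketch below is plain Java with a hypothetical class name `AveragePrecisionSketch` and made-up values; it assumes a non-empty array, which matches the reducer, where every key has at least one value.

```java
// Plain-Java illustration of integer versus floating-point averaging.
class AveragePrecisionSketch {

    // Same arithmetic as the reducer: the fractional part is dropped.
    static int intAverage(int[] values) {
        long sum = 0;
        for (int v : values) {
            sum += v;
        }
        return (int) (sum / values.length);
    }

    // Casting the sum to double before dividing keeps the fraction.
    static double exactAverage(int[] values) {
        long sum = 0;
        for (int v : values) {
            sum += v;
        }
        return (double) sum / values.length;
    }

    public static void main(String[] args) {
        int[] clicks = {3, 4};                      // hypothetical values
        System.out.println(intAverage(clicks));     // prints 3
        System.out.println(exactAverage(clicks));   // prints 3.5
    }
}
```

If fractional averages matter, one option is to emit a DoubleWritable from the reducer. In that case the map output value type no longer matches the job output value type, so the driver would also need job.setMapOutputValueClass(IntWritable.class) alongside job.setOutputValueClass(DoubleWritable.class).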

Complete code

    package mapreduce;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

    public class MyAverage {

        public static class Map extends Mapper<Object, Text, Text, IntWritable> {

            // Reused across calls to avoid allocating a new Text per record
            private final Text newKey = new Text();

            @Override
            public void map(Object key, Text value, Context context) throws IOException, InterruptedException {
                String line = value.toString();
                // Fields are tab-separated: category first, then click count
                String[] arr = line.split("\t");
                if (arr.length < 2) {
                    return; // skip blank or malformed lines
                }
                newKey.set(arr[0]);
                int click = Integer.parseInt(arr[1].trim());
                context.write(newKey, new IntWritable(click));
            }
        }

        public static class Reduce extends Reducer<Text, IntWritable, Text, IntWritable> {

            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
                long num = 0;   // running sum; long avoids int overflow on large groups
                int count = 0;  // number of values seen for this key
                for (IntWritable val : values) {
                    num += val.get();
                    count++;
                }
                // Integer division: any fractional part of the average is discarded
                int avg = (int) (num / count);
                context.write(key, new IntWritable(avg));
            }
        }

        public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
            Configuration conf = new Configuration();
            System.out.println("start");

            // Job.getInstance replaces the deprecated Job(Configuration, String) constructor
            Job job = Job.getInstance(conf, "MyAverage");
            job.setJarByClass(MyAverage.class);
            job.setMapperClass(Map.class);
            job.setReducerClass(Reduce.class);

            // Map output and final output share the same key/value types
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            job.setInputFormatClass(TextInputFormat.class);
            job.setOutputFormatClass(TextOutputFormat.class);

            // The output directory must not already exist when the job starts
            Path in = new Path("hdfs://localhost:9000/mymapreduce4/in/goods_click");
            Path out = new Path("hdfs://localhost:9000/mymapreduce4/out");
            FileInputFormat.addInputPath(job, in);
            FileOutputFormat.setOutputPath(job, out);

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }

边栏推荐

- Wechat applet personal number opens traffic master

- Servlet基础知识点

- Ncnn reasoning framework installation; Onnx to ncnn

- 无线网络分析有关的安全软件(aircrack-ng)

- Paper_Book

- profileapi.h header

- Mapreduce实例(一):WordCount

- Text capture picture (Wallpaper of Nezha's demon child coming to the world)

- C语言程序设计(第三版)

- [sword finger offer] interview question 41: median in data flow - large and small heap implementation

猜你喜欢

Nacos

![[sword finger offer] interview question 50: the first character that appears only once - hash table lookup](/img/72/b35bdf9bde72423410e365e5b6c20e.png)

[sword finger offer] interview question 50: the first character that appears only once - hash table lookup

DeFi安全之DEX与AMMs

Flask连接mysql数据库已有表

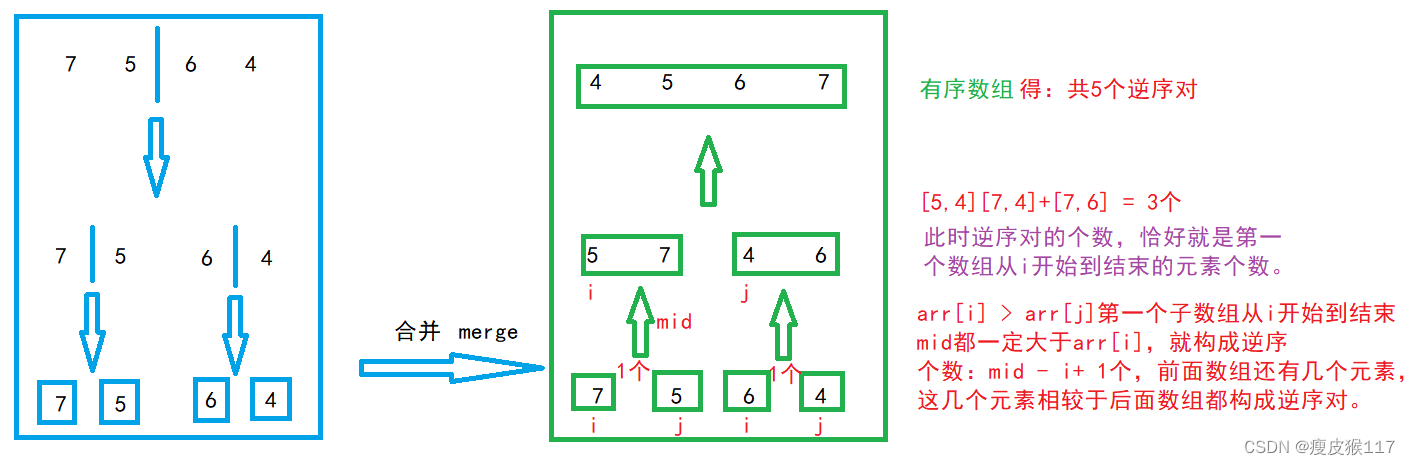

剑指 Offer 51. 数组中的逆序对

Single machine high concurrency model design

![[sword finger offer] interview question 49: ugly number](/img/7a/2bc9306578530fbb5ac3b32254676d.png)

[sword finger offer] interview question 49: ugly number

测试新手学习宝典(有思路有想法)

keil 采用 makefile 实现编译

Openwrt adds RTC (mcp7940 I2C bus) drive details

随机推荐

JSP Foundation

These questions~~

Have you ever used the comma operator?

Content ambiguity occurs when using transform:translate()

[sword finger offer] interview question 49: ugly number

企业运维安全就用行云管家堡垒机!

Hematemesis finishing c some commonly used help classes

一款功能强大的Web漏洞扫描和验证工具(Vulmap)

借5G东风,联发科欲再战高端市场?

drf使用:get请求获取数据(小例子)

Paper_ Book

A powerful web vulnerability scanning and verification tool (vulmap)

ARIMA模型选择与残差

Baidu picture copy picture address

Chapter I Marxist philosophy is a scientific world outlook and methodology

Single machine high concurrency model design

Common tool classes under JUC package

Openwrt增加对 sd card 支持

Coding technique - Global log switch

mysql设置密码时报错 Your password does not satisfy the current policy requirements(修改·mysql密码策略设置简单密码)